This repo will store some of the AI work I do as progress towards my New Year's resolution of working on more AI. A lot of the AI work I do in my research cannot be shared, and that research takes up much of the free time I have dedicated to AI, so this will be only a small subset of all the AI work I do in 2019.

I am starting off with the #100DaysOfMLCode challenge.

I am also very fond of Kaggle; here is mine.

Tried to classify CIFAR-10 using a 4-layer model in Keras. Link to Notebook

Added a 6-layer model, trained it and compared the accuracy of the two models.

Tried to figure out why my model sucks so badly.

Made a new model and got 84.98% accuracy.

Started to work on the CIFAR-100 dataset.

Made a model for CIFAR-100 using all 100 labels, achieving a validation accuracy of 57.73% but a training accuracy of 72.49%.

Playing with hyperparameters and making a program for face detection.

Creating bounding boxes for faces in the CIFAR-100 dataset. Link to Notebook

Used Leaky ReLU and managed to get 60% accuracy. Link to Notebook

Looked into training my own Haar cascade classifier.

Looking into different architectures and training different models for CIFAR-100.

Learning about RNNs.

Kaggle time series prediction with Siraj Raval.

Stock price prediction.

Read up on ARIMA, moving averages, single exponential smoothing, Holt's linear method and the Holt-Winters method.
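As a rough illustration of two of these methods (a minimal pure-Python sketch, not code from my notebooks), single exponential smoothing keeps one smoothed level, while Holt's linear method adds a trend term. The smoothing factors `alpha` and `beta` and the way the first level and trend are initialised are assumptions you would tune or choose differently; Holt-Winters adds a seasonal component on top, which is left out here.

```python
def simple_exp_smoothing(series, alpha):
    """Single exponential smoothing: level_t = alpha * y_t + (1 - alpha) * level_{t-1}."""
    level = series[0]                      # initialise with the first observation
    smoothed = [level]
    for y in series[1:]:
        level = alpha * y + (1 - alpha) * level
        smoothed.append(level)
    return smoothed


def holt_linear(series, alpha, beta, horizon):
    """Holt's linear trend method: returns `horizon` forecasts beyond the last point."""
    level, trend = series[0], series[1] - series[0]  # simple initialisation (an assumption)
    for y in series[1:]:
        prev_level = level
        level = alpha * y + (1 - alpha) * (level + trend)          # update the level
        trend = beta * (level - prev_level) + (1 - beta) * trend   # update the slope
    return [level + h * trend for h in range(1, horizon + 1)]


print(simple_exp_smoothing([10, 20, 30], alpha=0.5))  # [10, 15.0, 22.5]
# For a perfectly linear series the forecasts are approximately [6.0, 7.0, 8.0].
print(holt_linear([1, 2, 3, 4, 5], alpha=0.5, beta=0.3, horizon=3))
```

Single exponential smoothing produces a flat forecast, which is why Holt's extra trend equation matters for series that drift.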

Read Man vs Big Data

Started the Quantopian lectures.

More Quantopian tutorials and also setting up Zipline.

Zipline tutorials and making a neural net to predict crypto prices.

Data preparation for the neural net.

Understanding input sizes for an LSTM.
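Keras LSTM layers take input shaped `(samples, timesteps, features)`. A minimal NumPy sketch of turning a price series into that shape with a sliding window follows; the function name, the window length and the choice of target (the next value of the first feature) are illustrative assumptions.

```python
import numpy as np


def make_lstm_windows(features, window):
    """Turn a (time, n_features) array into (samples, timesteps, features) windows.

    Each sample is `window` consecutive rows; the target is the first feature of
    the row immediately after the window (an assumption for this sketch).
    """
    features = np.asarray(features, dtype=float)
    if features.ndim == 1:
        features = features[:, None]  # a single series becomes one feature column
    X, y = [], []
    for start in range(len(features) - window):
        X.append(features[start:start + window])
        y.append(features[start + window, 0])
    return np.stack(X), np.array(y)


prices = np.arange(10, dtype=float)
X, y = make_lstm_windows(prices, window=3)
print(X.shape, y.shape)  # (7, 3, 1) (7,)
```

With 10 time steps and a window of 3 there are 7 complete windows, so `X` has 7 samples of 3 timesteps with 1 feature each.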

Made an LSTM but only got 47% accuracy.

Setting up a deep learning server and a MongoDB server, and trying to create a deep learning pipeline.

Making a backtester.
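A backtester can start very small. The sketch below is illustrative only (the function name, the long/flat rule and the proportional `fee` are assumptions): it lets each signal decided at time t earn only the return from t to t+1, which avoids look-ahead bias.

```python
import numpy as np


def backtest(prices, signals, fee=0.0):
    """Tiny long/flat backtester returning an equity curve that starts at 1.0.

    prices:  1-D array of closing prices.
    signals: same length; 1 = hold the asset over the next period, 0 = stay in cash.
    fee:     proportional cost charged whenever the position changes (assumed).
    """
    prices = np.asarray(prices, dtype=float)
    signals = np.asarray(signals, dtype=float)
    returns = prices[1:] / prices[:-1] - 1.0          # return from t to t+1
    positions = signals[:-1]                          # decided at t, held until t+1
    trades = np.abs(np.diff(np.concatenate([[0.0], positions])))  # position changes
    strategy = positions * returns - trades * fee
    return np.concatenate([[1.0], np.cumprod(1.0 + strategy)])


equity = backtest([100, 110, 99, 120], [1, 0, 1, 1])
print(equity)  # ends at 4/3: +10%, flat through the drop, then +21.2%
```

The last signal is unused because there is no later price to trade it against.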

Working on super secret trading algorithms for herobots.

Working on the super secret trading algorithms for herobots.

Learned why shuffling should not be used to create train/test splits for time series data, and about scaling and normalising data. Then implemented a preprocessing algorithm in Python.
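A minimal NumPy sketch of both ideas, assuming the rows are already in time order: split chronologically instead of shuffling, and fit the scaling parameters on the training set only so no information from the future leaks in. The helper names and the choice of min-max scaling are illustrative.

```python
import numpy as np


def chronological_split(data, test_fraction=0.2):
    """Split time-ordered rows without shuffling: the test set is strictly later."""
    data = np.asarray(data, dtype=float)
    n_test = int(round(len(data) * test_fraction))
    cut = len(data) - n_test
    return data[:cut], data[cut:]


def fit_min_max(train):
    """Learn min/max from the training rows only and return a scaling function."""
    lo, hi = train.min(axis=0), train.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)  # avoid dividing by zero on constant columns

    def transform(x):
        return (np.asarray(x, dtype=float) - lo) / span

    return transform


series = np.arange(10, dtype=float).reshape(-1, 1)
train, test = chronological_split(series, test_fraction=0.2)
scale = fit_min_max(train)
print(scale(train).ravel())  # training values fall in [0, 1]
print(scale(test).ravel())   # later values can exceed 1, which is expected
```

Test values outside [0, 1] are a realistic consequence of trending prices, not a bug; fitting the scaler on all the data would hide that.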

Learned TensorBoard and started to remake a model using different data.

Looked into different moving averages for modelling. Also looked into GANs.
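For example, with pandas a simple moving average weights the last `window` points equally, while an exponential moving average weights recent points more heavily. The toy prices and window sizes below are made up for illustration; `span=3` corresponds to `alpha = 2 / (span + 1) = 0.5`, and `adjust=False` selects the recursive form.

```python
import pandas as pd

prices = pd.Series([10.0, 11.0, 12.0, 13.0, 20.0])

sma = prices.rolling(window=3).mean()          # simple MA: equal weights, NaN until 3 points
ema = prices.ewm(span=3, adjust=False).mean()  # exponential MA: recent points weigh more

print(pd.DataFrame({"price": prices, "sma": sma, "ema": ema}))
```

Here the last SMA value is 15.0 while the EMA is 16.0625, so the EMA reacts more strongly to the jump to 20.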

Working on data preprocessing and gathering BTC price data. Checking for missing data.

Looked into reinforcement learning.

Data cleaning using Pandas.

Data Lit 2: Intro to Statistics

More data cleaning.

Checking for time continuity in two time series datasets of over 750,000 points each.
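One way to check continuity in minutely data (a sketch with made-up timestamps; the helper name and the one-minute frequency are assumptions) is to build the complete expected index with `pd.date_range` and take its difference with the index you actually have. Duplicate timestamps are worth counting too.

```python
import pandas as pd


def find_gaps(timestamps, freq="1min"):
    """Return the expected timestamps that are missing from a series sampled at `freq`."""
    idx = pd.DatetimeIndex(timestamps).sort_values()
    expected = pd.date_range(idx[0], idx[-1], freq=freq)
    return expected.difference(idx)


stamps = pd.to_datetime(["2019-06-01 00:00", "2019-06-01 00:01", "2019-06-01 00:04"])
missing = find_gaps(stamps)
n_duplicates = int(stamps.duplicated().sum())  # repeated timestamps also break continuity
print(list(missing))  # 00:02 and 00:03 are missing
print(n_duplicates)   # 0
```

The same calls scale to hundreds of thousands of rows, since both the expected range and the set difference are vectorised.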

Tuning hyperparameters for the BTC prediction model.

Connected to a new MongoDB server and lined up different time series in pandas while checking for missing values. Started to make a pipeline to pull data every minute and run an LSTM on it.
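In pandas, concatenating series along `axis=1` aligns them on their shared timestamp index (an outer join), so gaps show up as NaN and can be counted or dropped. The two short BTC/ETH series below are made-up placeholders.

```python
import pandas as pd

btc = pd.Series([100.0, 101.0, 102.0],
                index=pd.to_datetime(["2019-06-01 00:00", "2019-06-01 00:01", "2019-06-01 00:02"]),
                name="btc")
eth = pd.Series([10.0, 10.5],
                index=pd.to_datetime(["2019-06-01 00:00", "2019-06-01 00:02"]),
                name="eth")

aligned = pd.concat([btc, eth], axis=1)  # outer join on the timestamp index
print(aligned.isna().sum())              # how many values each source is missing
complete = aligned.dropna()              # keep only minutes where every source has data
print(complete)
```

Whether to drop incomplete rows or fill them (for example with `ffill`) is a modelling choice; dropping is simply the most conservative option.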

Started to set up a machine learning pipeline that will pull data, run a model and submit the predictions to a database.

Continued working on the pipeline.

Continued working on the pipeline; gathered and cleaned more data sources.

Started making a model with more data sources.

Deployed a test version of the algorithm to the server, and set up authentication and cron jobs.

Backtesting and changing the algorithms.

Making a new LSTM model using more data. Looked into different ways to automate hyperparameter selection.
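Random search is one common way to automate this. A minimal sketch, assuming a hypothetical `objective` that in practice would train a model with the given hyperparameters and return its validation accuracy; here a toy function stands in so the example runs instantly, and the search-space values are illustrative.

```python
import random


def random_search(objective, space, n_trials=20, seed=0):
    """Sample each hyperparameter independently per trial and keep the best score.

    objective: callable taking a dict of hyperparameters, returning a score to maximise
    space:     dict mapping hyperparameter name -> list of candidate values
    """
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {name: rng.choice(values) for name, values in space.items()}
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_objective(p):
    # Hypothetical stand-in for "train an LSTM and return validation accuracy".
    return -abs(p["units"] - 64) - 100 * abs(p["dropout"] - 0.2)


space = {"units": [16, 32, 64, 128], "dropout": [0.0, 0.1, 0.2, 0.5]}
best, score = random_search(toy_objective, space, n_trials=50)
print(best, score)
```

Random search tends to beat an exhaustive grid when only a few hyperparameters really matter, because each trial tries a fresh value of every one of them.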

Looked at how equalising the amount of data in each category affects classification validation accuracy; changed hyperparameters.
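One simple way to equalise the categories is random undersampling: keep as many rows from each class as the rarest class has. A pandas sketch (the helper name, toy data and `up`/`down` labels are assumptions) using `GroupBy.sample`, which is available from pandas 1.1. Class weights in the loss are an alternative that keeps all the data.

```python
import pandas as pd


def undersample(df, label_col, seed=0):
    """Randomly drop rows so every class has as many rows as the rarest class."""
    n = df[label_col].value_counts().min()
    balanced = df.groupby(label_col).sample(n=n, random_state=seed)
    return balanced.sort_index()  # restore the original (time) order


df = pd.DataFrame({"feature": range(10), "label": ["up"] * 7 + ["down"] * 3})
balanced = undersample(df, "label")
print(balanced["label"].value_counts())  # 3 of each
```

Undersampling throws data away, so on a small dataset it can cost more accuracy than the imbalance did; comparing validation accuracy both ways is the honest test.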

When pulling the data from the APIs to run the model on, most of the values were NaNs; today was spent figuring out why. I also remade the data preprocessing algorithms.

Deploying and testing a new LSTM on the BTC data.

Making a new model for ETH data, trying new architectures and seeing the effect of removing sparse data sources to give more data overall.

Testing out more new models.

Looked into decision trees and the mathematics behind neural networks.

Migrating to a MongoDB database to fix the problem of getting NaN values, so we can make a decision tree.

Wrote a script that falls back to a backup model while I am creating a new model, since the GPU is then busy and the main model cannot commit its predictions.

Started to make a decision tree.

Made backups of all of my Python notebooks from my summer work, allowing me to search through and reuse my models and also to migrate to a new server. Started fast.ai's Practical Deep Learning for Coders.

Learned about k-fold cross-validation and reinforcement learning.
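A minimal NumPy sketch of how k-fold splits are formed (scikit-learn's `KFold` provides the same thing): the data is cut into k folds, and each fold serves once as the validation set while the rest is used for training. No shuffling is done here, which is an assumption; for i.i.d. data you would usually shuffle first.

```python
import numpy as np


def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; each sample is in exactly one validation fold."""
    folds = np.array_split(np.arange(n_samples), k)
    for i in range(k):
        val = folds[i]
        train = np.concatenate([f for j, f in enumerate(folds) if j != i])
        yield train, val


splits = list(k_fold_indices(10, 5))
for train_idx, val_idx in splits:
    print("train:", train_idx, "val:", val_idx)
```

Averaging the k validation scores gives a less noisy estimate than a single hold-out split, at the cost of training k models.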

Calculated decision trees manually, working out entropy and information gain by hand.
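The same calculation in a few lines of Python (an illustrative sketch, not my original working): entropy is H = -Σ p log₂ p over the class proportions, and information gain is the parent's entropy minus the size-weighted entropy of the child nodes after a split.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy in bits of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def information_gain(labels, feature_values):
    """Parent entropy minus the size-weighted entropy of each child split."""
    n = len(labels)
    children = {}
    for label, value in zip(labels, feature_values):
        children.setdefault(value, []).append(label)
    weighted = sum(len(ch) / n * entropy(ch) for ch in children.values())
    return entropy(labels) - weighted


labels = ["yes", "yes", "no", "no"]
print(information_gain(labels, ["a", "a", "b", "b"]))  # 1.0: the split separates the classes
print(information_gain(labels, ["a", "b", "a", "b"]))  # 0.0: the split tells us nothing
```

For a node with 9 positive and 5 negative examples, `entropy` gives about 0.940 bits, the figure that appears in many textbook decision-tree examples.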

Looked into the mathematics behind Reinforcement learning.

Started making an accuracy checker for different machine learning models.

Continued making the accuracy checker and found bugs in the API we are using.

Finished work on the accuracy checker.

Looked into using a SAT solver to create a sudoku AI.
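The usual way to hand sudoku to a SAT solver is to create one Boolean variable per (row, column, digit) triple, 729 in total, and add "exactly one" constraints for every cell, row, column and 3×3 box. A minimal sketch of that encoding follows; the variable numbering is my own choice, and the integer clause lists are in the DIMACS-style form that solvers such as pycosat accept (the solver choice is an assumption).

```python
from itertools import combinations


def var(r, c, d):
    """Map 'cell (r, c) holds digit d' (all 0-based) to a DIMACS variable in 1..729."""
    return 81 * r + 9 * c + d + 1


def exactly_one(lits):
    """One 'at least one' clause plus pairwise 'not both' clauses for 'at most one'."""
    clauses = [list(lits)]
    clauses += [[-a, -b] for a, b in combinations(lits, 2)]
    return clauses


def sudoku_cnf(grid):
    """Encode a 9x9 grid (0 = empty) as a list of CNF clauses."""
    clauses = []
    for r in range(9):
        for c in range(9):
            clauses += exactly_one([var(r, c, d) for d in range(9)])   # one digit per cell
    for d in range(9):
        for i in range(9):
            clauses += exactly_one([var(i, c, d) for c in range(9)])   # once per row
            clauses += exactly_one([var(r, i, d) for r in range(9)])   # once per column
            br, bc = 3 * (i // 3), 3 * (i % 3)
            clauses += exactly_one([var(br + r, bc + c, d)
                                    for r in range(3) for c in range(3)])  # once per box
    for r in range(9):
        for c in range(9):
            if grid[r][c]:
                clauses.append([var(r, c, grid[r][c] - 1)])            # givens as unit clauses
    return clauses


empty = [[0] * 9 for _ in range(9)]
print(len(sudoku_cnf(empty)))  # 11988
```

For an empty grid this produces 4 × 81 × 37 = 11,988 clauses; each given digit adds one unit clause, and a satisfying assignment decodes back to the filled grid.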

Made a three-step system for testing ML models and backing up cron jobs.

Compared our different models using the accuracy checker.

Adding Sentry tracking to testing submissions.

Adding Sentry tracking to BTC submissions and backup submissions in case of errors.

After taking some time off for personal reasons, today I got back into things. Added some more models and checked my progress on herobots, planning what I need to do next.

Switched to a new model for ETH prediction. Created an ETH spot check using Logistic Regression, Random Forests, KNN, Naive Bayes and XGBoost. Looked at the feature importances from XGBoost.
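A spot check like this can be sketched with scikit-learn's `cross_val_score`. This is illustrative only: the data is synthetic from `make_classification`, XGBoost is left out to keep dependencies light (its `XGBClassifier` could be added to the dict), and `TimeSeriesSplit` is used on the assumption that the real features are time-ordered.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import TimeSeriesSplit, cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier

# Synthetic stand-in for the ETH feature matrix (features -> up/down label).
X, y = make_classification(n_samples=300, n_features=8, random_state=0)

models = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
    "knn": KNeighborsClassifier(n_neighbors=5),
    "naive_bayes": GaussianNB(),
}

cv = TimeSeriesSplit(n_splits=5)  # each fold trains on the past and validates on the future
scores = {name: cross_val_score(model, X, y, cv=cv, scoring="accuracy").mean()
          for name, model in models.items()}

for name, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{name:20s} {score:.3f}")
```

The point of a spot check is breadth rather than tuning: every model runs with near-default settings, and only the promising ones get further work.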

Moved the new model for ETH prediction to testing. Added documentation. Set up logging to the submissions database if a prediction fails.

Retested old LSTM models and algorithmically created new features, creating a pipeline to test XGBoost models.

Made various Logistic Regression models, but most of my day was spent making my own backtester and then a fully automated pipeline that creates models for me, backtests them and saves the results. I am very happy.

Watched statistics lectures. Made more XGBoost models, created and backtested many more models, and added more features to my model creator.

Watched lectures on autocorrelation and learned how variance estimates differ for autocorrelated data. Tested the data for autocorrelation. Reran my automatic model generator and backtester on ETH. Derived perceptrons from scratch.
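A minimal NumPy sketch of the sample autocorrelation at a given lag (the standard estimator that divides by the overall sum of squares). Strong positive autocorrelation means neighbouring points carry overlapping information, so variance estimates that assume independence, such as the usual σ/√n standard error of the mean, are too optimistic. The random-walk and white-noise series are synthetic.

```python
import numpy as np


def autocorrelation(x, lag):
    """Sample autocorrelation at `lag` (lag >= 1)."""
    if lag < 1:
        raise ValueError("lag must be at least 1")
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    return np.dot(d[:-lag], d[lag:]) / np.dot(d, d)


rng = np.random.default_rng(0)
noise = rng.normal(size=1000)  # white noise: no memory
walk = np.cumsum(noise)        # random walk: each value builds on the last

print(autocorrelation(noise, 1))             # close to 0
print(autocorrelation(walk, 1))              # close to 1
print(autocorrelation([1, -1, 1, -1], 1))    # -0.75
```

Prices behave much more like the random walk than the noise, which is one reason models are often fitted on returns rather than raw prices.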

Manually made a new model that predicts with 100% accuracy... and solved P = NP, well, P != NP. Presented a demo of sigmoids and the Universal Approximation Theorem.

Made an AR model and more logistic regression models with added features.
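An AR(p) model regresses each value on its p previous values plus a constant. A minimal sketch that fits one by ordinary least squares with NumPy (the function names are illustrative; statsmodels has full AR and ARIMA implementations with diagnostics):

```python
import numpy as np


def fit_ar(series, p):
    """Fit x_t = c + a_1 x_{t-1} + ... + a_p x_{t-p} by ordinary least squares.

    Returns (c, coeffs) where coeffs[i] multiplies x_{t-1-i}.
    """
    x = np.asarray(series, dtype=float)
    # Column i holds the value i+1 steps before each target x[p:].
    lags = np.column_stack([x[p - i - 1:len(x) - i - 1] for i in range(p)])
    design = np.column_stack([np.ones(len(lags)), lags])
    params, *_ = np.linalg.lstsq(design, x[p:], rcond=None)
    return params[0], params[1:]


def predict_next(series, c, coeffs):
    """One-step-ahead forecast from the last p observations."""
    recent = np.asarray(series[::-1][:len(coeffs)], dtype=float)  # x_{t-1}, x_{t-2}, ...
    return c + np.dot(coeffs, recent)


# Noise-free series generated by x_t = 1 + 0.5 * x_{t-1}, starting at 0.
series = [0.0]
for _ in range(14):
    series.append(1.0 + 0.5 * series[-1])

c, coeffs = fit_ar(series, p=1)
print(c, coeffs)                          # approximately 1.0 and [0.5]
print(predict_next(series, c, coeffs))
```

On real price data the residuals are large and the coefficients noisy, so the fitted model is usually compared against a naive "tomorrow equals today" baseline.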

ExplAIn

ExplAIn

Showed how a straight line is equivalent to a perceptron without an activation function.

Using my Perceptron Demo to solve XOR.
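XOR is not linearly separable, so a single perceptron, whose decision boundary w·x + b = 0 is a straight line, cannot solve it; two layers can. The sketch below uses hand-picked weights rather than training, so it is not how my demo learns: one hidden unit computes OR, another NAND, and the output unit ANDs them.

```python
import numpy as np


def step(z):
    """Heaviside step activation used by the classic perceptron."""
    return (z > 0).astype(int)


def perceptron(x, w, b):
    # Without `step`, this is just w . x + b: the equation of a straight line (or plane).
    return step(x @ w + b)


X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])

# Hidden layer with hand-picked (not learned) weights.
h_or = perceptron(X, np.array([1, 1]), -0.5)     # OR
h_nand = perceptron(X, np.array([-1, -1]), 1.5)  # NAND
hidden = np.column_stack([h_or, h_nand])

# Output unit: AND of the two hidden units gives XOR.
xor = perceptron(hidden, np.array([1, 1]), -1.5)
print(xor)  # [0 1 1 0]
```

Each hidden unit draws one line; the output unit keeps only the band between them, which is exactly the region where XOR is 1.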

Fast.ai meetup 7

Also watched: https://ml4a.github.io/classes/itp-F18/06/

Although creating Conway's Game of Life, which did so much to popularise the field of cellular automata, would have been enough for one life to be well lived, it was just one small detour on your journey of mathematical genius. Seeing it as a child led me to where I am now and gave me a passion for ALife. R.I.P. John Conway.

On this day I made an implementation of Conway's Game of Life and started CodeLife, a project in ALife.
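A compact NumPy sketch of one Game of Life update (not my original implementation; the wrap-around edges are an assumption): count each cell's eight neighbours by summing shifted copies of the grid, then apply the rules of birth on 3 neighbours and survival on 2 or 3. A blinker makes a quick sanity check, since it flips between horizontal and vertical every generation.

```python
import numpy as np


def life_step(grid):
    """One Game of Life generation on a toroidal (wrap-around) grid of 0s and 1s."""
    # Count the eight neighbours of every cell by summing shifted copies of the grid.
    neighbours = sum(np.roll(np.roll(grid, dr, axis=0), dc, axis=1)
                     for dr in (-1, 0, 1) for dc in (-1, 0, 1)
                     if (dr, dc) != (0, 0))
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return ((neighbours == 3) | ((grid == 1) & (neighbours == 2))).astype(int)


blinker = np.zeros((5, 5), dtype=int)
blinker[2, 1:4] = 1                # horizontal line of three
after_one = life_step(blinker)     # becomes vertical
after_two = life_step(after_one)   # back to horizontal (period-2 oscillator)
print(after_one)
```

Vectorising the neighbour count this way avoids a Python loop over cells, which keeps large grids fast enough to animate.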

Created the player and perceptron agents for codelife.

Making a new agent type for codelife.

The agent gets stuck spinning in circles...

Adding Q-learning to codelife:

Before this point, my best-performing RL agents only cared about immediate reward. Given that an agent could either:

- see a half-dead grass patch and move onto it immediately, or

- see a half-dead grass patch, rotate left and right, see a fully live grass patch and move onto it,

I thought that being able to make decisions based on long-term reward would help a new type of agent beat the 5329-frame high score. Excited at this prospect, I decided to code a new agent that uses a Q-table to make decisions.

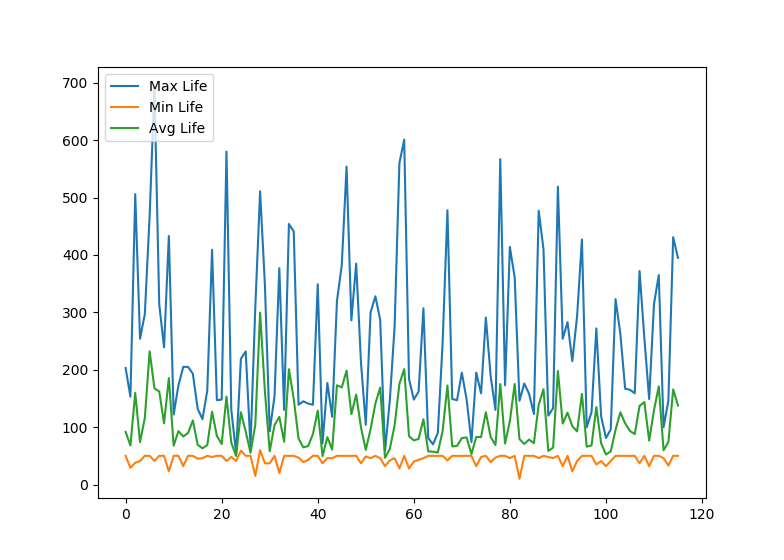
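For reference, a minimal tabular Q-learning agent looks something like this (the class name, state and action labels, and default parameter values are hypothetical placeholders, not codelife's actual code). The discount factor `gamma` is what lets an action with no immediate reward, such as rotating, earn credit for the grass it leads to.

```python
import random
from collections import defaultdict


class QTableAgent:
    """Tabular Q-learning sketch with epsilon-greedy action selection."""

    def __init__(self, actions, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.alpha = alpha            # learning rate
        self.gamma = gamma            # discount: how much future reward counts
        self.epsilon = epsilon        # exploration rate
        self.q = defaultdict(float)   # (state, action) -> estimated return, default 0
        self.rng = random.Random(seed)

    def act(self, state):
        """Usually the best known action, occasionally a random one."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def learn(self, state, action, reward, next_state):
        """Q(s,a) <- Q(s,a) + alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
        best_next = max(self.q[(next_state, a)] for a in self.actions)
        target = reward + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])


agent = QTableAgent(["left", "right", "forward"], alpha=0.5, gamma=0.9)
agent.learn("s0", "forward", 1.0, "s1")   # eating grass: Q(s0, forward) = 0.5
agent.learn("start", "left", 0.0, "s0")   # rotating: no reward, but 0.5 * 0.9 * 0.5 = 0.225
print(agent.q[("start", "left")])
```

With `gamma = 0` the rotation would stay at zero value forever, which matches the behaviour of agents that only care about immediate reward.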

This new type of agent now has some parameters, which I decided to optimise using a genetic algorithm. I track the max, min and average scores of each generation and plot them when I exit the simulation. However, the results were not what I was looking for.
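A minimal genetic algorithm that records the same max/min/average statistics per generation might look like the sketch below. Everything here is an assumption for illustration: truncation selection of the top half, uniform crossover, Gaussian mutation, and a toy fitness function standing in for an agent's score in the simulation. Because the parents carry over unchanged and this toy fitness is deterministic, the best score never decreases; with a noisy simulation score that guarantee no longer holds.

```python
import random
import statistics


def evolve(fitness, n_params, pop_size=20, generations=30, mutation_scale=0.1, seed=0):
    """Evolve real-valued parameter vectors; returns (population, history).

    history holds one (max, min, mean) fitness tuple per generation.
    """
    rng = random.Random(seed)
    population = [[rng.uniform(0, 1) for _ in range(n_params)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        scores = [fitness(ind) for ind in population]
        history.append((max(scores), min(scores), statistics.mean(scores)))
        ranked = [ind for _, ind in sorted(zip(scores, population),
                                           key=lambda pair: pair[0], reverse=True)]
        parents = ranked[:pop_size // 2]          # keep the top half unchanged
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Uniform crossover: each gene from either parent, then Gaussian mutation.
            child = [rng.choice(genes) + rng.gauss(0, mutation_scale) for genes in zip(a, b)]
            children.append(child)
        population = parents + children
    return population, history


def toy_fitness(params):
    # Hypothetical stand-in for an agent's score: best when every parameter is 0.5.
    return -sum((p - 0.5) ** 2 for p in params)


population, history = evolve(toy_fitness, n_params=3)
for gen in (0, len(history) - 1):
    best, worst, mean = history[gen]
    print(f"gen {gen:2d}  max {best:.4f}  min {worst:.4f}  mean {mean:.4f}")
```

The `history` list is in a convenient shape for plotting the three curves with matplotlib at the end of a run.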

Used the Python NEAT library to make a new codelife agent.

Started making a particle system to create a dynamic environment for codelife.