This package exports a dataset with the monthly number of deaths for every city in Brazil since 2015. Because the numbers keep changing, it also exports a web scraping function so you can fetch the most recent data whenever you want.

pip install brazil-monthly-deaths

The death counts change every day, so the most recent data is probably not bundled with this package. To fetch it yourself, install the Chrome driver so that Selenium can be used. See the Selenium documentation and the Chrome driver download page for details.

⚠️ When fetching new data, this package accesses an official website. Because it relies on web scraping, any change to the site's HTML will break it until the package is updated accordingly. If you experience any kind of failure, please open an issue.

Assuming you have installed the Chrome driver:

from brazil_monthly_deaths import brazil_deaths, data, update_df

# data is the data from 2015 to 2020

print(data)

# New records are added every day, so you should fetch the most recent data.
# Depending on your internet connection, it may take up to 6 minutes per month
# when run for all states. Consider selecting only the states you want to work on.

new_data = brazil_deaths(years=[2020], months=[5])

# update the lagging data provided by this package

current_data = update_df(data, new_data)

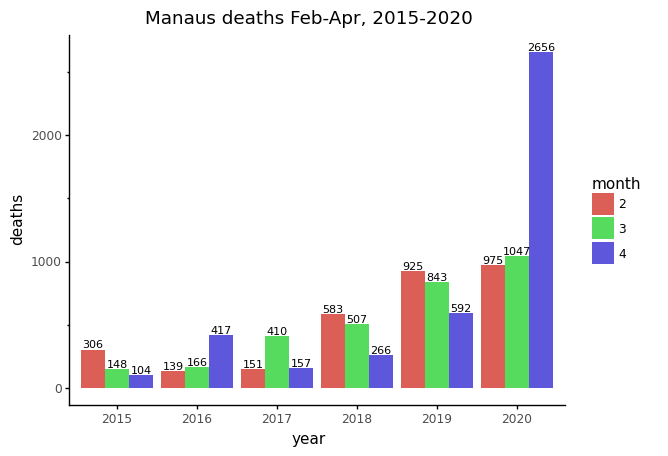

print(current_data)See the jupyter example to generate the following plot:

The example running:

| city_id | year | month | region | state | city | deaths |
|---|---|---|---|---|---|---|
| 3516805 | 2020 | 1 | Southeast | Rio de Janeiro | Tracunhaém | 8 |
| 21835289 | 2020 | 1 | Southeast | Rio de Janeiro | Trindade | 13 |
| 10791950 | 2020 | 1 | Southeast | Rio de Janeiro | Triunfo | 16 |
| 81875827 | 2020 | 1 | Southeast | Rio de Janeiro | Tupanatinga | 18 |
| 99521011 | 2020 | 1 | Southeast | Rio de Janeiro | Tuparetama | 4 |

This package exports several pandas DataFrames with the following columns:

- city_id : unique integer derived from the state and city,

- year : from 2015 to 2020,

- month : from 1 to 12,

- region : [North, Northeast, South, Southeast, Center_West],

- state : one of the 27 federative units of Brazil (the 26 states plus the Federal District),

- city : city name,

- deaths : number of deaths
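Because every exported frame shares these columns, aggregations are ordinary pandas operations. The sketch below uses a tiny hand-made frame with the same column names; the ids and death counts are invented for illustration and are not taken from the package's data.

```python
import pandas as pd

# Illustrative rows only: city_id values and death counts are made up.
sample = pd.DataFrame(
    {
        "city_id": [1, 2, 3, 1],
        "year": [2020, 2020, 2020, 2020],
        "month": [1, 1, 1, 2],
        "region": ["Southeast"] * 4,
        "state": ["São Paulo", "São Paulo", "Rio de Janeiro", "São Paulo"],
        "city": ["São Paulo", "Campinas", "Rio de Janeiro", "São Paulo"],
        "deaths": [10, 5, 7, 12],
    }
)

# Total deaths per state and month, summing over all cities of each state.
monthly_by_state = sample.groupby(
    ["state", "year", "month"], as_index=False
)["deaths"].sum()

print(monthly_by_state)
```

The same `groupby` call should work on the exported `data` frame, assuming its columns match the list above.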

from brazil_monthly_deaths import (

data, # full data

data_2015,

data_2016,

data_2017,

data_2018,

data_2019,

data_2020 # always out of date, you need to update it

)

You can use the brazil_deaths function to scrape new data directly from the Civil Registry Offices website. Just make sure you have installed the Chrome driver, as noted above.

Official note about the legal deadlines:

The family has up to 24 hours after the death to report it to the Registry, which in turn has up to five days to perform the death registration, and then up to eight days to send the completed record to the National Information Center of the Civil Registry (CRC Nacional), which updates this platform.

This means that the figures for the last 13 days are always changing.
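One practical consequence is that a month can only be treated as stable once all of its days fall outside that window. The following sketch computes the most recent such month for a given date. The helper name `last_settled_month` and the constant `SETTLING_DAYS` are hypothetical and not part of this package; the 13-day figure is the one quoted above.

```python
from datetime import date, timedelta

# Window taken from the note above; adjust it if the deadlines change.
SETTLING_DAYS = 13


def last_settled_month(today):
    """Return (year, month) of the latest month whose days are all
    older than the settling window, relative to `today`."""
    cutoff = today - timedelta(days=SETTLING_DAYS)
    # The month containing the cutoff is still partly inside the window,
    # so the latest fully settled month is the one before it.
    last_day_of_previous_month = cutoff.replace(day=1) - timedelta(days=1)
    return last_day_of_previous_month.year, last_day_of_previous_month.month


print(last_settled_month(date(2020, 6, 20)))
```

Months after the returned one are worth scraping again before they are used in an analysis.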

from brazil_monthly_deaths import brazil_deaths

Since it accesses an external website, the running time depends on your internet connection and your location. Consider selecting only the states you want to work on. When run for all states, each month of a single year may take up to 6 minutes.

df = brazil_deaths(

years=[2015, 2016, 2017, 2018, 2019, 2020],

months=range(1, 13, 1),

regions=_regions_names,

states=_states,

filename="data",

return_df=True,

save_csv=True,

verbose=True,

*args,

**kwargs

)

The _regions_names is:

["North", "Northeast", "South", "Southeast", "Center_West"]

The _states is:

[

"Acre", "Amazonas", "Amapá", "Pará",

"Rondônia", "Roraima", "Tocantins", "Paraná",

"Rio Grande do Sul", "Santa Catarina", "Espírito Santo",

"Minas Gerais", "Rio de Janeiro", "São Paulo",

"Distrito Federal", "Goiás", "Mato Grosso do Sul",

"Mato Grosso", "Alagoas", "Bahia", "Ceará",

"Maranhão", "Paraíba", "Pernambuco",

"Piauí", "Rio Grande do Norte", "Sergipe"

]

The *args and **kwargs are passed down to df.to_csv(..., *args, **kwargs).

Use update_df after you have scraped recent data from the Civil Registry Offices website to update the data provided in this package.
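To illustrate what forwarding arguments to `to_csv` means in practice, here is a minimal sketch. The helper `save_frame` is hypothetical and only mimics the forwarding pattern described above; it is not the package's actual implementation. `sep` and `index` are standard `DataFrame.to_csv` parameters.

```python
import io

import pandas as pd


def save_frame(df, target, *args, **kwargs):
    """Hypothetical helper: hand any extra arguments straight to to_csv."""
    df.to_csv(target, *args, **kwargs)


# Made-up row, used only to show the effect of the forwarded options.
frame = pd.DataFrame({"city": ["São Paulo"], "deaths": [10]})

# Write to an in-memory buffer so the sketch leaves no file behind.
buffer = io.StringIO()
save_frame(frame, buffer, sep=";", index=False)
csv_text = buffer.getvalue()

print(csv_text)
```

If the forwarding works as described, extra keyword arguments given to `brazil_deaths` (for example `sep=";"`) would shape the saved CSV in the same way.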

from brazil_monthly_deaths import brazil_deaths, data, update_df

new_data = brazil_deaths(years=[2020], months=[5])

current_data = update_df(data, new_data)

It basically appends the new data below the old data in the dataframe, then removes duplicate rows (ignoring the deaths column), keeping the most recent entries.

get_city_id returns the unique id for a combination of state and city.
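The append-then-deduplicate behaviour can be approximated in plain pandas. This is a minimal sketch under the assumption that rows are identified by every column except `deaths`. The function `update_sketch` is a hypothetical stand-in, not the package's `update_df`, and the rows are invented.

```python
import pandas as pd


def update_sketch(old, new):
    """Append `new` below `old`, then keep the last row for each key."""
    # Every column except "deaths" identifies a record.
    key_columns = [column for column in old.columns if column != "deaths"]
    combined = pd.concat([old, new], ignore_index=True)
    # keep="last" lets the freshly scraped rows win over the older ones.
    deduplicated = combined.drop_duplicates(subset=key_columns, keep="last")
    return deduplicated.reset_index(drop=True)


# Made-up rows with a reduced set of columns.
old_rows = pd.DataFrame(
    {"city_id": [1, 1], "year": [2020, 2020], "month": [4, 5], "deaths": [8, 10]}
)
new_rows = pd.DataFrame(
    {"city_id": [1, 2], "year": [2020, 2020], "month": [5, 5], "deaths": [14, 3]}
)

merged = update_sketch(old_rows, new_rows)
print(merged)
```

In the merged frame, the May row for city 1 carries the newer count while the untouched April row is preserved.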

from brazil_monthly_deaths import get_city_id

sao_paulo_id = get_city_id(state='São Paulo', city='São Paulo')

print(sao_paulo_id) # 89903871