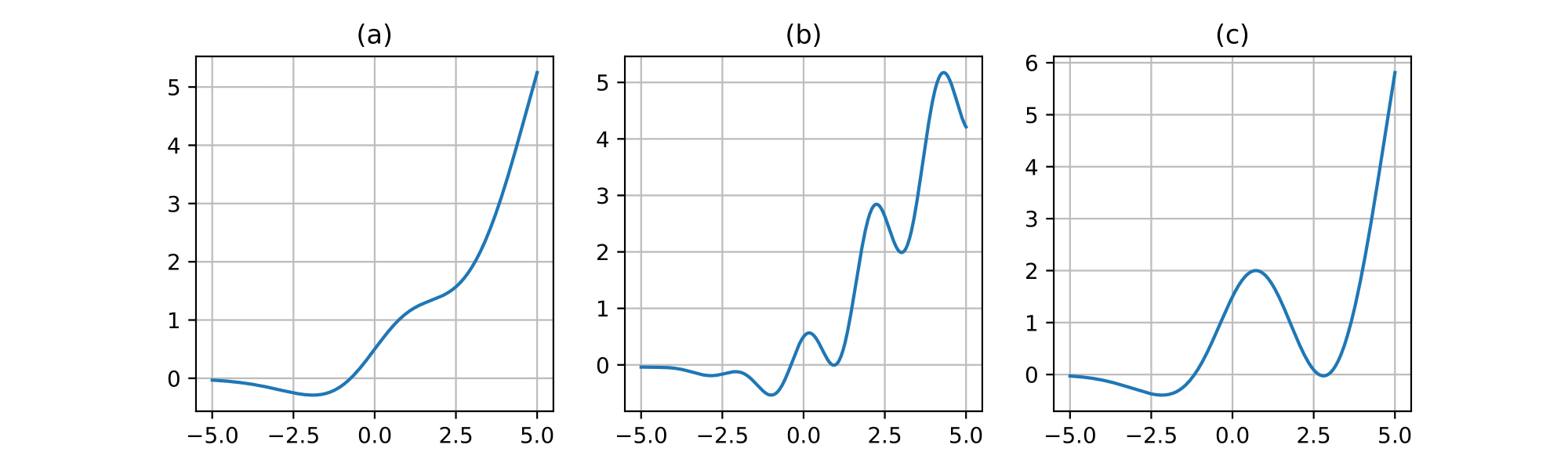

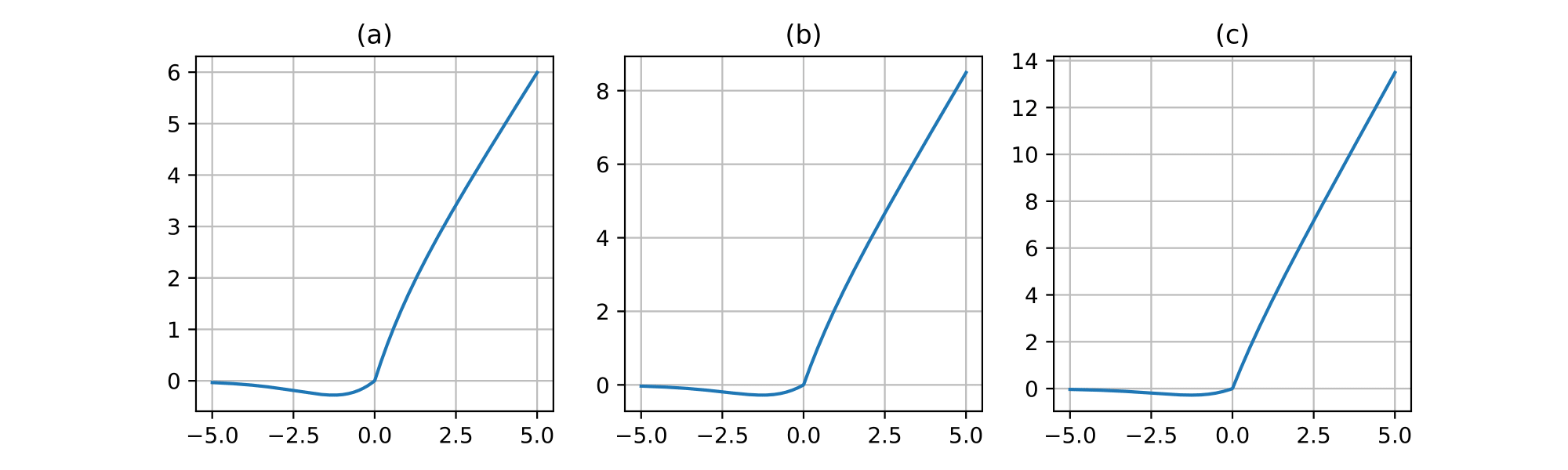

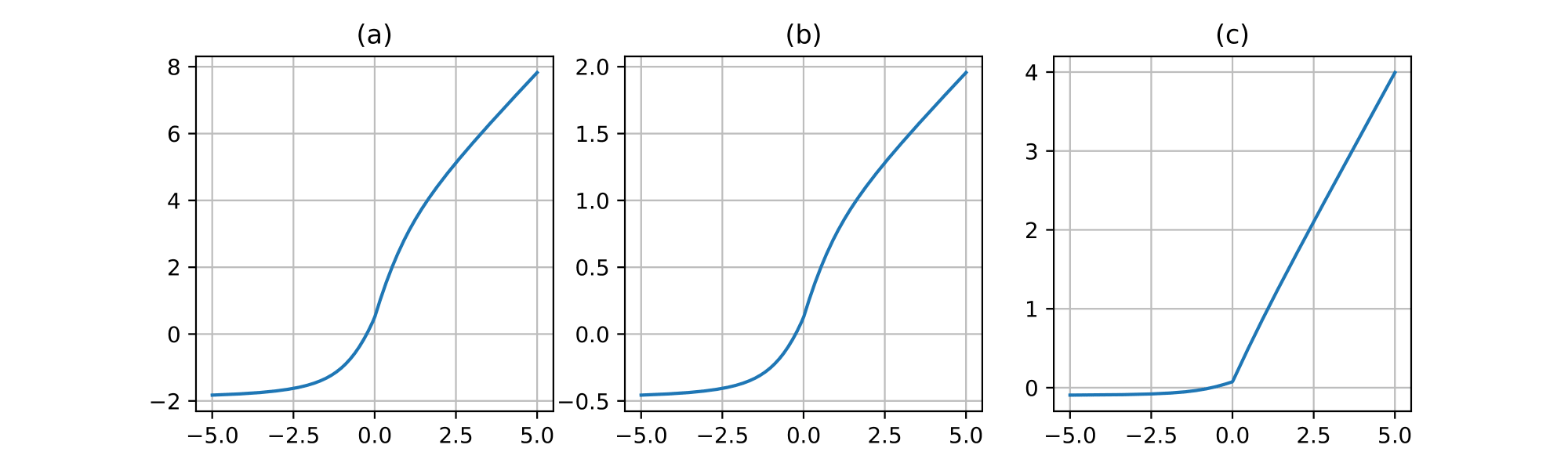

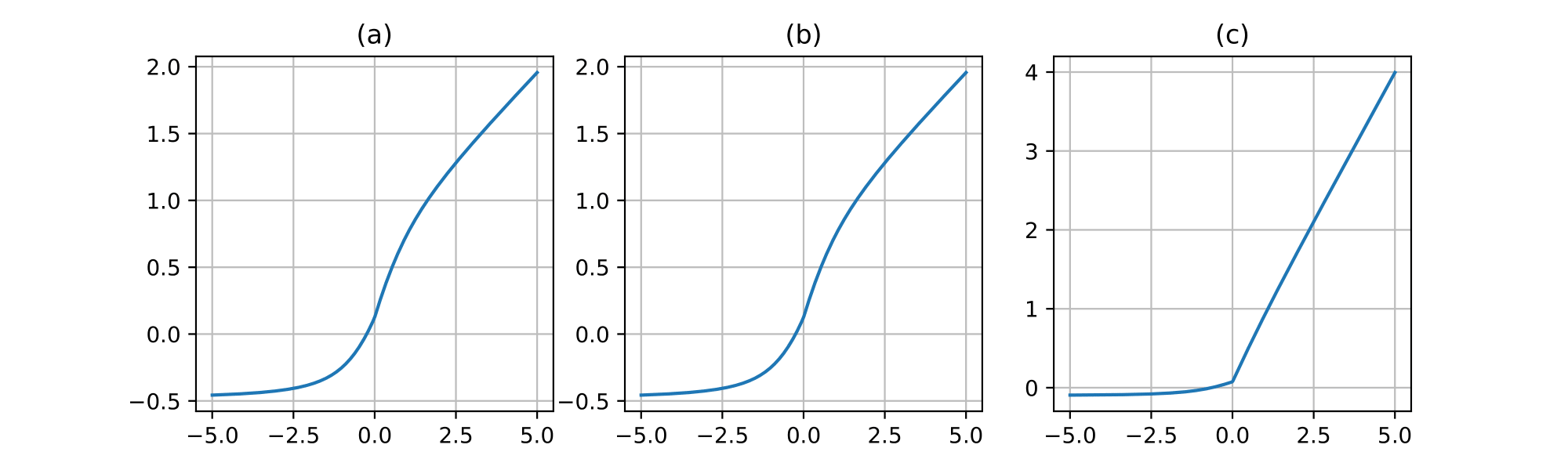

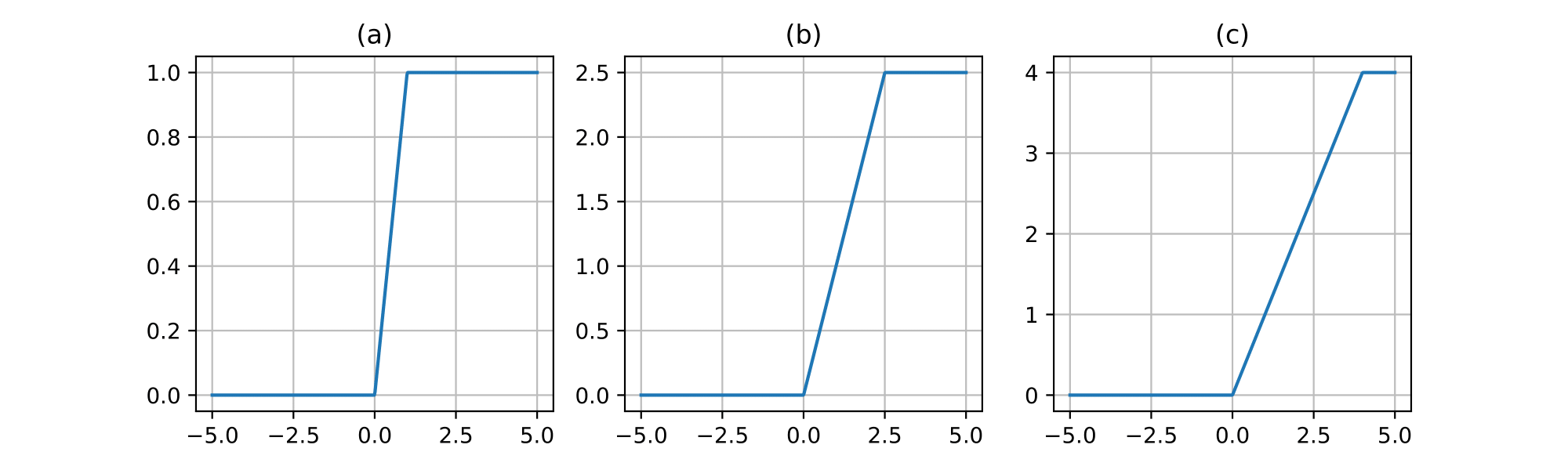

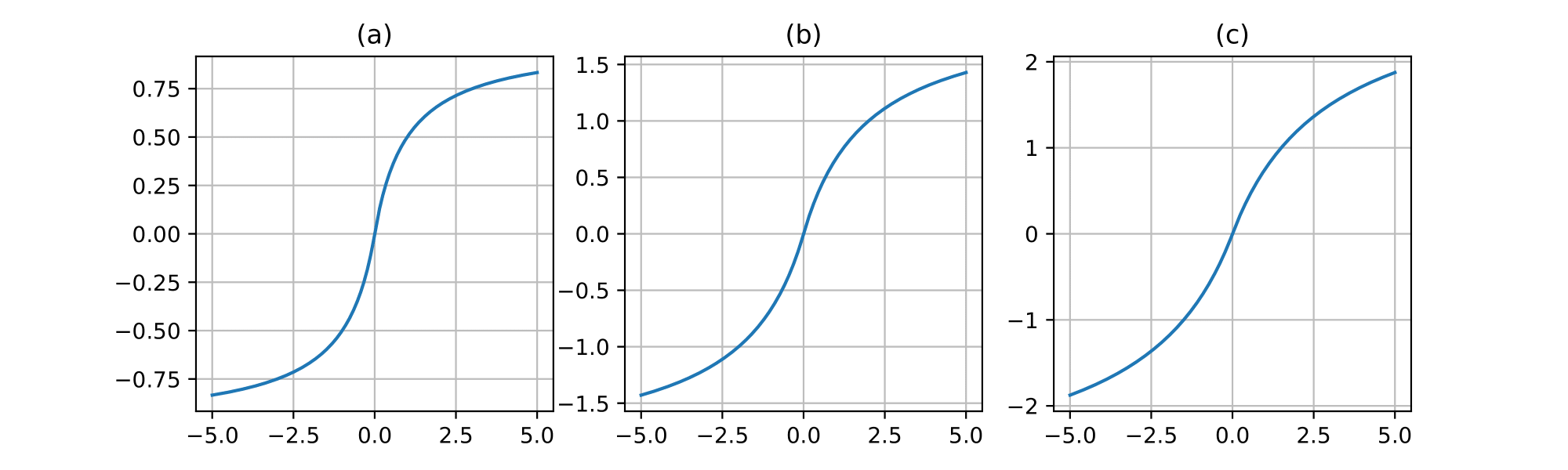

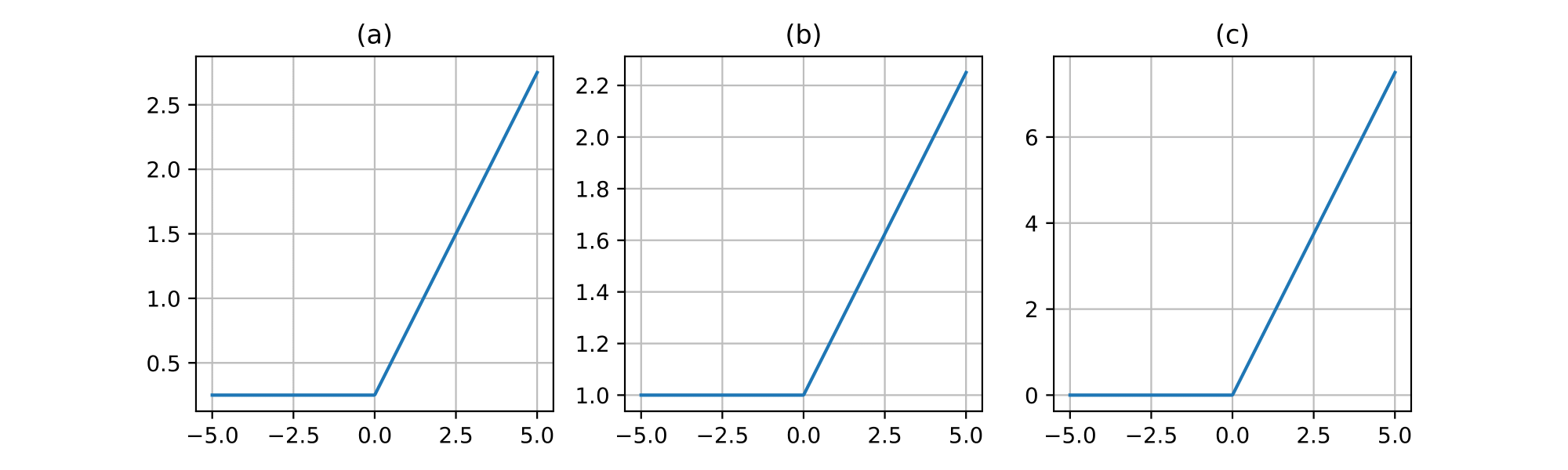

We propose a set of trainable activation functions: Cosinu-Sigmoidal Linear Unit (CosLU), DELU, Linear Combination (LinComb), Normalized Linear Combination (NormLinComb), Rectified Linear Unit N (ReLUN), Scaled Soft Sign (ScaledSoftSign), and Shifted Rectified Linear Unit (ShiLU).
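To give a rough feel for what some of these functions compute, here is a minimal pure-Python sketch of four of them on scalar inputs. The formulas are assumptions reconstructed for illustration and should be checked against activation.py. The names alpha, beta and n stand in for the trainable parameters, and their default values here are arbitrary. DELU and the two linear-combination activations are not covered.

```python
import math

# Scalar sketches of four proposed activations. The formulas are ASSUMED for
# illustration; activation.py holds the real definitions. alpha, beta and n
# stand in for trainable parameters, and the defaults are arbitrary.

def sigmoid(x: float) -> float:
    # Numerically stable logistic sigmoid
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def coslu(x: float, alpha: float = 1.0, beta: float = 1.0) -> float:
    # Assumed CosLU: (x + alpha * cos(beta * x)) * sigmoid(x)
    return (x + alpha * math.cos(beta * x)) * sigmoid(x)

def relun(x: float, n: float = 1.0) -> float:
    # Assumed ReLUN: ReLU capped from above at a trainable ceiling n
    return min(max(0.0, x), n)

def scaled_soft_sign(x: float, alpha: float = 1.0, beta: float = 1.0) -> float:
    # Assumed ScaledSoftSign: alpha * x / (beta + |x|), with beta > 0
    return alpha * x / (beta + abs(x))

def shilu(x: float, alpha: float = 1.0, beta: float = 0.0) -> float:
    # Assumed ShiLU: alpha * ReLU(x) + beta, i.e. a scaled and shifted ReLU
    return alpha * max(0.0, x) + beta

print([relun(v, n=2.0) for v in (-1.0, 0.5, 3.0)])  # [0.0, 0.5, 2.0]
```

In a network these would be applied elementwise, with alpha, beta and n learned by gradient descent alongside the layer weights.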

Create a virtual environment:

```bash
python3 -m venv venv
```

Activate it:

```bash
source venv/bin/activate
```

Install the dependencies:

```bash
pip install -r requirements.txt
```

There are three main files: train.py, test.py and plot.py. Run train.py first, then test.py, then plot.py.

Choose whichever configuration you want to test. Configurations are in the configs folder, and train.py and test.py use the same config. There are several plot configurations in the configs/plot folder.
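Because train.py and test.py read the same config, one path is enough for both phases. The sketch below builds that path and the two commands, assuming the configs/<activation>/<dataset>/<model>.yaml layout used by the ResNet-8 example in this README. The helpers config_path and commands_for are hypothetical and not part of the project.

```python
from pathlib import Path

# Hypothetical helpers, assuming the configs/<activation>/<dataset>/<model>.yaml
# layout of the ResNet-8 example; they are not part of the project.

def config_path(activation: str, dataset: str, model: str, root: str = "configs") -> Path:
    return Path(root) / activation / dataset / f"{model}.yaml"

def commands_for(activation: str, dataset: str, model: str) -> list:
    cfg = config_path(activation, dataset, model).as_posix()
    # train.py and test.py read the same config, so both commands reuse cfg
    return [
        ["python", "train.py", "--config", cfg],
        ["python", "test.py", "--config", cfg],
    ]

for cmd in commands_for("coslu", "cifar10", "resnet8"):
    print(" ".join(cmd))
```

This prints the same two commands as the CosLU example. Each list could also be passed to subprocess.run(cmd, check=True) to launch the corresponding phase.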

The scripts folder contains many predefined run scripts; run any of them with sh. scripts/train.sh and scripts/test.sh train and test all possible configurations, and scripts/plot.sh plots the results after training and testing.
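One way to picture what "all possible configurations" covers is to collect every YAML file under configs while skipping configs/plot, then build one command per file. The following is a hypothetical Python sketch of that idea, not the contents of the shell scripts, and it assumes every training config uses the .yaml extension.

```python
from pathlib import Path

def training_configs(root: str = "configs") -> list:
    """Collect every .yaml config under root, skipping the plot configs."""
    root_path = Path(root)
    plot_dir = root_path / "plot"
    # Exclude any file whose ancestors include configs/plot
    return sorted(p for p in root_path.rglob("*.yaml") if plot_dir not in p.parents)

def train_commands(root: str = "configs") -> list:
    # One train.py invocation per config, roughly what a "train everything" script does
    return [["python", "train.py", "--config", p.as_posix()] for p in training_configs(root)]
```

Swapping train.py for test.py in train_commands would give the matching test pass over the same configs.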

All results of the train and test phases are stored in the logs folder.

All proposed trainable activations are in activation.py.
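As their names suggest, LinComb and NormLinComb combine several activation functions through trainable weights. The sketch below assumes LinComb(x) = Σ w_i F_i(x) and that NormLinComb divides this sum by the L2 norm of the weights. The base functions (ReLU, tanh, identity) are a hypothetical choice, so consult activation.py for the actual definitions. The sketch also shows why the weights are trainable: the derivative of LinComb with respect to w_i is simply F_i(x).

```python
import math
from typing import Callable, Sequence

Activation = Callable[[float], float]

def relu(x: float) -> float:
    return max(0.0, x)

def identity(x: float) -> float:
    return x

# Hypothetical choice of base functions F_i; the project may use different ones.
BASE: Sequence[Activation] = (relu, math.tanh, identity)

def lincomb(x: float, weights: Sequence[float], fns: Sequence[Activation] = BASE) -> float:
    # Assumed form: sum_i w_i * F_i(x), where the weights w_i are trainable
    if len(weights) != len(fns):
        raise ValueError("need exactly one weight per base function")
    return sum(w * f(x) for w, f in zip(weights, fns))

def norm_lincomb(x: float, weights: Sequence[float], fns: Sequence[Activation] = BASE) -> float:
    # Assumed form: LinComb divided by the L2 norm of the weight vector
    norm = math.sqrt(sum(w * w for w in weights))
    return lincomb(x, weights, fns) / norm

def lincomb_weight_grad(x: float, fns: Sequence[Activation] = BASE) -> list:
    # d LinComb / d w_i = F_i(x); an optimizer would use this to update each w_i
    return [f(x) for f in fns]

print(lincomb(2.0, [1.0, 0.0, 0.0]))       # 2.0
print(norm_lincomb(2.0, [3.0, 0.0, 4.0]))  # 2.8
```

The normalized variant is unchanged when all weights are scaled by the same positive factor, so only the direction of the weight vector matters under this assumed form.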

For example, suppose you want to train and test the ResNet-8 model with the CosLU trainable activation on the CIFAR-10 dataset:

```bash
python train.py --config configs/coslu/cifar10/resnet8.yaml
python test.py --config configs/coslu/cifar10/resnet8.yaml
```

If you want to train and test all proposed trainable activations with a specific model and dataset, you can use a script from the scripts folder. For example, to train and test the DNN2 model on the MNIST dataset:

```bash
sh scripts/dnn2_mnist.sh
```

Train and test all possible configurations:

```bash
sh scripts/train.sh
sh scripts/test.sh
```

Plot graphs for all configurations. This works even if some configurations have not been trained:

```bash
sh scripts/plot.sh
```

Project CITATION.

The project is distributed under the MIT License.