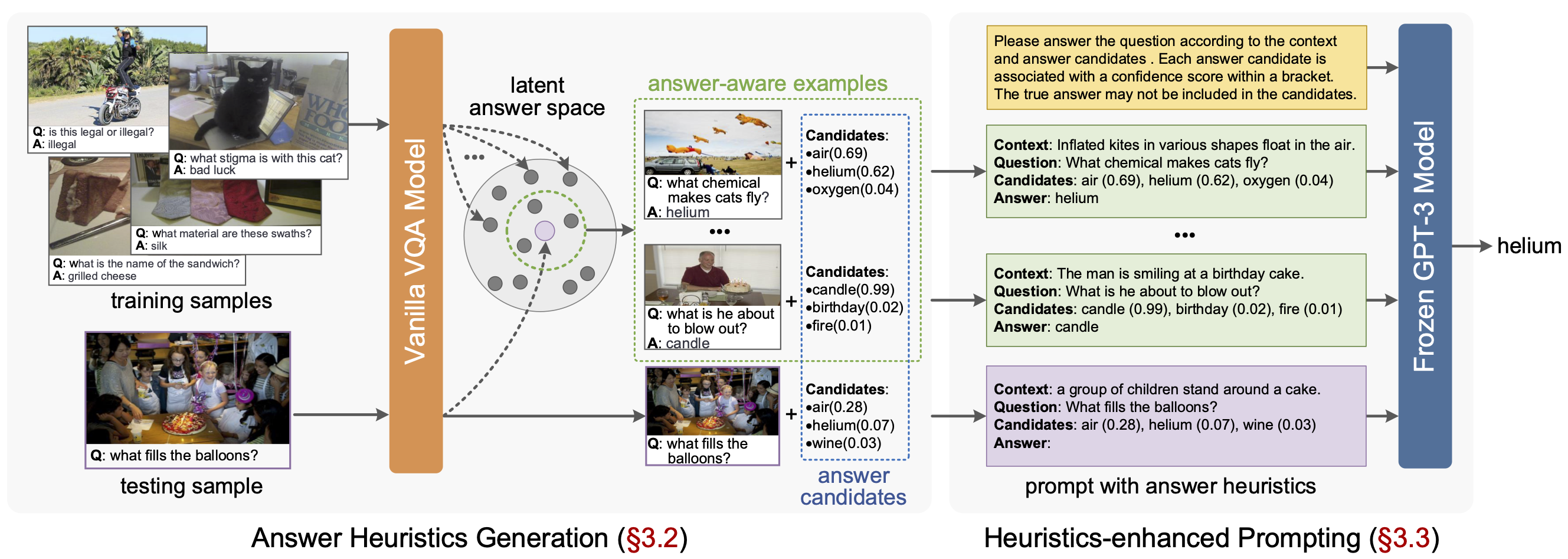

This repository is the official implementation of Prophet, a two-stage framework designed to prompt GPT-3 with answer heuristics for knowledge-based VQA. In stage one, we train a vanilla VQA model on a specific knowledge-based VQA dataset and extract two types of complementary answer heuristics from the model: answer candidates and answer-aware examples. In stage two, the answer heuristics are used to prompt GPT-3 to generate better answers. Prophet significantly outperforms existing state-of-the-art methods on two datasets, achieving 61.1% on OK-VQA and 55.7% on A-OKVQA. Please refer to our paper for details.
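The two kinds of answer heuristics can be illustrated with a small, dependency-free sketch. It assumes that the stage-one VQA model produces one score per answer in its vocabulary and one fused feature vector per question. Answer candidates are then the top-K scored answers, and answer-aware examples are the training questions whose features are most similar (cosine similarity) to the test question's. The function names and toy data are hypothetical and do not mirror the repository's code.

```python
import math
from typing import Dict, List, Sequence, Tuple


def answer_candidates(scores: Sequence[float], vocab: Sequence[str],
                      k: int) -> List[Tuple[str, float]]:
    """Return the k highest-scoring answers together with their scores."""
    ranked = sorted(zip(vocab, scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def answer_aware_examples(query_feat: Sequence[float],
                          train_feats: Dict[str, Sequence[float]],
                          n: int) -> List[str]:
    """Return the ids of the n training questions closest to the query feature."""
    ranked = sorted(train_feats,
                    key=lambda qid: cosine(query_feat, train_feats[qid]),
                    reverse=True)
    return ranked[:n]


if __name__ == "__main__":
    # Toy answer vocabulary and model scores (hypothetical values).
    vocab = ["red", "blue", "green", "yellow"]
    scores = [0.10, 0.85, 0.40, 0.05]
    print(answer_candidates(scores, vocab, k=2))

    # Toy 2-D "fused features" for three training questions.
    train_feats = {"q1": [1.0, 0.0], "q2": [0.7, 0.7], "q3": [0.0, 1.0]}
    print(answer_aware_examples([0.9, 0.1], train_feats, n=2))
```

Sorting the whole candidate list is fine for a sketch; with a large answer vocabulary or training set, a partial selection such as heapq.nlargest avoids the full sort.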

To conduct the following experiments, we recommend a machine with at least one RTX 3090 GPU, 50 GB of RAM, and 300 GB of free disk space. We strongly recommend storing the data on an SSD to ensure high-speed I/O.

The following software is required:

- Python >= 3.9

- CUDA >= 11.3

- PyTorch >= 1.12.0

- the other packages listed in environment.yml
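To confirm that an environment meets these minimums, a short check script can help. This is a sketch, not a script shipped with the repository; it assumes the PyTorch minimum is 1.12.0 and reads the CUDA version that PyTorch was built against (torch.version.cuda), which may differ from the system toolkit version.

```python
import sys
from importlib import util
from itertools import zip_longest


def parse_version(text: str) -> tuple:
    """Turn '1.12.0+cu113' into (1, 12, 0); stops at the first non-numeric part."""
    parts = []
    for piece in text.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def at_least(version: str, minimum: str) -> bool:
    """Compare versions component-wise, padding the shorter one with zeros."""
    for have, need in zip_longest(parse_version(version),
                                  parse_version(minimum), fillvalue=0):
        if have != need:
            return have > need
    return True


def check_environment() -> dict:
    """Report which of the assumed requirements are satisfied."""
    report = {"python>=3.9": sys.version_info >= (3, 9)}
    if util.find_spec("torch") is None:
        report["torch installed"] = False
        return report
    import torch
    report["torch>=1.12.0"] = at_least(torch.__version__, "1.12.0")
    cuda = torch.version.cuda  # None for CPU-only builds
    report["cuda>=11.3"] = cuda is not None and at_least(cuda, "11.3")
    report["gpu visible"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    for check, ok in check_environment().items():
        print(f"{check:16s} {'OK' if ok else 'MISSING'}")
```

Run it inside the activated conda environment so that it inspects the same interpreter the scripts will use.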

We recommend installing Anaconda first and then creating a new environment with the following command:

```bash
$ conda env create -f environment.yml
```

This command creates a new environment named prophet with all the required packages. To activate the environment, run:

```bash
$ conda activate prophet
```

Before running the code, prepare two folders: datasets and assets. The datasets folder contains all the datasets and features used in this project, and the assets folder contains the pre-computed resources and other intermediate files (you can use them to skip some early experiment steps and save time).

First, download the datasets and assets, then put the datasets and assets folders in the root directory of this project. Download the MSCOCO 2014 and 2017 images from here (you can skip MSCOCO 2017 if you only run experiments on OK-VQA) and put them in the datasets folder. Run the following command to extract the image features:

```bash
$ bash scripts/extract_img_feats.sh
```

After that, the datasets folder will have the following structure:


```
datasets
├── aokvqa
│   ├── aokvqa_v1p0_test.json
│   ├── aokvqa_v1p0_train.json
│   └── aokvqa_v1p0_val.json
├── coco2014
│   ├── train2014
│   └── val2014
├── coco2014_feats
│   ├── train2014
│   └── val2014
├── coco2017
│   ├── test2017
│   ├── train2017
│   └── val2017
├── coco2017_feats
│   ├── test2017
│   ├── train2017
│   └── val2017
├── okvqa
│   ├── mscoco_train2014_annotations.json
│   ├── mscoco_val2014_annotations.json
│   ├── OpenEnded_mscoco_train2014_questions.json
│   └── OpenEnded_mscoco_val2014_questions.json
└── vqav2
    ├── v2_mscoco_train2014_annotations.json
    ├── v2_mscoco_val2014_annotations.json
    ├── v2_OpenEnded_mscoco_train2014_questions.json
    ├── v2_OpenEnded_mscoco_val2014_questions.json
    ├── v2valvg_no_ok_annotations.json
    ├── v2valvg_no_ok_questions.json
    ├── vg_annotations.json
    └── vg_questions.json
```
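Before launching long training runs, it can save time to verify that this layout is complete. The sketch below is a hypothetical helper, not part of the repository; its EXPECTED list is transcribed from the tree above, and include_coco2017=False skips the MSCOCO 2017 entries for OK-VQA-only setups.

```python
import sys
from pathlib import Path
from typing import List

# Entries transcribed from the datasets tree above (relative to the datasets folder).
EXPECTED = [
    "aokvqa/aokvqa_v1p0_test.json",
    "aokvqa/aokvqa_v1p0_train.json",
    "aokvqa/aokvqa_v1p0_val.json",
    "coco2014/train2014",
    "coco2014/val2014",
    "coco2014_feats/train2014",
    "coco2014_feats/val2014",
    "coco2017/test2017",
    "coco2017/train2017",
    "coco2017/val2017",
    "coco2017_feats/test2017",
    "coco2017_feats/train2017",
    "coco2017_feats/val2017",
    "okvqa/mscoco_train2014_annotations.json",
    "okvqa/mscoco_val2014_annotations.json",
    "okvqa/OpenEnded_mscoco_train2014_questions.json",
    "okvqa/OpenEnded_mscoco_val2014_questions.json",
    "vqav2/v2_mscoco_train2014_annotations.json",
    "vqav2/v2_mscoco_val2014_annotations.json",
    "vqav2/v2_OpenEnded_mscoco_train2014_questions.json",
    "vqav2/v2_OpenEnded_mscoco_val2014_questions.json",
    "vqav2/v2valvg_no_ok_annotations.json",
    "vqav2/v2valvg_no_ok_questions.json",
    "vqav2/vg_annotations.json",
    "vqav2/vg_questions.json",
]


def missing_entries(root, include_coco2017: bool = True) -> List[str]:
    """List expected files/folders that do not exist under root."""
    base = Path(root)
    wanted = [e for e in EXPECTED
              if include_coco2017 or not e.startswith("coco2017")]
    return [e for e in wanted if not (base / e).exists()]


if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "datasets"
    missing = missing_entries(root)
    if missing:
        print(f"{len(missing)} missing under {root}:")
        for entry in missing:
            print("  " + entry)
    else:
        print("datasets folder looks complete")
```

The check only tests for existence; it does not validate file contents or confirm that feature extraction finished for every image.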

We've also provided a tree structure of the entire project in misc/tree.txt.

We provide bash scripts for each stage of the Prophet framework in the scripts directory. Two common arguments need attention when running each script:

- --task: specifies the task (i.e., the target dataset). The available options are ok (train on the OK-VQA train set and evaluate on the OK-VQA test set), aok_val (train on the A-OKVQA train set and evaluate on the A-OKVQA validation set), and aok_test (train on the A-OKVQA train and validation sets and evaluate on the A-OKVQA test set).

- --version: specifies the version name of this run. This name is used to create a new folder in the outputs directory that stores the results of this run.

Any argument can be omitted when invoking the following scripts; omitted arguments fall back to the default values written in the script files.
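If you prefer to drive the scripts from Python (for example, to sweep several versions), a small helper can assemble the command lines. build_command and TASKS are hypothetical helpers, not part of the repository; the flag names are copied from the commands in this README, and arguments left as None are simply not passed, so the script defaults apply.

```python
import shlex
from typing import List, Optional

# Task options described above: task -> (training data, evaluation split).
TASKS = {
    "ok": ("OK-VQA train", "OK-VQA test"),
    "aok_val": ("A-OKVQA train", "A-OKVQA val"),
    "aok_test": ("A-OKVQA train + val", "A-OKVQA test"),
}


def build_command(script: str, task: Optional[str] = None,
                  version: Optional[str] = None, **extra) -> List[str]:
    """Assemble a 'bash scripts/<script>.sh ...' argument list.

    Keyword names map directly to flags (gpu="0" -> --gpu 0).
    Options whose value is None are omitted so the script defaults apply.
    """
    if task is not None and task not in TASKS:
        raise ValueError(f"unknown task {task!r}; expected one of {sorted(TASKS)}")
    cmd = ["bash", f"scripts/{script}.sh"]
    options = {"task": task, "version": version, **extra}
    for name, value in options.items():
        if value is not None:
            cmd += [f"--{name}", str(value)]
    return cmd


if __name__ == "__main__":
    cmd = build_command(
        "finetune", task="ok", version="okvqa_finetune_1", gpu="0",
        pretrained_model="outputs/okvqa_pretrain_1/ckpts/epoch_13.pkl",
    )
    print(shlex.join(cmd))
    # To launch from the project root: subprocess.run(cmd, check=True)
```

Returning an argument list rather than a single string avoids shell-quoting issues when the list is passed to subprocess.run.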

Before running any script, you can also update the configuration files (*.yml) in the configs directory to change hyperparameters.

In stage one, we train an improved MCAN model (see the paper for a detailed description) by pretraining it on VQA v2 and finetuning it on the target dataset. Taking OK-VQA as an example, run the pretraining step with the following command:

```bash
$ bash scripts/pretrain.sh \
    --task ok --version okvqa_pretrain_1 --gpu 0
```

Multiple GPUs are supported by setting, for example, --gpu 0,1,2,3. We've provided a pretrained model for OK-VQA here. Then, run the finetuning step with the following command:

```bash
$ bash scripts/finetune.sh \
    --task ok --version okvqa_finetune_1 --gpu 0 \
    --pretrained_model outputs/okvqa_pretrain_1/ckpts/epoch_13.pkl
```

All epoch checkpoints are saved in outputs/ckpts/{your_version_name}. We've also provided a finetuned model for OK-VQA here. Pick one of these checkpoints to generate answer heuristics by running the following command:

```bash
$ bash scripts/heuristics_gen.sh \
    --task ok --version okvqa_heuristics_1 \
    --gpu 0 --ckpt_path outputs/okvqa_finetune_1/ckpts/epoch_6.pkl \
    --candidate_num 10 --example_num 100
```

The extracted answer heuristics are stored as candidates.json and examples.json in the outputs/results/{your_version_name} directory.
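In stage two, these heuristics are turned into a text prompt for GPT-3: each in-context example and the test question are shown with a textual image context, the question, and the answer candidates with their confidences. The sketch below illustrates the idea only. The Sample fields, the instruction wording, and the layout are assumptions, and the repository's actual prompt template and the structure of candidates.json and examples.json may differ.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Candidate = Tuple[str, float]  # (answer, confidence); assumed representation


@dataclass
class Sample:
    caption: str                    # textual image context (assumed field)
    question: str
    candidates: Sequence[Candidate]
    answer: str = ""                # empty for the test question


# Illustrative instruction text, not the repository's exact wording.
HEAD = ("Please answer the question according to the context and the answer "
        "candidates. Each candidate is followed by its confidence score.\n\n")


def format_sample(s: Sample) -> str:
    """Render one sample; an empty answer leaves 'Answer:' open for GPT-3."""
    cands = ", ".join(f"{a} ({c:.2f})" for a, c in s.candidates)
    return (f"Context: {s.caption}\n"
            f"Question: {s.question}\n"
            f"Candidates: {cands}\n"
            f"Answer: {s.answer}").rstrip()


def build_prompt(examples: List[Sample], query: Sample) -> str:
    """Concatenate the answer-aware examples, then the unanswered query."""
    blocks = [format_sample(e) for e in examples]
    # Drop any answer on the query so the ground truth never leaks into the prompt.
    blocks.append(format_sample(Sample(query.caption, query.question, query.candidates)))
    return HEAD + "\n\n".join(blocks)


if __name__ == "__main__":
    example = Sample("A man riding a wave on a surfboard.", "What sport is this?",
                     [("surfing", 0.97), ("skiing", 0.02)], "surfing")
    query = Sample("A plate with a sandwich and fries.",
                   "Which country is this dish associated with?",
                   [("usa", 0.41), ("france", 0.22)])
    print(build_prompt([example], query))
```

Keeping the query's "Answer:" line open lets the language model complete it, and the completion is then taken as the predicted answer.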

Stage two requires the candidates.json and examples.json files generated in stage one. Alternatively, you can skip stage one and use the answer heuristic files we provide in assets. To prompt GPT-3 with answer heuristics and generate better answers, run the following command:

```bash
$ bash scripts/prompt.sh \
    --task ok --version okvqa_prompt_1 \
    --examples_path outputs/results/okvqa_heuristics_1/examples.json \
    --candidates_path outputs/results/okvqa_heuristics_1/candidates.json \
    --openai_key sk-xxxxxxxxxxxxxxxxxxxxxx
```

For the ok and aok_val tasks, whose annotations are available, scores are computed automatically after finetuning and prompting. You can also evaluate the result files produced by finetuning or prompting by running:

```bash
$ bash scripts/evaluate_file.sh \
    --task ok --result_path outputs/results/okvqa_prompt_1/result.json
```

Using the provided models, we obtain the corresponding prediction files, which yield the results in the following table:

| Model | MCAN | Prophet |
|---|---|---|
| OK-VQA Accuracy | 53.0% | 61.1% |

For the aok_test task, submit the result file to the A-OKVQA Leaderboard for evaluation.
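For intuition about the reported accuracies: OK-VQA, and the direct-answer setting of A-OKVQA, use the standard VQA soft accuracy, in which a prediction is fully correct if at least three annotators gave the same answer, averaged over the leave-one-annotator-out subsets. The sketch below is a simplified illustration, not the repository's evaluation code. It only lowercases and strips answers, whereas the official script also normalizes punctuation, articles, and number words, so its numbers can differ slightly from those reported by evaluate_file.sh.

```python
from typing import Dict, List, Sequence


def normalize(ans: str) -> str:
    # Simplified normalization; the official script does considerably more.
    return ans.strip().lower()


def vqa_accuracy(pred: str, gt_answers: Sequence[str]) -> float:
    """Soft accuracy averaged over the leave-one-annotator-out subsets."""
    if not gt_answers:
        return 0.0
    pred = normalize(pred)
    gts = [normalize(a) for a in gt_answers]
    scores = []
    for i in range(len(gts)):
        others = gts[:i] + gts[i + 1:]
        matches = sum(1 for a in others if a == pred)
        scores.append(min(1.0, matches / 3))
    return sum(scores) / len(scores)


def overall_accuracy(preds: Dict[str, str], gts: Dict[str, List[str]]) -> float:
    """Mean accuracy in percent over all question ids in gts (missing = wrong)."""
    total = sum(vqa_accuracy(preds.get(qid, ""), answers)
                for qid, answers in gts.items())
    return 100.0 * total / len(gts)


if __name__ == "__main__":
    # Three of ten annotators said "surfing": the answer earns 0.9, not 1.0.
    print(vqa_accuracy("Surfing", ["surfing"] * 3 + ["surf"] * 7))
```

The leave-one-out averaging is why an answer given by exactly three annotators scores 0.9 rather than full credit.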

If you use this code in your research, please cite our paper:

```bibtex
@article{shao2023prompting,
  title={Prompting Large Language Models with Answer Heuristics for Knowledge-based Visual Question Answering},
  author={Shao, Zhenwei and Yu, Zhou and Wang, Meng and Yu, Jun},
  journal={Computer Vision and Pattern Recognition (CVPR)},
  year={2023}
}
```

This project is licensed under the Apache License 2.0 - see the LICENSE file for details.