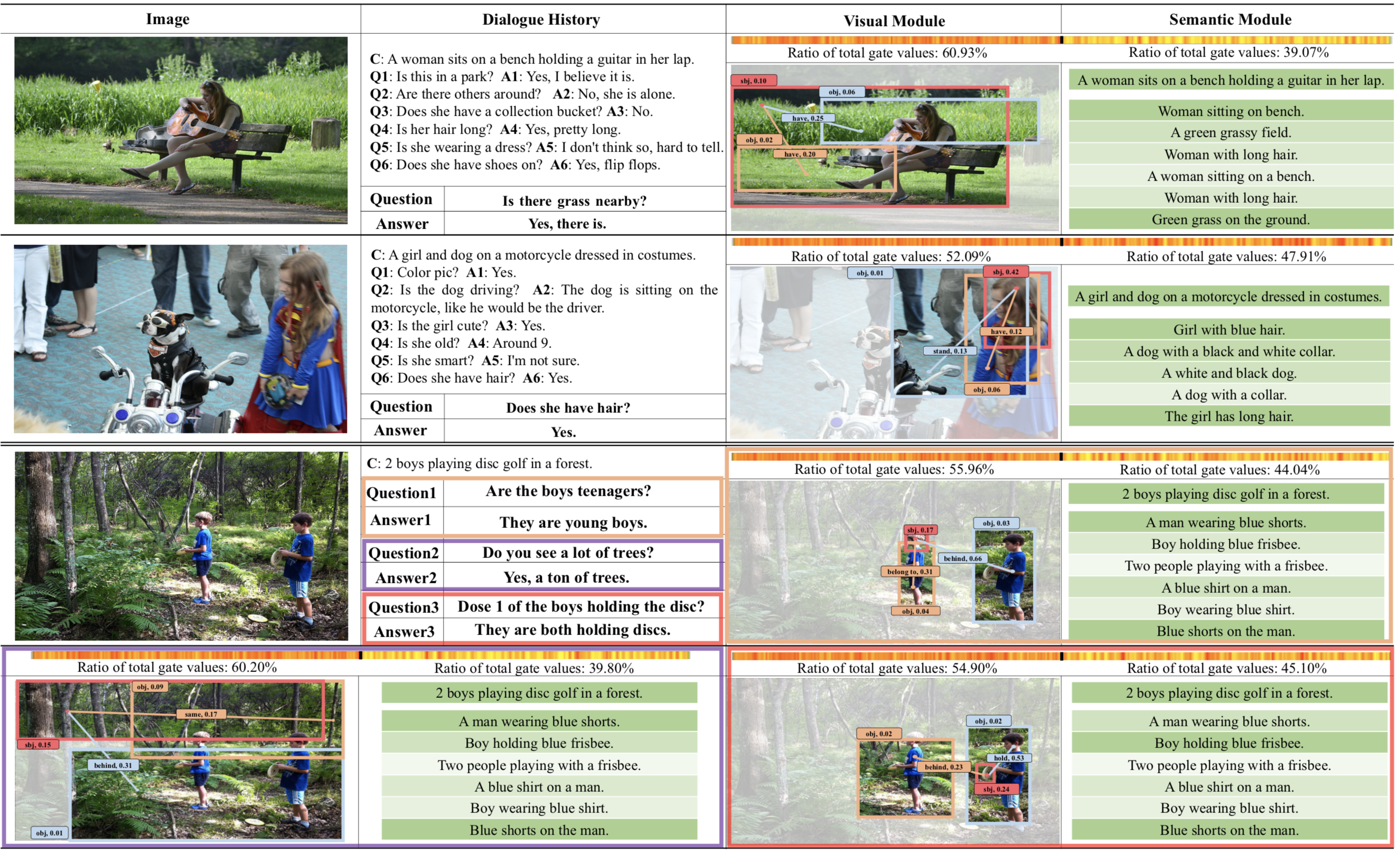

Example results from the VisDial v1.0 validation dataset.

This is a PyTorch implementation of DualVD: An Adaptive Dual Encoding Model for Deep Visual Understanding in Visual Dialogue (AAAI 2020).

If you use this code in your research, please consider citing:

    @inproceedings{jiang2019dualvd,
      title = {DualVD: An Adaptive Dual Encoding Model for Deep Visual Understanding in Visual Dialogue},
      author = {Jiang, Xiaoze and Yu, Jing and Qin, Zengchang and Zhuang, Yingying and Zhang, Xingxing and Hu, Yue and Wu, Qi},
      year = {2020},
      pages = {11125--11132},
      booktitle = {Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence (AAAI-20)}
    }

This code is implemented with PyTorch v1.0 and supports CUDA 9 and cuDNN 7 out of the box.

    conda create -n visdialch python=3.6
    conda activate visdialch # activate the environment and install all dependencies
    cd DualVD/
    pip install -r requirements.txt

- Download the VisDial v1.0 dialog JSON files and images from here.

- Download the word counts file for the VisDial v1.0 train split from here. It is used to build the vocabulary.

- Use Faster R-CNN to extract image features from here.

- Use Large-Scale-VRD to extract visual relation embeddings from here.

- Use DenseCap to extract local captions from here.

- Generate ELMo word vectors from here.

- Download pre-trained GloVe word vectors from here.
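The vocabulary and word-vector steps above can be sketched as follows. This is a minimal illustration, not the repository's code: it assumes the word counts file is a JSON object mapping each token to its frequency, and that words seen fewer than a hypothetical `min_count` times are replaced by an `<UNK>` token. The special token names and the `build_vocabulary`, `encode`, and `load_glove` helpers are hypothetical; `load_glove` assumes the usual GloVe text format (a word followed by its space-separated vector components on each line).

```python
import io
import json
from typing import Dict, List, TextIO

# Hypothetical special tokens; the repository may use different names.
SPECIAL_TOKENS = ["<PAD>", "<S>", "</S>", "<UNK>"]


def build_vocabulary(word_counts: Dict[str, int], min_count: int = 5) -> Dict[str, int]:
    """Map each sufficiently frequent word to an index, special tokens first."""
    # Keep words seen at least `min_count` times; sort for a deterministic order.
    kept = sorted(w for w, c in word_counts.items() if c >= min_count)
    vocab = {tok: i for i, tok in enumerate(SPECIAL_TOKENS)}
    for word in kept:
        vocab[word] = len(vocab)
    return vocab


def encode(tokens: List[str], vocab: Dict[str, int]) -> List[int]:
    """Convert tokens to indices, mapping out-of-vocabulary words to <UNK>."""
    unk = vocab["<UNK>"]
    return [vocab.get(t, unk) for t in tokens]


def load_glove(lines: TextIO, vocab: Dict[str, int], dim: int) -> List[List[float]]:
    """Build an embedding matrix (one row per vocab index) from GloVe-format text.

    Vocabulary words absent from the GloVe file keep an all-zero row.
    """
    matrix = [[0.0] * dim for _ in range(len(vocab))]
    for line in lines:
        parts = line.rstrip().split(" ")
        word, values = parts[0], parts[1:]
        if word in vocab and len(values) == dim:
            matrix[vocab[word]] = [float(v) for v in values]
    return matrix


# Toy usage with made-up counts and vectors.
counts = json.loads('{"what": 12, "color": 7, "is": 20, "zebra": 2}')
vocab = build_vocabulary(counts, min_count=5)
ids = encode(["what", "color", "zebra"], vocab)  # [6, 4, 3]; "zebra" is below min_count
emb = load_glove(io.StringIO("color 0.1 0.2\nwhat 0.3 0.4\n"), vocab, dim=2)
```

Putting the special tokens first keeps their indices fixed regardless of the corpus, so padding can always use index 0.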

Train the DualVD model as follows:

    python train.py --config-yml configs/lf_disc_faster_rcnn_x101_bs32.yml --gpu-ids 0 1 # provide more ids for multi-GPU execution; other args...

The code has an `--overfit` flag, which is useful for rapid debugging: it takes a batch of 5 examples and overfits the model on them.
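A minimal sketch of how a debugging flag like `--overfit` might be wired up, assuming it simply truncates the training set to its first 5 examples so the model sees the same small batch every iteration. Only the flag names come from the commands in this README; the defaults, the `parse_args` helper, and the `OverfitSubset` wrapper are hypothetical and not taken from `train.py`.

```python
import argparse
from typing import List, Optional, Sequence


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Hypothetical CLI mirroring the flags used in this README."""
    parser = argparse.ArgumentParser(description="Hypothetical DualVD training CLI")
    parser.add_argument("--config-yml", default="configs/lf_disc_faster_rcnn_x101_bs32.yml")
    # nargs="+" accepts one or more GPU ids, e.g. --gpu-ids 0 1
    parser.add_argument("--gpu-ids", nargs="+", type=int, default=[0])
    parser.add_argument("--save-dirpath", default="checkpoints/")
    # store_true: the flag takes no value; its presence enables overfitting mode
    parser.add_argument("--overfit", action="store_true")
    return parser.parse_args(argv)


class OverfitSubset:
    """Wrap an indexable dataset, exposing only its first `num_examples` items."""

    def __init__(self, dataset: Sequence, num_examples: int = 5) -> None:
        self.dataset = dataset
        self.num_examples = min(num_examples, len(dataset))

    def __len__(self) -> int:
        return self.num_examples

    def __getitem__(self, index: int):
        if not 0 <= index < self.num_examples:
            raise IndexError(index)
        return self.dataset[index]


args = parse_args(["--gpu-ids", "0", "1", "--overfit"])
train_data = list(range(100))  # stand-in for the real VisDial training dataset
if args.overfit:
    train_data = OverfitSubset(train_data)
print(args.gpu_ids, len(train_data))  # [0, 1] 5
```

If the loss on these 5 examples does not approach zero, the bug is likely in the model or loss rather than in the data pipeline.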

The script saves a model checkpoint at every epoch to the path specified by `--save-dirpath`. Refer to `visdialch/utils/checkpointing.py` for details on how checkpointing is managed.
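A rough sketch of per-epoch checkpointing, to convey the idea before reading `visdialch/utils/checkpointing.py`. It assumes one file per epoch named `checkpoint_<epoch>.pth` inside the `--save-dirpath` directory; the `EpochCheckpointer` class and this naming scheme are hypothetical. To stay runnable without PyTorch it serializes with `pickle`; a real PyTorch training loop would typically call `torch.save` on the model and optimizer `state_dict()`s instead.

```python
import os
import pickle
import tempfile
from typing import Any, Dict


class EpochCheckpointer:
    """Save one checkpoint file per epoch under a fixed directory (hypothetical)."""

    def __init__(self, save_dirpath: str) -> None:
        self.save_dirpath = save_dirpath
        os.makedirs(save_dirpath, exist_ok=True)

    def path_for(self, epoch: int) -> str:
        return os.path.join(self.save_dirpath, f"checkpoint_{epoch}.pth")

    def step(self, epoch: int, state: Dict[str, Any]) -> str:
        """Serialize `state` for this epoch and return the path written."""
        path = self.path_for(epoch)
        with open(path, "wb") as f:
            pickle.dump(state, f)
        return path

    def load(self, epoch: int) -> Dict[str, Any]:
        with open(self.path_for(epoch), "rb") as f:
            return pickle.load(f)


# Toy usage: three "epochs" with placeholder state dicts.
with tempfile.TemporaryDirectory() as tmp:
    ckpt = EpochCheckpointer(tmp)
    for epoch in range(3):
        ckpt.step(epoch, {"epoch": epoch, "model": {"w": [float(epoch)]}})
    saved = sorted(os.listdir(tmp))
    restored = ckpt.load(2)
print(saved)  # ['checkpoint_0.pth', 'checkpoint_1.pth', 'checkpoint_2.pth']
```

Keeping every epoch's file (rather than overwriting one) lets you evaluate any earlier checkpoint with `--load-pthpath`.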

Use TensorBoard to monitor training progress. Recommended: run `tensorboard --logdir /path/to/save_dir --port 8008` and visit `localhost:8008` in the browser.

A trained model checkpoint can be evaluated as follows:

    python evaluate.py --config-yml /path/to/config.yml --load-pthpath /path/to/checkpoint.pth --split val --gpu-ids 0

- This code began with batra-mlp-lab/visdial-challenge-starter-pytorch. We thank the developers for doing most of the heavy lifting.