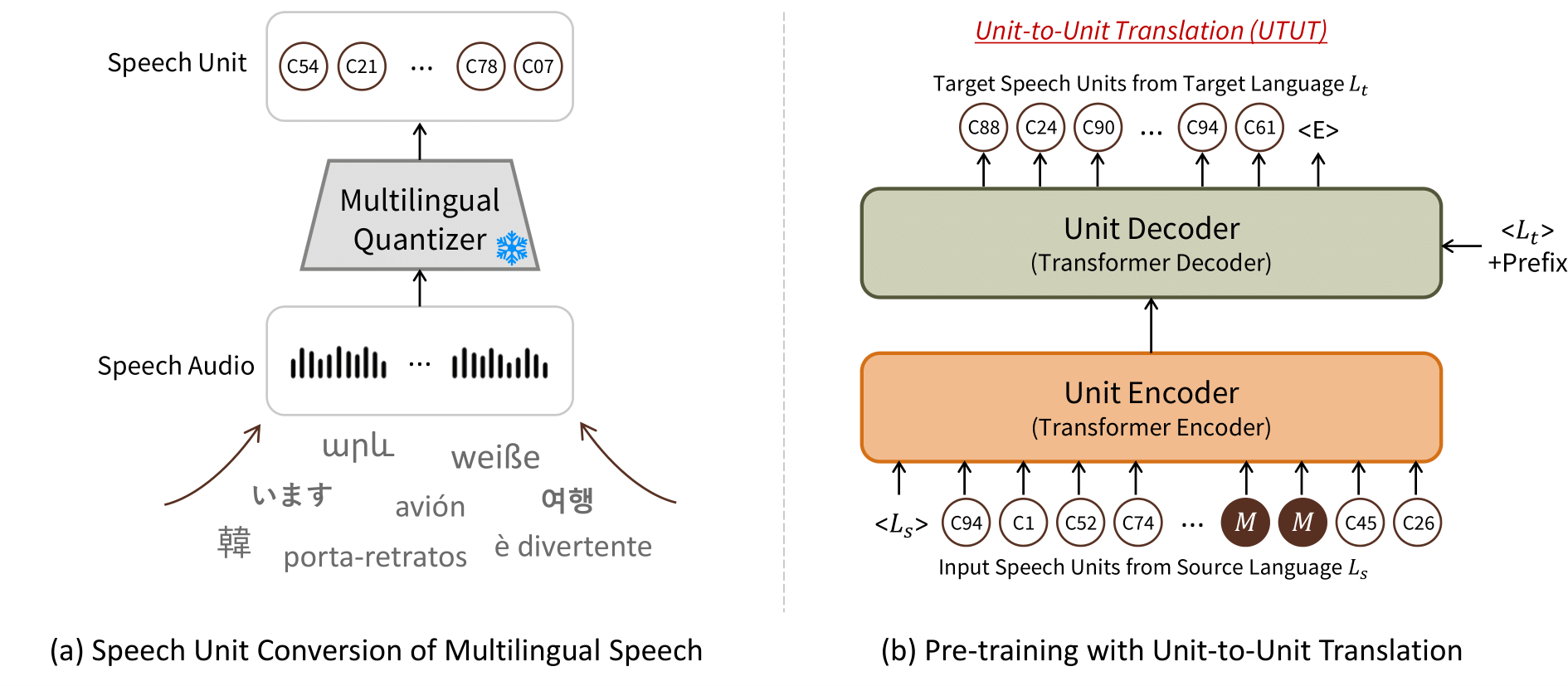

UTUT: Textless Unit-to-Unit Pre-training for Many-to-Many Multimodal-to-Speech Machine Translation by Learning Unified Speech and Text Representations

Official PyTorch implementation for the following paper:

Textless Unit-to-Unit Pre-training for Many-to-Many Multimodal-to-Speech Machine Translation by Learning Unified Speech and Text Representations

Minsu Kim*, Jeongsoo Choi*, Dahun Kim, Yong Man Ro

[Demo]

    git clone -b main --single-branch https://github.com/choijeongsoo/utut
    cd utut
    git submodule init
    git submodule update
    pip install --editable fairseq

- mHuBERT Base, layer 11, km 1000
  - reference: textless_s2st_real_data

The current version provides only the pre-trained UTUT model checkpoint and inference code for multilingual speech-to-speech translation.

- En (English), Es (Spanish), and Fr (French)
  - reference: textless_s2st_real_data

- It (Italian), De (German), and Nl (Dutch)

| Unit config | Unit size | Vocoder language | Dataset | Model |
|---|---|---|---|---|
| mHuBERT, layer 11 | 1000 | It | M-AILABS (male) | ckpt, config |
| mHuBERT, layer 11 | 1000 | De | CSS10 | ckpt, config |
| mHuBERT, layer 11 | 1000 | Nl | CSS10 | ckpt, config |

UTUT is pre-trained on VoxPopuli and mTEDx, in which a large portion of the data comes from European Parliament events.

Before using the pre-trained model, please consider whether this data domain matches the one you want to apply it to.

    $ cd utut
    $ PYTHONPATH=fairseq python inference.py \
        --in-wav-path samples/en/1.wav samples/en/2.wav samples/en/3.wav \
        --out-wav-path samples/es/1.wav samples/es/2.wav samples/es/3.wav \
        --src-lang en --tgt-lang es \
        --mhubert-path /path/to/mhubert_base_vp_en_es_fr_it3.pt \
        --kmeans-path /path/to/mhubert_base_vp_en_es_fr_it3_L11_km1000.bin \
        --utut-path /path/to/utut_sts.pt \
        --vocoder-path /path/to/vocoder_es.pt \
        --vocoder-cfg-path /path/to/config_es.json
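When translating many files, the paired `--in-wav-path` and `--out-wav-path` lists can be generated rather than typed by hand. The following is a minimal sketch, assuming the i-th output path corresponds to the i-th input path and that outputs keep the input file name under a target-language directory, as the sample command suggests. The helper `build_s2st_command` and the `ckpt` dictionary keys are hypothetical and not part of this repository; the function only assembles the argument list.

```python
from pathlib import PurePosixPath
from typing import Dict, List


def build_s2st_command(
    in_wavs: List[str],
    out_dir: str,
    src_lang: str,
    tgt_lang: str,
    ckpt: Dict[str, str],
) -> List[str]:
    """Assemble the argument list for inference.py (hypothetical helper).

    Assumption: outputs are written to out_dir under the same file name
    as each input, in the same order as the inputs.
    """
    out_wavs = [str(PurePosixPath(out_dir) / PurePosixPath(p).name) for p in in_wavs]
    return [
        "python", "inference.py",
        "--in-wav-path", *in_wavs,
        "--out-wav-path", *out_wavs,
        "--src-lang", src_lang,
        "--tgt-lang", tgt_lang,
        "--mhubert-path", ckpt["mhubert"],
        "--kmeans-path", ckpt["kmeans"],
        "--utut-path", ckpt["utut"],
        "--vocoder-path", ckpt["vocoder"],
        "--vocoder-cfg-path", ckpt["vocoder_cfg"],
    ]


if __name__ == "__main__":
    cmd = build_s2st_command(
        ["samples/en/1.wav", "samples/en/2.wav", "samples/en/3.wav"],
        "samples/es",
        "en",
        "es",
        {
            "mhubert": "/path/to/mhubert_base_vp_en_es_fr_it3.pt",
            "kmeans": "/path/to/mhubert_base_vp_en_es_fr_it3_L11_km1000.bin",
            "utut": "/path/to/utut_sts.pt",
            "vocoder": "/path/to/vocoder_es.pt",
            "vocoder_cfg": "/path/to/config_es.json",
        },
    )
    print(" ".join(cmd))
```

To actually run the assembled command, it could be passed to `subprocess.run(cmd, check=True, env={**os.environ, "PYTHONPATH": "fairseq"})` from the repository root, mirroring the `PYTHONPATH=fairseq` prefix of the shell command.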

    $ cd utut
    $ python
    >>> import fairseq
    >>> import unit2unit.utut_pretraining
    >>> ckpt_path = "/path/to/utut_sts.pt"
    >>> models, _, _ = fairseq.checkpoint_utils.load_model_ensemble_and_task([ckpt_path])
    >>> model = models[0]

    $ cd utut
    $ PYTHONPATH=fairseq python -m speech2unit.inference \
        --in-wav-path samples/en/1.wav samples/en/2.wav samples/en/3.wav \
        --out-unit-path samples/en/1.unit samples/en/2.unit samples/en/3.unit \
        --mhubert-path /path/to/mhubert_base_vp_en_es_fr_it3.pt \
        --kmeans-path /path/to/mhubert_base_vp_en_es_fr_it3_L11_km1000.bin

We use the mHuBERT model trained on 3 languages (en, es, and fr) as the quantizer.
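The `.unit` files carry the discrete unit sequence between stages. The sketch below assumes a `.unit` file is plain text holding whitespace-separated integer cluster IDs in the range 0 to 999 (matching the km 1000 quantizer). That file format is an assumption, not something this README states, so inspect a generated file before relying on it. `parse_units` is a hypothetical helper name.

```python
from typing import List

NUM_UNITS = 1000  # k-means codebook size ("km 1000")


def parse_units(text: str, num_units: int = NUM_UNITS) -> List[int]:
    """Parse whitespace-separated unit IDs and check their range.

    Assumption: the file content is plain text of integer IDs; the real
    on-disk format of .unit files may differ.
    """
    units = [int(tok) for tok in text.split()]
    for u in units:
        if not 0 <= u < num_units:
            raise ValueError(f"unit {u} is outside [0, {num_units})")
    return units


if __name__ == "__main__":
    example = "71 71 12 999 5"  # made-up sequence, for illustration only
    units = parse_units(example)
    print(len(units), "units,", len(set(units)), "distinct")
```

A range check like this can catch a mismatch between the k-means model used for quantization and the unit vocabulary the downstream model expects.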

    $ cd utut
    $ python -m unit2unit.inference \
        --in-unit-path samples/en/1.unit samples/en/2.unit samples/en/3.unit \
        --out-unit-path samples/es/1.unit samples/es/2.unit samples/es/3.unit \
        --utut-path /path/to/utut_sts.pt \
        --src-lang en --tgt-lang es

UTUT supports 19 languages: en (English), es (Spanish), fr (French), it (Italian), pt (Portuguese), el (Greek), ru (Russian), cs (Czech), da (Danish), de (German), fi (Finnish), hr (Croatian), hu (Hungarian), lt (Lithuanian), nl (Dutch), pl (Polish), ro (Romanian), sk (Slovak), and sl (Slovene).

    $ cd utut
    $ python -m unit2speech.inference \
        --in-unit-path samples/es/1.unit samples/es/2.unit samples/es/3.unit \
        --out-wav-path samples/es/1.wav samples/es/2.wav samples/es/3.wav \
        --vocoder-path /path/to/vocoder_es.pt \
        --vocoder-cfg-path /path/to/config_es.json

Unit-to-speech synthesis supports 6 languages: en (English), es (Spanish), fr (French), it (Italian), de (German), and nl (Dutch).
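Because the translation model covers more languages than the vocoders do, it can help to check a language pair before running the full pipeline. The sketch below is based only on the two language lists in this README: it treats a source language as usable if UTUT lists it, and a target language as synthesizable only if a vocoder is also listed for it. The names `UTUT_LANGS`, `VOCODER_LANGS`, and `check_s2st_pair` are hypothetical and not part of the repository.

```python
from typing import List

# Language codes copied from the lists in this README.
UTUT_LANGS = frozenset(
    "en es fr it pt el ru cs da de fi hr hu lt nl pl ro sk sl".split()
)
VOCODER_LANGS = frozenset("en es fr it de nl".split())


def check_s2st_pair(src_lang: str, tgt_lang: str) -> List[str]:
    """Return a list of problems for a speech-to-speech language pair.

    An empty list means both lists in the README cover the pair.
    """
    problems = []
    if src_lang not in UTUT_LANGS:
        problems.append(f"source language '{src_lang}' is not in the UTUT list")
    if tgt_lang not in UTUT_LANGS:
        problems.append(f"target language '{tgt_lang}' is not in the UTUT list")
    elif tgt_lang not in VOCODER_LANGS:
        problems.append(
            f"no vocoder is listed for target language '{tgt_lang}'"
        )
    return problems


if __name__ == "__main__":
    for pair in [("en", "es"), ("en", "pt"), ("xx", "nl")]:
        print(pair, check_s2st_pair(*pair) or "ok")
```

For a target language without a listed vocoder, unit-to-unit translation may still be possible, but the resulting units cannot be turned into a waveform with the vocoders listed here.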

This repository is built upon Fairseq and speech-resynthesis. We thank the authors for open-sourcing these projects.

If our work is useful for your research, please cite the following paper:

    @article{kim2023many,
      title={Many-to-Many Spoken Language Translation via Unified Speech and Text Representation Learning with Unit-to-Unit Translation},
      author={Minsu Kim and Jeongsoo Choi and Dahun Kim and Yong Man Ro},
      journal={arXiv preprint arXiv:2308.01831},
      year={2023}
    }