uaMix-MAE: EFFICIENT TUNING OF PRETRAINED AUDIO TRANSFORMERS WITH UNSUPERVISED AUDIO MIXTURES (paper)

This is the official implementation of uaMix-MAE: Efficient Tuning of Pretrained Audio Transformers with Unsupervised Audio Mixtures by Afrina Tabassum (Virginia Tech), Dung Tran (Microsoft Applied Sciences Group), Trung Dang (Microsoft Applied Sciences Group), Ismini Lourentzou (University of Illinois Urbana-Champaign), and Kazuhito Koishida (Microsoft Applied Sciences Group).

Code coming soon.

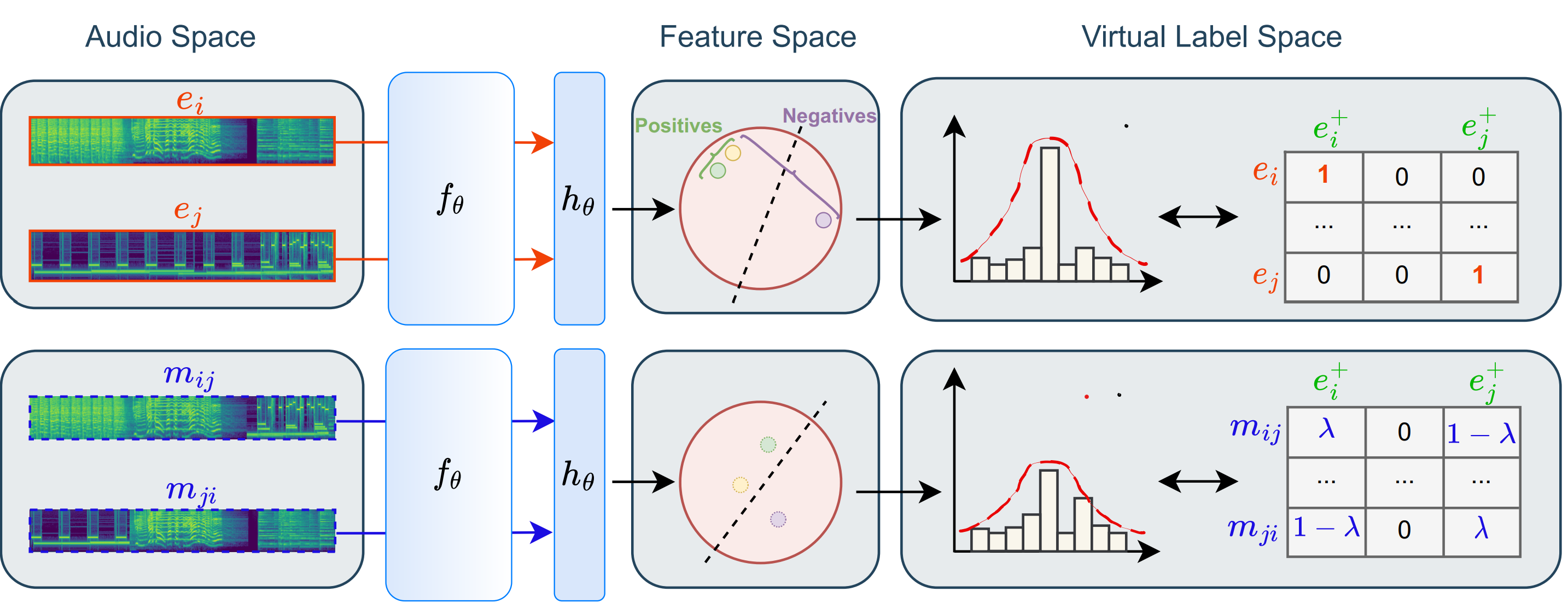

This paper proposes uaMix-MAE, an efficient instance discrimination (ID) tuning strategy that leverages unsupervised audio mixtures. Using contrastive tuning, uaMix-MAE aligns the representations of pretrained masked autoencoders (MAEs), enabling effective adaptation to task-specific semantics. To optimize the model with small amounts of unlabeled data, we propose an audio mixing technique that manipulates audio samples in both the input and virtual label spaces. Experiments in low/few-shot settings show that uaMix-MAE achieves 4-6% accuracy improvements over various benchmarks when tuned with limited unlabeled data, such as AudioSet-20K.
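The abstract mentions mixing audio samples in both the input and virtual label spaces. The sketch below illustrates one common form of that idea, i-Mix-style mixing for contrastive instance discrimination, in plain Python. It is not the uaMix-MAE implementation (which has not been released yet). The function names `mix_batch`, `soft_contrastive_loss`, and `l2_normalize`, the Beta(α, α) sampling of the mixing coefficient, the temperature value, and the toy vectors standing in for audio features and encoder outputs are all hypothetical choices for illustration.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Vector = List[float]


def l2_normalize(v: Sequence[float]) -> Vector:
    """Scale a vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def mix_batch(
    batch: Sequence[Vector], alpha: float = 1.0, rng: Optional[random.Random] = None
) -> Tuple[List[Vector], List[Vector], float, List[int]]:
    """Hypothetical i-Mix-style mixing (assumption, not the paper's code).

    Each sample i is mixed with a randomly permuted partner perm[i] in the
    input space, and its virtual label (the one-hot instance identity e_i)
    is mixed with e_perm[i] using the same coefficient lam ~ Beta(alpha, alpha).
    """
    rng = rng or random.Random()
    n = len(batch)
    lam = rng.betavariate(alpha, alpha)
    perm = list(range(n))
    rng.shuffle(perm)
    # Input-space mixing: convex combination of the two samples.
    mixed_x = [
        [lam * a + (1.0 - lam) * b for a, b in zip(batch[i], batch[perm[i]])]
        for i in range(n)
    ]
    # Virtual-label-space mixing: soft target over the n instance identities.
    mixed_y = []
    for i in range(n):
        y = [0.0] * n
        y[i] += lam
        y[perm[i]] += 1.0 - lam
        mixed_y.append(y)
    return mixed_x, mixed_y, lam, perm


def soft_contrastive_loss(
    queries: Sequence[Vector],
    keys: Sequence[Vector],
    targets: Sequence[Vector],
    temperature: float = 0.2,
) -> float:
    """Mean cross-entropy between softmax(q_i . k_j / T) over j and soft targets."""
    total = 0.0
    for q, y in zip(queries, targets):
        logits = [sum(a * b for a, b in zip(q, k)) / temperature for k in keys]
        m = max(logits)
        # Numerically stable log-sum-exp.
        log_z = m + math.log(sum(math.exp(z - m) for z in logits))
        total -= sum(t * (z - log_z) for t, z in zip(y, logits))
    return total / len(queries)


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy "features" for a batch of 4 clips; a real pipeline would use
    # spectrogram patches and pretrained MAE encoder outputs instead.
    batch = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(4)]
    mixed_x, mixed_y, lam, perm = mix_batch(batch, alpha=1.0, rng=rng)
    queries = [l2_normalize(x) for x in mixed_x]
    keys = [l2_normalize(x) for x in batch]
    print("lam:", lam, "loss:", soft_contrastive_loss(queries, keys, mixed_y))
```

Using the same coefficient `lam` for inputs and virtual labels is what ties the two spaces together: a mixed clip is asked to be partially similar to both of its source instances, in proportion to how much of each it contains.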

If you find this method and/or code useful, please consider citing:

```bibtex
@inproceedings{tabassum2024uamixmae,
  title     = {uaMix-MAE: Efficient Tuning of Pretrained Audio Transformers with Unsupervised Audio Mixtures},
  author    = {Tabassum, Afrina and Tran, Dung and Dang, Trung and Lourentzou, Ismini and Koishida, Kazuhito},
  booktitle = {IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},
  year      = {2024}
}
```