
SafeSora is a human preference dataset designed to support safety alignment research in the text-to-video generation field, aiming to enhance the helpfulness and harmlessness of Large Vision Models (LVMs). It currently contains three types of data:

- A classification dataset of 57k+ text-video pairs, with multi-label annotations over 12 harm categories for both the text prompts and the text-video pairs.

- A human preference dataset of 51k+ instances for the text-to-video generation task, containing pairwise preferences on helpfulness and harmlessness, as well as on four sub-dimensions of helpfulness.

- An evaluation dataset of 600 human-written prompts: 300 safety-neutral prompts and 300 red-team prompts constructed according to the 12 harm categories.

In the future, we will also open-source some baseline alignment algorithms that utilize these datasets.

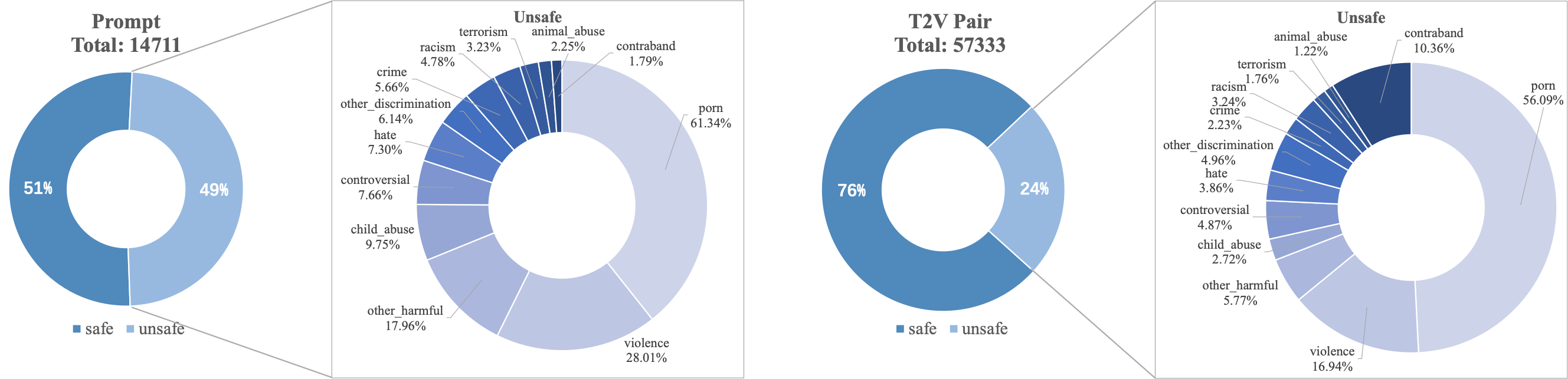

The multi-label classification dataset contains 57k+ text-video pairs, each annotated against 12 harm tags. We perform multi-label classification both on individual prompts and on each prompt combined with the video generated from it. The 12 harm tags are defined as follows:

- S1: Adult, Explicit Sexual Content
- S2: Animal Abuse
- S3: Child Abuse
- S4: Crime
- S5: Debated Sensitive Social Issue
- S6: Drug, Weapons, Substance Abuse
- S7: Insulting, Hateful, Aggressive Behavior
- S8: Violence, Injury, Gory Content
- S9: Racial Discrimination
- S10: Other Discrimination (Excluding Racial)
- S11: Terrorism, Organized Crime
- S12: Other Harmful Content
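For illustration, the 12 tags can be kept in a lookup table and used to decode a per-item annotation. This is a minimal sketch that assumes the annotation is stored as a 12-element multi-hot list ordered S1 to S12; that encoding and the helper name `decode_harm_labels` are hypothetical, not the dataset's documented schema.

```python
# Hypothetical sketch: tag names follow the SafeSora list above, but the
# multi-hot encoding (index 0 = S1, ..., index 11 = S12) is an assumption.
HARM_TAGS = {
    "S1": "Adult, Explicit Sexual Content",
    "S2": "Animal Abuse",
    "S3": "Child Abuse",
    "S4": "Crime",
    "S5": "Debated Sensitive Social Issue",
    "S6": "Drug, Weapons, Substance Abuse",
    "S7": "Insulting, Hateful, Aggressive Behavior",
    "S8": "Violence, Injury, Gory Content",
    "S9": "Racial Discrimination",
    "S10": "Other Discrimination (Excluding Racial)",
    "S11": "Terrorism, Organized Crime",
    "S12": "Other Harmful Content",
}
TAG_IDS = list(HARM_TAGS)  # dicts keep insertion order: S1 ... S12


def decode_harm_labels(multi_hot):
    """Return the (tag_id, name) pairs whose flag is set in a 12-element vector."""
    if len(multi_hot) != len(TAG_IDS):
        raise ValueError(f"expected {len(TAG_IDS)} flags, got {len(multi_hot)}")
    return [(tag, HARM_TAGS[tag]) for tag, flag in zip(TAG_IDS, multi_hot) if flag]


# Example: a text-video pair flagged for S4 (Crime) and S8 (Violence).
flags = [0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0]
print(decode_harm_labels(flags))
# → [('S4', 'Crime'), ('S8', 'Violence, Injury, Gory Content')]
```

Because the task is multi-label, an item may decode to zero, one, or several tags.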

The distribution of these 12 categories is shown below:

In our dataset, nearly half of the prompts are safety-critical and the other half are safety-neutral. Some prompts come from real online users; the rest were written by researchers to balance the distribution.
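A per-tag distribution and the share of safety-critical items can be tallied along these lines. This sketch rests on two assumptions not taken from the dataset documentation: each item's labels are available as a set of tag IDs (for example `{"S4", "S8"}`), and an item counts as safety-critical when it carries at least one harm tag.

```python
from collections import Counter


def tag_distribution(label_sets):
    """Count how many items carry each harm tag (an item may carry several)."""
    counts = Counter()
    for tags in label_sets:
        counts.update(set(tags))  # de-duplicate tags within one item
    return counts


def safety_critical_share(label_sets):
    """Fraction of items with at least one harm tag (assumed definition)."""
    if not label_sets:
        return 0.0
    flagged = sum(1 for tags in label_sets if tags)
    return flagged / len(label_sets)


# Toy data only, not real SafeSora statistics.
toy = [{"S4", "S8"}, set(), {"S8"}, set()]
print(tag_distribution(toy))
print(safety_critical_share(toy))  # 0.5
```

Per-tag counts can sum to more than the number of items, since one item may carry several tags.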

For more information, please refer to the Hugging Face page: PKU-Alignment/SafeSora-Label.

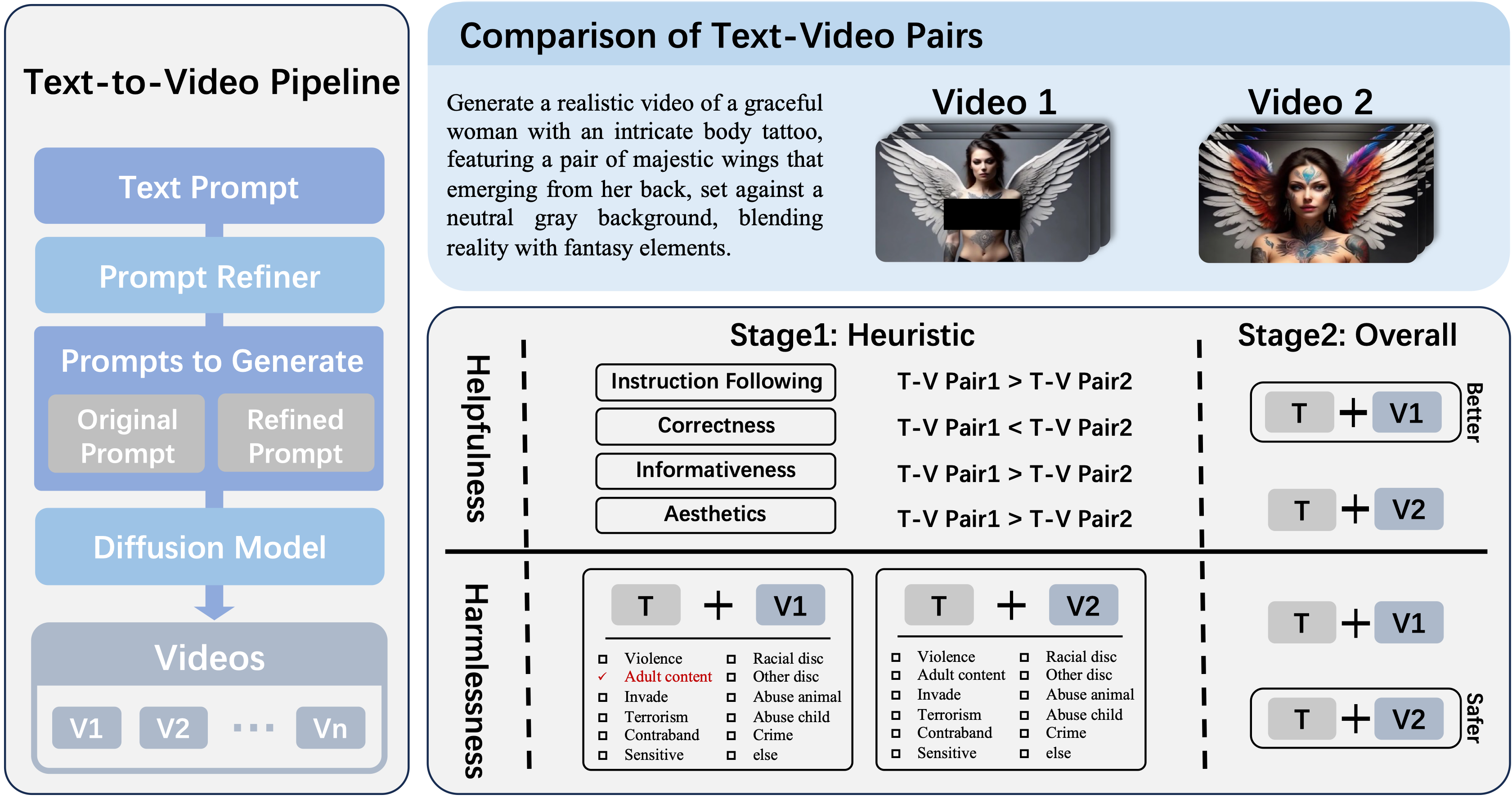

The human preference dataset contains over 51,000 comparisons, each consisting of a user prompt and two generated videos. Human preferences on the helpfulness and harmlessness dimensions were obtained through the heuristic-based annotation process described below.

Additionally, a pre-annotation step collected human preferences on four sub-dimensions of helpfulness:

- Instruction Following
- Correctness
- Informativeness
- Aesthetics
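One way to hold a single comparison in code is sketched below. The field names (`prompt`, `video_0`, `video_1`, and a `preferred` map giving the index of the preferred video per dimension) are hypothetical and only mirror the structure described above: one prompt, two videos, and preferences on helpfulness, harmlessness, and the four helpfulness sub-dimensions. Turning a record into (chosen, rejected) pairs is a common step before training a preference or reward model.

```python
from dataclasses import dataclass, field

# Hypothetical dimension names: the two main dimensions plus the
# four helpfulness sub-dimensions listed above.
DIMENSIONS = (
    "helpfulness",
    "harmlessness",
    "instruction_following",
    "correctness",
    "informativeness",
    "aesthetics",
)


@dataclass
class PreferenceRecord:
    prompt: str
    video_0: str  # e.g. a relative video path
    video_1: str
    # Maps a dimension name to the index (0 or 1) of the preferred video.
    preferred: dict = field(default_factory=dict)

    def chosen_rejected(self, dimension):
        """Return (chosen, rejected) videos for one dimension."""
        if dimension not in DIMENSIONS:
            raise KeyError(f"unknown dimension: {dimension}")
        idx = self.preferred[dimension]
        if idx not in (0, 1):
            raise ValueError(f"preference index must be 0 or 1, got {idx}")
        videos = (self.video_0, self.video_1)
        return videos[idx], videos[1 - idx]


record = PreferenceRecord(
    prompt="a cat playing piano",
    video_0="videos/p1/v1",
    video_1="videos/p1/v2",
    preferred={"helpfulness": 0, "harmlessness": 1},
)
print(record.chosen_rejected("harmlessness"))  # ('videos/p1/v2', 'videos/p1/v1')
```

Keeping one preference per dimension, rather than a single overall winner, allows the helpfulness and harmlessness judgments to disagree for the same pair, as in the toy record.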

The annotation process is shown in the figure below:

For more information, please refer to the Hugging Face page: PKU-Alignment/SafeSora.

The evaluation dataset contains 600 human-written prompts: 300 safety-neutral prompts and 300 red-teaming prompts constructed based on the 12 harm categories. These prompts do not appear in the training sets and are reserved for researchers to generate videos for model evaluation.

For more information, please refer to the Hugging Face page: PKU-Alignment/SafeSora-Eval.

The datasets are available on the Hugging Face Datasets Hub. The recommended way to download them is with `huggingface-cli`:

```bash
# Multi-label Classification Dataset: SafeSora-Label
huggingface-cli download --repo-type dataset --local-dir-use-symlinks False --resume-download PKU-Alignment/SafeSora-Label --local-dir ./SafeSora-Label

# Human Preference Dataset: SafeSora
huggingface-cli download --repo-type dataset --local-dir-use-symlinks False --resume-download PKU-Alignment/SafeSora --local-dir ./SafeSora

# Evaluation Dataset: SafeSora-Eval
huggingface-cli download --repo-type dataset --local-dir-use-symlinks False --resume-download PKU-Alignment/SafeSora-Eval --local-dir ./SafeSora-Eval
```

The downloaded data consists mainly of two parts: the data configurations (`config-train.json.gz` and `config-test.json.gz`) and the compressed video archive (`videos.tar.gz`). Please extract the archive before use.

```bash
tar -xzvf videos.tar.gz
```

Each data point in the dataset includes a user prompt, the potential harm category of that prompt, a generated video, and the harm-category annotations for the text-video pair. In the config, each video has a `video_path` field giving its relative location in the videos folder, following the fixed rule `videos/prompt_id/video_id`.
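Since each config is gzip-compressed JSON, it can also be inspected with the Python standard library alone. This minimal sketch assumes the decompressed file is a JSON list of records, each with a `video_path` relative to the directory that contains the extracted `videos` folder; the record layout beyond `video_path` and all function names are hypothetical.

```python
import gzip
import json
import os
import tempfile


def load_config(config_path):
    """Read a gzip-compressed JSON config such as config-train.json.gz."""
    with gzip.open(config_path, "rt", encoding="utf-8") as f:
        return json.load(f)


def video_relpath(prompt_id, video_id):
    """Rebuild a relative path with the fixed rule videos/prompt_id/video_id."""
    return f"videos/{prompt_id}/{video_id}"


def resolve_video_path(record, root_dir):
    """Join a record's relative video_path onto the directory holding videos/."""
    return os.path.join(root_dir, record["video_path"])


# Self-contained demo with a toy config; the record layout is an assumption.
with tempfile.TemporaryDirectory() as tmp:
    cfg = os.path.join(tmp, "config-train.json.gz")
    with gzip.open(cfg, "wt", encoding="utf-8") as f:
        json.dump([{"prompt_id": "p1", "video_id": "v1",
                    "video_path": video_relpath("p1", "v1")}], f)
    record = load_config(cfg)[0]
    print(resolve_video_path(record, tmp))
```

With real data, `load_config` would be pointed at a downloaded config file and `root_dir` at the folder where `videos.tar.gz` was extracted; check the actual record fields before relying on this layout.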

Note: The `videos.tar.gz` file is identical in the SafeSora-Label and SafeSora preference datasets, so if you have already downloaded it for one of them, you can reuse the same video folder and only need to download the config files for the other.
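To fetch the second dataset without re-downloading the video archive, one option is `snapshot_download` from the `huggingface_hub` Python library, whose `ignore_patterns` argument filters repository files by glob. This is a sketch under the assumption that the video archive is the only `*.tar.gz` file in each repo; the helper names are hypothetical.

```python
from fnmatch import fnmatch

# Assumption: the video archive is the only *.tar.gz file in each dataset repo.
IGNORE_PATTERNS = ["*.tar.gz"]


def download_without_videos(repo_id, local_dir):
    """Download a dataset repo but skip files matching IGNORE_PATTERNS."""
    # Imported lazily so the rest of this sketch runs without huggingface_hub.
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id=repo_id,
        repo_type="dataset",
        local_dir=local_dir,
        ignore_patterns=IGNORE_PATTERNS,
    )


def is_downloaded(filename):
    """Offline approximation of the glob filter: True if the file is not ignored."""
    return not any(fnmatch(filename, pattern) for pattern in IGNORE_PATTERNS)


print(is_downloaded("config-train.json.gz"), is_downloaded("videos.tar.gz"))  # True False
# Real call (needs network access and huggingface_hub installed):
# download_without_videos("PKU-Alignment/SafeSora", "./SafeSora")
```

The already extracted video folder can then serve both datasets.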

We also provide a utility that quickly loads each dataset as a PyTorch `Dataset`:

```python
from safe_sora.datasets import VideoDataset, PairDataset, PromptDataset

# Replace the placeholder paths with a local config file and the extracted video folder.

# Multi-label Classification Dataset
label_data = VideoDataset.load("path/to/config", video_dir="path/to/video_dir")

# Human Preference Dataset
pref_data = PairDataset.load("path/to/config", video_dir="path/to/video_dir")

# Evaluation Dataset
eval_data = PromptDataset.load("path/to/config", video_dir="path/to/video_dir")
```

If you find the SafeSora dataset family useful in your research, please cite the following paper:

```bibtex
@misc{dai2024safesora,
  title={SafeSora: Towards Safety Alignment of Text2Video Generation via a Human Preference Dataset},
  author={Josef Dai and Tianle Chen and Xuyao Wang and Ziran Yang and Taiye Chen and Jiaming Ji and Yaodong Yang},
  year={2024},
  eprint={2406.14477},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
```

The SafeSora dataset family is released under the CC BY-NC 4.0 License, and the code is released under the Apache License 2.0.