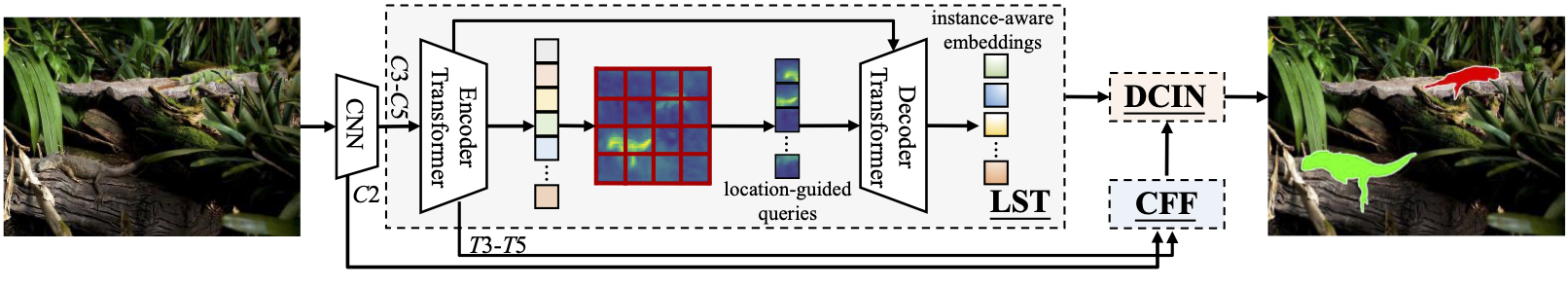

Official Implementation of "OSFormer: One-Stage Camouflaged Instance Segmentation with Transformers"

Jialun Pei*, Tianyang Cheng*, Deng-Ping Fan, He Tang, Chuanbo Chen, and Luc Van Gool

[Paper]; [Chinese Version]; [Official Version]; [Project Page]

Contact: dengpfan@gmail.com, peijl@hust.edu.cn


The code is tested on CUDA 11.1 and PyTorch 1.9.0; change the versions below to match your own environment.
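When changing versions, the detectron2 wheel index URL used during installation has to change along with them. The sketch below shows how the CUDA and PyTorch versions appear to map into that URL. The helper name `detectron2_wheel_index` is hypothetical, and the URL pattern is inferred from the single URL in this README, so treat it as an assumption: prebuilt wheels do not exist for every CUDA/PyTorch combination, and the detectron2 installation docs remain the authority.

```python
def detectron2_wheel_index(cuda_version: str, torch_version: str) -> str:
    """Build a detectron2 wheel index URL from CUDA and PyTorch versions.

    Assumption: the URL follows the cu<major><minor>/torch<major>.<minor>
    pattern seen in this README; not every pair has prebuilt wheels.
    """
    # "11.1" -> "cu111"
    cuda_tag = "cu" + "".join(cuda_version.split(".")[:2])
    # "1.9.0" -> "torch1.9" (the patch version is dropped)
    torch_tag = "torch" + ".".join(torch_version.split(".")[:2])
    return (
        "https://dl.fbaipublicfiles.com/detectron2/wheels/"
        f"{cuda_tag}/{torch_tag}/index.html"
    )


if __name__ == "__main__":
    # The versions this README was tested with.
    print(detectron2_wheel_index("11.1", "1.9.0"))
```

The result can be passed to `python -m pip install detectron2 -f <url>`.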

```shell
git clone https://github.com/PJLallen/OSFormer.git
cd OSFormer
conda create -n osformer python=3.8 -y
conda activate osformer
conda install pytorch==1.9.0 torchvision cudatoolkit=11.1 -c pytorch -c nvidia -y
python -m pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu111/torch1.9/index.html
python setup.py build develop
```

- COD10K: Baidu (password:hust) / Google / Quark; JSON files: Baidu (password:hust) / Google

- NC4K: Baidu (password:hust) / Google; JSON files: Baidu (password:hust) / Google

- Generate COCO-format annotation files; the mmdetection tutorial may help.

- Change the dataset and annotation paths in `adet/data/datasets/cis.py`; refer to the detectron2 documentation for more help.

```python
# adet/data/datasets/cis.py
import os

# change the paths
DATASET_ROOT = 'COD10K-v3'
ANN_ROOT = os.path.join(DATASET_ROOT, 'annotations')
TRAIN_PATH = os.path.join(DATASET_ROOT, 'Train/Image')
TEST_PATH = os.path.join(DATASET_ROOT, 'Test/Image')
TRAIN_JSON = os.path.join(ANN_ROOT, 'train_instance.json')
TEST_JSON = os.path.join(ANN_ROOT, 'test2026.json')

NC4K_ROOT = 'NC4K'
NC4K_PATH = os.path.join(NC4K_ROOT, 'Imgs')
NC4K_JSON = os.path.join(NC4K_ROOT, 'nc4k_test.json')
```

Model weights: Baidu (password:l6vn) / Google / Quark
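Once the dataset paths in `adet/data/datasets/cis.py` are set and the weights are downloaded, a short pre-flight check can catch path typos before a long run. The following is a minimal sketch, not part of the repository: the helper `find_missing_paths` and the `weights/osformer_r50.pth` location are hypothetical, and the dataset paths simply mirror the defaults shown above.

```python
import os

# Defaults mirrored from the cis.py excerpt; adjust to your own layout.
DATASET_ROOT = "COD10K-v3"
ANN_ROOT = os.path.join(DATASET_ROOT, "annotations")
NC4K_ROOT = "NC4K"

EXPECTED_PATHS = {
    "COD10K train images": os.path.join(DATASET_ROOT, "Train/Image"),
    "COD10K test images": os.path.join(DATASET_ROOT, "Test/Image"),
    "COD10K train json": os.path.join(ANN_ROOT, "train_instance.json"),
    "COD10K test json": os.path.join(ANN_ROOT, "test2026.json"),
    "NC4K images": os.path.join(NC4K_ROOT, "Imgs"),
    "NC4K json": os.path.join(NC4K_ROOT, "nc4k_test.json"),
    # Hypothetical location for the downloaded weights (assumption).
    "model weights": os.path.join("weights", "osformer_r50.pth"),
}


def find_missing_paths(paths):
    """Return the labels whose path does not exist on disk."""
    return [label for label, path in paths.items() if not os.path.exists(path)]


if __name__ == "__main__":
    missing = find_missing_paths(EXPECTED_PATHS)
    for label in missing:
        print(f"missing: {label} -> {EXPECTED_PATHS[label]}")
    if not missing:
        print("all dataset paths and weights found")
```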

| Model | Config | COD10K-test AP | NC4K-test AP |
|---|---|---|---|
| R50-550 | configs/CIS_RT.yaml | 36.0 | 41.4 |
| R50 | configs/CIS_R50.yaml | 41.0 | 42.5 |
| R101 | configs/CIS_R101.yaml | 42.0 | 44.4 |
| PVTv2-B2-Li | configs/CIS_PVTv2B2Li | 47.2 | 50.5 |
| SWIN-T | configs/CIS_SWINT.yaml | 47.7 | 50.2 |

The visual results are achieved by our OSFormer with ResNet-50 trained on the COD10K training set.

- Results on the COD10K test set: Baidu (password:hust) / Google

- Results on the NC4K test set: Baidu (password:hust) / Google

```shell
python tools/train_net.py --config-file configs/CIS_R50.yaml --num-gpus 1 \
  OUTPUT_DIR {PATH_TO_OUTPUT_DIR}
```

Replace `{PATH_TO_OUTPUT_DIR}` with your own output directory.

```shell
python tools/train_net.py --config-file configs/CIS_R50.yaml --eval-only \
  MODEL.WEIGHTS {PATH_TO_PRE_TRAINED_WEIGHTS}
```

Replace `{PATH_TO_PRE_TRAINED_WEIGHTS}` with the path to the pre-trained weights.

```shell
python demo/demo.py --config-file configs/CIS_R50.yaml \
  --input {PATH_TO_THE_IMG_DIR_OR_FILE} \
  --output {PATH_TO_SAVE_DIR_OR_IMAGE_FILE} \
  --opts MODEL.WEIGHTS {PATH_TO_PRE_TRAINED_WEIGHTS}
```

- `{PATH_TO_THE_IMG_DIR_OR_FILE}`: an image directory or one or more image paths

- `{PATH_TO_SAVE_DIR_OR_IMAGE_FILE}`: where the visualizations will be saved

- `{PATH_TO_PRE_TRAINED_WEIGHTS}`: the path to the pre-trained weights
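For scripted experiments, the training, evaluation, and demo commands in this README can be assembled as argument lists in Python. This is only a sketch: the three helper functions and the example paths (`output/cis_r50`, `weights/osformer_r50.pth`, `demo_images`, `demo_out`) are hypothetical, while the script names and flags are copied from the commands shown here.

```python
import shlex


def train_command(config_file, output_dir, num_gpus=1):
    """Argument list for training with tools/train_net.py."""
    return [
        "python", "tools/train_net.py",
        "--config-file", config_file,
        "--num-gpus", str(num_gpus),
        "OUTPUT_DIR", output_dir,
    ]


def eval_command(config_file, weights):
    """Argument list for evaluation only with pre-trained weights."""
    return [
        "python", "tools/train_net.py",
        "--config-file", config_file,
        "--eval-only",
        "MODEL.WEIGHTS", weights,
    ]


def demo_command(config_file, input_path, output_path, weights):
    """Argument list for visualizing predictions with demo/demo.py."""
    return [
        "python", "demo/demo.py",
        "--config-file", config_file,
        "--input", input_path,
        "--output", output_path,
        "--opts", "MODEL.WEIGHTS", weights,
    ]


if __name__ == "__main__":
    config = "configs/CIS_R50.yaml"
    weights = "weights/osformer_r50.pth"  # hypothetical path
    for cmd in (
        train_command(config, "output/cis_r50"),
        eval_command(config, weights),
        demo_command(config, "demo_images", "demo_out", weights),
    ):
        # shlex.join renders the list as a copy-pasteable shell line.
        print(shlex.join(cmd))
```

Each list can be passed to `subprocess.run(cmd, check=True)` from the repository root to launch the corresponding step.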

This work is based on:

We also received help from mmdetection. Thanks to them for their great work!

If this helps you, please cite this work:

```bibtex
@inproceedings{pei2022osformer,
  title={OSFormer: One-Stage Camouflaged Instance Segmentation with Transformers},
  author={Pei, Jialun and Cheng, Tianyang and Fan, Deng-Ping and Tang, He and Chen, Chuanbo and Van Gool, Luc},
  booktitle={European conference on computer vision},
  year={2022},
  organization={Springer}
}
```