Kubernetes and containers are developer technologies, so almost all of the tooling assumes either Linux or macOS as the operating system. That includes things like exporting environment variables and other shell commands. This guide follows those conventions, and we recommend using a Linux VM (or similar) to ease troubleshooting. If you are running Windows, you will need to adjust the commands to their Windows equivalents.

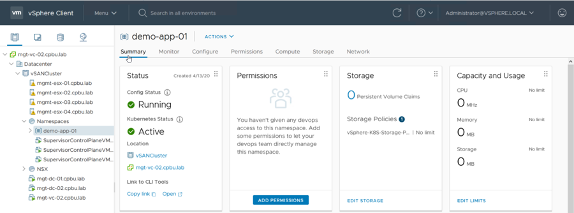

To interact with vSphere with Tanzu, you will need to download kubectl and the associated vSphere CLI plugin for kubectl. Both are available at the Namespace level in vCenter.

Navigate to the Namespace and look in the Status column for "Link to CLI Tools". Click Open, then follow the instructions on the resulting web page to install the kubectl CLI and the associated plugin.
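Once the tools are installed, a quick sanity check can confirm that both binaries are on your PATH. This is a minimal sketch with a hypothetical helper name, require_tools. It assumes the plugin binary is named kubectl-vsphere, which follows kubectl's convention of discovering plugins as executables whose names start with kubectl-.

```shell
# require_tools CMD...  (hypothetical helper, not part of kubectl)
# Prints each command that is not found on PATH and returns 1 if any are missing.
require_tools() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage (assumes the vSphere plugin binary is named kubectl-vsphere):
#   require_tools kubectl kubectl-vsphere && echo "CLI tools found"
```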

To interact with our new vSphere with Tanzu Supervisor Cluster and begin provisioning resources, we need to log in first. Below we export the environment variables SC_IP (Supervisor Cluster IP) and NAMESPACE (your vSphere with Tanzu namespace name) with the values specific to our environment; replace them with your own. You will be prompted for your password after running the login command. Make sure the user has been given RBAC permissions on the vSphere with Tanzu namespace (Namespaces -> Namespace -> Permissions -> Add), otherwise you will not be allowed to log in.

export SC_IP=10.198.52.128

export NAMESPACE=myles

kubectl vsphere login --server=https://$SC_IP --vsphere-username administrator@vsphere.local --insecure-skip-tls-verify

After successfully logging in, we now need to tell the Kubernetes command line that it should use the newly downloaded context (you can think of a context as a keychain to access a specific K8s cluster). Setting the context tells kubectl that any commands we issue through it from that point on will be sent to that specific K8s cluster.

As you can see below, vSphere with Tanzu contexts are named after the Namespace created in vCenter, so a direct copy and paste will work as long as you exported the environment variables in the last step.

kubectl config use-context $NAMESPACE

If the above command doesn't connect you to the correct context, that may be because you already have a context in your ~/.kube/config file with the same name as the Namespace.

In that case, vSphere with Tanzu will have created a context named after the Namespace-Supervisor Cluster IP pattern (for example, myles-10.198.52.128 with the values above), so the command below should connect you to the correct context:
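Rather than trying both context names by hand, you could pick the name from the list kubectl already knows about. This is a minimal sketch: pick_context is a hypothetical helper, and its preference for the Namespace-IP form is an assumption based on the naming behaviour described above (that name only exists when vSphere with Tanzu had to avoid a clash).

```shell
# pick_context NAMESPACE SC_IP  (hypothetical helper)
# Reads context names (one per line) on stdin and prints the one to use:
# NAMESPACE-SC_IP if present, otherwise NAMESPACE. Returns 1 if neither exists.
pick_context() {
  local ns="$1" ip="$2" names
  names=$(cat)
  if printf '%s\n' "$names" | grep -Fxq -- "${ns}-${ip}"; then
    echo "${ns}-${ip}"
  elif printf '%s\n' "$names" | grep -Fxq -- "$ns"; then
    echo "$ns"
  else
    echo "no context named ${ns}-${ip} or ${ns}" >&2
    return 1
  fi
}

# Usage (assumes NAMESPACE and SC_IP were exported earlier):
#   kubectl config use-context "$(kubectl config get-contexts -o name | pick_context "$NAMESPACE" "$SC_IP")"
```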

kubectl config use-context $NAMESPACE-$SC_IP

Next up, we need a Kubernetes cluster to deploy some workloads to. Deploying vSphere with Tanzu gives you a control plane that acts like Kubernetes, allowing you to request and create resources in a K8s-like fashion. For example, you ask the Supervisor Cluster for a Kubernetes cluster and it builds one for you using the TKG Service.

So let's deploy a sample Tanzu Kubernetes Cluster with one control plane node and one worker node.

kubectl apply -f https://raw.githubusercontent.com/vsphere-tmm/vsphere-with-tanzu-quick-start/master/manifests/tkc.yaml

You can look at the tkc.yaml manifest file to see exactly what we're requesting from the Supervisor Cluster if you want.

This will take some time: the Supervisor Cluster needs to build out some VMs, spin them up, install the requested packages and set up Kubernetes. Either go grab a coffee, or watch the deployment in one of two ways.

Note: The deployment should take around 30 minutes.
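If you would rather have a script block until the cluster is ready than watch it by hand, a generic polling helper does the job. This is a sketch, not part of kubectl: wait_until is a hypothetical name, and the commented usage assumes your TanzuKubernetesCluster reports a status.phase field that reads running once it is ready, so confirm that with kubectl get tkc on your version first.

```shell
# wait_until EXPECTED TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
# Re-runs COMMAND until its stdout (trailing newlines stripped) equals EXPECTED.
# Returns 0 on a match, 1 once roughly TIMEOUT_SECONDS of sleeping has elapsed.
wait_until() {
  local expected="$1" timeout="$2" interval="$3" waited=0 output=""
  shift 3
  while [ "$waited" -lt "$timeout" ]; do
    # A failing command (e.g. the resource does not exist yet) counts as "not ready".
    output=$("$@" 2>/dev/null) || output=""
    if [ "$output" = "$expected" ]; then
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "timed out after ${timeout}s waiting for '${expected}' (last output: '${output}')" >&2
  return 1
}

# Usage (assumes status.phase becomes "running"; 1800s matches the ~30 minute estimate):
#   wait_until running 1800 30 kubectl get tkc tkc-1 -o jsonpath='{.status.phase}'
```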

Navigate to your Namespace in the vCenter UI and you should see an object named tkc-1 appear under it; this is your TKG Service K8s cluster. You can watch its two VMs spin up there.

The primary way to watch your deployment via kubectl is to describe the Tanzu Kubernetes Cluster deployment with the following command:

kubectl describe tkc

This will give all kinds of status information about the components that make up the TKG cluster.

Another way to monitor or troubleshoot the deployment is using the watch flag (-w) against all VMs created by vSphere with Tanzu on the underlying vSphere infrastructure:

$ kubectl get virtualmachine -w
NAME                                   AGE
tkc-1-control-plane-p8tbw              30d
tkc-1-workers-vn8gz-66bb8c554d-hnhgm   30d

You can gather more info on a given VM instance by changing the get to a describe and adding the name of one of the instances from the above output:

kubectl describe virtualmachine tkc-1-control-plane-p8tbw

Once your TKG Service K8s cluster is deployed, we need to tell kubectl to use it as the target instead of the Supervisor Cluster.

This allows us to deploy workloads to the TKG cluster we just created.

If you have been following these step-by-step instructions up to this point, you can copy and paste this command directly and it will log you in to the TKG cluster:

kubectl vsphere login --server=$SC_IP --tanzu-kubernetes-cluster-name tkc-1 --tanzu-kubernetes-cluster-namespace $NAMESPACE --vsphere-username administrator@vsphere.local --insecure-skip-tls-verify

As before, this will download the available contexts to your local machine, and you then need to tell kubectl to target the TKG cluster context to allow you to deploy to it.

kubectl config use-context tkc-1

We're now up and running with a new TKG K8s cluster and can have some fun deploying workloads to it!
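Before making cluster-wide changes, it can be worth confirming that kubectl really is pointed at tkc-1 and not still at the Supervisor Cluster. A minimal sketch using kubectl config current-context; require_context is a hypothetical helper name.

```shell
# require_context EXPECTED  (hypothetical helper)
# Returns 0 if kubectl's current context is EXPECTED; otherwise warns and returns 1.
require_context() {
  local current
  current=$(kubectl config current-context 2>/dev/null) || current=""
  if [ "$current" != "$1" ]; then
    echo "current context is '${current}', expected '$1'" >&2
    return 1
  fi
}

# Usage: only run the next command if the TKG cluster context is active.
#   require_context tkc-1 && kubectl get nodes
```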

This shouldn't be done in production, but for a quick start, the following command binds all authenticated users to the privileged pod security policy, allowing them to run any type of container:

kubectl create clusterrolebinding default-tkg-admin-privileged-binding --clusterrole=psp:vmware-system-privileged --group=system:authenticated

The simplest possible proof of concept is to run a busybox container on the K8s cluster and get a shell in it. This uses no fancy K8s features; it just shows you that the cluster can run generic containers.

The following command runs the busybox container on your TKG cluster (because that's the context we told kubectl to use) and gives you a shell inside the container. Once you exit the shell, the container stops.

kubectl run -i --tty busybox --image=quay.io/quay/busybox --restart=Never -- sh

Once you have a shell, you can run generic Linux commands. I've listed a few, but feel free to poke around.

ping google.com

traceroute -n google.com

exit

The container shuts down after you exit because sh is its main process, and --restart=Never tells Kubernetes not to restart the pod once that process ends. The pod object itself remains, though, so list it and then delete it:

kubectl get pods

kubectl delete pod busybox

We are now going to deploy a pod using a Kubernetes manifest. Manifests are written in YAML; you can find the ones we are using in the manifests folder.

Let's deploy an application that needs storage. To make provisioning easier, we will update the StorageClass provided with the cluster to be the default.

When you set up vSphere with Tanzu, you added storage to your install; this storage will have been made available to your TKG Service cluster automatically.

You can get its name by running:

$ kubectl get storageclass
NAME                          PROVISIONER              RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
vsan-default-storage-policy   csi.vsphere.vmware.com   Delete          Immediate           true                   8d

If you used the tkc.yaml file in the /manifests folder here, the default StorageClass is already set for you (by the last three lines of that file).
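To check which StorageClass is currently the default, note that kubectl get storageclass appends (default) to the name of the default class in its NAME column. A minimal sketch, with the hypothetical helper default_storageclass, that pulls that name out of the table output (it depends on the default table layout, so treat it as a convenience rather than a robust check):

```shell
# default_storageclass  (hypothetical helper)
# Reads `kubectl get storageclass` output on stdin and prints the name of the
# class marked "(default)"; prints nothing if no class is marked.
default_storageclass() {
  awk 'NR > 1 && $2 == "(default)" { print $1 }'
}

# Usage:
#   kubectl get storageclass | default_storageclass
```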

Next up, let's create a PersistentVolume (PV) so we can store some data. To do this, you create a PersistentVolumeClaim (PVC), which is essentially asking Kubernetes "please give me X amount of storage".

I have created a manifest called pvc.yaml that requests 3Gi of storage from Kubernetes (and therefore from the underlying storage system, in this case vSphere).
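For reference, a PVC manifest along these lines could be generated as shown below. This is a sketch, not the repository's pvc.yaml, which may differ: pvc_manifest is a hypothetical helper, and the name, size, access mode and StorageClass are taken from the kubectl get pv,pvc output shown later in this guide.

```shell
# pvc_manifest NAME SIZE STORAGECLASS  (hypothetical helper)
# Prints a PersistentVolumeClaim manifest that can be piped to `kubectl apply -f -`.
pvc_manifest() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: $3
  resources:
    requests:
      storage: $2
EOF
}

# Usage:
#   pvc_manifest vsphere-with-tanzu-pv 3Gi vsan-default-storage-policy | kubectl apply -f -
```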

Let's deploy that manifest and see the volume get created:

kubectl apply -f https://raw.githubusercontent.com/vsphere-tmm/vsphere-with-tanzu-quick-start/master/manifests/pvc.yaml

Let's check the state of the PersistentVolumeClaim (PVC) and the PersistentVolume (PV) it requested:

$ kubectl get pv,pvc
NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                           STORAGECLASS                  REASON   AGE
persistentvolume/pvc-7b2738d9-4474-41c1-9177-392654c274e8   3Gi        RWO            Delete           Bound    default/vsphere-with-tanzu-pv   vsan-default-storage-policy            54s

NAME                                          STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS                  AGE
persistentvolumeclaim/vsphere-with-tanzu-pv   Bound    pvc-7b2738d9-4474-41c1-9177-392654c274e8   3Gi        RWO            vsan-default-storage-policy   64s

Note: You can continually watch a resource by appending -w to the command, e.g.:

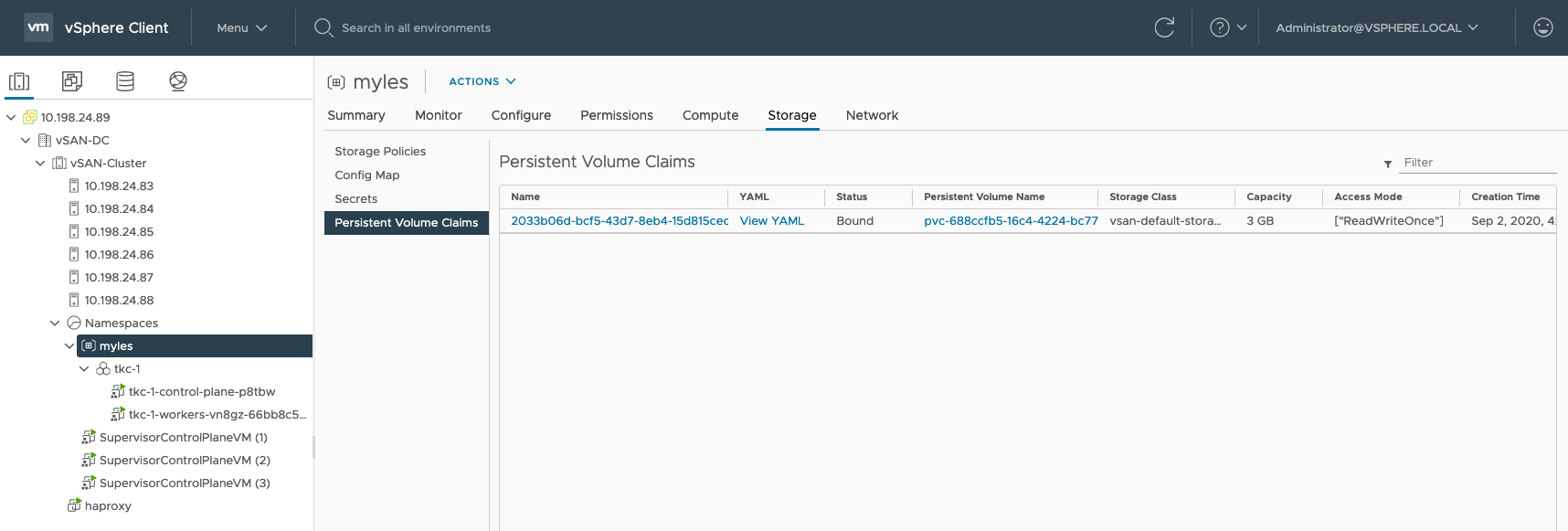

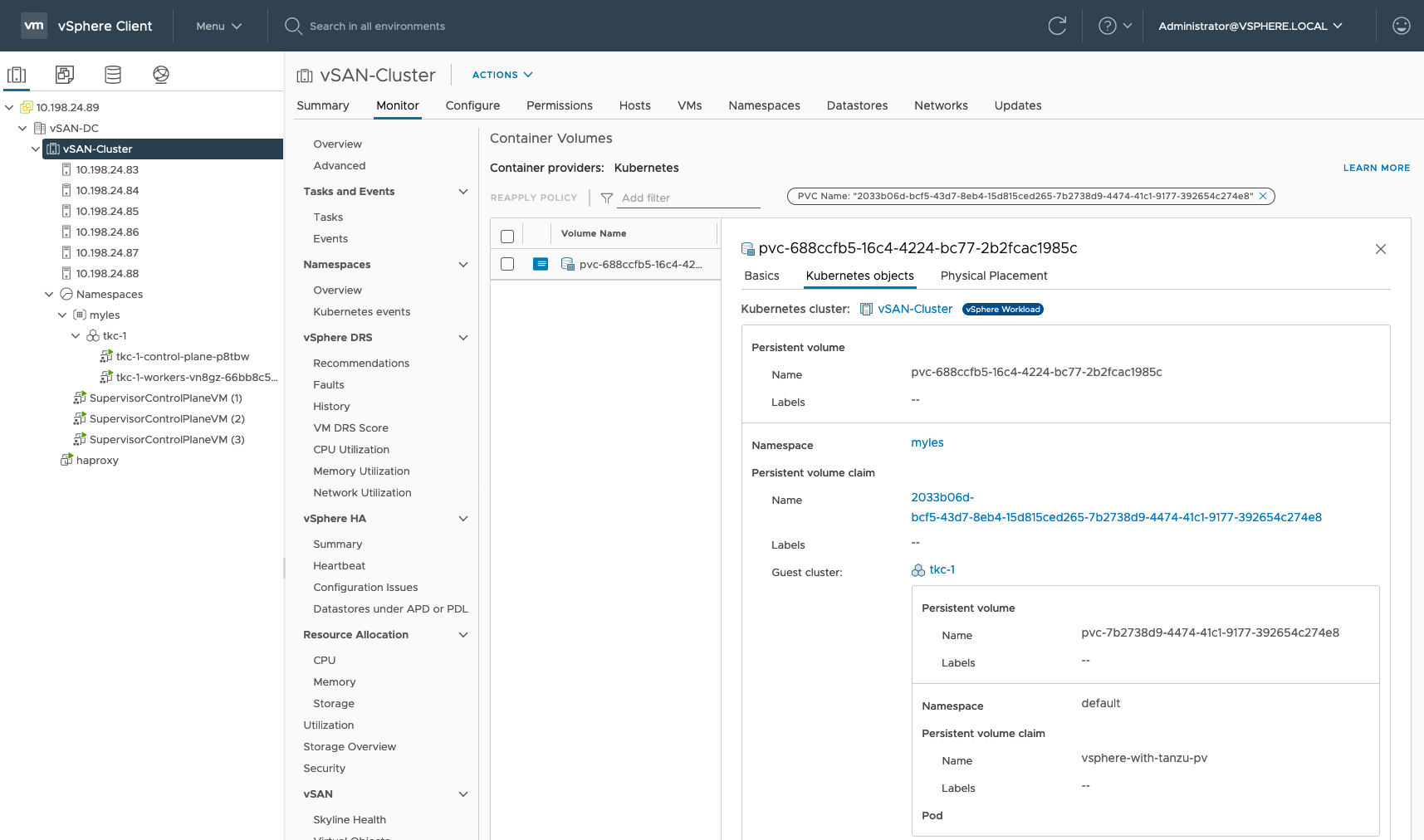

kubectl get pv -w

As soon as the PVC is created, the integration also exposes all of the information about the Kubernetes volume in vCenter.

Log in to vCenter and navigate to your Namespace -> Storage -> PersistentVolumeClaims, and you should see the PVC listed in the UI.

If you click on the name, you will be taken to the new Cloud Native Storage UI, which gives you even more detail on the volume (more on that in this YouTube video).

Pods are Kubernetes-speak for a container or a group of containers (you can have more than one container in a Pod in some cases).

So let's create a Pod that uses the PersistentVolume we just created.

Just like last time, I created a Pod manifest, this time one that consumes the PVC we just deployed. The link is a simple name mapping (note that vsphere-with-tanzu-pv is used in both files), as can be seen in the snippets below:

pod.yaml

...
spec:
  volumes:
  - name: my-pod-storage
    persistentVolumeClaim:
      claimName: vsphere-with-tanzu-pv
...

pvc.yaml

...
metadata:
  name: vsphere-with-tanzu-pv
...

kubectl apply -f https://raw.githubusercontent.com/vsphere-tmm/vsphere-with-tanzu-quick-start/master/manifests/pod.yaml

The above creates the mapping between the Pod and the PVC, and we now have an up-and-running NginX container with storage attached.
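Putting the two fragments together, a complete Pod manifest consistent with them might look like the sketch below. This is an assumption, not the repository's pod.yaml: pod_manifest is a hypothetical helper, the container name and nginx image are illustrative, the app: nginx label mirrors the Service selector described later, and the mount path is the directory NginX serves content from, where this guide later writes its web page.

```shell
# pod_manifest POD_NAME CLAIM_NAME  (hypothetical helper)
# Prints a Pod manifest that mounts the PVC CLAIM_NAME at NginX's web root.
pod_manifest() {
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: $1
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx
    ports:
    - containerPort: 80
    volumeMounts:
    - name: my-pod-storage
      mountPath: /usr/share/nginx/html
  volumes:
  - name: my-pod-storage
    persistentVolumeClaim:
      claimName: $2
EOF
}

# Usage:
#   pod_manifest vsphere-with-tanzu-pod vsphere-with-tanzu-pv | kubectl apply -f -
```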

$ kubectl get po

NAME READY STATUS RESTARTS AGE

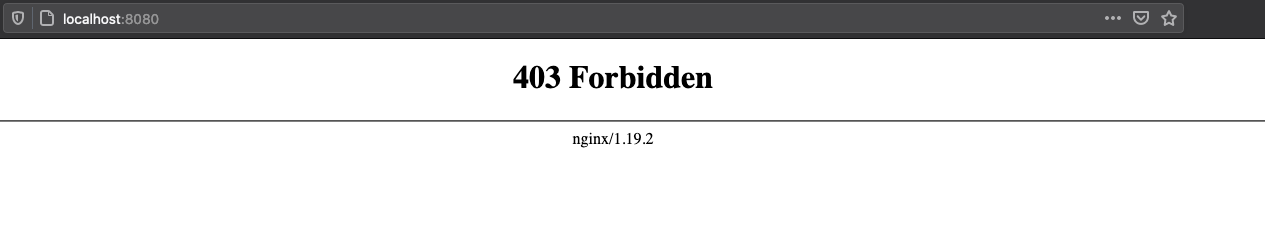

vsphere-with-tanzu-pod 1/1 Running 0 2m52sIf we access the pod now (below), we should get an Error: 403 as we haven't added any content to the NginX web server yet.

The command below creates a tunnel from port 8080 on your local machine to port 80 on the pod inside the Kubernetes cluster, so when you access localhost:8080 the request is tunnelled directly to the pod on port 80.

kubectl port-forward vsphere-with-tanzu-pod 8080:80

Open http://localhost:8080 in your web browser and you should see the below:

So let's fix that by adding a web page. I pre-created a (very) simple web page that you can easily copy into the container. First stop the port-forward with Ctrl+C (or open a second terminal), then get into the container and pull the web page into its PersistentVolume:

kubectl exec -it vsphere-with-tanzu-pod -- bash

As with the busybox container in the earlier example, you now have a shell, this time in the NginX container. Let's change to the directory NginX serves content from and pull the web page in:

cd /usr/share/nginx/html

curl https://raw.githubusercontent.com/vsphere-tmm/vsphere-with-tanzu-quick-start/master/site/index.html -o index.html

exit

Now we can hit our site again by opening another port-forward connection to it:

kubectl port-forward vsphere-with-tanzu-pod 8080:80

Open http://localhost:8080 in your web browser and you should see the message:

Rather than connecting over the tunnel, as we have been up to now, you can use a Kubernetes Service. A Service makes the Pod accessible either to other Pods in the cluster (service type ClusterIP) or to the outside world (service types NodePort and LoadBalancer).

For ease of demonstration (and, frankly, because it is what most people use in production), we will use a Service of type LoadBalancer. In vSphere with Tanzu, this automatically allocates the Service an IP from the Virtual IP range that was set when creating your vSphere with Tanzu deployment, and HAProxy automatically routes traffic from that IP address to the K8s cluster.

Our Service manifest, svc.yaml, exposes port 80 on whatever load-balanced IP vSphere with Tanzu assigns to it and forwards that traffic to port 80 on the container(s). Kubernetes uses the selector app: nginx to figure out which backend Pods it should load balance across. The same label appears in the metadata section of pod.yaml, and this is what creates the mapping from the Service to the Pod.
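A Service matching that description could be generated as below. This is a sketch under stated assumptions, not the repository's svc.yaml: svc_manifest is a hypothetical helper, the name nginx-service comes from the kubectl get services output that follows, and the ports and selector come from the paragraph above.

```shell
# svc_manifest NAME  (hypothetical helper)
# Prints a LoadBalancer Service that sends port 80 to port 80 on Pods labelled app: nginx.
svc_manifest() {
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: $1
spec:
  type: LoadBalancer
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
EOF
}

# Usage:
#   svc_manifest nginx-service | kubectl apply -f -
```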

kubectl apply -f https://raw.githubusercontent.com/vsphere-tmm/vsphere-with-tanzu-quick-start/master/manifests/svc.yaml

The Service will spin up on the cluster, claim an IP and make it accessible to you. You can find out what IP it has been assigned by issuing the following command:

$ kubectl get services
NAME            TYPE           CLUSTER-IP      EXTERNAL-IP    PORT(S)     AGE
nginx-service   LoadBalancer   10.103.49.246   192.168.1.51   80:80/TCP   1h

Navigate in your browser to whatever address is listed in the EXTERNAL-IP column and you will receive the same web page as before, but this time over your corporate network, fully fronted and load balanced by vSphere with Tanzu!
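If you want the address in a script rather than reading it off the table, a minimal sketch is to take the fourth column for your Service. external_ip is a hypothetical helper that depends on the default table layout; while the load balancer is still being provisioned, that column typically shows pending instead of an IP. The jsonpath query in the comment reads the same value straight from the Service status and is less sensitive to formatting.

```shell
# external_ip SERVICE_NAME  (hypothetical helper)
# Reads `kubectl get services` output on stdin and prints the EXTERNAL-IP
# column (4th field) for SERVICE_NAME.
external_ip() {
  awk -v svc="$1" 'NR > 1 && $1 == svc { print $4 }'
}

# Usage:
#   kubectl get services | external_ip nginx-service
# Format-independent alternative:
#   kubectl get service nginx-service -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
```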