A fast and modular coordinate descent solver for sparse generalized linear models with convex and non-convex penalties.

The algorithm behind sparseglm is explained here.

This work has been accepted at NeurIPS 2022.

It provides theoretical guarantees of convergence and extensively demonstrates the superiority

of this solver over existing ones. The original package written in pure Python can be found

here: skglm.

sparseglm leverages Anderson acceleration

and working sets to propose a fast and

memory-efficient solver on a wide variety of algorithms. It can solve problems

with millions of samples and features in seconds. It supports dense and

sparse matrices via CSC arrays.

The philosophy of sparseglm consists in offering a highly flexible API.

Any sparse GLM can be implemented in under 30 lines of code by providing its datafit

term and its penalty term, which makes it very easy to support new estimators.

// Load some data and wrap them in a Dataset

let dataset = DatasetBase::from((x, y));

// Define a datafit (here a quadratic datafit for regression)

let mut datafit = Quadratic::new();

// Define a penalty (here a L1 penalty for Lasso)

let penalty = L1::new(0.7);

// Instantiate a Solver with default parameters

let solver = Solver::new();

// Solve the problem using coordinate descent

let coefficients = solver.solve(&dataset, &mut datafit, &penalty).unwrap();For the most well-known models like Lasso or ElasticNet, sparseglm already have off-the-shelf

implementations.

// Load some data and wrap them in a Dataset

let dataset = DatasetBase::from((x, y));

// Instantiate and fit the estimator

let estimator = Lasso::params()

.alpha(2.)

.fit(&dataset)

.unwrap();

// Get the fitted coefficients

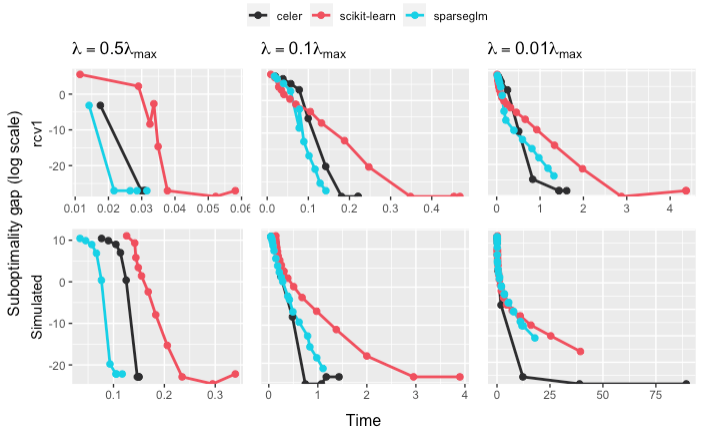

let coefficients = estimator.coefficients();We provide below a demonstration of sparseglm against other fast coordinate

descent solvers using the optimization benchmarking tool Benchopt.

The benchmark below solves a Lasso optimization problem. We select three solvers:

scikit-learn, celer

and sparseglm. The solvers are tested at different level of regularization from high sparsity to low

sparsity.

The simulations were made on two different datasets: one sparse and one dense. rcv1 is a dataset made of more than 804,414 samples and 47,236 features. The data comes in the form of a sparse matrix. For the dense dataset, we simulated a dense design matrix of 1,000 samples and 10,000 features.

The simulations were made on two different datasets: one sparse and one dense. Both datasets contain 100 samples, 3,000 samples and 80 tasks.

Currently we support:

| Model | Single task | Multi task | Convexity |

|---|---|---|---|

| Lasso | ✔️ | ✔️ | Convex |

| MCP | ✔️ | ✔️ | Non-convex |

| Elastic-Net | ✔️ | ✔️ | Convex |

| L0.5 | ✔️ | ✔️ | Non-convex |

| L2/3 | - | - | Non-convex |

| SCAD | - | - | Non-convex |

| Indicator box | - | - | Convex |

| Sparse logistic regression | ✔️ | - | Convex |

| Dual SVM with hinge loss | - | - | Convex |

This repo includes Python bindings to run the existing estimators (in the Estimators crate)

in a Python environment. To install it, run at the root of the repo:

# Install requirements

pip install -r requirements.txt

# Compile and build Python wheel

cd python

python ./setup.py install