Area2Area Forecasting: Looser Constraints, Better Predictions

This is the original PyTorch implementation of the Area2Area forecasting formula proposed in the following paper: Area2Area Forecasting: Looser Constraints, Better Predictions (manuscript submitted to the journal Information Sciences).

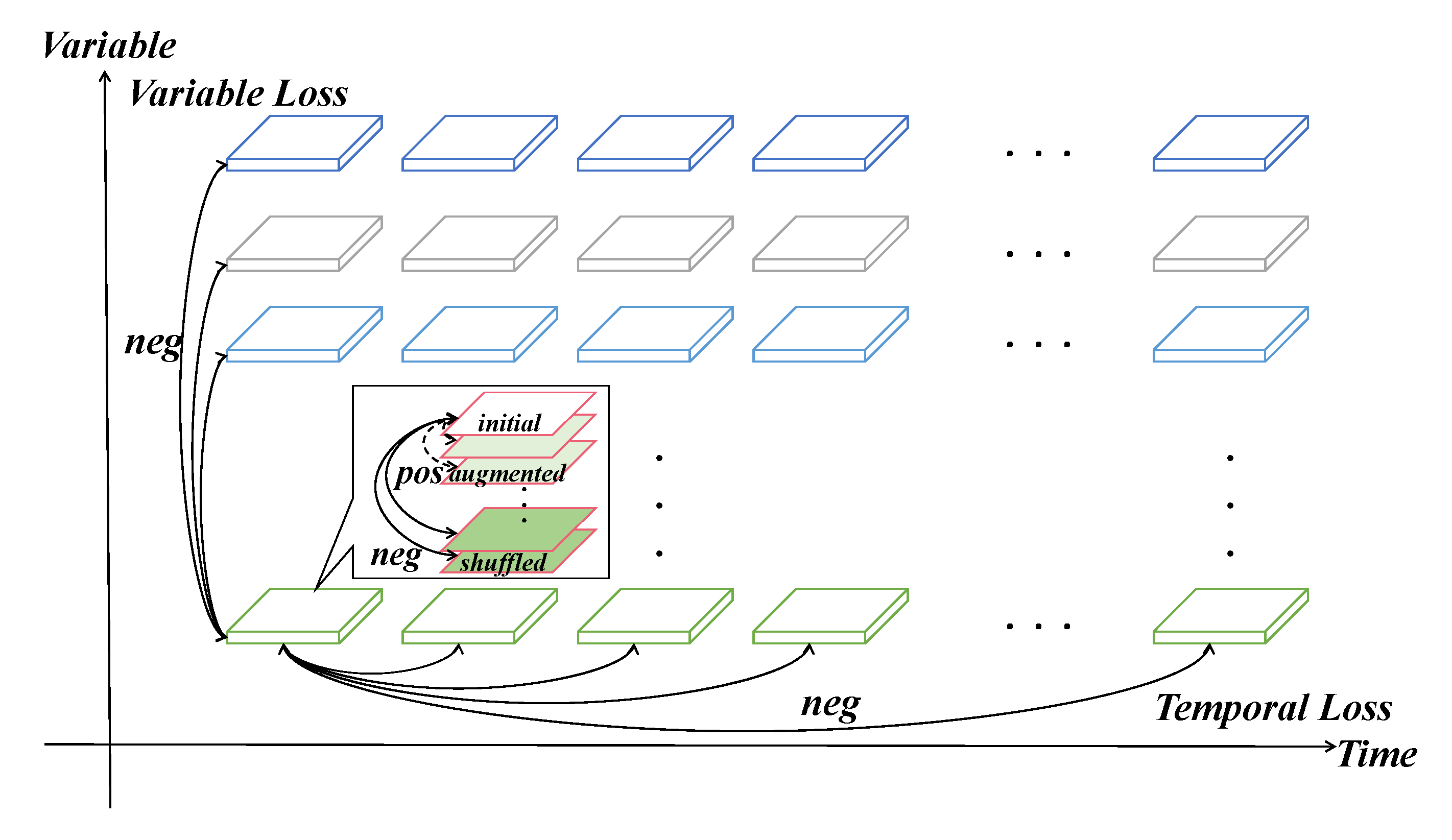

Causal Sequence-wise Contrastive Learning

(1) Positive sampling: We treat input sequences with and without data augmentation that span the same timestamps as positive examples of each other. All other input sequences are treated as negative examples of the currently selected input sequence, even if they differ from it by only one timestamp. (2) Negative sampling: We introduce an extra negative data augmentation for each input sequence: we randomly shuffle all elements of the input sequence and treat the shuffled sequences as negative examples of the currently selected input sequence.

Figure 1. An overview of the final contrastive loss. Each block denotes the representation of an input sequence under a timespan of a variable. Blocks with different colors refer to representations of different variables. Only input sequences with data augmentation (parallelograms with light color) which span the same timestamps as the initial sequences are treated as their positive examples (dotted lines); other sequences are treated as their negative examples (solid lines).
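
The two sampling rules can be illustrated with a small NumPy sketch. It is not the repository's code: the helper names `jitter_augment`, `shuffle_negative` and `build_samples`, the uniform-noise form of the augmentation and the default values are all assumptions made for illustration.

```python
import numpy as np


def jitter_augment(seq, amplitude, rng):
    """Positive view: add small bounded noise, keeping the timestamp order."""
    noise = rng.uniform(-amplitude, amplitude, size=seq.shape)
    return seq + noise


def shuffle_negative(seq, rng):
    """Negative view: randomly permute the elements along the time axis."""
    return rng.permutation(seq)


def build_samples(series, start, length, aug_num=2, order_num=2,
                  amplitude=0.1, seed=0):
    """Return (positives, negatives) for the window starting at `start`."""
    rng = np.random.default_rng(seed)
    anchor = series[start:start + length]
    # Positives: the anchor itself plus augmented copies over the same timestamps.
    positives = [anchor] + [
        jitter_augment(anchor, amplitude, rng) for _ in range(aug_num - 1)
    ]
    # Negatives (1): a window that differs from the anchor by one timestamp.
    shifted = series[start + 1:start + 1 + length]
    # Negatives (2): shuffled copies of the anchor.
    shuffled = [shuffle_negative(anchor, rng) for _ in range(order_num)]
    return positives, [shifted] + shuffled


series = np.sin(np.linspace(0.0, 6.0, 50))
positives, negatives = build_samples(series, start=10, length=8)
```

Here `aug_num` counts the initial sequence as well, matching the parameter description in this README, while `order_num` is the number of shuffled copies.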

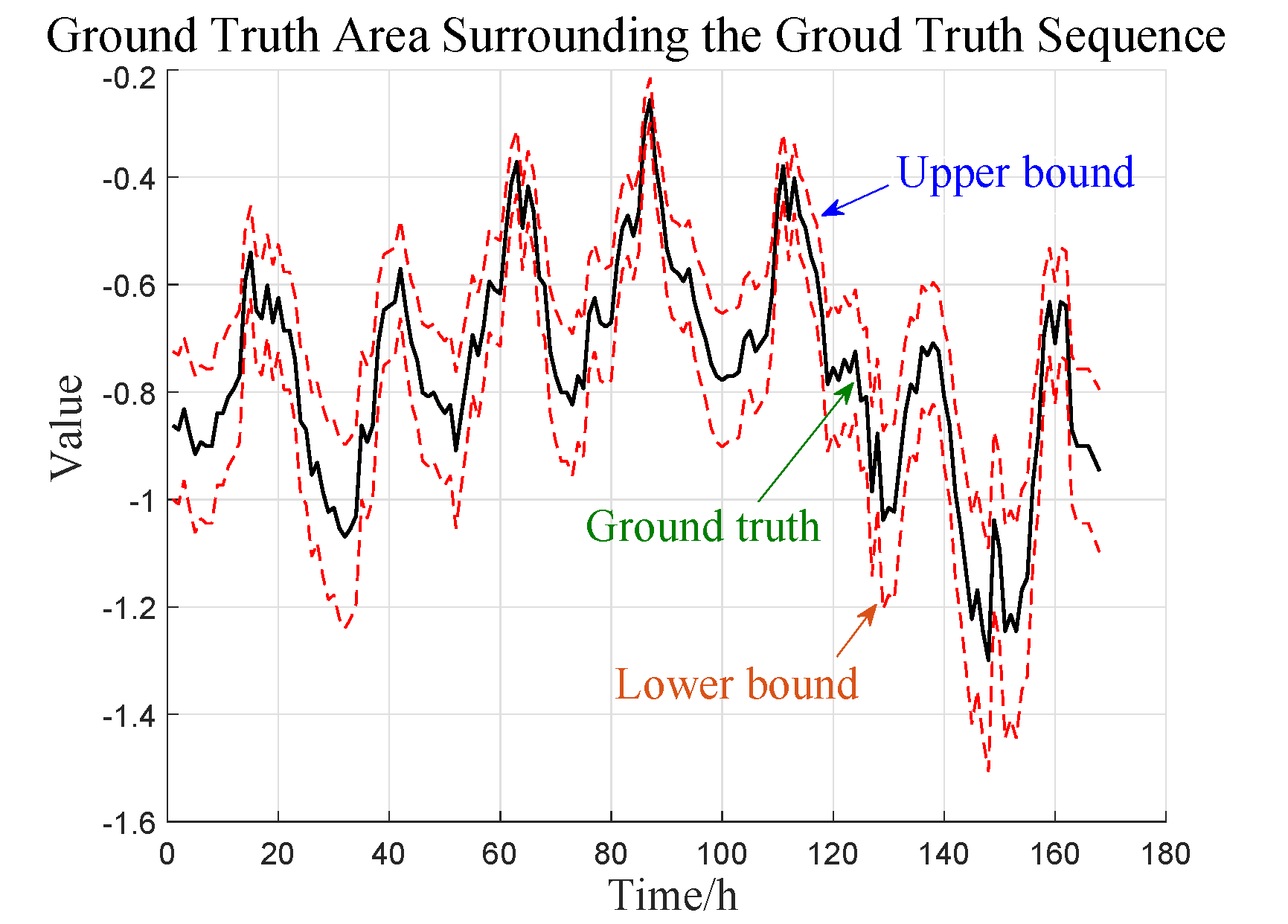

Area Loss

Area Loss (AL) replaces the exact ground truth of the prediction sequence with an area surrounding it, as shown in Figure 2. We choose a fixed upper bound as the maximum of the truth area and a fixed lower bound as its minimum. Prediction elements whose values fall within this area are therefore treated as matching the truth.

Figure 2. AL extends the truth (black line) to an area enclosed by two red dotted lines, which refer to the upper and lower bounds respectively.
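
As a rough illustration of the idea, the sketch below computes a loss that is zero inside the band and grows with the squared distance to the nearest bound outside it. The symmetric margin `delta`, the squared penalty and the function name `area_loss` are assumptions for illustration, not the paper's exact definition.

```python
import numpy as np


def area_loss(pred, truth, delta):
    """Mean squared distance from each prediction to the band [truth - delta, truth + delta]."""
    pred = np.asarray(pred, dtype=float)
    truth = np.asarray(truth, dtype=float)
    upper = truth + delta  # assumed fixed upper bound of the truth area
    lower = truth - delta  # assumed fixed lower bound of the truth area
    # Distance to the band: zero whenever lower <= pred <= upper.
    excess = np.maximum(pred - upper, 0.0) + np.maximum(lower - pred, 0.0)
    return float(np.mean(excess ** 2))


truth = [1.0, 2.0, 3.0]
# Every element lies inside the band, so nothing is penalized.
inside = area_loss([1.1, 1.9, 3.0], truth, delta=0.2)
# The first element is 0.3 above the upper bound; the others are exact.
outside = area_loss([1.5, 2.0, 3.0], truth, delta=0.2)
```

With `delta = 0` this reduces to the ordinary mean squared error, which is one way to see AL as a loosened version of the usual point-wise constraint.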

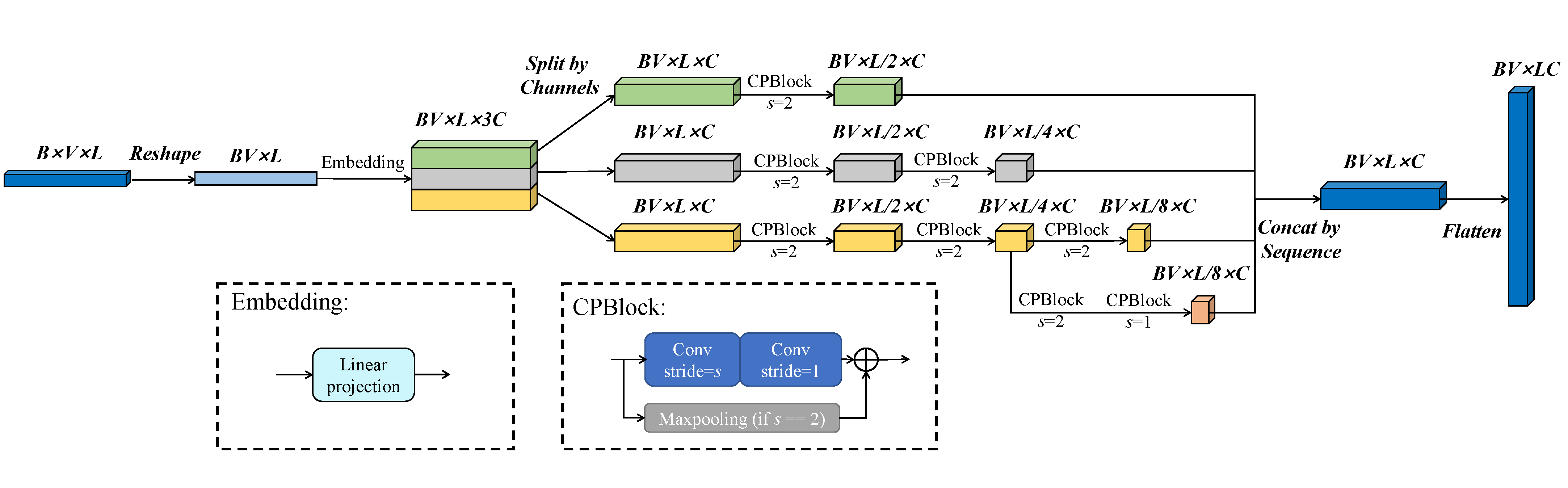

Temporal ELAN

We propose Temporal ELAN, a simple and efficient feature extractor, and use it as the backbone in the first stage. It is a CNN-based network and has at least two merits compared with the canonical Temporal Convolution Network (TCN):

- It abandons dilated convolution and utilizes only normal convolutions and pooling to extract universal feature maps of input sequence.

- It is derived from ELAN, a 2D CNN which has proven to be powerful in Computer Vision (CV) tasks. Built upon ELAN, it not only inherits ELAN's merits but is also capable of extracting hierarchical multi-scale feature maps of the input sequence.

Figure 3. The architecture of Temporal ELAN. Each CPblock contains two convolutional layers and a maxpooling layer to shrink sequence length.
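
To make the CPblock description concrete, here is a minimal NumPy sketch of one block: two ordinary (non-dilated) convolutions followed by max pooling. The kernel size of 3, the pooling size of 2, the `tanh` activation, the channel width and the function names are assumptions; the ELAN-style branch aggregation of the real network is not reproduced.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv1d_same(x, weight):
    """Plain 1D convolution with zero padding.

    x: (C_in, L), weight: (C_out, C_in, K) -> output: (C_out, L)
    """
    k = weight.shape[-1]
    left = (k - 1) // 2
    right = k - 1 - left
    padded = np.pad(x, ((0, 0), (left, right)))
    windows = sliding_window_view(padded, k, axis=-1)  # (C_in, L, K)
    return np.einsum("oik,ilk->ol", weight, windows)


def max_pool1d(x, size=2):
    """Non-overlapping max pooling that shrinks the sequence length by `size`."""
    length = x.shape[-1] // size * size
    return x[:, :length].reshape(x.shape[0], -1, size).max(axis=-1)


def cp_block(x, w1, w2):
    """Two convolutions followed by max pooling."""
    h = np.tanh(conv1d_same(x, w1))
    h = np.tanh(conv1d_same(h, w2))
    return max_pool1d(h)


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 96))  # 4 channels, 96 time steps
feature_maps = []
for _ in range(3):
    w1 = rng.standard_normal((4, 4, 3)) * 0.1
    w2 = rng.standard_normal((4, 4, 3)) * 0.1
    x = cp_block(x, w1, w2)
    feature_maps.append(x)  # one feature map per scale

lengths = [f.shape[-1] for f in feature_maps]
```

Stacking blocks halves the sequence length each time under these assumptions, which is how a stack of CPblocks can yield feature maps at several temporal scales.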

Requirements

- Python 3.8

- matplotlib == 3.3.4

- numpy == 1.20.1

- pandas == 1.2.4

- scikit_learn == 0.24.1

- scipy == 1.9.0

- torch == 1.11.0

Dependencies can be installed using the following command:

pip install -r requirements.txt

Data

The ETT, ECL, Traffic, Exchange and weather datasets were acquired at: datasets.

Data Preparation

After you acquire raw data of all datasets, please separately place them in corresponding folders at ./data.

We place the folders of here (the folder tree in the link is shown below), where ETT is in the folder ./ETT-data, ECL in the folder ./electricity, Exchange in the folder ./exchange_rate, Traffic in the folder ./traffic and weather in the folder ./weather, into the folder ./data, and rename them from ./ETT-data, ./electricity, ./exchange_rate, ./traffic and ./weather to ./ETT, ./ECL, ./Exchange, ./Traffic and ./weather respectively. We rename the data files of ECL/Exchange/Traffic from electricity.csv/exchange_rate.csv/traffic.csv to ECL.csv/Exchange.csv/Traffic.csv, and rename the last variable of ECL/Exchange/Traffic from OT back to its original name MT_321/Singapore/Sensor_861.

|-Autoformer

| |-ETT-data

| | |-ETTh1.csv

| | |-ETTh2.csv

| | |-ETTm1.csv

| | |-ETTm2.csv

| |

| |-electricity

| | |-electricity.csv

| |

| |-exchange_rate

| | |-exchange_rate.csv

| |

| |-traffic

| | |-traffic.csv

| |

| |-weather

| | |-weather.csv

Then you can get the folder tree shown as below:

|-data

| |-ECL

| | |-ECL.csv

| |

| |-ETT

| | |-ETTh1.csv

| | |-ETTh2.csv

| | |-ETTm1.csv

| | |-ETTm2.csv

| |

| |-Exchange

| | |-Exchange.csv

| |

| |-Traffic

| | |-Traffic.csv

| |

| |-weather

| | |-weather.csv
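
The copy-and-rename steps above can also be scripted. The following is a minimal sketch using only the Python standard library; the function name `prepare_data` and the `LAYOUT` table are hypothetical helpers, and the sketch assumes the column to rename is literally called `OT` in the CSV header.

```python
import csv
import shutil
from pathlib import Path

# (source sub-path, destination sub-path, new name for the "OT" column or None)
LAYOUT = [
    ("ETT-data/ETTh1.csv", "ETT/ETTh1.csv", None),
    ("ETT-data/ETTh2.csv", "ETT/ETTh2.csv", None),
    ("ETT-data/ETTm1.csv", "ETT/ETTm1.csv", None),
    ("ETT-data/ETTm2.csv", "ETT/ETTm2.csv", None),
    ("electricity/electricity.csv", "ECL/ECL.csv", "MT_321"),
    ("exchange_rate/exchange_rate.csv", "Exchange/Exchange.csv", "Singapore"),
    ("traffic/traffic.csv", "Traffic/Traffic.csv", "Sensor_861"),
    ("weather/weather.csv", "weather/weather.csv", None),
]


def prepare_data(src_root, dst_root="./data"):
    """Copy raw files into the expected layout, renaming the OT column where required."""
    src_root, dst_root = Path(src_root), Path(dst_root)
    for src_rel, dst_rel, new_name in LAYOUT:
        src, dst = src_root / src_rel, dst_root / dst_rel
        if not src.exists():
            continue  # skip datasets that have not been downloaded
        dst.parent.mkdir(parents=True, exist_ok=True)
        if new_name is None:
            shutil.copyfile(src, dst)
            continue
        with open(src, newline="") as fin, open(dst, "w", newline="") as fout:
            reader, writer = csv.reader(fin), csv.writer(fout)
            header = next(reader)
            # Only the header changes; data rows are copied through unchanged.
            writer.writerow([new_name if col == "OT" else col for col in header])
            writer.writerows(reader)
```

Calling `prepare_data(<path to the downloaded Autoformer folder>)` from the repository root would then produce the `./data` tree shown above for whichever datasets are present.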

Usage

Commands for training and testing Temporal ELAN-A2A on all datasets are in ./scripts/A2A.sh.

For more information about the parameters, please refer to main.py.

We provide a complete command for training and testing Temporal ELAN-A2A:

python -u main.py --model <model> --data <data> --root_path <root_path> --features <features> --label_len <label_len> --pred_list <pred_list> --d_model <d_model> --repr_dim <repr_dim> --kernel <kernel> --attn_nums <attn_nums> --pyramid <pyramid> --criterion <criterion> --dropout <dropout> --batch_size <batch_size> --cost_batch_size <cost_batch_size> --order_num <order_num> --aug_num <aug_num> --jitter <jitter> --activation <activation> --cost_epochs <cost_epochs> --cost_grow_epochs <cost_grow_epochs> --train_epochs <train_epochs> --itr <itr>

Here we provide a more detailed and complete command description for training and testing the model:

| Parameter name | Description of parameter |
|---|---|
| model | The model used in the experiment. This can be set to A2A |
| data | The dataset name |
| root_path | The root path of the data file |
| data_path | The data file name |
| features | The forecasting task. This can be set to M,S (M: multivariate forecasting, S: univariate forecasting) |
| target | Target feature in S task |
| checkpoints | Location of model checkpoints |
| label_len | Input sequence length |
| pred_list | Group of prediction sequence lengths |
| enc_in | Input size |
| c_out | Output size |
| d_model | Dimension of model |
| aug_num | The number of sequences with data augmentation, including initial sequences |
| order_num | The number of shuffled sequences |
| jitter | Supremum of the data augmentation amplification |
| aug | Augmentation method used in Area Loss. This can be set to Scaling,Jittering |
| instance_loss | Whether to include the instance loss during the first stage |
| dropout | Dropout rate |
| kernel | The kernel size |
| criterion | Standardization |
| itr | Number of times each experiment is repeated |
| activation | Activation function. This can be set to Gelu,Tanh |
| cost_epochs | Training epochs of the first stage |
| cost_grow_epochs | Growing training epochs of the first stage per experiment |
| train_epochs | Training epochs of the second stage |
| cost_batch_size | The batch size of training input data in the first stage |
| batch_size | The batch size of training input data in the second stage |
| patience | Early stopping patience |
| learning_rate | Optimizer learning rate |
| pyramid | The number of Pyramid networks |
| attn_nums | The number of streams in the main Pyramid network |
| repr_dim | Dimension of representation |
| loss | Loss function |
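
When launching several runs, a command of this shape can be assembled programmatically. The sketch below is a hypothetical helper (`build_command` is not part of the repository), and the parameter values are illustrative assumptions only; the settings actually used for each dataset are in ./scripts/A2A.sh.

```python
import shlex


def build_command(params, script="main.py"):
    """Turn a {name: value} mapping into an argv list of --name value pairs."""
    argv = ["python", "-u", script]
    for name, value in params.items():
        argv += [f"--{name}", str(value)]
    return argv


# Illustrative values only, not recommended settings.
params = {
    "model": "A2A",
    "data": "ETTh1",
    "root_path": "./data/ETT/",
    "features": "M",
}
command = build_command(params)
print(shlex.join(command))
```

The resulting list can be passed to `subprocess.run(command, check=True)`; passing a list rather than a single string avoids shell quoting issues.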

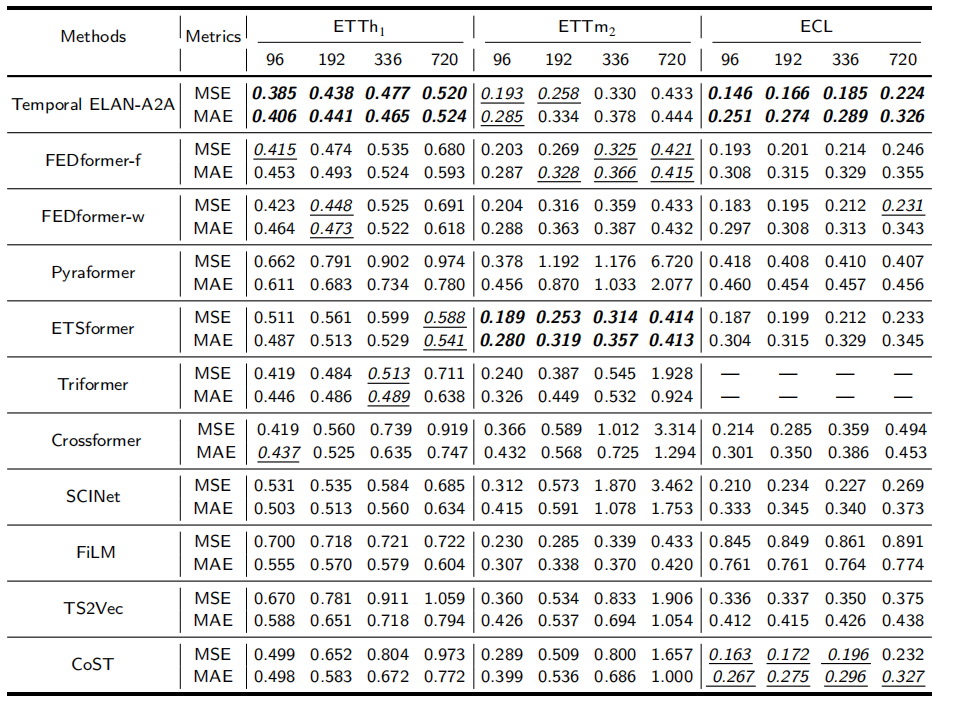

Results

The experiment parameters for each dataset are listed in the A2A.sh file in the directory ./scripts/. You can use these parameters to run the experiments, and you can also adjust them to obtain better MSE and MAE results or to draw better prediction figures.
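
For reference, MSE and MAE are the mean squared error and mean absolute error between predictions and ground truth. A minimal NumPy sketch of the two metrics follows; the function names are chosen for illustration and need not match the repository's own metric code.

```python
import numpy as np


def mse(pred, truth):
    """Mean squared error over all elements."""
    pred = np.asarray(pred, dtype=float)
    truth = np.asarray(truth, dtype=float)
    return float(np.mean((pred - truth) ** 2))


def mae(pred, truth):
    """Mean absolute error over all elements."""
    pred = np.asarray(pred, dtype=float)
    truth = np.asarray(truth, dtype=float)
    return float(np.mean(np.abs(pred - truth)))


truth = [1.0, 2.0, 3.0]
pred = [1.0, 2.0, 5.0]
print(mse(pred, truth), mae(pred, truth))
```

In this toy example only the last element is wrong, by 2, so the squared error averages to 4/3 and the absolute error to 2/3.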

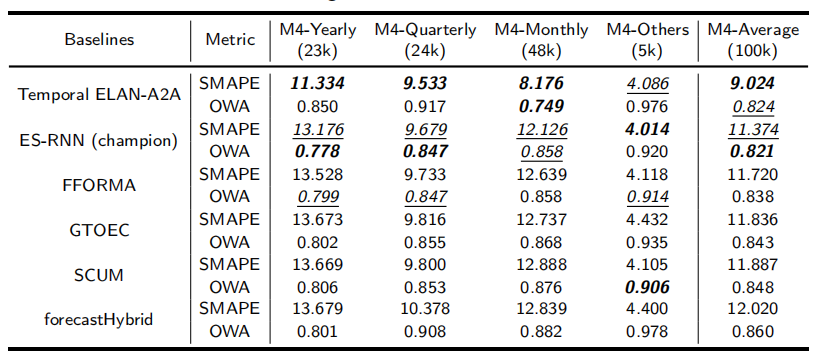

Figure 4. Quantitative results of multivariate forecasting with {ETTh1, ETTm2, ECL}

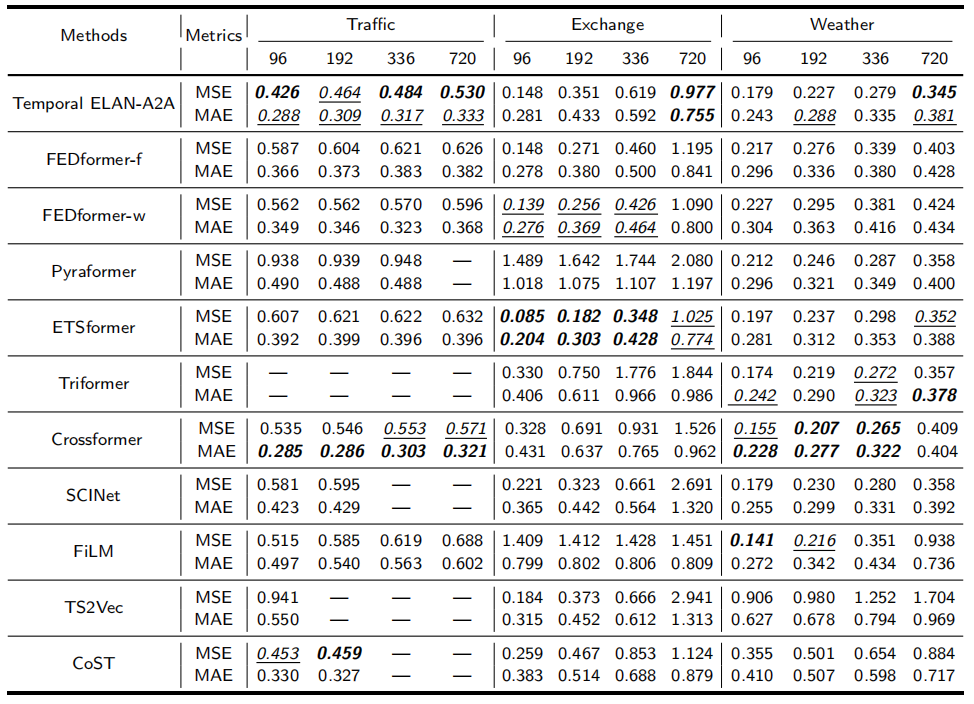

Figure 5. Quantitative results of multivariate forecasting with {Traffic, Exchange, Weather}

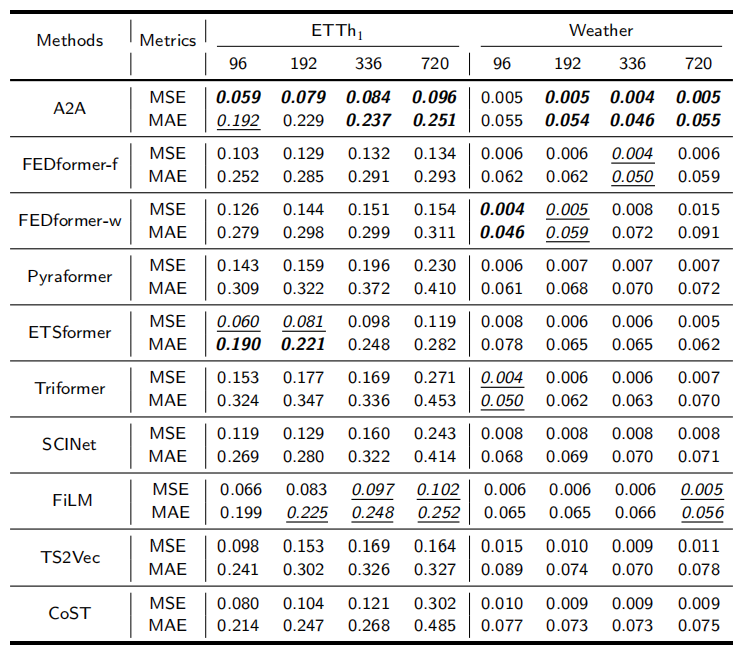

Figure 6. Quantitative results of univariate forecasting under ETTh1 and Weather

Figure 7. Univariate forecasting results

Contact

If you have any questions, feel free to contact Li Shen by email (shenli@buaa.edu.cn) or through GitHub issues. Pull requests are very welcome!