This repo contains the implementation for the paper:

Xiaofan Yu, Yunhui Guo, Sicun Gao, Tajana Rosing. "SCALE: Online Self-Supervised Contrastive Lifelong Learning without Prior Knowledge" in CLVision 2023.

- OnlineContrast holds the implementation of our method SCALE, SimCLR, SupCon, Co2L and CaSSLe. The code is based on SupContrast.

- UCL holds the implementation of LUMP, PNN, SI and DER, adapted from the original repo UCL.

- stam holds the implementation of STAM, adapted from the original repo stam.

Dataset configuration for OnlineContrast and UCL is in data_utils.py. Model and training configuration is in set_utils.py. The shared evaluation functions are in eval_utils.py.

In each folder, set up the environment with pip3 install -r requirements.txt. We tested OnlineContrast and UCL with Python 3.8, and STAM with Python 3.6. Our machine uses CUDA 11.7 and an NVIDIA RTX 3080 Ti GPU.

We mainly focus on image classification, although the methodology can be applied to more general scenarios. In this repo, we test with MNIST (10 classes), CIFAR-10 (10 classes), CIFAR-100 (20 coarse classes) and SubImageNet (10 classes).

For all methods in OnlineContrast and UCL folders, the shared root dataset directory is datasets.

- The download and configuration of MNIST, CIFAR-10, CIFAR-100 should be completed automatically by the code.

- For SubImageNet, the download sometimes fails. If that is the case, please download the original TinyImageNet and unzip the file under ./datasets/TINYIMG.

For STAM, as written in the original repo, you need to download datasets from here and unzip into the ./stam directory as ./stam/datasets.

The commands to run our method and each baseline are listed below.

To run our method SCALE:

cd OnlineContrast/scripts

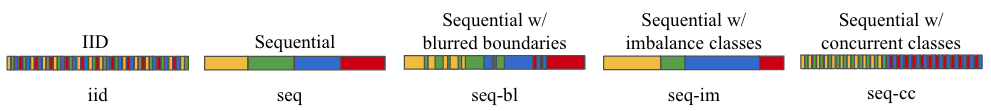

bash ./run-scale.sh scale <mnist/cifar10/cifar100/tinyimagenet> <iid/seq/seq-bl/seq-cc/seq-im> <trial#>

- We test with five types of data streams as discussed in the paper: (1) iid, (2) sequential classes (seq), (3) sequential classes with blurred boundaries (seq-bl), (4) sequential classes with imbalanced lengths (seq-im), and (5) sequential classes with concurrent classes (seq-cc). More details about the data stream configuration are given later.

- For all implementations, the last argument trial# (e.g., 0, 1, 2) determines the random seed configuration. Hence, using the same trial# produces the same random selection.
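The role of trial# can be pictured with a small sketch. It assumes, purely for illustration, that the trial number is used directly as the seed; the scripts in this repo may derive the seed differently. `pick_samples` is a hypothetical helper, not a function from this codebase.

```python
import random
from typing import List


def pick_samples(trial: int, pool_size: int = 100, k: int = 5) -> List[int]:
    """Select k indices from a pool, seeded by the trial number."""
    # Assumption: the seed equals the trial number.
    rng = random.Random(trial)
    return rng.sample(range(pool_size), k)


# Two runs with the same trial number draw the same indices.
run_a = pick_samples(trial=0)
run_b = pick_samples(trial=0)
print(run_a == run_b)
```

Using a private `random.Random` instance keeps the selection reproducible without touching the global random state.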

You can run run-<simclr/supcon/co2l/cassle>.sh to run the corresponding baseline with a similar argument format.

We replace the original data loader with our own loader. For evaluation, we also adapt the original code with our own clustering and kNN classifier on the learned embeddings.
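To make the kNN evaluation on learned embeddings concrete, here is a minimal sketch in pure Python. It is not the code in eval_utils.py: the cosine similarity, the majority vote, and the names `knn_predict` and `knn_accuracy` are assumptions chosen for illustration.

```python
import math
from collections import Counter
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def knn_predict(train_emb: List[Sequence[float]], train_labels: List[int],
                query: Sequence[float], k: int = 3) -> int:
    """Predict a label by majority vote among the k most similar embeddings."""
    ranked = sorted(range(len(train_emb)),
                    key=lambda i: cosine(train_emb[i], query), reverse=True)
    votes = Counter(train_labels[i] for i in ranked[:k])
    return votes.most_common(1)[0][0]


def knn_accuracy(train_emb, train_labels, test_emb, test_labels, k: int = 3) -> float:
    """Fraction of test embeddings whose kNN prediction matches the label."""
    correct = sum(knn_predict(train_emb, train_labels, q, k) == y
                  for q, y in zip(test_emb, test_labels))
    return correct / len(test_labels)


# Toy 2-D embeddings: class 0 lies near the x-axis, class 1 near the y-axis.
train_emb = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
train_labels = [0, 0, 1, 1]
print(knn_accuracy(train_emb, train_labels, [[1.0, 0.05], [0.05, 1.0]], [0, 1]))
```

Labels are used only at evaluation time here, which is the usual way to score embeddings learned without supervision.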

We run LUMP (mixup in argument), PNN, SI and DER as baselines to compare:

cd UCL

bash ./run-baseline.sh <mixup/pnn/si/der> supcon <mnist/cifar10/cifar100/tinyimagenet> <iid/seq/seq-bl/seq-cc/seq-im> <trial#>

We replace the original data loader with our own loader. For evaluation, we also adapt the original code with our own clustering and kNN classifier on the learned embeddings.

To run STAM:

cd stam

bash ./stam_IJCAI.sh <mnist/cifar10/cifar100> <iid/seq/seq-bl/seq-cc/seq-im>

The data stream configurations for STAM are in stam/core/dataset.py. In our setup, we launch all experiments directly with stam/run_all.sh.

The single-pass and non-iid data streams are the key motivation of the paper. As discussed in the paper, we consider five types of single-pass streams:

- Taking SCALE as an example, the iid input stream is configured by

      # In the ./OnlineContrast directory
      python main_supcon.py --training_data_type iid

- The sequential class-incremental data stream is configured by

      # In the ./OnlineContrast directory
      python main_supcon.py --training_data_type sequential

- The sequential data stream with blurred boundaries is configured by

      # In the ./OnlineContrast directory
      python main_supcon.py --training_data_type sequential --blend_ratio 0.5

  The loader blends the last 25% of the samples from the previous class with the first 25% of the samples from the next class, giving a total blend ratio of 0.5, as specified by the blend_ratio argument. The blending probability increases linearly as the stream approaches the class boundary.

- The sequential data stream with imbalanced classes is configured by

      # In the ./OnlineContrast directory
      python main_supcon.py --training_data_type sequential --train_samples_per_cls 250 500 500 500 250 500 500 500 500 500

  If train_samples_per_cls holds a single integer, every class uses that number of samples. If train_samples_per_cls holds a list of integers, the number of samples of each sequential class is set to the matching list entry.

- The sequential data stream with concurrent classes is configured by

      # In the ./OnlineContrast directory
      python main_supcon.py --training_data_type sequential --n_concurrent_classes 2

  Here we consider the case where two classes are revealed concurrently.
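The blurred-boundary stream described above can be sketched as follows. This is not the SeqSampler code: the swap-based mixing, the linear schedule peaking at 0.5, and the name `blur_boundary` are assumptions made only to illustrate how a blend_ratio of 0.5 maps to a 25% window on each side of the boundary.

```python
import random
from typing import List


def blur_boundary(prev_cls: List[str], next_cls: List[str],
                  blend_ratio: float, seed: int = 0) -> List[str]:
    """Concatenate two class segments and mix samples around their boundary."""
    rng = random.Random(seed)
    prev_cls, next_cls = list(prev_cls), list(next_cls)
    # Half of blend_ratio comes from each side of the boundary.
    window = int(min(len(prev_cls), len(next_cls)) * blend_ratio / 2)
    for j in range(window):
        # Assumed schedule: the swap probability grows linearly toward the
        # boundary and reaches 0.5 for the sample right next to it.
        prob = 0.5 * (j + 1) / window
        if rng.random() < prob:
            a = len(prev_cls) - window + j  # position in the tail of the previous class
            b = window - 1 - j              # mirrored position in the head of the next class
            prev_cls[a], next_cls[b] = next_cls[b], prev_cls[a]
    return prev_cls + next_cls


# With 8 samples per class and blend_ratio 0.5, only the 2 samples on each
# side of the boundary can be exchanged.
print(blur_boundary(["a"] * 8, ["b"] * 8, blend_ratio=0.5))
```

Swapping rather than resampling keeps the number of samples per class unchanged, so only the order of the stream is affected.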

More implementation details can be found in ./data_utils.py in the SeqSampler class.
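As a rough illustration of the train_samples_per_cls and n_concurrent_classes arguments, the sketch below builds the order of class labels in a stream. It is a simplified stand-in, not the SeqSampler implementation: the helper names and the round-robin interleaving of concurrent classes are assumptions.

```python
from typing import List, Sequence, Union


def expand_samples_per_cls(value: Union[int, Sequence[int]], n_classes: int) -> List[int]:
    """Turn a single integer or a per-class list into one count per class."""
    if isinstance(value, int):
        return [value] * n_classes
    counts = list(value)
    if len(counts) == 1:
        return counts * n_classes
    if len(counts) != n_classes:
        raise ValueError("expected one count per class")
    return counts


def label_stream(counts: Sequence[int], n_concurrent_classes: int = 1) -> List[int]:
    """Emit class labels group by group; classes in a group are interleaved."""
    stream: List[int] = []
    for start in range(0, len(counts), n_concurrent_classes):
        group = range(start, min(start + n_concurrent_classes, len(counts)))
        remaining = {c: counts[c] for c in group}
        # Assumed policy: round-robin over the classes revealed together.
        while any(remaining.values()):
            for c in group:
                if remaining[c] > 0:
                    stream.append(c)
                    remaining[c] -= 1
    return stream


# Imbalanced sequential stream: class 0 has fewer samples than classes 1 and 2.
print(label_stream(expand_samples_per_cls([1, 2, 2], n_classes=3)))
# Two classes revealed at a time.
print(label_stream(expand_samples_per_cls(2, n_classes=4), n_concurrent_classes=2))
```

With n_concurrent_classes set to 1, the sketch reduces to the plain sequential class-incremental stream.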

If you find the codebase useful, please consider citing:

@article{yu2022scale,
  title={SCALE: Online Self-Supervised Lifelong Learning without Prior Knowledge},
  author={Yu, Xiaofan and Guo, Yunhui and Gao, Sicun and Rosing, Tajana},
  journal={arXiv preprint arXiv:2208.11266},
  year={2022}
}

This repository is released under the MIT license.

If you have any questions, please feel free to contact x1yu@ucsd.edu.