This repo contains the simulation implementation for the paper:

Xiaofan Yu, Ludmila Cherkasova, Harsh Vardhan, Quanling Zhao, Emily Ekaireb, Xiyuan Zhang, Arya Mazumdar, Tajana Rosing. "Async-HFL: Efficient and Robust Asynchronous Federated Learning in Hierarchical IoT Networks" in the Proceedings of IoTDI 2023.

The implementation is based on FLSim and ns3-fl.

.

├── client.py // Implementation of client class

├── config.py // Implementation of argument parsing and configuration settings

├── configs // The json configuration files for all datasets and test scenarios (iid vs non-iid, sync vs async)

├── delays // Delays generated by ns3-fl and the script to generate computational delays

├── LICENSE

├── load_data.py // Implementation of data loaders for both image datasets and the LEAF dataset

├── models // Implementation of ML models for all datasets

├── README.md // This file

├── requirements.txt // Prerequisites

├── run.py // Main script to fire simulations

├── scripts // Collection of bash scripts for various experiments in the paper

├── server // Implementation of servers (sync, semi-async, async)

└── utils // Necessary util files in FLSim

We tested with Python 3.7. We recommend using a conda environment:

conda create --name asynchfl-py37 python=3.7

conda activate asynchfl-py37

python -m pip install -r requirements.txt

All required Python packages are listed in requirements.txt and can be installed automatically.

Async-HFL uses Gurobi to solve the gateway-level device selection and cloud-level device-gateway association problem. A license is required. After the installation, add the following lines to the bash initialization file (e.g., ~/.bashrc):

export GUROBI_HOME="PATH-TO-GUROBI-INSTALLATION"

export PATH="${PATH}:${GUROBI_HOME}/bin"

export LD_LIBRARY_PATH="${LD_LIBRARY_PATH}:${GUROBI_HOME}/lib"

As mentioned in the paper, we experiment on MNIST, FashionMNIST, CIFAR-10, Shakespeare, HAR, and HPWREN.

- For MNIST, FashionMNIST, and CIFAR-10, the script will download the datasets automatically into the ./data folder, which is specified in the json configuration files under ./configs. The non-iid partition for each client is done synthetically, assigning two random classes to each client, as set in the json configuration.

- The Shakespeare dataset is adapted from the LEAF dataset, and we use their original script for natural data partition:

git clone https://github.com/TalwalkarLab/leaf.git

cd leaf/data/shakespeare/

./preprocess.sh -s niid --sf 0.2 -k 0 -t sample -tf 0.8

The preprocess.sh script generates partitioned data in the same directory. Then, you need to specify the path to the Shakespeare data in ./configs/Shakespeare/xxx.json, where xxx corresponds to the setting.

- The HAR dataset is downloaded from UCI Machine Learning repository. We provide a similar partition script as in LEAF here. Similarly, after partition, you need to specify the path to the partitioned data in ./configs/HAR/xxx.json.

- The HPWREN dataset is constructed from the historical data of HPWREN. We provide the data download script and partition scripts here. You need to specify the path to the partitioned data in ./configs/HPWREN/xxx.json.

Note that Shakespeare, HAR, and HPWREN use natural non-iid data partitions, as detailed in the paper.
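The synthetic non-iid partition used for MNIST, FashionMNIST, and CIFAR-10 assigns two random classes to each client. The following is a minimal sketch of that idea, not the code in load_data.py: the function name `partition_two_classes`, the round-robin split, and the toy label list are all assumptions made for illustration.

```python
import random
from collections import defaultdict

def partition_two_classes(labels, num_clients, classes_per_client=2, seed=0):
    """Hypothetical helper: give each client samples from a few random classes."""
    rng = random.Random(seed)

    # Group sample indices by class label.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    classes = sorted(by_class)

    # Choose the classes of every client first, so that the samples of a
    # class can be shared among all clients that drew it.
    client_classes = [rng.sample(classes, classes_per_client)
                      for _ in range(num_clients)]
    owners = defaultdict(list)
    for client, chosen in enumerate(client_classes):
        for c in chosen:
            owners[c].append(client)

    partition = {client: [] for client in range(num_clients)}
    for c, clients in owners.items():
        indices = by_class[c][:]
        rng.shuffle(indices)
        # Deal the shuffled indices round-robin to the clients holding class c.
        for pos, idx in enumerate(indices):
            partition[clients[pos % len(clients)]].append(idx)
    return partition, client_classes

# Toy data (assumption): 1000 samples, 10 balanced classes, 20 clients.
labels = [i % 10 for i in range(1000)]
partition, client_classes = partition_two_classes(labels, num_clients=20)
```

In this sketch, samples of a class that no client draws are simply left unassigned, and no sample is given to more than one client.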

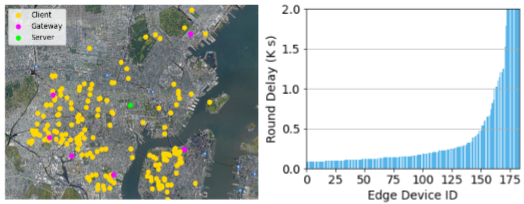

One major novelty of Async-HFL is that it accounts for network heterogeneity in FL. Specifically, we generate communication delays with ns3-fl and draw computational delays randomly from a log-normal distribution.

- For the communication delays, the hierarchical network topology is configured based on NYCMesh with 184 edge devices, 6 gateways, and 1 server. We assume that edge devices are connected to the gateways via Wi-Fi, and the gateways are connected to the server via Ethernet. For each node, we retrieve its latitude, longitude, and height as input to the HybridBuildingsPropagationLossModel in ns-3 to obtain the average point-to-point latency. The communication delays generated from ns3-fl are stored in delays/delay_client_to_gateway.csv and delays/delay_gateway_to_cloud.csv.

- For the computational delays, we run python3 delays/generate.py.

The paths to the corresponding delay files are set in the json configuration files.
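To illustrate what drawing computational delays from a log-normal distribution looks like, here is a minimal sketch using only the Python standard library. It is not the content of delays/generate.py: the function name, the `mu` and `sigma` values, the seed, and the interpretation of the samples as seconds are assumptions.

```python
import random
import statistics

def sample_compute_delays(num_devices, mu=0.0, sigma=1.0, seed=0):
    """Hypothetical helper: one log-normal delay (assumed seconds) per device."""
    rng = random.Random(seed)
    # lognormvariate(mu, sigma) returns exp(X) with X ~ Normal(mu, sigma),
    # so every sample is positive and the distribution has a long right tail.
    return [rng.lognormvariate(mu, sigma) for _ in range(num_devices)]

# 184 matches the number of edge devices in the NYCMesh topology.
delays = sample_compute_delays(184)
print("median:", statistics.median(delays))
print("mean:  ", statistics.mean(delays))
print("max:   ", max(delays))
```

Comparing the median with the maximum gives a quick sense of how heavy the tail is for a given `sigma`.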

The NYCMesh topology and round delay distributions are shown as follows.

To run synchronous FL on MNIST with non-iid data partition and random client selection:

python run.py --config=configs/MNIST/sync_noniid.json --delay_mode=nycmesh --selection=random

For synchronous FL, apart from random client selection, we offer the following client-selection strategies:

- divfl: Implementation of DivFL in "Diverse Client Selection for Federated Learning via Submodular Maximization", ICLR'21

- tier: Implementation of TiFL in "TiFL: A Tier-based Federated Learning System", HPDC'20

- oort: Implementation of Oort in "Oort: Efficient Federated Learning via Guided Participant Selection", OSDI'21

To run RFL-HA (sync aggregation at gateways and async aggregation at cloud) on FashionMNIST with non-iid data partition and random client selection:

python run.py --config=configs/FashionMNIST/rflha_noniid.json --delay_mode=nycmesh --selection=random

- rflha: Implementation of RFL-HA in "Resource-Efficient Federated Learning with Hierarchical Aggregation in Edge Computing", INFOCOM'21

To run semi-asynchronous FL on CIFAR-10 with non-iid data partition and random client selection:

python run.py --config=configs/CIFAR-10/semiasync_noniid.json --delay_mode=nycmesh --selection=random

- semiasync: We implement the semi-async FL strategy as in "Federated Learning with Buffered Asynchronous Aggregation", AISTATS'22
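The core idea of buffered asynchronous aggregation is that the server collects client updates in a buffer and only updates the global model once a fixed number of them has arrived. The sketch below shows that idea in plain Python; it is not the server implementation in this repo, and the class name, `buffer_size`, `server_lr`, and the use of flat weight lists are assumptions. Staleness handling is omitted.

```python
class BufferedAggregator:
    """Toy server that applies client updates only once the buffer is full."""

    def __init__(self, global_weights, buffer_size, server_lr=1.0):
        self.weights = list(global_weights)
        self.buffer_size = buffer_size
        self.server_lr = server_lr
        self.buffer = []

    def receive(self, delta):
        """Buffer one client update; return True if it triggered aggregation."""
        self.buffer.append(list(delta))
        if len(self.buffer) < self.buffer_size:
            return False
        # Average the buffered deltas coordinate-wise and take one server step.
        avg = [sum(col) / len(self.buffer) for col in zip(*self.buffer)]
        self.weights = [w + self.server_lr * d
                        for w, d in zip(self.weights, avg)]
        self.buffer.clear()
        return True

server = BufferedAggregator(global_weights=[0.0, 0.0], buffer_size=2)
first = server.receive([1.0, 2.0])   # buffered only, global model unchanged
second = server.receive([3.0, 4.0])  # buffer full, weights become [2.0, 3.0]
```

A buffer size of 1 reduces this to fully asynchronous updates, while a buffer as large as the selected client set behaves like a synchronous round.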

To run asynchronous FL on Shakespeare with non-iid data partition and random client selection:

python run.py --config=configs/Shakespeare/async_noniid.json --delay_mode=nycmesh --selection=random

To run Async-HFL on HAR with non-iid data partition:

python3.7 run.py --config=configs/HAR/async_noniid.json --delay_mode=nycmesh --selection=coreset_v1 --association=gurobi_v1

To run Async-HFL on HPWREN with non-iid data partition and a given alpha (weight for delays in gateway-level client selection, used in server/clientSelection.py) and phi (weight for throughput in cloud-level device-gateway association, used in server/clientAssociation.py):

python3.7 run.py --config=configs/HAR/async_noniid.json --delay_mode=nycmesh --selection=coreset_v1 --association=gurobi_v1 --cs_alpha=alpha --ca_phi=phi

We provide our scripts for running various experiments in the paper in scripts:

.

├── run_ablation.sh // Script for running ablation study on Async-HFL

| // (various combinations of client selection and association)

├── run_baseline_nycmesh.sh // Script for running baselines in the NYCMesh setup

├── run_baseline.sh // Script for running baselines in the random delay setup

├── run_exp_nycmesh.sh // Script for running Async-HFL in the NYCMesh setup

├── run_exp.sh // Script for running Async-HFL in the random delay setup

├── run_motivation_nycmesh.sh // Script for running the motivation study

├── run_sensitivity_pca.sh // Script for running sensitivity study regarding PCA dimension

└── run_sensitivity_phi.sh // Script for running sensitivity study regarding phi
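A sweep over alpha and phi can also be assembled from Python. The sketch below only builds the command strings from the run.py flags shown earlier and does not execute them; the function name and the alpha and phi values are hypothetical and not taken from the paper or the bash scripts.

```python
import itertools
import shlex

def build_commands(config, alphas, phis):
    """Hypothetical helper: one run.py command line per (alpha, phi) pair."""
    commands = []
    for alpha, phi in itertools.product(alphas, phis):
        args = [
            "python", "run.py",
            f"--config={config}",
            "--delay_mode=nycmesh",
            "--selection=coreset_v1",
            "--association=gurobi_v1",
            f"--cs_alpha={alpha}",
            f"--ca_phi={phi}",
        ]
        # Quote each argument so the line can be pasted into a shell safely.
        commands.append(" ".join(shlex.quote(a) for a in args))
    return commands

# Placeholder sweep values (assumptions).
commands = build_commands("configs/HAR/async_noniid.json",
                          alphas=[0.1, 1.0], phis=[0.0, 0.5])
for cmd in commands:
    print(cmd)
```

Each printed line can be run manually or passed to a job scheduler of your choice.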

Apart from simulation, we also evaluate Async-HFL with a physical deployment using Raspberry Pis and CPU clusters. The implementation is at https://github.com/Orienfish/FedML, which is adapted from the FedML framework.

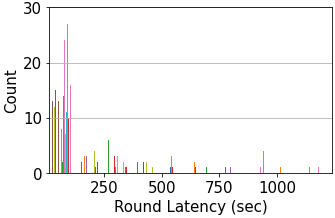

The delay distribution in the physical deployment verifies our strategy in generating the delays in the simulation framework, which presents a long-tail distribution:

The above plot shows a histogram of all round latencies collected when an updated model is returned in our physical deployment, with the x-axis representing round latency and the y-axis representing counts. The majority of trials return fairly quickly, while in rare cases the round latency can be unacceptably long.

License: MIT (see the LICENSE file).

If you have any questions, please feel free to contact x1yu@ucsd.edu.