Xinhao Li*, Ziang Yan*, Desen Meng*, Lu Dong, Xiangyu Zeng, Yinan He, Yali Wang, Yu Qiao, Yi Wang^ and Limin Wang^

- 2025/04/22: 🔥🔥🔥 We release our VideoChat-R1-caption on Hugging Face.

- 2025/04/14: 🔥🔥🔥 We release our VideoChat-R1 and VideoChat-R1-thinking on Hugging Face.

- 2025/04/10:🔥🔥🔥 We release our paper and code.

Refer to the Hugging Face README to run inference with our model.

For evaluation, see eval_scripts and lmms-eval_videochat.

For training, see training_scripts.

If you find this project useful in your research, please consider citing:

@article{li2025videochatr1,

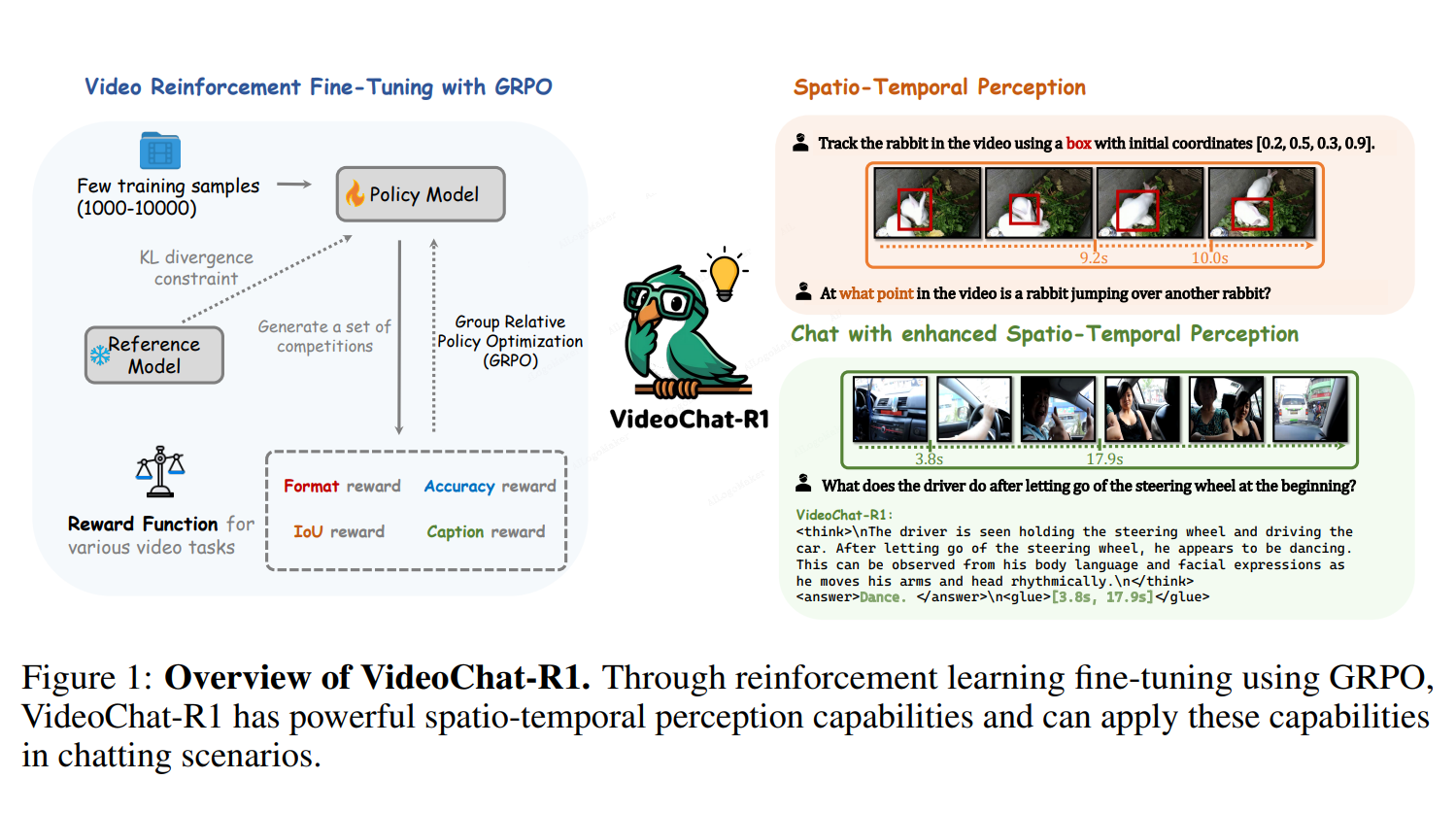

title={VideoChat-R1: Enhancing Spatio-Temporal

Perception via Reinforcement Fine-Tuning},

author={Li, Xinhao and Yan, Ziang and Meng, Desen and Dong, Lu and Zeng, Xiangyu and He, Yinan and Wang, Yali and Qiao, Yu and Wang, Yi and Wang, Limin},

journal={arXiv preprint arXiv:2504.06958},

year={2025}

}