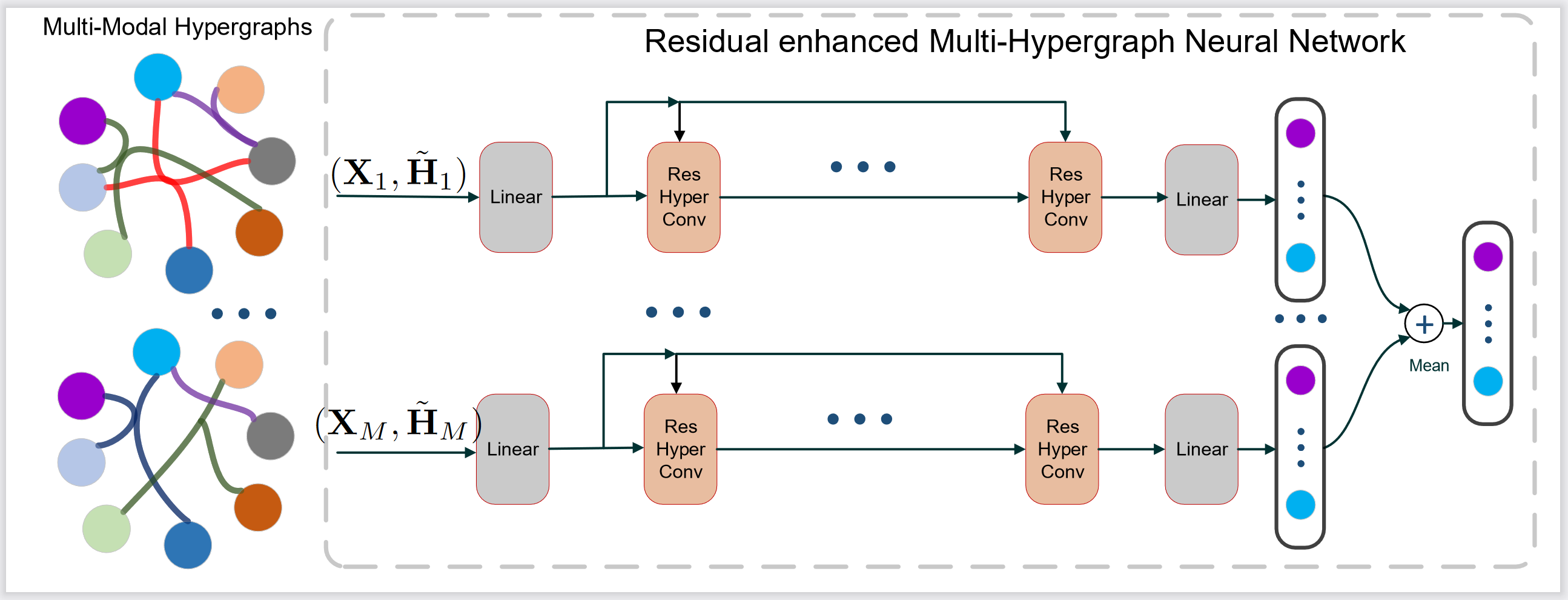

This repository contains the source code for the paper Residual Enhanced Multi-Hypergraph Neural Network, accepted by ICIP 2021.

If you find this work useful in your research, please consider citing:

    @inproceedings{icip21-ResMHGNN,
      title     = {Residual Enhanced Multi-Hypergraph Neural Network},
      author    = {Huang, Jing and Huang, Xiaolin and Yang, Jie},
      booktitle = {International Conference on Image Processing, {ICIP-21}},
      year      = {2021}
    }

Our code requires Python>=3.6.

We recommend using a virtual environment and installing the latest version of PyTorch.

You also need these additional packages:

- scipy

- numpy

- path
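
Before training, it can help to confirm that the environment meets the requirements above. The following is a minimal sketch, not part of the repository: it assumes the import names `torch`, `scipy`, `numpy` and `path` correspond to the listed packages, and the helper names are hypothetical.

```python
import sys
from importlib.util import find_spec

# Import names assumed to correspond to the packages listed above.
REQUIRED_MODULES = ["torch", "scipy", "numpy", "path"]


def missing_modules(modules):
    """Return the names in `modules` that cannot be found in this environment."""
    return [name for name in modules if find_spec(name) is None]


def python_is_supported(version_info=sys.version_info, minimum=(3, 6)):
    """Return True if the (major, minor) version is at least `minimum`."""
    return tuple(version_info[:2]) >= minimum


if __name__ == "__main__":
    if not python_is_supported():
        print("Python >= 3.6 is required, found", sys.version.split()[0])
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages were found.")
```

The check only looks up the modules without importing them, so it stays fast even when PyTorch is installed.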

Please download the precomputed features of the ModelNet40 and NTU2012 datasets from HGNN, or just click the following links.

Extract the files above and put them under any directory you like ($DATA_ROOT).
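
To confirm that the extracted files are where `train.py` will look for them, the data directory can be listed with a few lines of Python. This is a hedged sketch: it assumes the features are stored as `.mat` files directly under `$DATA_ROOT` (the usage text describes `--dataroot` as the directory of the `.mat` data), and `list_mat_files` is a hypothetical helper rather than a function from this repository.

```python
import os
from pathlib import Path


def list_mat_files(data_root):
    """Return the sorted .mat files found directly under `data_root`."""
    root = Path(os.path.expandvars(str(data_root))).expanduser()
    if not root.is_dir():
        raise FileNotFoundError(f"data root does not exist: {root}")
    return sorted(p for p in root.iterdir() if p.suffix == ".mat")


if __name__ == "__main__":
    # DATA_ROOT is read from the environment; ~/data/HGNN is the default
    # that the usage text of train.py reports for --dataroot.
    data_root = os.environ.get("DATA_ROOT", "~/data/HGNN")
    try:
        for path in list_mat_files(data_root):
            print(path.name, f"{path.stat().st_size / 1e6:.1f} MB")
    except FileNotFoundError as err:
        print(err)
```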

We implement HGNN, MultiHGNN, ResHGNN and ResMultiHGNN. You can select the model with $model and the number of layers with $layer.
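
When comparing several models and depths, the `train.py` command line can be assembled programmatically instead of editing `$model` and `$layer` by hand. The sketch below is an illustration under stated assumptions: `build_command` is a hypothetical helper, it only uses flags that appear in this README, and the depths in the loop are arbitrary example values rather than settings from the paper.

```python
import itertools

# The four model names implemented in this repository.
MODELS = ["HGNN", "MultiHGNN", "ResHGNN", "ResMultiHGNN"]


def build_command(data_root, dataname, model, nlayer,
                  seed=None, balanced=False, split_ratio=0):
    """Return the argument list for one train.py run."""
    cmd = [
        "python", "train.py",
        f"--dataroot={data_root}",
        f"--dataname={dataname}",
        f"--model-name={model}",
        f"--nlayer={nlayer}",
    ]
    if seed is not None:
        cmd.append(f"--seed={seed}")
    if balanced:
        cmd.append("--balanced")
    if split_ratio:
        cmd.append(f"--split-ratio={split_ratio}")
    return cmd


if __name__ == "__main__":
    # Illustrative depths only; print the commands instead of running them.
    for model, nlayer in itertools.product(MODELS, [2, 4, 8]):
        print(" ".join(build_command("$DATA_ROOT", "ModelNet40", model, nlayer, seed=2)))
```

With a real data path in place of `$DATA_ROOT`, each returned list can be passed to `subprocess.run(cmd, check=True)` to launch the run.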

    python train.py --dataroot=$DATA_ROOT --dataname=ModelNet40 --seed=2 --model-name=$model --nlayer=$layer;
    python train.py --dataroot=$DATA_ROOT --dataname=NTU2012 --seed=1 --model-name=$model --nlayer=$layer;

    python train.py --dataroot=$DATA_ROOT --dataname=ModelNet40 --model-name=$model --nlayer=$layer --balanced;
    python train.py --dataroot=$DATA_ROOT --dataname=NTU2012 --model-name=$model --nlayer=$layer --balanced;

Change the split-ratio as you like.

    python train.py --dataroot=$DATA_ROOT --dataname=ModelNet40 --model-name=$model --nlayer=$layer --split-ratio=4;
    python train.py --dataroot=$DATA_ROOT --dataname=NTU2012 --model-name=$model --nlayer=$layer --split-ratio=4;

    usage: ResMultiHGNN [-h] [--dataroot DATAROOT] [--dataname DATANAME]
                        [--model-name MODEL_NAME] [--nlayer NLAYER] [--nhid NHID]
                        [--dropout DROPOUT] [--epochs EPOCHS]
                        [--patience PATIENCE] [--gpu GPU] [--seed SEED]
                        [--nostdout] [--balanced] [--split-ratio SPLIT_RATIO]
                        [--out-dir OUT_DIR]

    optional arguments:
      -h, --help            show this help message and exit
      --dataroot DATAROOT   the directary of your .mat data (default:
                            ~/data/HGNN)
      --dataname DATANAME   data name (ModelNet40/NTU2012) (default: NTU2012)
      --model-name MODEL_NAME
                            (HGNN, ResHGNN, MultiHGNN, ResMultiHGNN) (default:
                            HGNN)
      --nlayer NLAYER       number of hidden layers (default: 2)
      --nhid NHID           number of hidden features (default: 128)
      --dropout DROPOUT     dropout probability (default: 0.5)
      --epochs EPOCHS       number of epochs to train (default: 600)
      --patience PATIENCE   early stop after specific epochs (default: 200)
      --gpu GPU             gpu number to use (default: 0)
      --seed SEED           seed for randomness (default: 1)
      --nostdout            do not output logging info to terminal (default:
                            False)
      --balanced            only use the balanced subset of training labels
                            (default: False)
      --split-ratio SPLIT_RATIO
                            if set unzero, this is for Task: Stability Analysis,
                            new total/train ratio (default: 0)
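
For readers who want to reuse the same options in their own scripts, the usage text above can be approximated with `argparse`. This is a reconstruction from the help output, not the repository's actual parser: the argument types are assumptions inferred from the defaults, the help strings are paraphrased, and the default of `--out-dir` is not shown in the help text, so `None` is used as a placeholder.

```python
import argparse


def build_parser():
    """Build a parser that mirrors the options in the usage text (a reconstruction)."""
    p = argparse.ArgumentParser(
        prog="ResMultiHGNN",
        # Appends "(default: ...)" to every option that has a help string.
        formatter_class=argparse.ArgumentDefaultsHelpFormatter,
    )
    p.add_argument("--dataroot", type=str, default="~/data/HGNN",
                   help="the directory of your .mat data")
    p.add_argument("--dataname", type=str, default="NTU2012",
                   help="data name (ModelNet40/NTU2012)")
    p.add_argument("--model-name", type=str, default="HGNN",
                   help="(HGNN, ResHGNN, MultiHGNN, ResMultiHGNN)")
    p.add_argument("--nlayer", type=int, default=2, help="number of hidden layers")
    p.add_argument("--nhid", type=int, default=128, help="number of hidden features")
    p.add_argument("--dropout", type=float, default=0.5, help="dropout probability")
    p.add_argument("--epochs", type=int, default=600, help="number of epochs to train")
    p.add_argument("--patience", type=int, default=200,
                   help="early stop after specific epochs")
    p.add_argument("--gpu", type=int, default=0, help="gpu number to use")
    p.add_argument("--seed", type=int, default=1, help="seed for randomness")
    p.add_argument("--nostdout", action="store_true",
                   help="do not output logging info to terminal")
    p.add_argument("--balanced", action="store_true",
                   help="only use the balanced subset of training labels")
    # Assumed to be an integer; the help text only gives the default 0.
    p.add_argument("--split-ratio", type=int, default=0,
                   help="if nonzero, stability analysis with this total/train ratio")
    # Default not shown in the usage text; None is a placeholder.
    p.add_argument("--out-dir", type=str, default=None)
    return p


if __name__ == "__main__":
    # Show the defaults by parsing an empty argument list.
    print(build_parser().parse_args([]))
```

Since `--split-ratio` is described as the total/train ratio, a value of 4 would correspond to training on one quarter of the samples.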

Distributed under the MIT License. See LICENSE for more information.