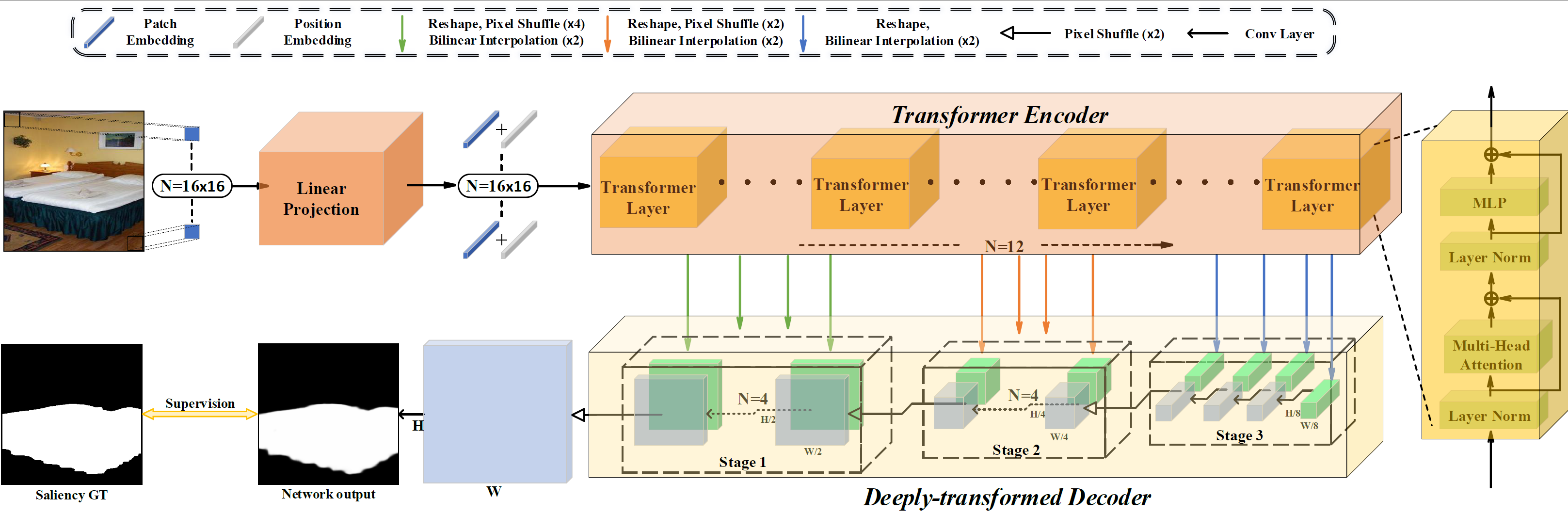

This is the official implementation of the paper "Unifying Global-Local Representations in Salient Object Detection with Transformer" by Sucheng Ren, Qiang Wen, Nanxuan Zhao, Guoqiang Han, and Shengfeng He.

The whole training process can be done on eight RTX 2080 Ti or four RTX 3090 GPUs.

- PyTorch 1.6

We use the training set of DUTS (DUTS-TR) to train our model.

    /path/to/DUTS-TR/
        img/
            img1.jpg
        label/
            label1.png
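Before training, it can help to check that the directory really follows this layout. The snippet below is a minimal sketch, not part of the repository: `list_pairs` is a hypothetical helper, and it assumes that images are `.jpg`, labels are `.png`, and that the sorted file names in `img/` and `label/` line up one to one.

```python
import tempfile
from pathlib import Path


def list_pairs(root):
    """Pair images in root/img with masks in root/label by sorted order.

    Hypothetical helper: assumes .jpg images, .png labels, and that the
    sorted file names of both folders correspond to each other.
    """
    root = Path(root)
    images = sorted((root / "img").glob("*.jpg"))
    labels = sorted((root / "label").glob("*.png"))
    if len(images) != len(labels):
        raise ValueError(f"{len(images)} images but {len(labels)} labels")
    return list(zip(images, labels))


# Demo on a throwaway directory that mimics the layout above.
with tempfile.TemporaryDirectory() as tmp:
    demo_root = Path(tmp) / "DUTS-TR"
    (demo_root / "img").mkdir(parents=True)
    (demo_root / "label").mkdir()
    (demo_root / "img" / "img1.jpg").touch()
    (demo_root / "label" / "label1.png").touch()
    for image, label in list_pairs(demo_root):
        print(image.name, "<->", label.name)
```

A mismatch in the number of files raises a `ValueError`, which usually points to an incomplete download or a misplaced folder. A stricter check could also compare file stems if the two folders share names.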

We test our model on the testing set of DUTS (DUTS-TE), ECSSD, HKU-IS, PASCAL-S, DUT-OMRON, and SOD.

Download the transformer backbone pretrained on ImageNet.

    # Set the paths to the training data and the pretrained backbone in train.sh
    bash train.sh

Download the pretrained model from Baidu pan (code: uo0a) or Google Drive, and put it in ./ckpt/

    python test.py

The precomputed saliency maps (DUTS-TE, ECSSD, HKU-IS, PASCAL-S, DUT-OMRON, and SOD) can be found at Baidu pan (code: uo0a) or Google Drive.

After the paper submission, we retrained the model and the performance improved. Feel free to use either the results reported in our paper or the precomputed saliency maps.
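If you compare against the precomputed saliency maps, you will need some metric. The sketch below computes the mean absolute error (MAE), which is commonly reported in salient object detection. It is an illustration only: this README does not state which metrics or evaluation code the authors used, and `mean_absolute_error` is a hypothetical helper that works on plain nested lists with values already scaled to [0, 1].

```python
def mean_absolute_error(pred, gt):
    """MAE between two equally sized 2-D maps with values in [0, 1].

    Hypothetical helper for illustration; not the repository's evaluation code.
    """
    if len(pred) != len(gt) or any(len(p) != len(g) for p, g in zip(pred, gt)):
        raise ValueError("prediction and ground truth must have the same shape")
    total = sum(
        abs(p - g)
        for pred_row, gt_row in zip(pred, gt)
        for p, g in zip(pred_row, gt_row)
    )
    count = sum(len(row) for row in pred)
    return total / count


# Toy 2x2 example: two pixels match exactly, two are off by 0.5.
pred = [[0.0, 1.0], [0.5, 0.5]]
gt = [[0.0, 1.0], [1.0, 0.0]]
print(mean_absolute_error(pred, gt))  # 0.25
```

In practice the maps would be loaded from the PNG files and divided by 255 first; that loading step is left out here to keep the example dependency-free.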

If you have any questions, feel free to email Sucheng Ren :) (oliverrensu@gmail.com)

Please cite our paper if you find the code and paper helpful.

    @article{ren2021unifying,
      title={Unifying Global-Local Representations in Salient Object Detection with Transformer},
      author={Ren, Sucheng and Wen, Qiang and Zhao, Nanxuan and Han, Guoqiang and He, Shengfeng},
      journal={arXiv preprint arXiv:2108.02759},
      year={2021}
    }