Defeng Xie, Ruichen Wang, Jian Ma, Chen Chen, Haonan Lu, Dong Yang, Fobo Shi, Xiaodong Lin

OPPO Research Institute

"While drawing I discover what I really want to say" -- Dario Fo

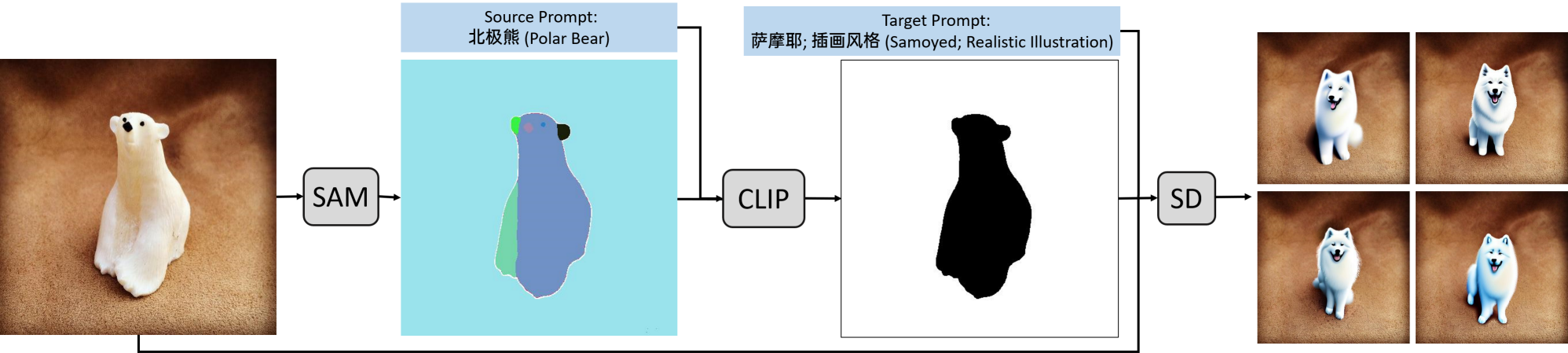

We propose a text-guided generative system that requires no fine-tuning (zero-shot). It achieves strong image-editing performance by combining the Segment Anything Model, CLIP, and Stable Diffusion. This work is the first to provide an efficient solution for Chinese scenarios.

This is the implementation of Edit Everything: A Text-Guided Generative System for Images Editing.

Approach

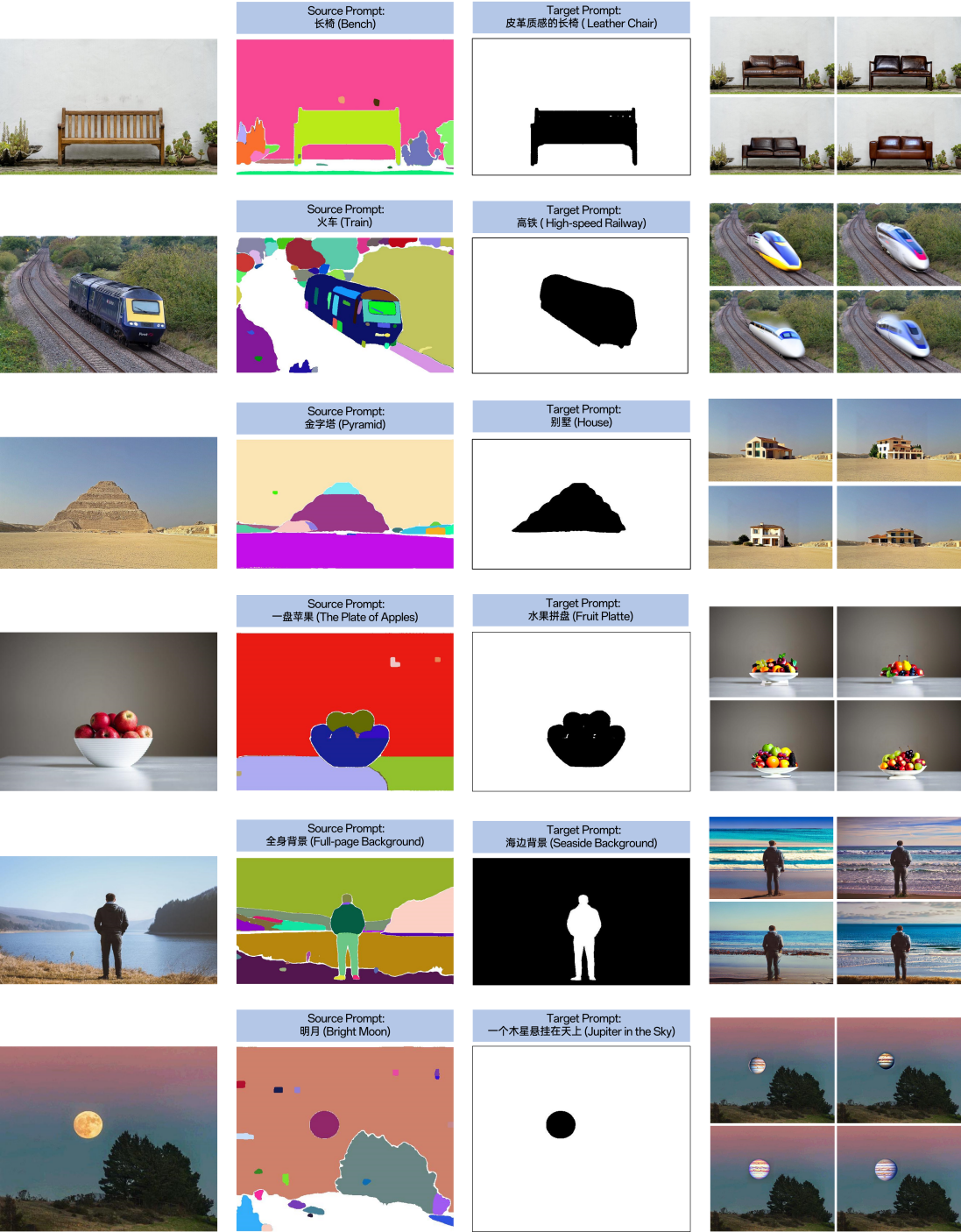

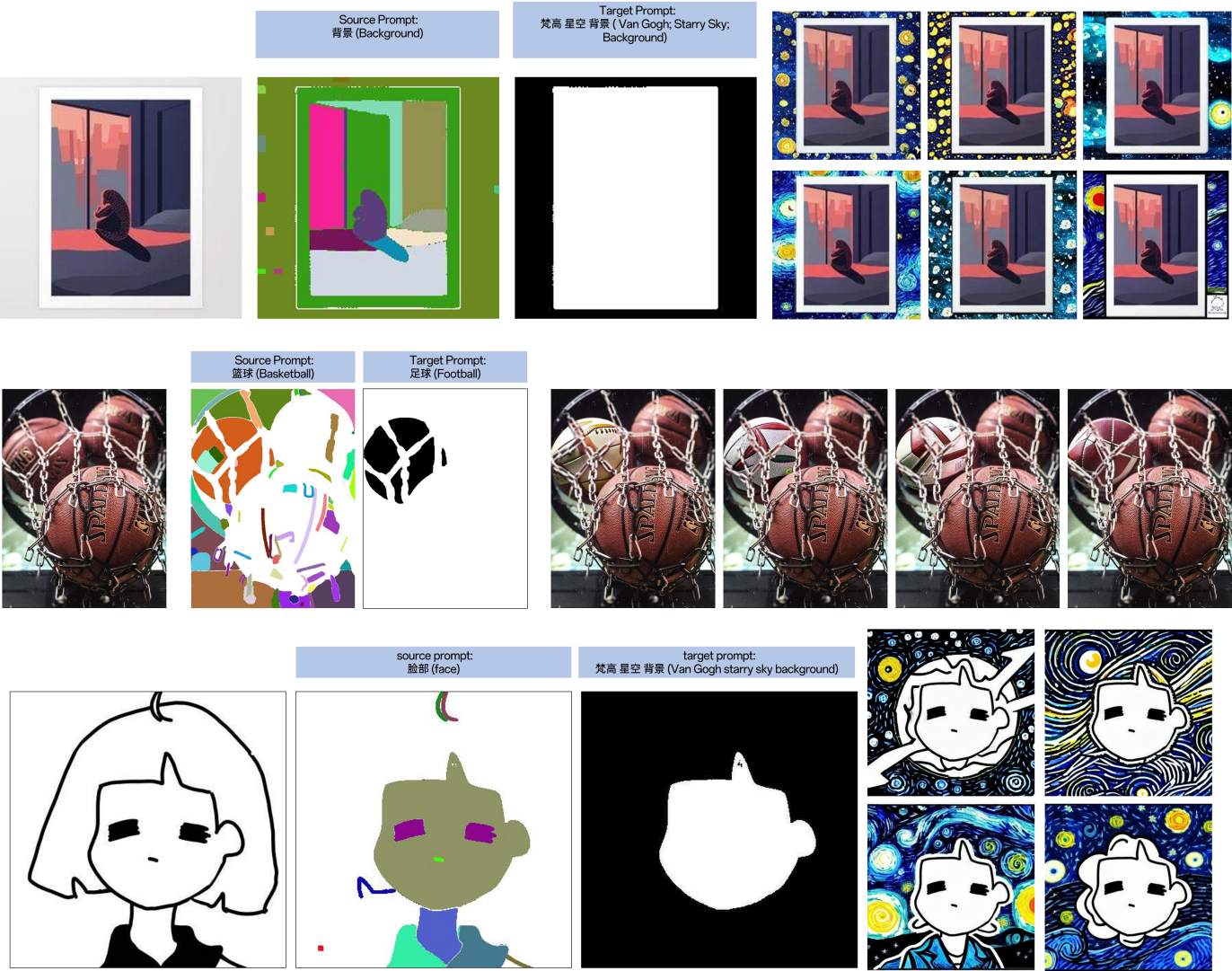

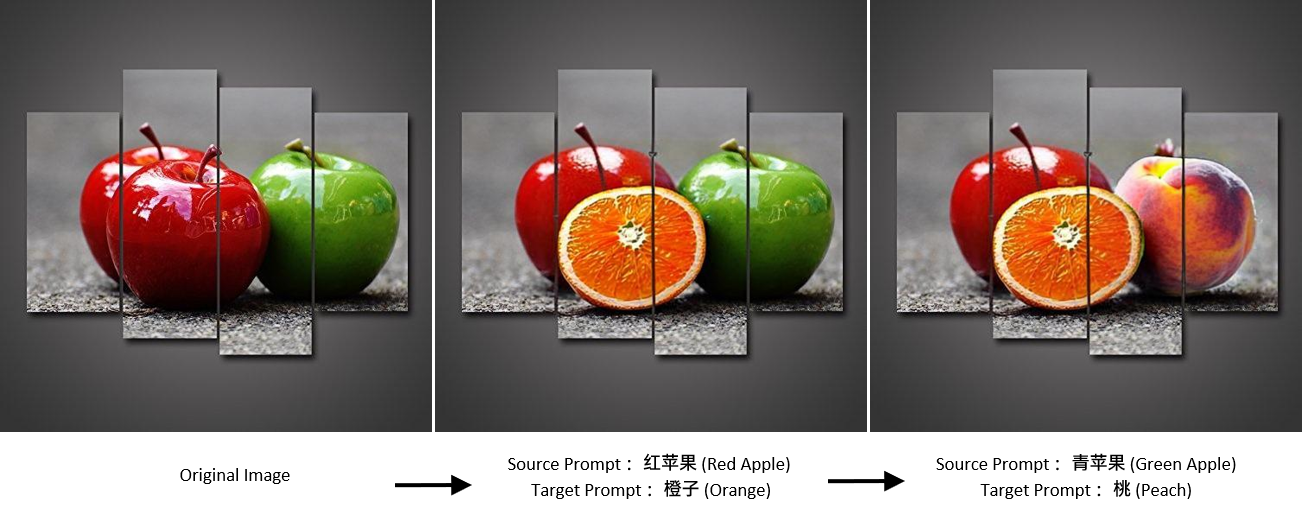

The text-guided generative system consists of the Segment Anything Model (SAM), CLIP, and Stable Diffusion (SD). First, we obtain all segments of an image with SAM. Second, we rerank these segments against the source prompt and select the target segment with the highest score computed by our CLIP. The source prompt is a text that describes an object in the image. Finally, we write a target prompt to guide Stable Diffusion in generating a new object that replaces the detected area.
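The re-ranking step can be illustrated with a minimal sketch. It assumes that each segment and the source prompt have already been embedded by CLIP, and that the score is the cosine similarity between the two embeddings (the usual CLIP scoring, but an assumption here). The function names `cosine_similarity` and `rerank_segments` and the toy three-dimensional embeddings are hypothetical and are not taken from this repository.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # a zero vector matches nothing
    return dot / (norm_a * norm_b)


def rerank_segments(
    text_embedding: Sequence[float],
    segment_embeddings: List[Sequence[float]],
) -> List[Tuple[int, float]]:
    """Return (segment_index, score) pairs sorted from best to worst match."""
    scored = [
        (idx, cosine_similarity(text_embedding, emb))
        for idx, emb in enumerate(segment_embeddings)
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy embeddings standing in for real CLIP outputs (hypothetical values).
text_embedding = [1.0, 0.0, 0.0]      # embedding of the source prompt
segment_embeddings = [
    [0.0, 1.0, 0.0],                  # segment 0: unrelated to the prompt
    [0.9, 0.1, 0.0],                  # segment 1: close to the prompt
    [0.5, 0.5, 0.5],                  # segment 2: partial match
]

ranking = rerank_segments(text_embedding, segment_embeddings)
target_index = ranking[0][0]          # index of the highest-scoring segment
```

In the full pipeline, the mask of the segment at `target_index` would then be handed to the inpainting model together with the target prompt.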

For Chinese scenarios, our CLIP is pre-trained on pairs of Chinese text and images, and our Stable Diffusion is also pre-trained on a Chinese corpus. Our work is therefore the first to achieve a text-guided generative system for Chinese scenarios. For English scenarios, we simply use open-source CLIP and Stable Diffusion.

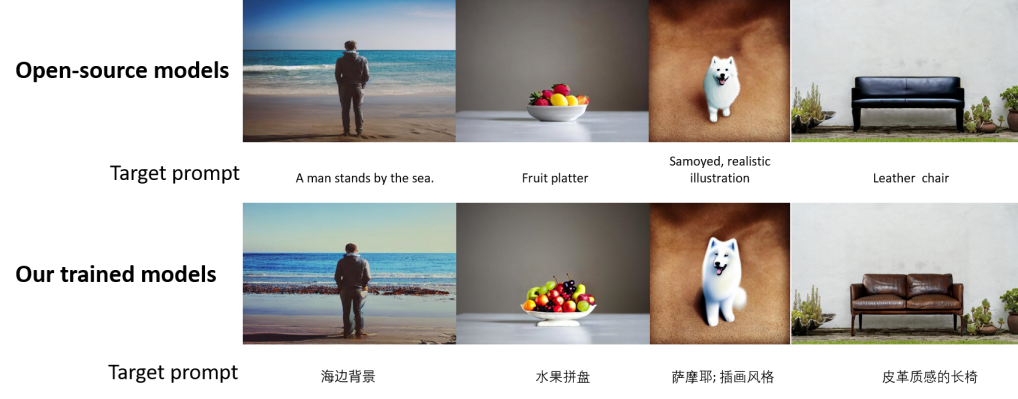

Note: In this demo, we use Taiyi-CLIP-Roberta-large-326M-Chinese and stable-diffusion-inpainting, so the target prompt should be written in English rather than Chinese. Actual results from this demo differ slightly from those shown in the README. We present a comparison between our trained models and open-source models.

Getting Started

PyTorch 1.10.1 (or later) and Python 3.8 are recommended. The required Python packages can be installed with:

pip install -r requirements.txt

For CLIP, download the Taiyi-CLIP-Roberta-large-326M-Chinese and clip-vit-large-patch14 checkpoints, and replace the checkpoint path in clip_wrapper.py. For SAM, download the vit_l checkpoint and replace the checkpoint path in segmentanything_wrapper.py. For SD, download the stable-diffusion-inpainting checkpoint and replace the checkpoint path in stablediffusion_wrapper.py. Finally, see demo.ipynb to learn how to use each module.

More Examples

One object can be edited at a time

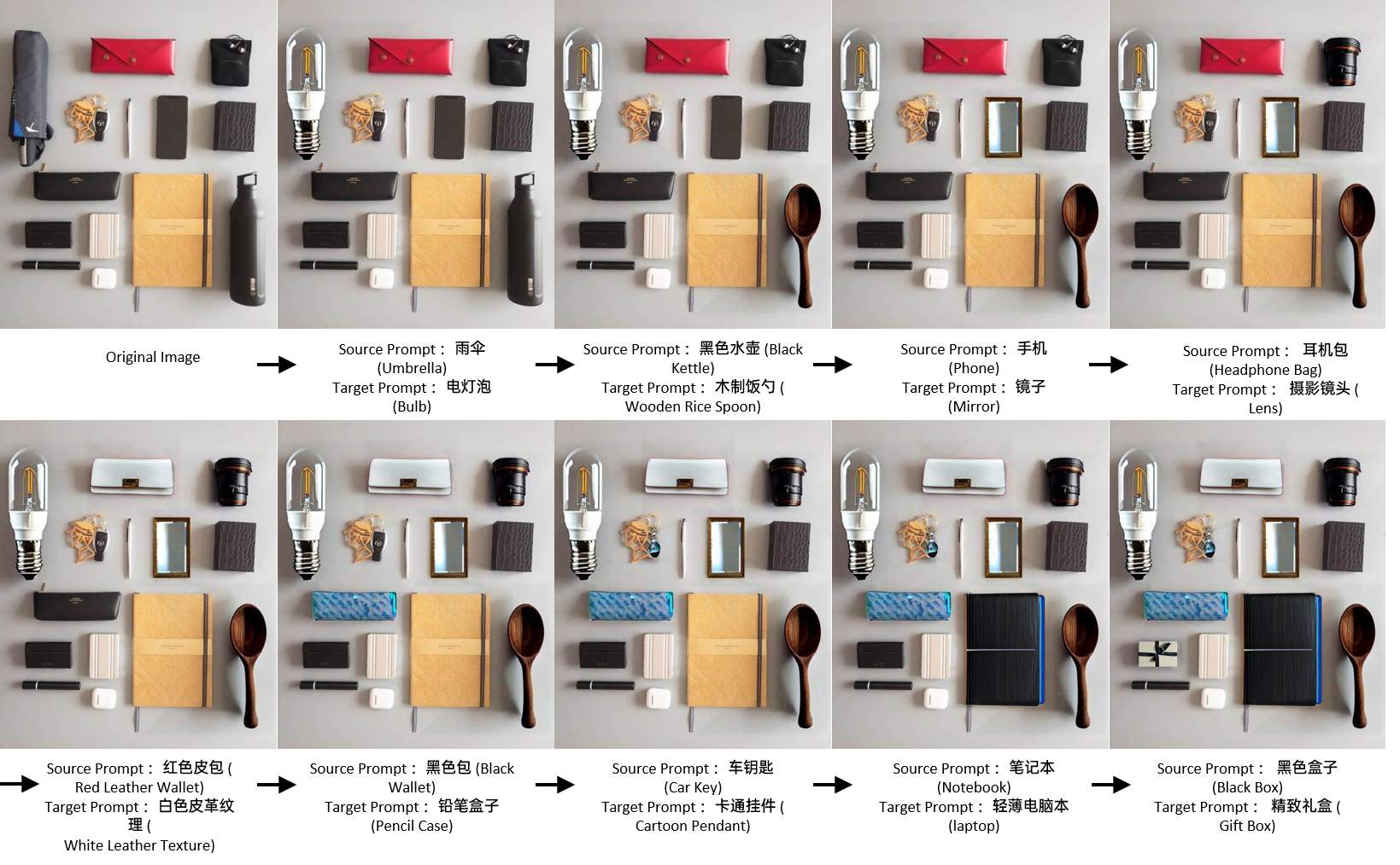

Multiple objects in an image are edited step by step
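Step-by-step editing means that the output of one edit becomes the input of the next. The sketch below shows only that control flow. `toy_edit` is a hypothetical stand-in for one full segment, select, and inpaint pass, and the "image" is a toy grid of labels rather than real pixels; none of these names come from this repository.

```python
from typing import Callable, List, Tuple

Image = List[List[str]]   # toy image: a grid of object labels
Edit = Tuple[str, str]    # (source_prompt, target_prompt)


def toy_edit(image: Image, source_prompt: str, target_prompt: str) -> Image:
    """Hypothetical single edit: replace every cell matching the source prompt."""
    return [
        [target_prompt if cell == source_prompt else cell for cell in row]
        for row in image
    ]


def edit_step_by_step(
    image: Image,
    edits: List[Edit],
    edit_fn: Callable[[Image, str, str], Image],
) -> Image:
    """Apply the edits in order, feeding each result into the next edit."""
    current = image
    for source_prompt, target_prompt in edits:
        current = edit_fn(current, source_prompt, target_prompt)
    return current


image = [["sky", "sky"], ["dog", "grass"]]
edits = [("dog", "cat"), ("grass", "sand")]
result = edit_step_by_step(image, edits, toy_edit)
```

Because each pass sees the already edited image, the order of the edits can matter when the edited regions overlap.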

Next to do

We will further improve the zero-shot performance of our system. In the future, we will add area-guided and point-guided tools.