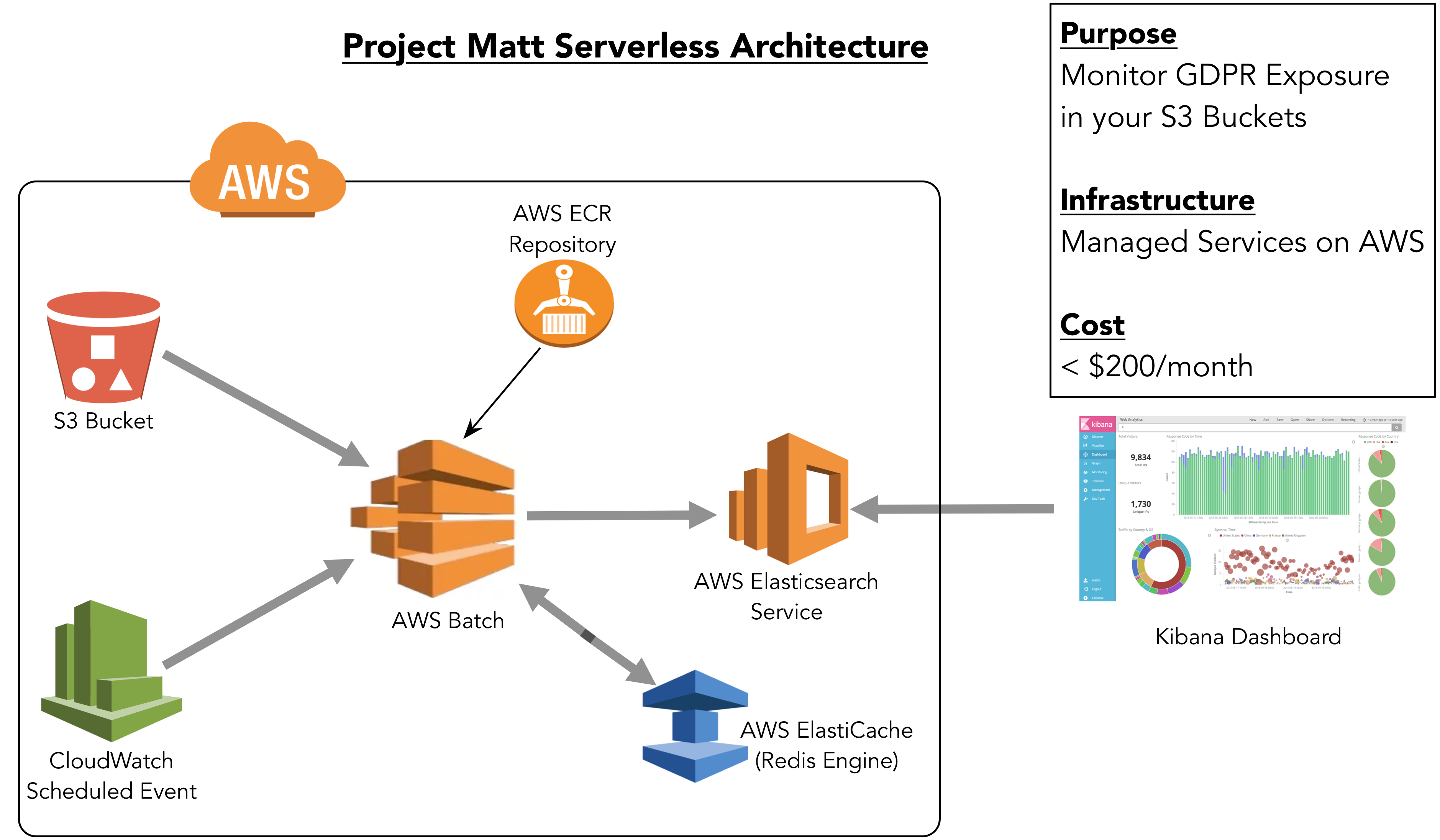

This project was created to help you scan your AWS S3 buckets for PII data. The app leverages the scale and cost model of AWS services, ensuring that you only pay for what you use.

When deployed, it scans your AWS S3 bucket (you can also set prefixes to limit the scan to specific paths), detects file types automatically, and extracts possible PII using regular expressions.

The scan summary is loaded into your Elasticsearch cluster, from which you can create Kibana dashboards to report your DLP exposure.

- Regex: Currently the app detects some key European personal data regex patterns. However, you can fork the project and add more regular expressions. You can read more on the available classifiers here.

- Keyword Matching: This is currently in development and not yet released, because the topic requires a large amount of domain expertise.

- Convolutional Neural Networks: This is in active development and will be released with the next major update. The project will use a CNN to detect sensitive or PII words in scanned files.
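As a rough illustration of the regex approach listed above, the following Python sketch counts matches per pattern in a piece of extracted text. The two patterns (`email`, `iban`) and the `scan_text` helper are hypothetical and written only for this example; they are not the classifiers shipped with Project Matt, and real PII patterns usually need stricter validation.

```python
import re

# Hypothetical patterns for illustration only; NOT Project Matt's classifiers.
PATTERNS = {
    # Simplified email address pattern.
    "email": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    # Simplified IBAN shape: country code, two check digits, then groups of
    # four characters with optional spaces. No checksum validation is done.
    "iban": re.compile(r"\b[A-Z]{2}\d{2}(?: ?[A-Z0-9]{4}){3,7}(?: ?[A-Z0-9]{1,3})?\b"),
}


def scan_text(text):
    """Return a mapping of pattern name to the number of matches in text."""
    return {name: len(pattern.findall(text)) for name, pattern in PATTERNS.items()}


if __name__ == "__main__":
    sample = "Contact jane.doe@example.com, IBAN DE89 3704 0044 0532 0130 00"
    print(scan_text(sample))
```

Keeping the patterns in a single dictionary means a fork could add a new detector by adding one entry, which is in the spirit of the extension path described for the regex classifier.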

Project Matt uses Apache Tika under the hood for file parsing. Hence, all file formats supported out-of-the-box by Apache Tika are supported, including media files.

Earlier releases could not guarantee support for parsing Parquet files. Reading Parquet files is now supported.

All compression file formats supported by Apache Tika are available.

An AWS CloudFormation template that deploys the jar app as an AWS Batch job is available.

NOTE: You can only scan S3 buckets in the same region as where your template is deployed.

Deploying Project Matt to your AWS environment involves the following stages:

- Deploy the infrastructure, i.e.

  - AWS Elasticsearch Service (comes with Kibana)

  - AWS ElastiCache (Redis engine)

- Build the Docker image located in `deploy_app/Dockerfile` and push it to your AWS ECR. You can read more about how to get this done here. Make sure you copy the link to the ECR repository.

- Deploy the CloudFormation template here and fill in the necessary parameters:

  - Target S3 Bucket: [Required]

  - Target S3 Prefix: [Optional] (MUST BE IN THE S3 BUCKET)

  - AWS ECR Repository for Project Matt container: [Required]

  - Backend Stack Name: The stack name of the Project Matt infrastructure. Required for accessing its exports.

By default, the maximum number of AWS S3 objects to be scanned is set to 2000. We assume this limit is better suited for performance.

Project Matt only performs S3 GET requests, so you pay $0.0008 in S3 charges for every job execution. For AWS Batch, you pay only for the instance type you select when deploying the template. By default, the template also uses spot instances to save cost.
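The $0.0008 figure is consistent with the default limit of 2000 objects if each object costs one GET request at $0.0004 per 1,000 requests. Both the one-request-per-object assumption and the unit price are assumptions made for this sketch, so check current S3 pricing for your region before relying on it. The helper name `s3_get_cost` is hypothetical.

```python
# Assumed S3 GET price in USD per 1,000 requests; verify against current AWS pricing.
GET_PRICE_PER_1000_USD = 0.0004

# Default maximum number of objects scanned per job, as stated in this README.
DEFAULT_MAX_OBJECTS = 2000


def s3_get_cost(num_objects=DEFAULT_MAX_OBJECTS, price_per_1000=GET_PRICE_PER_1000_USD):
    """Estimate S3 request charges, assuming one GET request per scanned object."""
    return num_objects / 1000 * price_per_1000


if __name__ == "__main__":
    print(f"Estimated S3 GET cost per job: ${s3_get_cost():.4f}")
```

This estimate covers S3 request charges only; AWS Batch instance costs depend on the instance type and spot pricing and are not modelled here.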

Cost Estimation with AWS Simple Calculator

Usage is licensed under the MIT License.