Keywords: Multimodal Generation, Text-to-Image Generation, Plug-and-Play

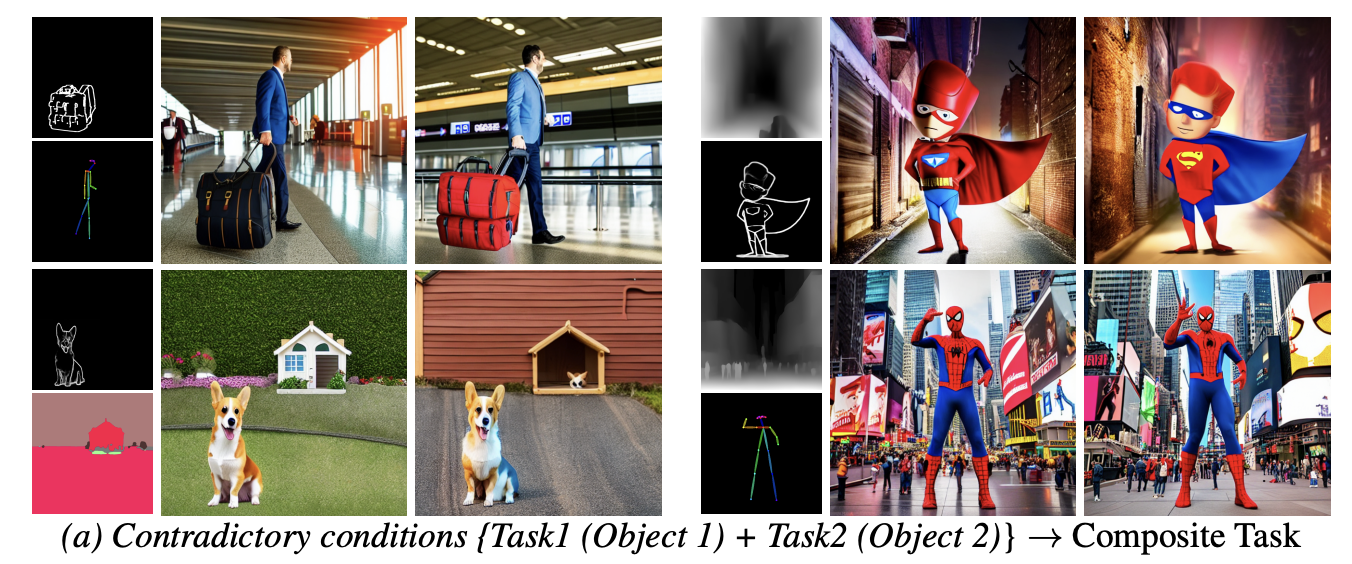

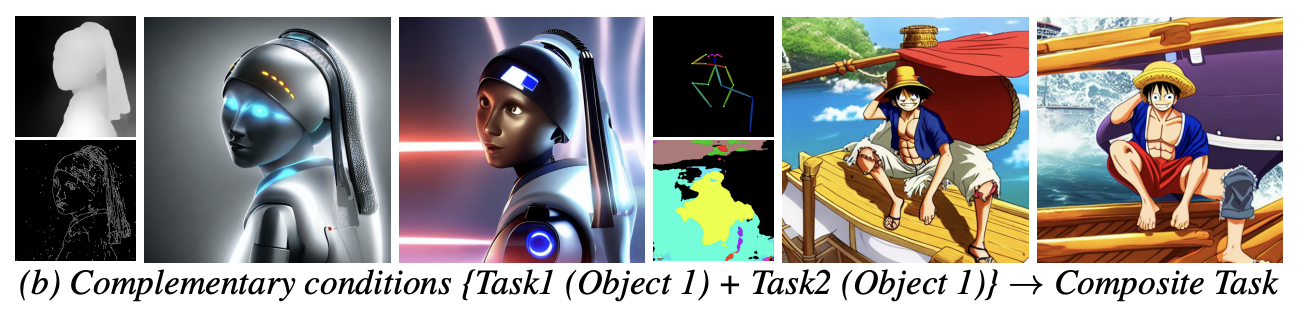

We propose MaxFusion, a plug-and-play framework for multimodal generation using text-to-image diffusion models. (a) Multimodal generation: we address the problem of conflicting spatial conditioning in text-to-image models. (b) Saliency in variance maps: we discover that the variance maps of different feature layers express the strength of conditioning.
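To make the variance-map observation concrete, here is a minimal sketch that turns a single feature map into a per-location variance map. It assumes NumPy, a (C, H, W) feature layout, and that the variance is taken over the channel axis. `variance_map` and `normalize` are hypothetical helpers for illustration, not functions from this repository.

```python
import numpy as np


def variance_map(features: np.ndarray) -> np.ndarray:
    """Per-location variance across channels of a (C, H, W) feature map.

    Assumption: variance over the channel axis gives an (H, W) map whose
    high values mark locations where the conditioning signal is strong.
    """
    if features.ndim != 3:
        raise ValueError("expected a (C, H, W) feature map")
    return features.var(axis=0)


def normalize(vmap: np.ndarray) -> np.ndarray:
    """Rescale a map to [0, 1] so it can be viewed as a saliency map."""
    lo, hi = vmap.min(), vmap.max()
    if hi == lo:
        return np.zeros_like(vmap)
    return (vmap - lo) / (hi - lo)


# Toy example: an all-zero feature map with one "active" 2x2 region.
rng = np.random.default_rng(0)
feats = np.zeros((8, 4, 4))
feats[:, 1:3, 1:3] = rng.normal(size=(8, 2, 2))  # strong response in the centre
saliency = normalize(variance_map(feats))
print(saliency.round(2))
```

Locations with no response have zero channel variance and map to 0, while the active region lights up, which is the behaviour the variance-map visualisation relies on.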

- We address the need to train on paired data for multi-task conditioning in diffusion models.

- We propose a novel variance-based feature merging strategy for diffusion models.

- Our method combines information from multiple conditions to influence the output, unlike individual models that are each limited to a single condition.

- Unlike previous solutions, our approach is easily scalable and can be added on top of off-the-shelf models.
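A minimal sketch of what variance-based merging of two conditioning branches could look like, assuming NumPy and (C, H, W) feature maps from two condition-specific branches. The function `merge_by_variance`, its `corr_threshold` parameter, and the rule used (average where the branches agree, otherwise keep the branch with the higher channel variance) are illustrative assumptions, not the released MaxFusion implementation.

```python
import numpy as np


def merge_by_variance(f1: np.ndarray, f2: np.ndarray,
                      corr_threshold: float = 0.7) -> np.ndarray:
    """Merge two (C, H, W) feature maps location by location.

    Hypothetical rule (an assumption, not the released code): where the two
    channel vectors agree (cosine similarity above `corr_threshold`) they are
    averaged; elsewhere the vector with the larger channel variance, taken
    here as the stronger condition, is kept.
    """
    if f1.shape != f2.shape:
        raise ValueError("feature maps must have the same shape")
    eps = 1e-8
    # Cosine similarity between the channel vectors at each (h, w).
    dot = (f1 * f2).sum(axis=0)
    norms = np.linalg.norm(f1, axis=0) * np.linalg.norm(f2, axis=0)
    corr = dot / (norms + eps)
    # Channel variance as a per-location measure of conditioning strength.
    v1, v2 = f1.var(axis=0), f2.var(axis=0)
    # The (H, W) masks broadcast over the channel axis of the (C, H, W) maps.
    strongest = np.where(v1 >= v2, f1, f2)
    averaged = 0.5 * (f1 + f2)
    return np.where(corr > corr_threshold, averaged, strongest)


# Usage: condition 1 is active in column 0, condition 2 in column 1.
rng = np.random.default_rng(0)
f1 = np.zeros((4, 2, 2))
f2 = np.zeros((4, 2, 2))
f1[:, :, 0] = rng.normal(size=(4, 2))
f2[:, :, 1] = rng.normal(size=(4, 2))
merged = merge_by_variance(f1, f2)
```

In this sketch, averaging where the branches agree keeps shared structure intact, while the variance test lets the stronger condition win wherever the two conditions conflict, so the merged map carries both conditions instead of either one alone.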

The conda environment can be created using

conda env create -f environment.yml

A notebook covering different demo conditions is provided in demo.ipynb.

Will be released shortly

An interactive demo can be run locally using

python gradio_maxfusion.py

This code builds on:

https://github.com/google/prompt-to-prompt/

- If you use our work, please use the following citation:

@article{nair2024maxfusion,
  title={MaxFusion: Plug\&Play Multi-Modal Generation in Text-to-Image Diffusion Models},
  author={Nair, Nithin Gopalakrishnan and Valanarasu, Jeya Maria Jose and Patel, Vishal M},
  journal={arXiv preprint arXiv:2404.09977},
  year={2024}
}