Honghui Yang1,2, Sha Zhang1,4, Di Huang1,5, Xiaoyang Wu1,3, Haoyi Zhu1,4, Tong He1*,

Shixiang Tang1, Hengshuang Zhao3, Qibo Qiu6, Binbin Lin2*, Xiaofei He2, Wanli Ouyang1

1Shanghai AI Lab, 2ZJU, 3HKU, 4USTC, 5USYD, 6Zhejiang Lab

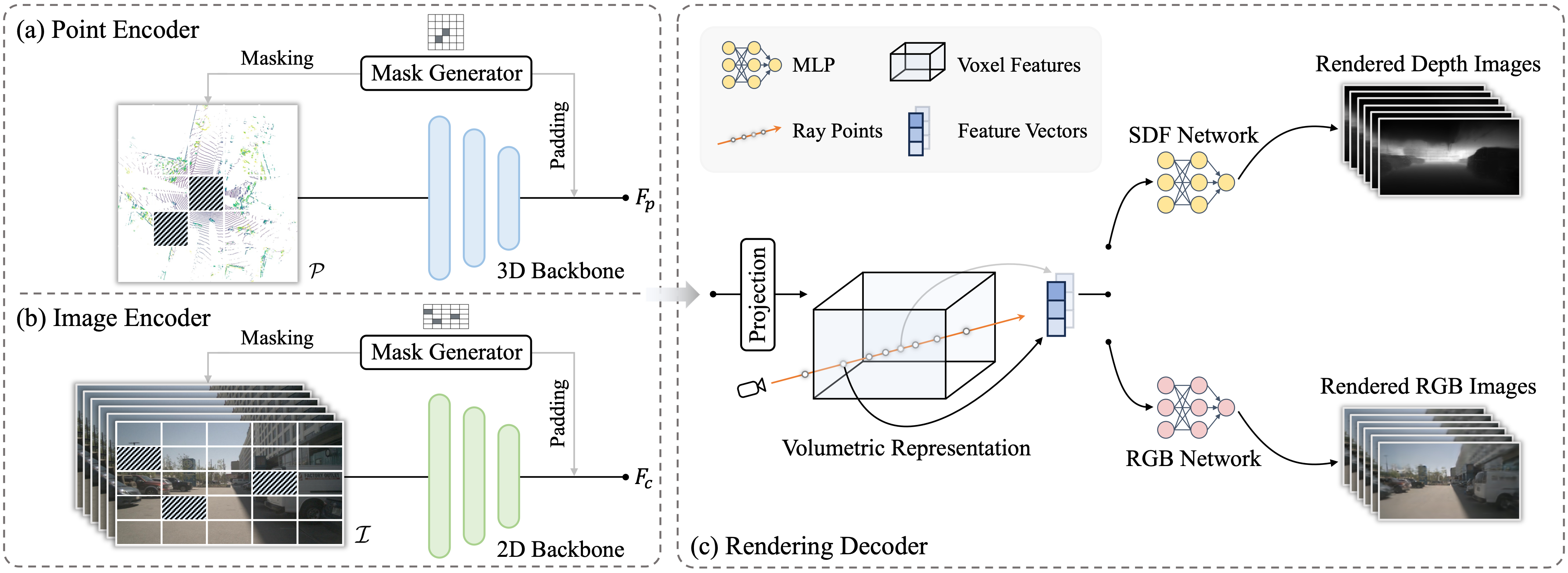

In this paper, we present UniPAD, a novel self-supervised learning paradigm that applies 3D volumetric differentiable rendering. UniPAD implicitly encodes 3D space, facilitating the reconstruction of continuous 3D shape structures and the intricate appearance characteristics of their 2D projections. Our method is flexible enough to integrate seamlessly into both 2D and 3D frameworks, allowing a more holistic comprehension of scenes.

[2024-03-16] The full code is released.

[2024-02-27] UniPAD is accepted at CVPR 2024.

[2023-11-30] The code is released. The code for indoor scenes is available here.

[2023-10-12] The paper is publicly available on arXiv.

This project is based on MMDetection3D. Set up the environment as follows.

- Install PyTorch v1.7.1 and MMDetection3D v0.17.3 following the instructions.

- Install the required environment:

```shell
conda create -n unipad python=3.7
conda activate unipad
conda install -y pytorch==1.7.1 torchvision==0.8.2 cudatoolkit=11.0 -c pytorch
pip install --no-index torch-scatter -f https://data.pyg.org/whl/torch-1.7.1+cu110.html
pip install mmcv-full==1.3.8 -f https://download.openmmlab.com/mmcv/dist/cu110/torch1.7.1/index.html
pip install mmdet==2.14.0 mmsegmentation==0.14.1 tifffile==2021.11.2 numpy==1.19.5 protobuf==3.19.4 scikit-image==0.19.2 pycocotools==2.0.0 waymo-open-dataset-tf-2-2-0 nuscenes-devkit==1.0.5 spconv-cu111 gpustat numba scipy pandas matplotlib Cython shapely loguru tqdm future fire yacs jupyterlab pybind11 tensorboardX tensorboard easydict pyyaml open3d addict pyquaternion awscli timm typing-extensions==4.7.1
git clone git@github.com:Nightmare-n/UniPAD.git
cd UniPAD
python setup.py develop --user
```
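After installation, it can help to confirm that the pinned versions actually ended up in the environment. The following is a minimal sketch, not part of the UniPAD codebase: the helper names `installed_version` and `find_mismatches` and the `EXPECTED` table are hypothetical, and the pins are simply copied from the commands above.

```python
"""Hypothetical sanity check: compare installed packages against the pins above."""

# Versions copied from the install commands in this README.
EXPECTED = {
    "torch": "1.7.1",
    "torchvision": "0.8.2",
    "mmcv-full": "1.3.8",
    "mmdet": "2.14.0",
    "mmsegmentation": "0.14.1",
    "numpy": "1.19.5",
}


def installed_version(name):
    """Return the installed version string of a distribution, or None if absent."""
    try:
        from importlib.metadata import PackageNotFoundError, version  # Python 3.8+
    except ImportError:
        # Python 3.7 (the version used in the conda env) lacks importlib.metadata.
        import pkg_resources

        try:
            return pkg_resources.get_distribution(name).version
        except pkg_resources.DistributionNotFound:
            return None
    try:
        return version(name)
    except PackageNotFoundError:
        return None


def find_mismatches(expected, lookup=installed_version):
    """Map each mismatching package to a (wanted, found) tuple."""
    mismatches = {}
    for name, want in expected.items():
        got = lookup(name)
        # Ignore local build tags such as "+cu110" when comparing.
        base = got.split("+")[0] if got else None
        if base != want:
            mismatches[name] = (want, got)
    return mismatches


if __name__ == "__main__":
    for name, (want, got) in find_mismatches(EXPECTED).items():
        print(f"{name}: expected {want}, found {got or 'not installed'}")
```

Running the script inside the `unipad` environment prints one line per package whose version differs from the pin, and prints nothing when everything matches.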

Please follow the instructions of UVTR to prepare the dataset.

You can train the model following the instructions. You can also find the pretrained models here.

```shell
# train (4 gpus)
bash ./extra_tools/dist_train_ssl.sh

# test
bash ./extra_tools/dist_test.sh
```

| Method | NDS | mAP | Model |
|---|---|---|---|
| UniPAD_C (voxel_size=0.1) | 32.9 | 32.6 | pretrain/ckpt/log |
| UniPAD_L | 55.8 | 48.1 | pretrain/ckpt |
| UniPAD_M | 56.8 | 57.0 | ckpt |

| Method | NDS | mAP | Model |
|---|---|---|---|
| UniPAD_C (voxel_size=0.075) | 47.4 | 41.5 | pretrain/ckpt |
| UniPAD_L | 70.6 | 65.0 | pretrain/ckpt |
| UniPAD_M | 73.2 | 69.9 | ckpt |

| Method | NDS | mAP |
|---|---|---|
| UniPAD_C (voxel_size=0.075) | 49.4 | 45.0 |
| UniPAD_L | 71.6 | 66.4 |
| UniPAD_M | 73.9 | 71.0 |
| UniPAD_M (TTA) | 74.8 | 72.5 |
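For readers unfamiliar with the NDS column: assuming it is the nuScenes detection score (an assumption based on the `nuscenes-devkit` dependency), it combines mAP with five mean true-positive errors, each clipped to 1. The sketch below shows that weighting only. The function name `nds` and the input numbers are illustrative and are not taken from the results above, which are reported in percent.

```python
def nds(mean_ap, tp_errors):
    """nuScenes detection score from mAP and the five mean TP errors.

    mean_ap is in [0, 1]; tp_errors maps each error name
    (mATE, mASE, mAOE, mAVE, mAAE) to its non-negative value.
    """
    # Each error is turned into a score in [0, 1]; errors above 1 count as 0.
    tp_scores = [1.0 - min(1.0, err) for err in tp_errors.values()]
    # mAP carries weight 5, each of the five TP scores carries weight 1.
    return (5.0 * mean_ap + sum(tp_scores)) / 10.0


# Illustrative inputs only (not measured results).
example_errors = {"mATE": 0.5, "mASE": 0.5, "mAOE": 0.5, "mAVE": 0.5, "mAAE": 0.5}
example_score = nds(0.5, example_errors)
```

Because mAP takes half of the total weight, a model can raise its NDS either by detecting more objects or by localizing and describing the detected objects more accurately.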

```bibtex
@inproceedings{yang2023unipad,
  title={UniPAD: A Universal Pre-training Paradigm for Autonomous Driving},
  author={Honghui Yang and Sha Zhang and Di Huang and Xiaoyang Wu and Haoyi Zhu and Tong He and Shixiang Tang and Hengshuang Zhao and Qibo Qiu and Binbin Lin and Xiaofei He and Wanli Ouyang},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2024},
}

@article{zhu2023ponderv2,
  title={PonderV2: Pave the Way for 3D Foundation Model with A Universal Pre-training Paradigm},
  author={Haoyi Zhu and Honghui Yang and Xiaoyang Wu and Di Huang and Sha Zhang and Xianglong He and Tong He and Hengshuang Zhao and Chunhua Shen and Yu Qiao and Wanli Ouyang},
  journal={arXiv preprint arXiv:2310.08586},
  year={2023}
}

@inproceedings{huang2023ponder,
  title={Ponder: Point cloud pre-training via neural rendering},
  author={Huang, Di and Peng, Sida and He, Tong and Yang, Honghui and Zhou, Xiaowei and Ouyang, Wanli},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={16089--16098},
  year={2023}
}
```

This project is mainly based on the following codebases. Thanks for their great work!