This is the repository for the code implementation, results, and dataset of the paper titled "Robust Multi-Modal Multi-LiDAR-Inertial Odometry and Mapping for Indoor Environments". The current version of the paper can be accessed here.

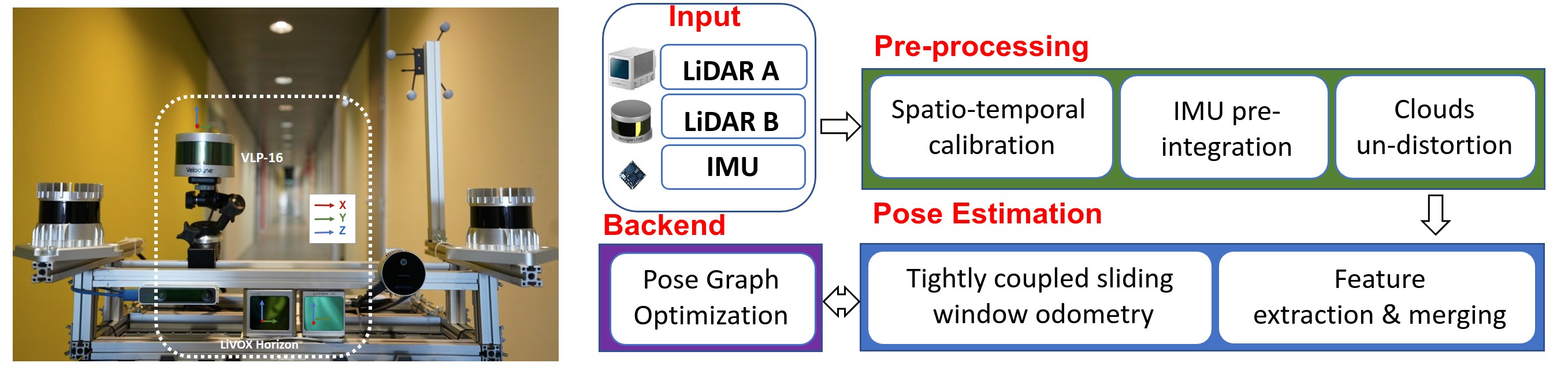

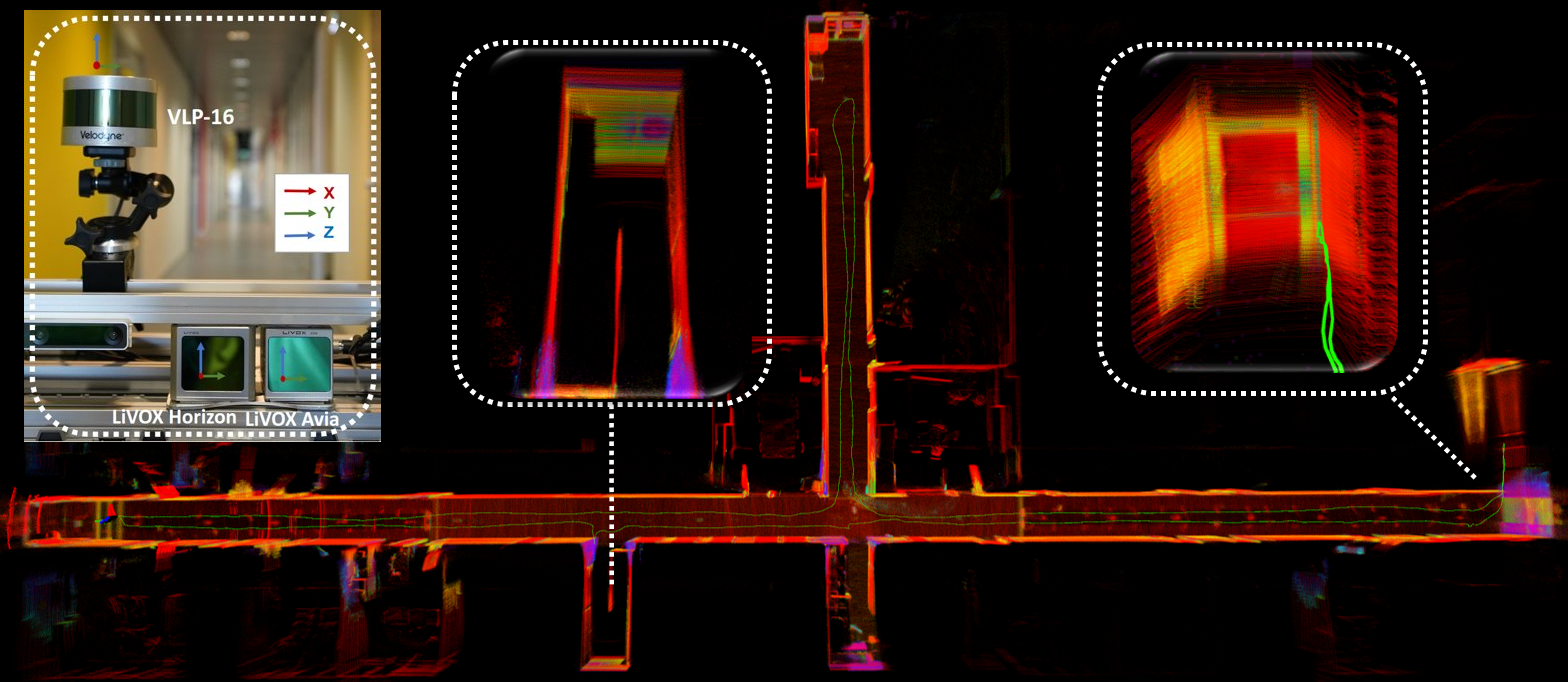

We propose a tightly coupled multi-modal multi-LiDAR-inertial SLAM system for surveying and mapping tasks. The system takes advantage of both solid-state and spinning LiDARs, as well as the built-in inertial measurement unit (IMU) of the solid-state LiDAR. First, spatial-temporal calibration modules align the timestamps and calibrate the extrinsic parameters between sensors. Then, two groups of feature points, edge points and plane points, are extracted from the LiDAR data. Next, using pre-integrated IMU data, an undistortion module is applied to the LiDAR point clouds. Finally, the undistorted point clouds are merged into a single point cloud and processed by a sliding-window-based optimization module.

- (Left) Data collection platform: the hardware used for data acquisition. The sensors used in this work are the Livox Horizon LiDAR, with its built-in IMU, and the Velodyne VLP-16 LiDAR. (Right) Pipeline of the proposed multi-modal LiDAR-inertial odometry and mapping framework. The system starts with a preprocessing module that takes the sensor inputs and performs IMU pre-integration, calibration, and undistortion. The scan registration module extracts features and sends them to a tightly coupled sliding-window odometry. Finally, a pose graph is built to maintain global consistency.

- Mapping result of the proposed system in a hall environment. Thanks to the high resolution of the solid-state LiDAR and its non-repetitive scan pattern, the map shows clear details of object surfaces.

- 2023.09.30 : Add Docker support

- 2023.04.30 : Upload multi-modal LiDAR-inertial odometry

- 2023.03.02 : Init repo
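The overview above mentions extracting two groups of features, edge points and plane points, from the LiDAR data. The Python sketch below illustrates the idea with the smoothness (curvature) measure introduced by LOAM, applied to one ordered scan line: points on sharp structures get a high score and points on flat surfaces get a low one. It is an illustration only, not this repository's implementation (a ROS package that borrows code from the projects credited at the end of this page); the function names, the neighbourhood size `k`, and both thresholds are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def curvature(scan: List[Point], i: int, k: int) -> float:
    """LOAM-style smoothness of point i using k neighbours on each side:
    c = ||sum_j (X_i - X_j)|| / (|S| * ||X_i||), with |S| = 2k."""
    xi = scan[i]
    sx = sy = sz = 0.0
    for j in range(i - k, i + k + 1):
        if j == i:
            continue
        sx += xi[0] - scan[j][0]
        sy += xi[1] - scan[j][1]
        sz += xi[2] - scan[j][2]
    range_i = math.sqrt(xi[0] ** 2 + xi[1] ** 2 + xi[2] ** 2)
    return math.sqrt(sx * sx + sy * sy + sz * sz) / (2 * k * range_i)


def extract_features(scan: List[Point], k: int = 5,
                     edge_thresh: float = 0.02,
                     plane_thresh: float = 0.005) -> Tuple[List[int], List[int]]:
    """Split one ordered scan line into edge and plane point indices.
    Thresholds are hypothetical; real systems also reject unreliable points
    and keep only the sharpest/flattest points per sub-region."""
    edges, planes = [], []
    for i in range(k, len(scan) - k):  # skip borders that lack k neighbours
        c = curvature(scan, i, k)
        if c > edge_thresh:
            edges.append(i)
        elif c < plane_thresh:
            planes.append(i)
    return edges, planes


# Synthetic scan line sweeping along two walls that meet at a corner (5, 0, 0).
scan = [(5.0, -1.0 + 0.1 * t, 0.0) for t in range(11)] + \
       [(5.0 - 0.1 * t, 0.0, 0.0) for t in range(1, 11)]
edges, planes = extract_features(scan)
print("edges:", edges)
print("planes:", planes)
```

On this synthetic two-wall scan, the points around the corner are labelled as edges and points well inside each wall as planes; points in the transition zone pass neither threshold and are simply not used as features.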
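The undistortion and merging steps from the overview can likewise be sketched in a few lines. This simplified version assumes the IMU provides a constant angular velocity `omega` over one sweep, ignores translational motion during the sweep, and assumes the VLP-16-to-Livox extrinsics `R_ext` and `t_ext` are already known from calibration; every name here is hypothetical. The proposed system instead uses pre-integrated IMU data inside its tightly coupled pipeline, so treat this only as an illustration of the geometry: with constant `omega`, the rotation from a point's capture time `t` to the end of the sweep `t_end` is `Exp(omega * (t - t_end))`, and the spinning-LiDAR points are then mapped into a common frame (assumed here to be the Livox frame) before concatenation.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def exp_so3(w: Vec3) -> Mat3:
    """Rotation matrix of rotation vector w (unit axis * angle), via Rodrigues' formula."""
    theta = math.sqrt(w[0] ** 2 + w[1] ** 2 + w[2] ** 2)
    if theta < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (v / theta for v in w)
    K = [[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]]  # skew-symmetric matrix of the axis
    K2 = [[sum(K[i][m] * K[m][j] for m in range(3)) for j in range(3)] for i in range(3)]
    s, c = math.sin(theta), 1.0 - math.cos(theta)
    return [[(1.0 if i == j else 0.0) + s * K[i][j] + c * K2[i][j] for j in range(3)]
            for i in range(3)]


def transform(R: Mat3, p: Vec3, t: Vec3 = (0.0, 0.0, 0.0)) -> Vec3:
    """Return R @ p + t."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def deskew(points: Sequence[Vec3], stamps: Sequence[float],
           t_end: float, omega: Vec3) -> List[Vec3]:
    """Re-express each point in the sensor frame at t_end, assuming a constant
    IMU angular velocity omega (rad/s) over the sweep and no translation."""
    out = []
    for p, t in zip(points, stamps):
        dt = t - t_end  # negative for points captured before the end of the sweep
        out.append(transform(exp_so3((omega[0] * dt, omega[1] * dt, omega[2] * dt)), p))
    return out


def merge(livox: Sequence[Vec3], velodyne: Sequence[Vec3],
          R_ext: Mat3, t_ext: Vec3) -> List[Vec3]:
    """Map VLP-16 points into the Livox frame with the extrinsics, then concatenate."""
    return list(livox) + [transform(R_ext, p, t_ext) for p in velodyne]


# Usage: the sensor yaws at 1 rad/s; the first point was captured 0.1 s before the sweep ended.
fixed = deskew([(1.0, 0.0, 0.0), (1.0, 0.0, 0.0)], [0.9, 1.0], t_end=1.0, omega=(0.0, 0.0, 1.0))
I3 = exp_so3((0.0, 0.0, 0.0))
cloud = merge(fixed, [(0.0, 0.0, 0.0)], I3, (0.0, 0.0, 0.1))
print(fixed)
print(len(cloud), cloud[-1])
```

The earlier point is rotated by -0.1 rad about z (the sensor turned +0.1 rad after seeing it), while the point captured at `t_end` is left unchanged. A full implementation would also compensate translation and interpolate the pre-integrated IMU states rather than assume a constant `omega`.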

- cd ~/catkin_ws/src

- git clone https://github.com/TIERS/multi-modal-loam.git

- cd multi-modal-loam

- git submodule update --init --recursive

- docker build --progress=plain . -t mm-loam

Note: We recommend running this code in a Docker container because some dependencies remain unresolved on certain Ubuntu systems. Docker containers provide a consistent, isolated environment, so the code runs the same way across different systems.

Run each of the following commands in a separate terminal:

- roscore

- rosbag play office_2022-04-21-19-14-23.bag --clock

- docker run --rm -it --network=host mm-loam /bin/bash -c "roslaunch mm_loam mm_lio_full.launch"

- rviz -d mm-loam/config/backlio_viz.rviz

TODO

Indoor environments:

Outdoor environments:

- Street (27.7 GB): uploading

- Forest (44 GB): uploading

More datasets can be found in our previous work:

- Hardware and mapping results in a long corridor environment. Our proposed method shows robust performance in long corridors and survives narrow spaces where 180° U-turns occur.

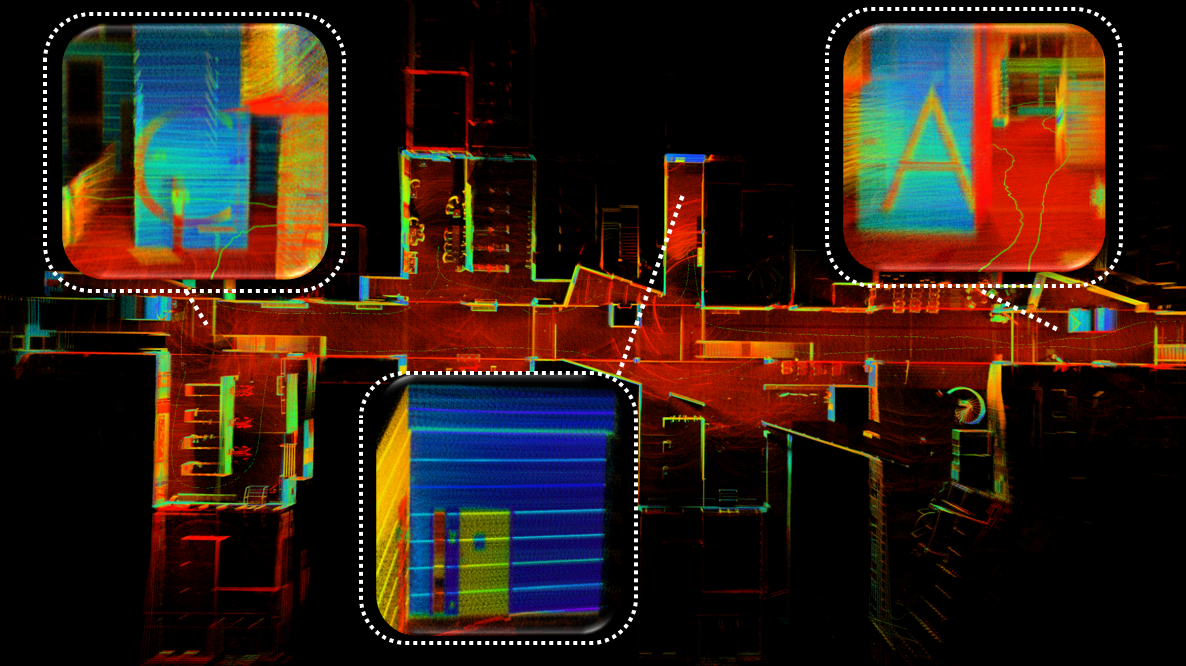

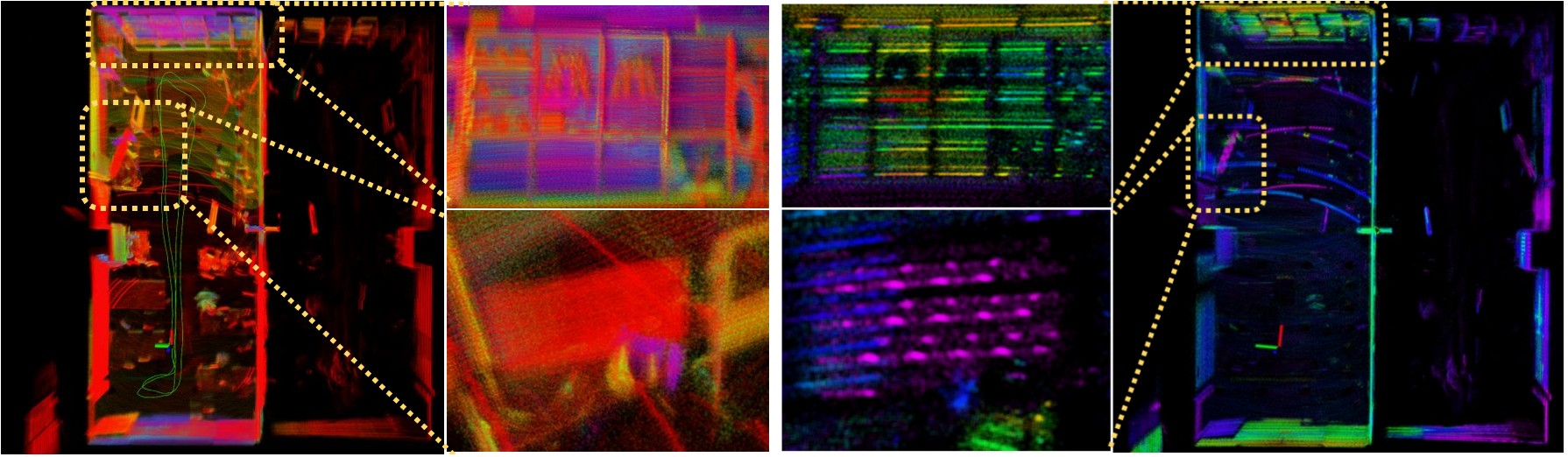

- Qualitative comparison of map details in the office room dataset sequence. The color of the points represents the reflectivity provided by the raw sensor data. The point size is 0.01, and the transparency is set to 0.05. The middle two columns show zoomed-in views of the wall (top) and the TV (bottom).

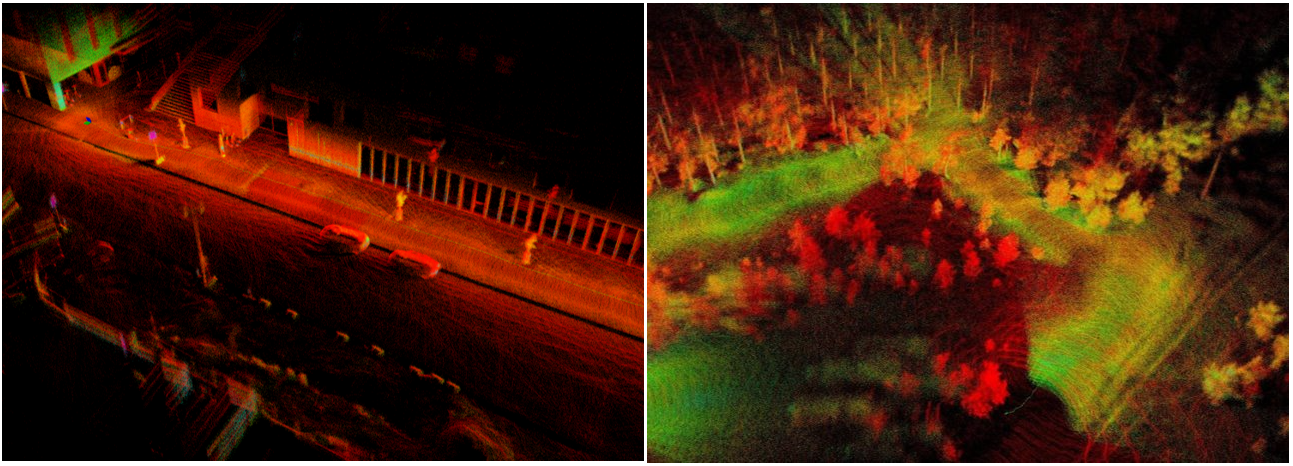

- Mapping results of the proposed method outdoors: urban street (left) and forest environment (right).

This repo borrows code from the following excellent repositories and retains the relevant copyright notices in the code to give proper credit. We are grateful for the work done by the creators of the original repositories, which made this work possible, and we encourage users to read the material in those repositories.

- LIO-LIVOX: A Robust LiDAR-Inertial Odometry for Livox LiDAR

- LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain

- LIO-MAPPING: A Tightly Coupled 3D Lidar and Inertial Odometry and Mapping Approach