Outdoor inverse rendering from a single image using multiview self-supervision

Y. Yu and W. Smith, "Outdoor inverse rendering from a single image using multiview self-supervision," in IEEE Transactions on Pattern Analysis & Machine Intelligence.

Abstract

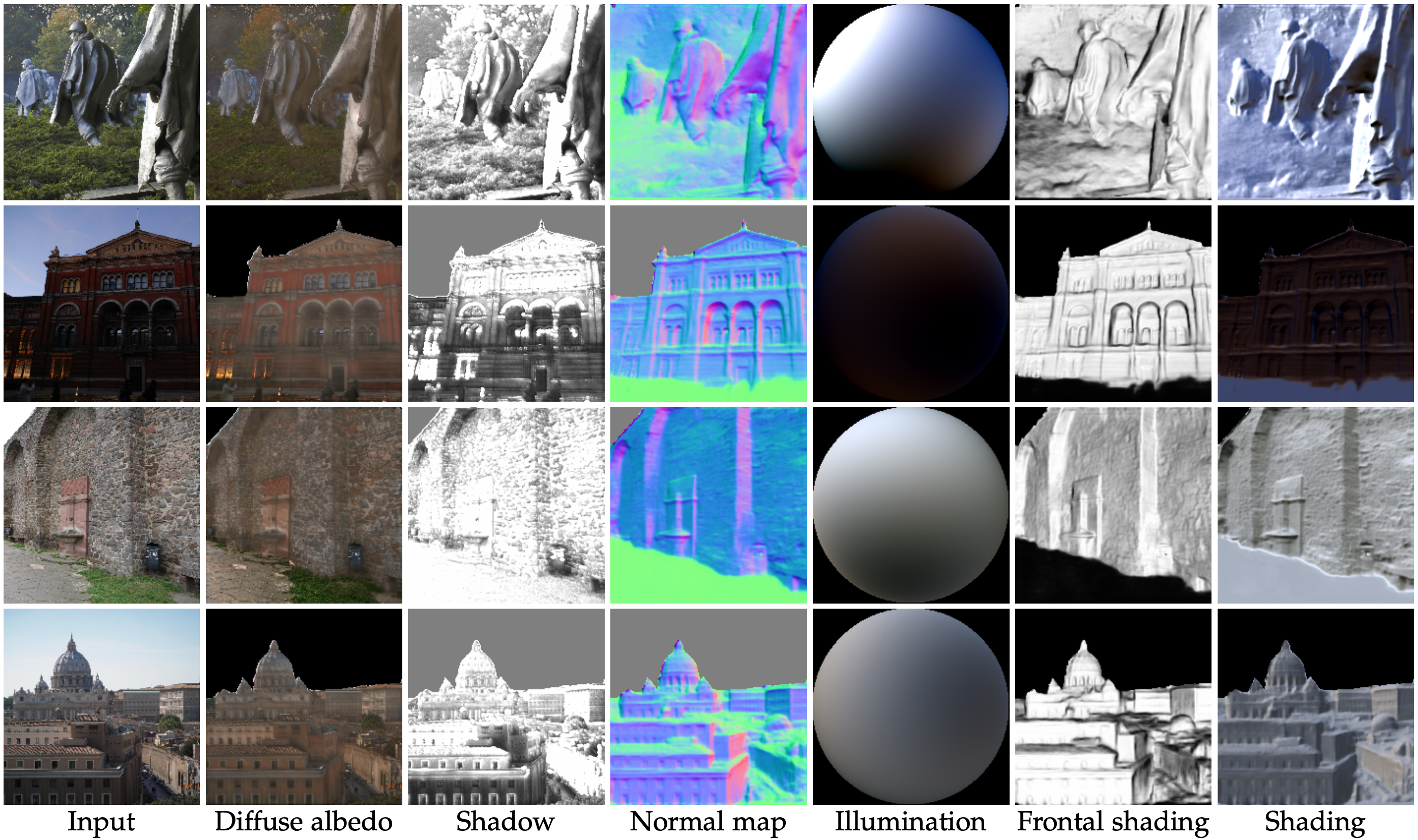

In this paper we show how to perform scene-level inverse rendering to recover shape, reflectance and lighting from a single, uncontrolled image using a fully convolutional neural network. The network takes an RGB image as input and regresses albedo, shadow and normal maps, from which we infer least-squares optimal spherical harmonic lighting coefficients. Our network is trained on large, uncontrolled multiview and timelapse image collections without ground truth. By incorporating a differentiable renderer, our network can learn from self-supervision. Since the problem is ill-posed, we introduce additional supervision. Our key insight is to perform offline multiview stereo (MVS) on images containing rich illumination variation. From the MVS pose and depth maps, we can cross-project between overlapping views such that Siamese training can be used to ensure consistent estimation of photometric invariants. MVS depth also provides direct coarse supervision for normal map estimation. We believe this is the first attempt to use MVS supervision for learning inverse rendering. In addition, we learn a statistical natural illumination prior. We evaluate performance on inverse rendering, normal map estimation and intrinsic image decomposition benchmarks.
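The least-squares lighting step mentioned above can be illustrated with a short NumPy sketch. It assumes a Lambertian image model, image = albedo × shadow × (SH basis(normal) · L), with second-order (9-coefficient) spherical harmonics fitted independently per colour channel and an unnormalised SH basis. The function names, the basis convention and the way shadow enters the model are illustrative assumptions, not the repository's code.

```python
import numpy as np

def sh_basis(normals):
    """Second-order SH basis (9 terms) at unit normals of shape (N, 3).

    Assumption: constant normalisation factors are omitted, so the fitted
    coefficients absorb them; the paper's exact convention may differ.
    """
    x, y, z = normals[:, 0], normals[:, 1], normals[:, 2]
    return np.stack([
        np.ones_like(x), x, y, z,            # orders 0 and 1
        x * y, x * z, y * z,                 # order 2, cross terms
        x ** 2 - y ** 2, 3 * z ** 2 - 1,     # order 2, remaining terms
    ], axis=1)                               # (N, 9)

def estimate_sh_lighting(image, albedo, shadow, normals, mask):
    """Least-squares SH lighting from per-pixel predictions (hypothetical helper).

    image, albedo: (H, W, 3); shadow: (H, W); normals: (H, W, 3) unit vectors;
    mask: (H, W) bool, True for pixels used in the fit (e.g. non-sky pixels).
    Returns a (9, 3) array of coefficients, one column per colour channel.
    """
    basis = sh_basis(normals[mask])          # (N, 9)
    coeffs = np.zeros((9, 3))
    for c in range(3):
        # Assumed model I_c = albedo_c * shadow * (basis @ L_c) is linear in L_c,
        # so each channel is an ordinary linear least-squares problem.
        weight = (albedo[..., c] * shadow)[mask]        # (N,)
        A = basis * weight[:, None]                     # (N, 9)
        b = image[..., c][mask]                         # (N,)
        coeffs[:, c], *_ = np.linalg.lstsq(A, b, rcond=None)
    return coeffs
```

Because the image is linear in the lighting coefficients once albedo, shadow and normals are fixed, the optimal lighting has a closed-form solution, which is what makes it cheap to compute inside a training loop.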

Evaluation

Dependencies

To run our evaluation code, please create an environment with the following dependencies:

- tensorflow 1.12.0

- python 3.6

- skimage (scikit-image)

- cv2 (OpenCV Python bindings)

- numpy
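A quick way to confirm that the listed packages can be imported is sketched below using only the standard library. Note that skimage and cv2 are import names; the corresponding pip packages are usually scikit-image and opencv-python. The helper name is hypothetical, and it only checks that each module can be found, not that the pinned versions (e.g. tensorflow 1.12.0) are installed.

```python
import importlib.util
import sys

# Import names of the dependencies listed above.
REQUIRED_MODULES = ["tensorflow", "skimage", "cv2", "numpy"]

def missing_modules(names):
    """Return the module names that cannot be located on sys.path."""
    return [name for name in names if importlib.util.find_spec(name) is None]

if __name__ == "__main__":
    print("Python", sys.version.split()[0], "(the README lists 3.6)")
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All listed dependencies are importable.")
```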

Pretrained model

- Download our pretrained model from here.

- Untar the downloaded file to obtain three model folders: model_ckpt, iiw_model_ckpt and diode_model_ckpt.

- Place these three folders in the repository root, so that the layout looks like this:

InverseRenderNet_v2
├── README.md
├── test.py
├── ...
├── model_ckpt
│   ├── model.ckpt.meta
│   ├── model.ckpt.index
│   └── ...
├── iiw_model_ckpt
│   ├── model.ckpt.meta
│   ├── model.ckpt.index
│   └── ...
└── diode_model_ckpt
    ├── model.ckpt.meta
    ├── model.ckpt.index
    └── ...
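To catch a misplaced or partially extracted checkpoint before running any test script, the layout above can be verified with a small standard-library sketch. It checks only the folder names and the two file names shown in the tree; the function name is hypothetical, and other checkpoint files (elided as "..." above) are not checked.

```python
from pathlib import Path

# Folder and file names taken from the directory tree above.
MODEL_DIRS = ["model_ckpt", "iiw_model_ckpt", "diode_model_ckpt"]
REQUIRED_FILES = ["model.ckpt.meta", "model.ckpt.index"]

def missing_checkpoint_files(repo_root):
    """List expected checkpoint files (relative paths) absent under repo_root."""
    root = Path(repo_root)
    return [
        str(Path(d) / f)
        for d in MODEL_DIRS
        for f in REQUIRED_FILES
        if not (root / d / f).is_file()
    ]

if __name__ == "__main__":
    problems = missing_checkpoint_files(".")
    print("Missing:", problems if problems else "none")
```

Run it from the repository root; an empty list means all three model folders are in place.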

Test on demo image

You can run inverse rendering on an arbitrary RGB image using our pretrained model. To run the demo code, you need to specify the path to the pretrained model, the path to the RGB image and a corresponding mask that masks out the sky in the image. The mask can be generated by PSPNet, available at https://github.com/hszhao/PSPNet. The inverse rendering results are saved to the output folder named by your argument.
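PSPNet produces a per-pixel class label map, which then has to be turned into a binary sky mask. A minimal NumPy sketch follows, assuming that non-sky pixels are kept (255) and sky pixels are zeroed; check the mask used by run_test_demo.sh for the exact convention test.py expects. The sky class id depends on the label set the PSPNet model was trained on, so it is passed in as a parameter rather than hard-coded, and the function name is hypothetical.

```python
import numpy as np

def sky_mask_from_labels(label_map, sky_ids):
    """Binary mask: 255 on non-sky pixels, 0 on sky pixels.

    label_map: (H, W) integer array of per-pixel class ids from a semantic
    segmentation network such as PSPNet.
    sky_ids: class id (or list of ids) meaning "sky" in that network's label
    set; an assumption to verify against the model's class list.
    """
    sky = np.isin(label_map, np.atleast_1d(sky_ids))
    return np.where(sky, 0, 255).astype(np.uint8)
```

The resulting uint8 array can be written to disk with, for example, cv2.imwrite and passed as the mask path.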

To run inference on the provided demo image:

bash run_test_demo.sh

Besides the provided demo image, you can test your own images by setting IMAGE_PATH and MASK_PATH in run_test_demo.sh. The output folder is specified by RESULTS_DIR and defaults to test_results.

Test on IIW

- Download the IIW dataset from http://opensurfaces.cs.cornell.edu/publications/intrinsic/#download

- Make sure IMAGES_DIR in run_test_iiw.sh points to the path of the IIW data, then run:

bash run_test_iiw.sh

Results will be saved to test_iiw.

Test on DIODE

- Download the dataset from https://diode-dataset.org/

- Replace ${IMAGES_DIR} defined in run_test_diode.sh with the path to the DIODE data, then run:

bash run_test_diode.sh

Results will be saved to test_diode.
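Predicted normal maps are commonly scored against ground truth by the per-pixel angle between the two normals. The NumPy sketch below shows that computation; it is an assumption about a typical metric and not necessarily what run_test_diode.sh reports. The function name and the optional validity mask are hypothetical.

```python
import numpy as np

def angular_error_deg(pred, gt, valid=None):
    """Per-pixel angle in degrees between two (H, W, 3) normal maps.

    Both maps are renormalised to unit length. `valid` is an optional (H, W)
    bool mask of pixels with usable ground truth; zero-length normals give
    NaN, so they should be excluded through this mask.
    Returns a 1-D array of errors.
    """
    pred = pred / np.linalg.norm(pred, axis=-1, keepdims=True)
    gt = gt / np.linalg.norm(gt, axis=-1, keepdims=True)
    # Clip guards arccos against dot products slightly outside [-1, 1].
    cos = np.clip(np.sum(pred * gt, axis=-1), -1.0, 1.0)
    err = np.degrees(np.arccos(cos))
    return err[valid] if valid is not None else err.ravel()
```

Summary statistics such as np.mean(err) and np.median(err) can then be reported over the returned errors.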

Citation

If you use the model or the code in your research, please cite the following paper:

@article{yu2021outdoor,
  title={Outdoor inverse rendering from a single image using multiview self-supervision},
  author={Yu, Ye and Smith, William A. P.},
  journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},
  note={to appear},
  year={2021}
}