The model, data, and code for the paper: SeeClick: Harnessing GUI Grounding for Advanced Visual GUI Agents

Release Plans:

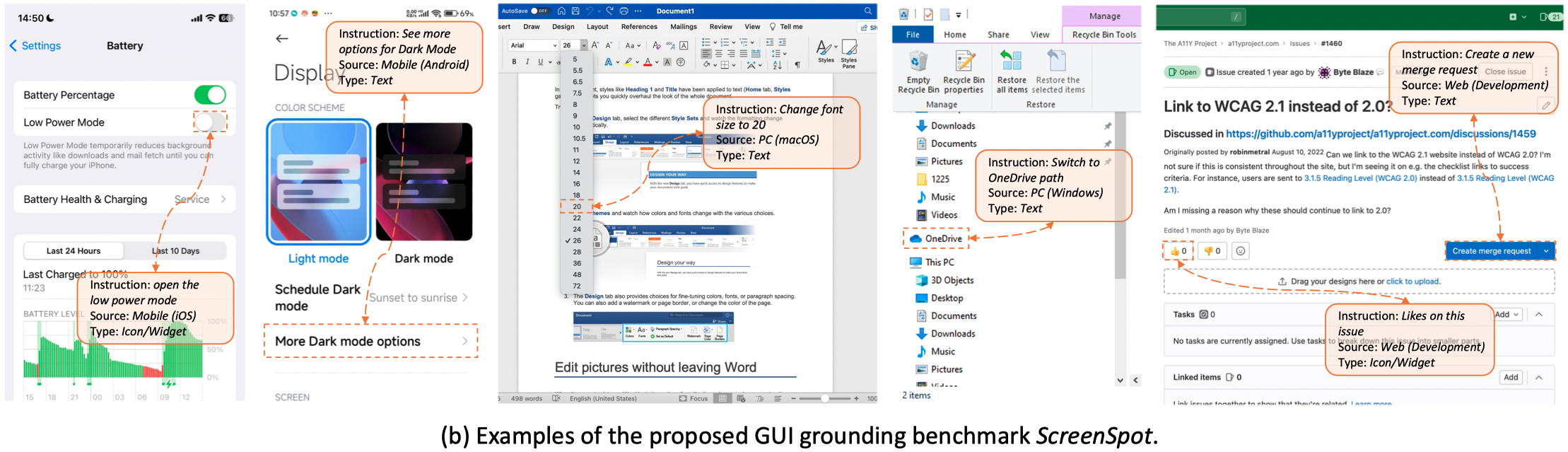

- GUI grounding benchmark: ScreenSpot
- Data for the GUI grounding pre-training of SeeClick
- Inference code & model checkpoint
- Other code and resources

News: We release data and code for fine-tuning on all three downstream agent tasks.

ScreenSpot is an evaluation benchmark for GUI grounding, comprising over 1200 instructions from iOS, Android, macOS, Windows and Web environments, along with annotated element types (Text or Icon/Widget). See details and more examples in our paper.

Download the images and annotations of ScreenSpot (or download with Google Drive).

Each test sample contains:

- `img_filename`: the interface screenshot file
- `instruction`: human instruction
- `bbox`: the bounding box of the target element corresponding to the instruction
- `data_type`: "icon"/"text", indicates the type of the target element
- `data_souce`: interface platform, including iOS, Android, macOS, Windows and Web (Gitlab, Shop, Forum and Tool)
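As a rough illustration of how these fields might be consumed, the sketch below tallies samples by `data_type`. It assumes the annotations load as a list of dictionaries keyed by the field names above. The sample values and the `count_by_type` helper are hypothetical, and the `bbox` numbers are placeholders rather than real annotations.

```python
from collections import Counter

# Hypothetical samples for illustration only; real annotations come from the
# ScreenSpot download. Field names follow the list above.
samples = [
    {"img_filename": "a.png", "instruction": "add an event",
     "bbox": [10, 20, 30, 40], "data_type": "icon"},
    {"img_filename": "b.png", "instruction": "switch to Year",
     "bbox": [50, 60, 70, 80], "data_type": "text"},
    {"img_filename": "c.png", "instruction": "search for events",
     "bbox": [90, 10, 20, 20], "data_type": "icon"},
]

REQUIRED_FIELDS = ("img_filename", "instruction", "bbox", "data_type")


def count_by_type(items):
    """Count samples per data_type, checking that the required fields exist."""
    counts = Counter()
    for item in items:
        missing = [field for field in REQUIRED_FIELDS if field not in item]
        if missing:
            raise KeyError(f"sample is missing fields: {missing}")
        counts[item["data_type"]] += 1
    return counts


type_counts = count_by_type(samples)
print(dict(type_counts))
```

Checking for missing fields up front makes a malformed annotation fail loudly instead of silently skewing per-type statistics.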

| LVLMs | Model Size | GUI Specific | Mobile Text | Mobile Icon/Widget | Desktop Text | Desktop Icon/Widget | Web Text | Web Icon/Widget | Average |
|---|---|---|---|---|---|---|---|---|---|
| MiniGPT-v2 | 7B | ❌ | 8.4% | 6.6% | 6.2% | 2.9% | 6.5% | 3.4% | 5.7% |
| Qwen-VL | 9.6B | ❌ | 9.5% | 4.8% | 5.7% | 5.0% | 3.5% | 2.4% | 5.2% |
| GPT-4V | - | ❌ | 22.6% | 24.5% | 20.2% | 11.8% | 9.2% | 8.8% | 16.2% |
| Fuyu | 8B | ✅ | 41.0% | 1.3% | 33.0% | 3.6% | 33.9% | 4.4% | 19.5% |
| CogAgent | 18B | ✅ | 67.0% | 24.0% | 74.2% | 20.0% | 70.4% | 28.6% | 47.4% |
| SeeClick | 9.6B | ✅ | 78.0% | 52.0% | 72.2% | 30.0% | 55.7% | 32.5% | 53.4% |
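The Average column appears consistent with an unweighted mean of the six per-category scores. The source does not state this explicitly, so treat it as an observation rather than the documented metric. A quick check for the SeeClick row:

```python
# Per-category scores for SeeClick, copied from the table above (percent).
seeclick_scores = [78.0, 52.0, 72.2, 30.0, 55.7, 32.5]

# Assumption: "Average" is the plain mean over the six category columns.
seeclick_average = sum(seeclick_scores) / len(seeclick_scores)
print(f"{seeclick_average:.1f}%")
```

The result rounds to the 53.4% reported in the table.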

Check data for the GUI grounding pre-training datasets, including the first open-source large-scale web GUI grounding corpus, collected from Common Crawl.

SeeClick is built on Qwen-VL and is compatible with its Transformers 🤗 inference code.

All you need is a few lines of code, as in the example below.

Before running, set up the environment and install the required packages.

```bash
pip install -r requirements.txt
```

Then,

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer
from transformers.generation import GenerationConfig

# The tokenizer and generation config are loaded from the base Qwen-VL-Chat model.
tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen-VL-Chat", trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained("SeeClick-ckpt-dir", device_map="cuda", trust_remote_code=True, bf16=True).eval()
model.generation_config = GenerationConfig.from_pretrained("Qwen/Qwen-VL-Chat", trust_remote_code=True)

img_path = "assets/test_img.png"
prompt = "In this UI screenshot, what is the position of the element corresponding to the command \"{}\" (with point)?"
# prompt = "In this UI screenshot, what is the position of the element corresponding to the command \"{}\" (with bbox)?"  # Use this prompt for generating bounding box

# Example commands; only the last assignment takes effect, so keep the one you want.
ref = "add an event"  # response (0.17,0.06)
ref = "switch to Year"  # response (0.59,0.06)
ref = "search for events"  # response (0.82,0.06)

query = tokenizer.from_list_format([
    {'image': img_path},  # Either a local path or a URL
    {'text': prompt.format(ref)},
])
response, history = model.chat(tokenizer, query=query, history=None)
print(response)
```

The SeeClick checkpoint can be downloaded from Hugging Face.
Replace `SeeClick-ckpt-dir` with the actual checkpoint directory.

The prediction output is either a point (x, y) or a bounding box (left, top, right, bottom).
Each value is a decimal in [0, 1] giving the ratio of the corresponding position to the width or height of the image.

We recommend using point for prediction because SeeClick is mainly trained for predicting click points on GUIs.
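To act on a predicted point, the normalized values need to be mapped back to pixels. The following is a minimal sketch, assuming the response contains a `(x,y)` pair formatted like the example responses above. The `parse_point` and `to_pixels` helpers and the 1000x500 image size are hypothetical, not part of the SeeClick code.

```python
import re

# Matches a pair such as "(0.17,0.06)", allowing optional spaces.
POINT_PATTERN = re.compile(r"\(\s*(\d*\.?\d+)\s*,\s*(\d*\.?\d+)\s*\)")


def parse_point(response):
    """Return the normalized (x, y) found in the response, or None if absent."""
    match = POINT_PATTERN.search(response)
    if match is None:
        return None
    return float(match.group(1)), float(match.group(2))


def to_pixels(point, width, height):
    """Scale a normalized point by the image width and height."""
    x, y = point
    return round(x * width), round(y * height)


point = parse_point("(0.17,0.06)")
pixel = to_pixels(point, width=1000, height=500)  # hypothetical image size
print(point, pixel)
```

Returning `None` for an unparseable response lets the caller decide whether to retry or count the prediction as a miss.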

Thanks to Qwen-VL for their powerful model and wonderful open-sourced work.

Check here for details of training and testing on the three downstream agent tasks, which also serves as a guideline for fine-tuning SeeClick.

```bash
bash finetune/finetune_lora_ds.sh --save-name SeeClick_test --max-length 704 --micro-batch-size 4 --save-interval 500 \
    --train-epochs 10 --nproc-per-node 2 --data-path xxxx/data_sft.json --learning-rate 3e-5 \
    --gradient-accumulation-steps 8 --qwen-ckpt xxxx/Qwen-VL-Chat --pretrain-ckpt xxxx/SeeClick-pretrain \
    --save-path xxxx/checkpoint_qwen
```

- `data-path`: generated SFT data; the format can be found here
- `qwen-ckpt`: original Qwen-VL checkpoint path, used for loading the tokenizer
- `pretrain-ckpt`: base model for fine-tuning, e.g. SeeClick-pretrain or Qwen-VL
- `save-path`: directory to save training checkpoints
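When adapting the command to different hardware, it can help to keep the effective global batch size in mind. The arithmetic below assumes the flags carry their usual data-parallel meaning (one node, one process per GPU, standard gradient accumulation); this is an assumption, and the script itself is the authority on how they are used.

```python
# Values taken from the example command above.
micro_batch_size = 4             # --micro-batch-size
nproc_per_node = 2               # --nproc-per-node
gradient_accumulation_steps = 8  # --gradient-accumulation-steps

# Assumption: samples per optimizer step = per-process batch * processes * accumulation steps.
effective_batch_size = micro_batch_size * nproc_per_node * gradient_accumulation_steps
print(effective_batch_size)
```

Under these assumptions, halving the number of processes while doubling the accumulation steps would keep the same effective batch size.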

The fine-tuning scripts are similar to Qwen-VL's, except that we use LoRA to fine-tune customized parameters, as in finetune/finetune.py lines 315-327.

This script fine-tunes the pre-trained LVLM with LoRA and multi-GPU training; for more options such as full fine-tuning, Q-LoRA and single-GPU training, please refer to Qwen-VL.

```bibtex
@misc{cheng2024seeclick,
      title={SeeClick: Harnessing GUI Grounding for Advanced Visual GUI Agents},
      author={Kanzhi Cheng and Qiushi Sun and Yougang Chu and Fangzhi Xu and Yantao Li and Jianbing Zhang and Zhiyong Wu},
      year={2024},
      eprint={2401.10935},
      archivePrefix={arXiv},
      primaryClass={cs.HC}
}
```