This repo contains an implementation of the following AutoEncoders:

Vanilla AutoEncoders - AE:

The most basic autoencoder simply maps input data points through a bottleneck layer whose dimensionality is smaller than that of the input.
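A minimal PyTorch sketch of the bottleneck idea, not the repository's actual model. The class name `TinyAE`, the layer sizes, and the 784-dimensional input (a flattened 28×28 image) are assumptions chosen for illustration.

```python
import torch
from torch import nn


class TinyAE(nn.Module):
    """Hypothetical bottleneck autoencoder; all sizes are illustrative assumptions."""

    def __init__(self, input_dim: int = 784, embedding_dim: int = 32):
        super().__init__()
        # Encoder compresses the input down to the bottleneck size.
        self.encoder = nn.Sequential(
            nn.Linear(input_dim, 256), nn.ReLU(), nn.Linear(256, embedding_dim)
        )
        # Decoder maps the bottleneck code back to input space.
        self.decoder = nn.Sequential(
            nn.Linear(embedding_dim, 256), nn.ReLU(),
            nn.Linear(256, input_dim), nn.Sigmoid(),
        )

    def forward(self, x):
        z = self.encoder(x)
        return self.decoder(z), z


torch.manual_seed(0)
model = TinyAE()
x = torch.rand(8, 784)                    # stand-in batch of flattened images in [0, 1]
recon, z = model(x)
loss = nn.functional.mse_loss(recon, x)   # reconstruction error
loss.backward()                           # gradients flow through decoder and encoder
```

Because the code `z` has only 32 dimensions here, the network cannot copy its input and has to learn a compressed representation in order to reconstruct it.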

Variational AutoEncoders - VAE:

The Variational Autoencoder introduces the constraint that the latent code z is a random variable distributed according to a prior distribution p(z).
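The sketch below shows one common way to impose this constraint, assuming a diagonal Gaussian encoder and a standard normal prior p(z) = N(0, I). The text above does not specify the prior, so that choice and the function names `reparameterize` and `kl_to_standard_normal` are illustrative assumptions.

```python
import torch


def reparameterize(mu, logvar):
    """Sample z = mu + sigma * eps with eps ~ N(0, I), keeping gradients w.r.t. mu and logvar."""
    std = torch.exp(0.5 * logvar)
    eps = torch.randn_like(std)
    return mu + eps * std


def kl_to_standard_normal(mu, logvar):
    """Closed-form KL(N(mu, sigma^2) || N(0, I)), summed over latent dims, averaged over the batch."""
    kl_per_sample = -0.5 * torch.sum(1 + logvar - mu.pow(2) - logvar.exp(), dim=1)
    return kl_per_sample.mean()


torch.manual_seed(0)
mu = torch.zeros(4, 16)        # stand-in encoder outputs (mean)
logvar = torch.zeros(4, 16)    # stand-in encoder outputs (log-variance)
z = reparameterize(mu, logvar)
kl = kl_to_standard_normal(mu, logvar)   # zero when the posterior equals the prior
```

In training, this KL term would typically be added to the reconstruction loss so that the encoder's distribution stays close to the prior.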

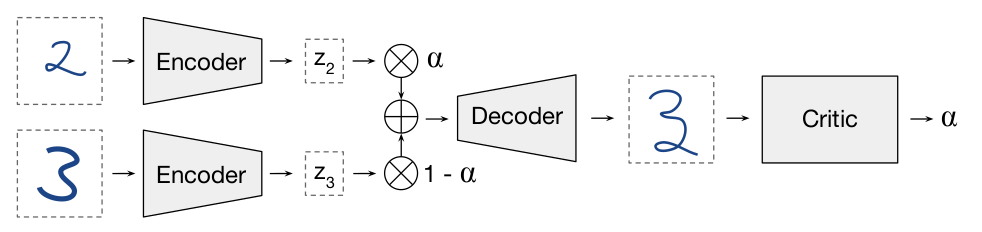

Adversarially Constrained Autoencoder Interpolations - ACAI:

A critic network tries to predict the interpolation coefficient α corresponding to an interpolated datapoint. The autoencoder is trained to fool the critic into outputting α = 0.
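The two objectives described above can be sketched roughly as follows. This is a simplified illustration, not the repository's training loop: the single-layer networks, the sampling range for α, and the weight `lam` are all assumptions, and any further regularization terms a full implementation may use are left out.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical stand-in networks; a real model would use deeper architectures.
encoder = nn.Linear(784, 32)
decoder = nn.Sequential(nn.Linear(32, 784), nn.Sigmoid())
critic = nn.Sequential(nn.Linear(784, 64), nn.ReLU(), nn.Linear(64, 1))

x1 = torch.rand(8, 784)   # two stand-in batches of flattened images
x2 = torch.rand(8, 784)

# One coefficient per pair; sampling alpha from [0, 0.5] is an assumption here
# (mixing is symmetric, so half the range already covers every blend).
alpha = 0.5 * torch.rand(8, 1)

# Interpolate in latent space, then decode the mixed code.
z1, z2 = encoder(x1), encoder(x2)
x_mix = decoder(alpha * z1 + (1 - alpha) * z2)

# Critic objective: regress the coefficient from the decoded interpolant.
# detach() keeps this loss from updating the autoencoder.
critic_loss = nn.functional.mse_loss(critic(x_mix.detach()), alpha)

# Autoencoder objective: reconstruct x1 and push the critic's output toward 0.
lam = 0.5  # hypothetical weight on the adversarial term
recon_loss = nn.functional.mse_loss(decoder(z1), x1)
ae_loss = recon_loss + lam * critic(x_mix).pow(2).mean()
```

In practice the two losses would be minimized by separate optimizers, one over the critic's parameters and one over the encoder and decoder.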

mkvirtualenv --python=/usr/bin/python3 pytorch-AE

pip install torch torchvision

python train.py --help


Vanilla Autoencoder:

python train.py --model AE

Variational Autoencoder:

python train.py --model VAE --batch-size 512 --dataset EMNIST --seed 42 --log-interval 500 --epochs 5 --embedding-size 128
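For readers unfamiliar with how such flags are usually wired up, here is a hypothetical `argparse` sketch that accepts the options used in the commands above. It is not the actual parser in `train.py`: the function name `build_parser`, the `ACAI` choice, and the defaults (copied from the example command, not from the script) are assumptions. Run `python train.py --help` for the real options.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the flags shown above; defaults are assumptions."""
    parser = argparse.ArgumentParser(description="Train an autoencoder (illustrative sketch)")
    parser.add_argument("--model", default="AE", choices=["AE", "VAE", "ACAI"],
                        help="which autoencoder variant to train")
    parser.add_argument("--batch-size", type=int, default=512)
    parser.add_argument("--dataset", default="EMNIST")
    parser.add_argument("--seed", type=int, default=42)
    parser.add_argument("--log-interval", type=int, default=500,
                        help="number of batches between log messages")
    parser.add_argument("--epochs", type=int, default=5)
    parser.add_argument("--embedding-size", type=int, default=128,
                        help="dimensionality of the latent code")
    return parser


# Parse the same arguments as the VAE command above.
args = build_parser().parse_args(
    "--model VAE --batch-size 512 --dataset EMNIST --seed 42 "
    "--log-interval 500 --epochs 5 --embedding-size 128".split()
)
```

Note that `argparse` converts dashes to underscores, so `--embedding-size` is read back as `args.embedding_size`.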

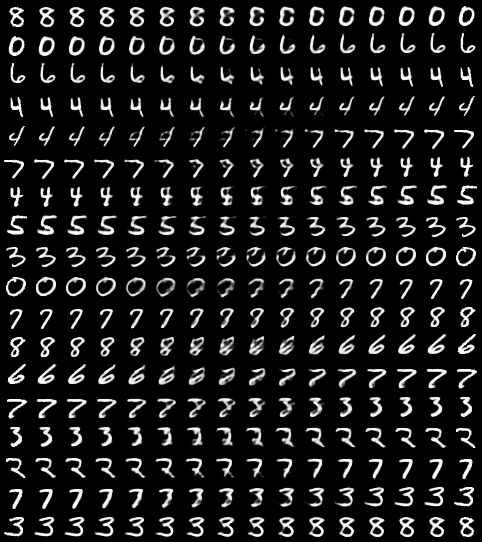

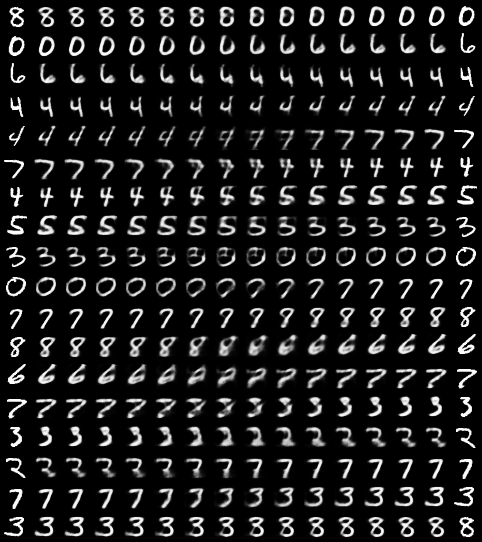

| Vanilla AutoEncoders | Variational AutoEncoders | ACAI |
|---|---|---|
| | | |

If you have suggestions or ideas for contributions, file an issue or open a PR, and don't forget to star the repository.

Feel free to check out my other repos with more work in Machine Learning: