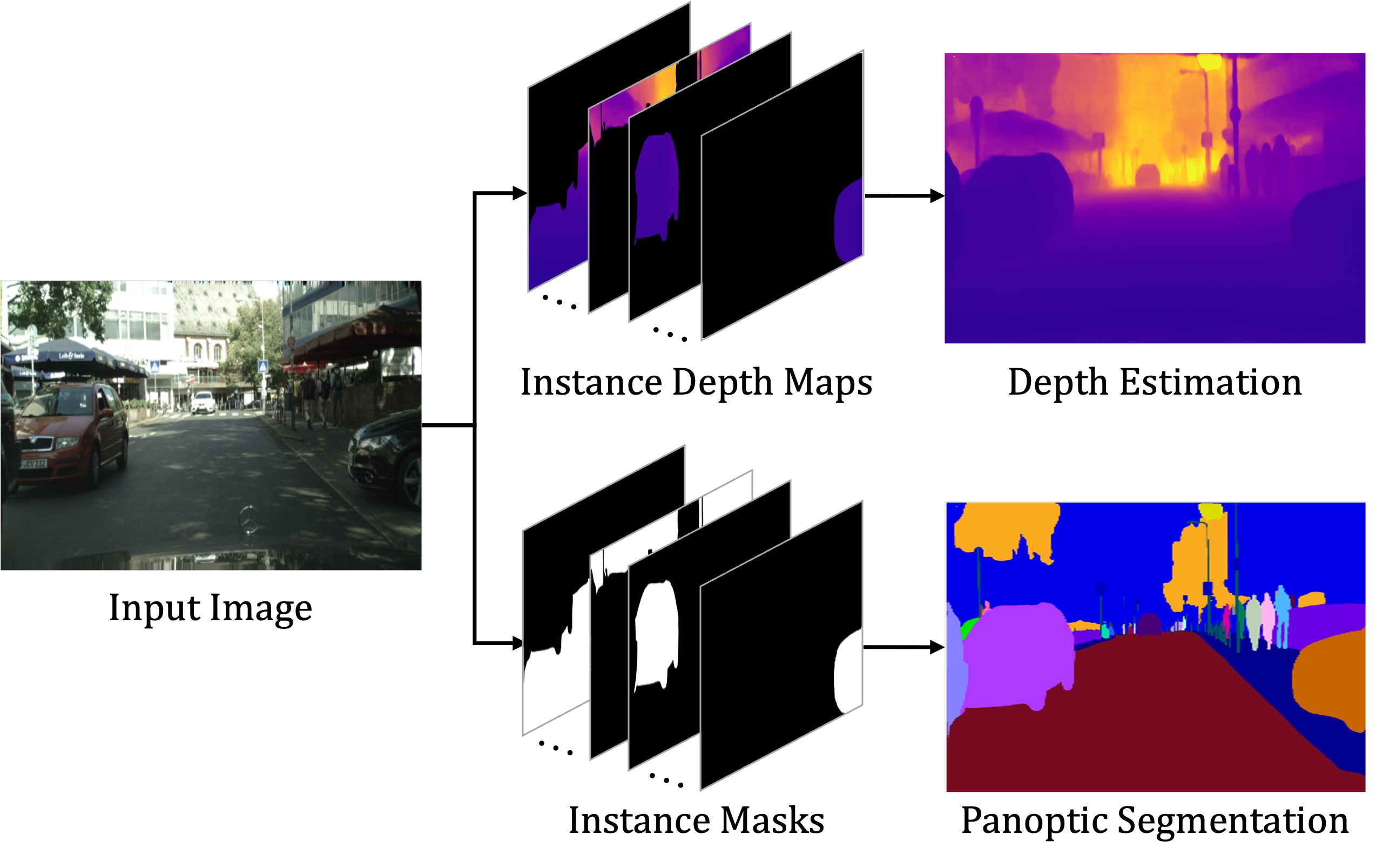

In this repository, we present a unified framework for depth-aware panoptic segmentation (DPS), which aims to reconstruct a 3D scene with instance-level semantics from a single image. Prior works address this problem by simply adding a dense depth regression head to panoptic segmentation (PS) networks, resulting in two independent task branches. This neglects the mutually beneficial relations between the two tasks: instance-level semantic cues are not exploited to boost depth accuracy, and the resulting depth maps are sub-optimal. To overcome these limitations, we propose a unified framework for the DPS task that applies a dynamic convolution technique to both the PS and depth prediction tasks. Specifically, instead of predicting depth for all pixels at once, we generate instance-specific kernels to predict depth and segmentation masks for each instance. Moreover, leveraging the instance-wise depth estimation scheme, we add instance-level depth cues to help supervise depth learning via a new depth loss. Extensive experiments on Cityscapes-DPS and SemKITTI-DPS show the effectiveness and promise of our method. We hope our unified solution to DPS can lead to a new paradigm in this area.
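The instance-wise prediction described above can be illustrated with a minimal NumPy sketch. It is not the code in this repository: the function name `dynamic_instance_heads`, the tensor shapes, the sigmoid scaling by `max_depth`, and the argmax merging step are all assumptions made for illustration. The idea shown is only that one kernel per instance is applied to a shared feature map (a 1x1 dynamic convolution), yielding one mask and one depth map per instance.

```python
import numpy as np


def dynamic_instance_heads(features, mask_kernels, depth_kernels, max_depth=80.0):
    """Apply per-instance 1x1 kernels to a shared feature map (illustrative only).

    features:      (C, H, W) shared embedding map
    mask_kernels:  (N, C) one hypothetical mask kernel per instance
    depth_kernels: (N, C) one hypothetical depth kernel per instance
    """
    # A 1x1 convolution with N kernels is a contraction over the channel axis.
    mask_logits = np.einsum("nc,chw->nhw", mask_kernels, features)
    depth_logits = np.einsum("nc,chw->nhw", depth_kernels, features)

    # Assumed normalisation: sigmoid output scaled to the range (0, max_depth).
    instance_depth = max_depth / (1.0 + np.exp(-depth_logits))

    # Assumed merging rule: each pixel takes the depth of the instance
    # with the highest mask logit at that pixel.
    owner = mask_logits.argmax(axis=0)  # (H, W)
    depth_map = np.take_along_axis(instance_depth, owner[None], axis=0)[0]

    return {
        "mask_logits": mask_logits,
        "instance_depth": instance_depth,
        "owner": owner,
        "depth_map": depth_map,
    }


# Toy usage with random inputs: 2 instances, 4 channels, a 3x5 feature map.
rng = np.random.default_rng(0)
out = dynamic_instance_heads(
    rng.normal(size=(4, 3, 5)),
    rng.normal(size=(2, 4)),
    rng.normal(size=(2, 4)),
)
print(out["depth_map"].shape)
```

In the real model the kernels would be produced by a learned kernel generator rather than sampled at random.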

This project is based on Detectron2, which can be set up as follows.

- Install Detectron2 following the instructions
- Copy this project to `/path/to/detectron2/`
- Set up the Cityscapes dataset
- Download the Cityscapes-DPS dataset
- Set up the Cityscapes-DPS dataset format with `datasets/prepare_dvps_cityscapes.py`

To train a DPS model with 8 GPUs, run:

cd ./projects/PanopticDepth/

# Step1, train the PS model

python3 train.py --config-file configs/cityscapes/PanopticDepth-R50-cityscapes.yaml --num-gpus 8 OUTPUT_DIR ./output/ps

# Step2, finetune the PS model with full scale image inputs

python3 train.py --config-file configs/cityscapes/PanopticDepth-R50-cityscapes-FullScaleFinetune.yaml --num-gpus 8 MODEL.WEIGHTS ./output/ps/model_final.pth OUTPUT_DIR ./output/ps_fsf

# Step3, train the DPS model

python3 train.py --config-file configs/cityscapes_dps/PanopticDepth-R50-cityscapes-dps.yaml --num-gpus 8 MODEL.WEIGHTS ./output/ps_fsf/model_final.pth OUTPUT_DIR ./output/dps

To evaluate a pre-trained model with 8 GPUs, run:

cd ./projects/PanopticDepth/

python3 train.py --eval-only --config-file <config.yaml> --num-gpus 8 MODEL.WEIGHTS /path/to/model_checkpoint

Cityscapes panoptic segmentation

| Method | Backbone | PQ | PQ<sup>Th</sup> | PQ<sup>St</sup> | Download |
|---|---|---|---|---|---|
| PanopticDepth (seg. only) | R50 | 64.1 | 58.8 | 68.0 | google drive |

Cityscapes depth-aware panoptic segmentation

| Method | Backbone | DPQ | DPQ<sup>Th</sup> | DPQ<sup>St</sup> | Download |
|---|---|---|---|---|---|
| PanopticDepth | R50 | 58.3 | 52.1 | 62.8 | google drive |
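For readers unfamiliar with the metrics in the tables above, here is a rough sketch of how PQ and its depth-aware variant are commonly defined. It is not the evaluation code used by this project. PQ is the sum of IoUs over matched segments divided by |TP| + 0.5|FP| + 0.5|FN|. For DPQ, predicted pixels whose absolute relative depth error exceeds a threshold are treated as void before matching. The function names, the toy inputs, and the assumption that every ground-truth depth is valid and positive are illustrative only.

```python
import numpy as np


def panoptic_quality(matched_ious, num_fp, num_fn):
    """PQ for one class, given IoUs of already-matched (true positive) segments."""
    tp = len(matched_ious)
    denom = tp + 0.5 * num_fp + 0.5 * num_fn
    return float(sum(matched_ious)) / denom if denom > 0 else 0.0


def depth_gated_iou(pred_mask, gt_mask, pred_depth, gt_depth, lam):
    """IoU of one segment pair after dropping predicted pixels with large depth error.

    Assumes gt_depth is valid and positive everywhere (a simplification).
    """
    # Keep a predicted pixel only if |pred - gt| <= lam * gt.
    depth_ok = np.abs(pred_depth - gt_depth) <= lam * gt_depth
    kept = pred_mask & depth_ok
    union = (kept | gt_mask).sum()
    return float((kept & gt_mask).sum()) / union if union > 0 else 0.0


# Toy example: a 2-pixel segment where one pixel has a large depth error.
pred_mask = np.array([[True, True], [False, False]])
gt_mask = np.array([[True, True], [False, False]])
gt_depth = np.full((2, 2), 10.0)
pred_depth = np.array([[10.0, 20.0], [10.0, 10.0]])

print(depth_gated_iou(pred_mask, gt_mask, pred_depth, gt_depth, lam=0.5))
print(panoptic_quality([0.8, 0.6], num_fp=1, num_fn=1))
```

A full evaluation would match segments per class (a match requires IoU above 0.5), compute PQ at each depth threshold, and average the results. The exact thresholds and void handling should be taken from the benchmark's official evaluation script.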

Please consider citing PanopticDepth in your publications if it helps your research.

@inproceedings{gao2022panopticdepth,
  title={PanopticDepth: A Unified Framework for Depth-aware Panoptic Segmentation},
  author={Naiyu Gao and Fei He and Jian Jia and Yanhu Shan and Haoyang Zhang and Xin Zhao and Kaiqi Huang},
  booktitle={IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2022}
}

We have used utility functions from other wonderful open-source projects. We would especially like to thank the authors of: