This repository contains the code for DiffStack: A Differentiable and Modular Control Stack for Autonomous Vehicles, a CoRL 2022 paper by Peter Karkus, Boris Ivanovic, Shie Mannor, and Marco Pavone.

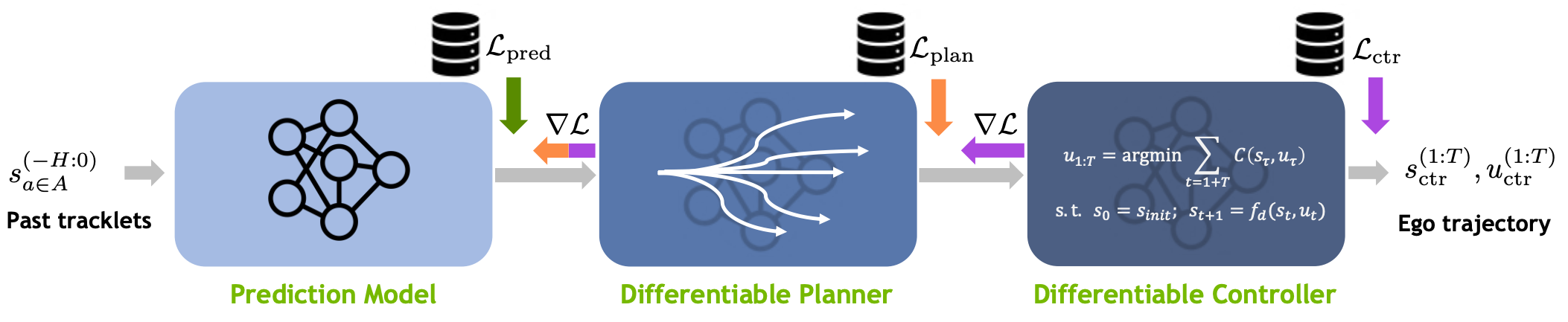

DiffStack is composed of differentiable modules for prediction, planning, and control. Importantly, this means that gradients can propagate backward all the way from the final planning objective, allowing upstream predictions to be optimized with respect to downstream decision-making.
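To make the idea concrete, here is a deliberately tiny, hypothetical example in plain Python. It is not DiffStack's predictor, planner, or loss. A one-parameter "predictor" outputs `p = w * x`, a closed-form "planner" picks the action that minimizes `(a - p)**2 + lam * a**2`, which is `a = p / (1 + lam)`, and the chain rule carries the gradient of a planning loss on `a` back to the prediction weight `w`. The names `plan_weight`, `a_gt`, and `lam` are our own; `plan_weight` plays a role similar to the planning-loss weight set by `--plan_loss_scaler` in the commands below, although we have not checked exactly how `train.py` combines its losses.

```python
# Toy illustration only: a scalar "predictor" and a closed-form "planner",
# not DiffStack's actual modules or losses.


def plan(p: float, lam: float) -> float:
    """Closed-form planner: argmin over a of (a - p)**2 + lam * a**2, which is p / (1 + lam)."""
    return p / (1.0 + lam)


def loss_and_grad(w, x, p_true, a_gt, lam, plan_weight):
    """Return the total loss and its derivative with respect to the prediction weight w."""
    p = w * x             # prediction module
    a = plan(p, lam)      # planning module, differentiable in p
    pred_loss = (p - p_true) ** 2
    plan_loss = (a - a_gt) ** 2
    # Chain rule through the planner: dp/dw = x and da/dp = 1 / (1 + lam).
    d_pred = 2.0 * (p - p_true) * x
    d_plan = 2.0 * (a - a_gt) * (1.0 / (1.0 + lam)) * x
    return pred_loss + plan_weight * plan_loss, d_pred + plan_weight * d_plan


def train(plan_weight, steps=2000, lr=0.01, x=2.0, p_true=4.0, a_gt=1.5, lam=1.0):
    """Plain gradient descent on w; lr is small enough to converge for the weights used below."""
    w = 0.0
    for _ in range(steps):
        _, grad = loss_and_grad(w, x, p_true, a_gt, lam, plan_weight)
        w -= lr * grad
    return w


print(f"prediction loss only: w = {train(0.0):.4f}")   # w = 2.0000
print(f"with planning loss:   w = {train(10.0):.4f}")  # w = 1.6429
```

With `plan_weight=0.0` only the prediction loss is optimized, and `w` fits the observed target (`p = w * x = 4`). With the planning loss added, `w` settles where the two objectives balance (46/28, about 1.6429, for these numbers), so the prediction is shaped by what the downstream planner needs. Hand-written derivatives keep the toy dependency-free; the actual stack builds on PyTorch-based modules (Trajectron++, Differentiable MPC), where autograd plays this role.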

Disclaimer: this code is for research purposes only. This is an alpha release, not product-quality code. Expect some rough edges and sparse documentation.

Credits: the code is built on Trajectron++, Differentiable MPC, and the Unified Trajectory Data Loader (trajdata), and uses several other standard libraries.

Create a conda or virtualenv environment and clone the repository:

```bash
conda create -n diffstack python==3.9
conda activate diffstack
git clone https://github.com/NVlabs/diffstack
```

Install diffstack (locally) with pip:

```bash
cd diffstack
pip install -e ./
```

This single step is sufficient to install all dependencies. For active development, you may prefer to clone and install Trajectron++, Differentiable MPC, and the Unified Trajectory Data Loader manually.

Download and set up the nuScenes dataset by following https://github.com/NVlabs/trajdata/blob/main/DATASETS.md.

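The path to the dataset can be specified with the `--data_loc_dict` argument, a dictionary that maps dataset names to their locations on disk. The default is `{"nusc_mini": "./data/nuscenes"}`. Quote the value when passing it on the command line so that the shell treats it as a single argument, e.g. `--data_loc_dict='{"nusc_mini": "./data/nuscenes"}'`.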
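The sketch below shows one way such a dictionary argument can be parsed and matched against a data tag such as `nusc_mini-mini_train`. It assumes JSON parsing and a `<dataset>-<split>` tag scheme (which appears to be the convention trajdata uses for its data tags). `resolve_data_dir` is a hypothetical helper, not a function from `argument_parser.py`, and the real parser may work differently.

```python
import json


def resolve_data_dir(data_loc_dict: str, data_tag: str) -> tuple[str, str]:
    """Hypothetical helper: return (dataset_path, split) for a tag like 'nusc_mini-mini_train'.

    Assumes data_loc_dict is a JSON object mapping dataset names to paths, and that the
    text before the first '-' in the tag is the dataset name.
    """
    locations = json.loads(data_loc_dict)
    dataset, _, split = data_tag.partition("-")
    if dataset not in locations:
        raise KeyError(f"dataset {dataset!r} has no entry in --data_loc_dict (known: {sorted(locations)})")
    return locations[dataset], split


# The string the program receives for --data_loc_dict='{"nusc_mini": "./data/nuscenes"}'
raw = '{"nusc_mini": "./data/nuscenes"}'
print(resolve_data_dir(raw, "nusc_mini-mini_train"))  # ('./data/nuscenes', 'mini_train')
```

Failing early with the list of known keys makes a mismatch between the dataset prefix of `--train_data`/`--eval_data` and the keys of `--data_loc_dict` easy to spot.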

We currently support training and evaluation from two data sources.

- Cached data source (`--data_source=cache`) corresponds to a custom preprocessed dataset that allows reproducing the results in the paper.
- Trajdata source (`--data_source=trajdata`) is an interface to the Unified Trajectory Data Loader that supports various data sources, including nuScenes, Lyft, etc. This is the recommended way to train new models.

We provide a few example commands below. For more argument options, run `python ./diffstack/train.py --help` or look at `./diffstack/argument_parser.py`.

To train a new model, we recommend using the trajdata data source and the following default arguments. See the Unified Trajectory Data Loader for setting up different datasets.

The example command below runs data-parallel training on the nuScenes mini dataset using 1 GPU. Remember to change the path to the dataset, and use the full training set instead of minival for meaningful results.
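`python -m torch.distributed.run` (the launcher also available as `torchrun`) starts `--nproc_per_node` worker processes and passes each one its position in the job through environment variables such as `RANK`, `LOCAL_RANK`, and `WORLD_SIZE`. The minimal sketch below reads them, falling back to single-process values for a plain `python ./diffstack/train.py` run. `dist_info` is a hypothetical helper, and we have not checked how `train.py` itself consumes these variables.

```python
import os
from typing import Mapping, Optional


def dist_info(env: Optional[Mapping[str, str]] = None) -> dict:
    """Hypothetical helper: read the rank variables set by torch.distributed.run.

    The defaults describe a single process, which is what a plain `python train.py` run is.
    """
    env = os.environ if env is None else env
    return {
        "rank": int(env.get("RANK", "0")),              # global index of this worker
        "local_rank": int(env.get("LOCAL_RANK", "0")),  # index on this machine, often used to pick a GPU
        "world_size": int(env.get("WORLD_SIZE", "1")),  # total number of workers
    }


# With --nproc_per_node=1 on one machine, the launcher starts a single worker:
print(dist_info({"RANK": "0", "LOCAL_RANK": "0", "WORLD_SIZE": "1"}))
```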

```bash
python -m torch.distributed.run --nproc_per_node=1 ./diffstack/train.py \
    --data_loc_dict='{"nusc_mini": "./data/nuscenes"}' \
    --train_data=nusc_mini-mini_train \
    --eval_data=nusc_mini-mini_val \
    --predictor=tpp \
    --plan_cost=corl_default_angle_fix \
    --plan_loss_scaler=100 \
    --plan_loss_scaler2=10 \
    --device=cuda:0
```

To reproduce the results in the paper, you will need to download our preprocessed dataset (nuScenes-minival, nuScenes-full) and, optionally, the pretrained models (checkpoints). Please only use the dataset and models if you have agreed to the terms for non-commercial use on https://www.nuscenes.org/nuscenes. The preprocessed dataset and pretrained models are under the CC BY-NC-SA 4.0 licence.

Evaluate a pretrained DiffStack model with jointly trained prediction, planning, and control modules on the nuScenes minival dataset. Remember to update the paths to the downloaded data and models. Use the full validation set to reproduce the results in the paper.

```bash
python ./diffstack/train.py \
    --data_source=cache \
    --cached_data_dir=./data/cached_data/ \
    --data_loc_dict='{"nusc_mini": "./data/nuscenes"}' \
    --train_data=nusc_mini-mini_train \
    --eval_data=nusc_mini-mini_val \
    --predictor=tpp_cache \
    --dynamic_edges=yes \
    --plan_cost=corl_default \
    --train_epochs=0 \
    --load=./data/pretrained_models/diffstack_model.pt
```

Retrain a DiffStack model (on CPU) using our preprocessed dataset. Remember to update the path to the downloaded data. When training a new model, you should expect results similar to those in the paper, but exact reproduction is not possible because the public code base uses different random seeding.

```bash
python ./diffstack/train.py \
    --data_source=cache \
    --cached_data_dir=./data/cached_data/ \
    --data_loc_dict='{"nusc_mini": "./data/nuscenes"}' \
    --train_data=nusc_mini-mini_train \
    --eval_data=nusc_mini-mini_val \
    --predictor=tpp_cache \
    --dynamic_edges=yes \
    --plan_cost=corl_default \
    --plan_loss_scaler=100
```

To use 1 GPU and the distributed data-parallel pipeline, use:

```bash
python -m torch.distributed.run --nproc_per_node=1 ./diffstack/train.py \
    --data_source=cache \
    --cached_data_dir=./data/cached_data/ \
    --data_loc_dict='{"nusc_mini": "./data/nuscenes"}' \
    --train_data=nusc_mini-mini_train \
    --eval_data=nusc_mini-mini_val \
    --predictor=tpp_cache \
    --dynamic_edges=yes \
    --plan_cost=corl_default \
    --plan_loss_scaler=100 \
    --device=cuda:0
```

To train a standard stack that optimizes only the prediction objective, set `--plan_loss_scaler=0`:

```bash
python -m torch.distributed.run --nproc_per_node=1 ./diffstack/train.py \
    --data_source=cache \
    --cached_data_dir=./data/cached_data/ \
    --data_loc_dict='{"nusc_mini": "./data/nuscenes"}' \
    --train_data=nusc_mini-mini_train \
    --eval_data=nusc_mini-mini_val \
    --predictor=tpp_cache \
    --dynamic_edges=yes \
    --plan_cost=corl_default \
    --plan_loss_scaler=0 \
    --device=cuda:0
```

The source code is released under the NSCL licence. The preprocessed dataset and pretrained models are under the CC BY-NC-SA 4.0 licence.