Rui Xie<sup>1</sup> | Ying Tai<sup>2</sup> | Kai Zhang<sup>2</sup> | Zhenyu Zhang<sup>2</sup> | Jun Zhou<sup>1</sup> | Jian Yang<sup>3</sup>

<sup>1</sup>Southwest University, <sup>2</sup>Nanjing University, <sup>3</sup>Nanjing University of Science and Technology.

⭐ If AddSR helps your images or projects, please consider giving this repo a star. Your support is greatly appreciated! 😊

- 2024.04.10 The training code has been released. Please note that it currently supports only a batch size of 2 per device. We plan to support other batch sizes in the future.

- 2024.04.09 The pretrained AddSR model and testing code have been released.

- ✅ Release the pretrained model

- ✅ Release the training code

```shell
## git clone this repository
git clone https://github.com/NJU-PCALab/AddSR.git
cd AddSR

# create an environment with python >= 3.8
conda create -n addsr python=3.8
conda activate addsr
pip install -r requirements.txt
```

- Download the pretrained SD-2-base models from HuggingFace.
- Download the AddSR models from Google Drive.
- Download the DAPE models from Google Drive.

You can put the models into `preset/models/`.

You can put the test images in `preset/datasets/test_datasets/`.
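To make the expected layout explicit, here is a minimal sketch that creates the folders referenced by the test command. The folder names are taken from that command's arguments; how the downloaded files are arranged inside each folder (for example, the contents of `preset/models/addsr`) is an assumption left to the downloaded archives.

```shell
#!/usr/bin/env bash
# Sketch: create the folders that the test command expects.
# Paths are copied from the arguments of test_addsr.py; run from the repository root (AddSR/).
set -euo pipefail

mkdir -p preset/models/stable-diffusion-2-base  # SD-2-base weights from HuggingFace
mkdir -p preset/models/addsr                    # AddSR weights from Google Drive
mkdir -p preset/datasets/test_datasets          # low-resolution test images go here
mkdir -p preset/datasets/output                 # passed as --output_dir

# The DAPE checkpoint is a single file placed directly in preset/models/:
#   preset/models/DAPE.pth
```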

```shell
python test_addsr.py \
--pretrained_model_path preset/models/stable-diffusion-2-base \
--prompt '' \
--addsr_model_path preset/models/addsr \
--ram_ft_path preset/models/DAPE.pth \
--image_path preset/datasets/test_datasets \
--output_dir preset/datasets/output \
--start_point lr \
--num_inference_steps 4 \
--PSR_weight 0.5
```
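Before launching inference, it can help to confirm that every input path passed to `test_addsr.py` exists. The function below is a hypothetical helper (`check_inputs` is not part of the AddSR repository); it only checks the paths used in the command above and does not validate the contents of the checkpoints.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not shipped with AddSR): check that every input path used
# by the test command exists. Prints missing paths to stderr.
# Returns 0 if all paths are present, 1 otherwise.
check_inputs() {
  local root="${1:-.}"   # repository root; defaults to the current directory
  local status=0
  local path
  for path in \
    preset/models/stable-diffusion-2-base \
    preset/models/addsr \
    preset/models/DAPE.pth \
    preset/datasets/test_datasets; do
    if [ ! -e "$root/$path" ]; then
      echo "missing: $path" >&2
      status=1
    fi
  done
  return "$status"
}

# Usage (from the repository root): only start inference if the check passes.
#   check_inputs . && python test_addsr.py <arguments as above>
```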

RealLR200, RealSR and DRealSR can be downloaded from SeeSR.

Download the pretrained SD-2-base models, RAM, SeeSR and DINOv2. You can put them into preset/models.

We employ the same preprocessing measures as SeeSR. More details can be found here.

```shell
CUDA_VISIBLE_DEVICES="0,1,2,3" accelerate launch train_addsr.py \
--pretrained_model_name_or_path="preset/models/stable-diffusion-2-base" \
--controlnet_model_name_or_path_Tea='preset/seesr' \
--unet_model_name_or_path_Tea='preset/seesr' \
--controlnet_model_name_or_path_Stu='preset/seesr' \
--unet_model_name_or_path_Stu='preset/seesr' \
--dino_path="preset/models/dinov2_vits14_pretrain.pth" \
--output_dir="./experience/addsr" \
--root_folders 'DataSet/training' \
--ram_ft_path 'preset/models/DAPE.pth' \
--enable_xformers_memory_efficient_attention \
--mixed_precision="fp16" \
--resolution=512 \
--learning_rate=2e-5 \
--train_batch_size=2 \
--gradient_accumulation_steps=2 \
--null_text_ratio=0.5 \
--dataloader_num_workers=0 \
--max_train_steps=50000 \
--checkpointing_steps=5000
```

| Parameter | Description |
| --- | --- |
| `--pretrained_model_name_or_path` | the path of the pretrained SD model from Step 1 |
| `--root_folders` | the path of your training datasets from Step 2 |
| `--ram_ft_path` | the path of your DAPE model from Step 3 |
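The training command uses `--train_batch_size=2` (currently the only supported per-device value) and `--gradient_accumulation_steps=2` on the four GPUs listed in `CUDA_VISIBLE_DEVICES`. Assuming your `accelerate` configuration launches one process per listed GPU, the number of images contributing to each optimizer step is the product of these three values. The function below is a hypothetical helper that does this arithmetic; it is not part of the AddSR code.

```shell
#!/usr/bin/env bash
# Hypothetical helper: effective (global) batch size per optimizer step,
# assuming accelerate starts one process per GPU in CUDA_VISIBLE_DEVICES.
effective_batch_size() {
  local devices="$1"     # value of CUDA_VISIBLE_DEVICES, e.g. "0,1,2,3"
  local per_device="$2"  # --train_batch_size
  local accum="$3"       # --gradient_accumulation_steps
  local num_gpus
  # Count the comma-separated device entries (one per line, non-empty lines only).
  num_gpus=$(echo "$devices" | tr ',' '\n' | grep -c .)
  echo $(( num_gpus * per_device * accum ))
}

effective_batch_size "0,1,2,3" 2 2   # prints 16 (4 GPUs x 2 images x 2 accumulation steps)
```

If you train on fewer GPUs, raising `--gradient_accumulation_steps` is one way to keep this number constant while the per-device batch size stays at 2.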

This project is based on SeeSR, diffusers, BasicSR, ADD and StyleGAN-T. Thanks for their awesome work.

If you have any inquiries, please don't hesitate to reach out via email at ruixie0097@gmail.com

If our project helps your research or work, please consider citing our paper:

@misc{xie2024addsr,

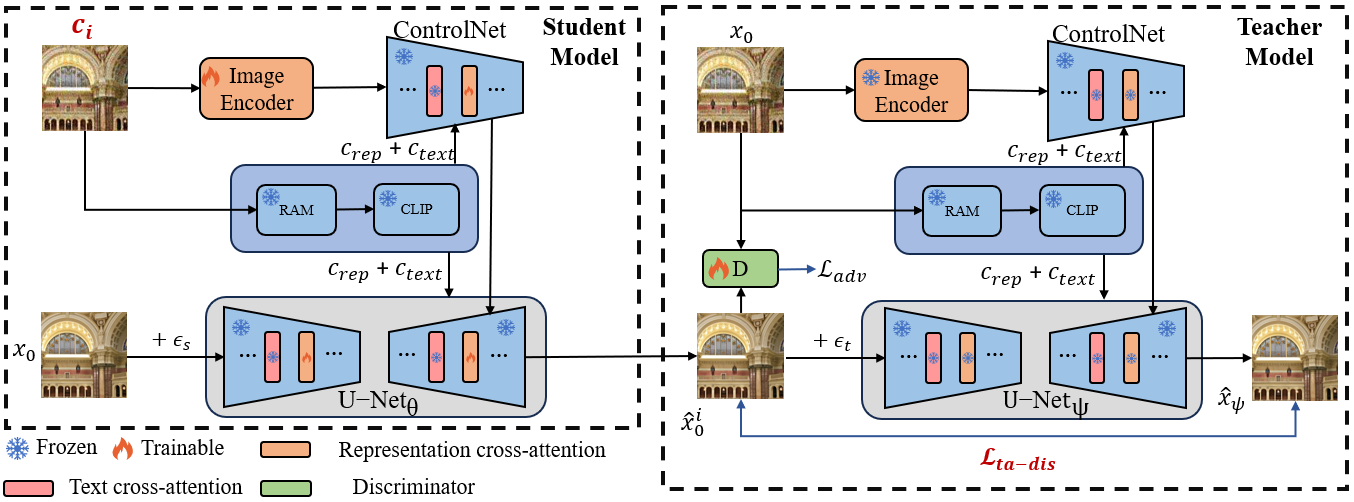

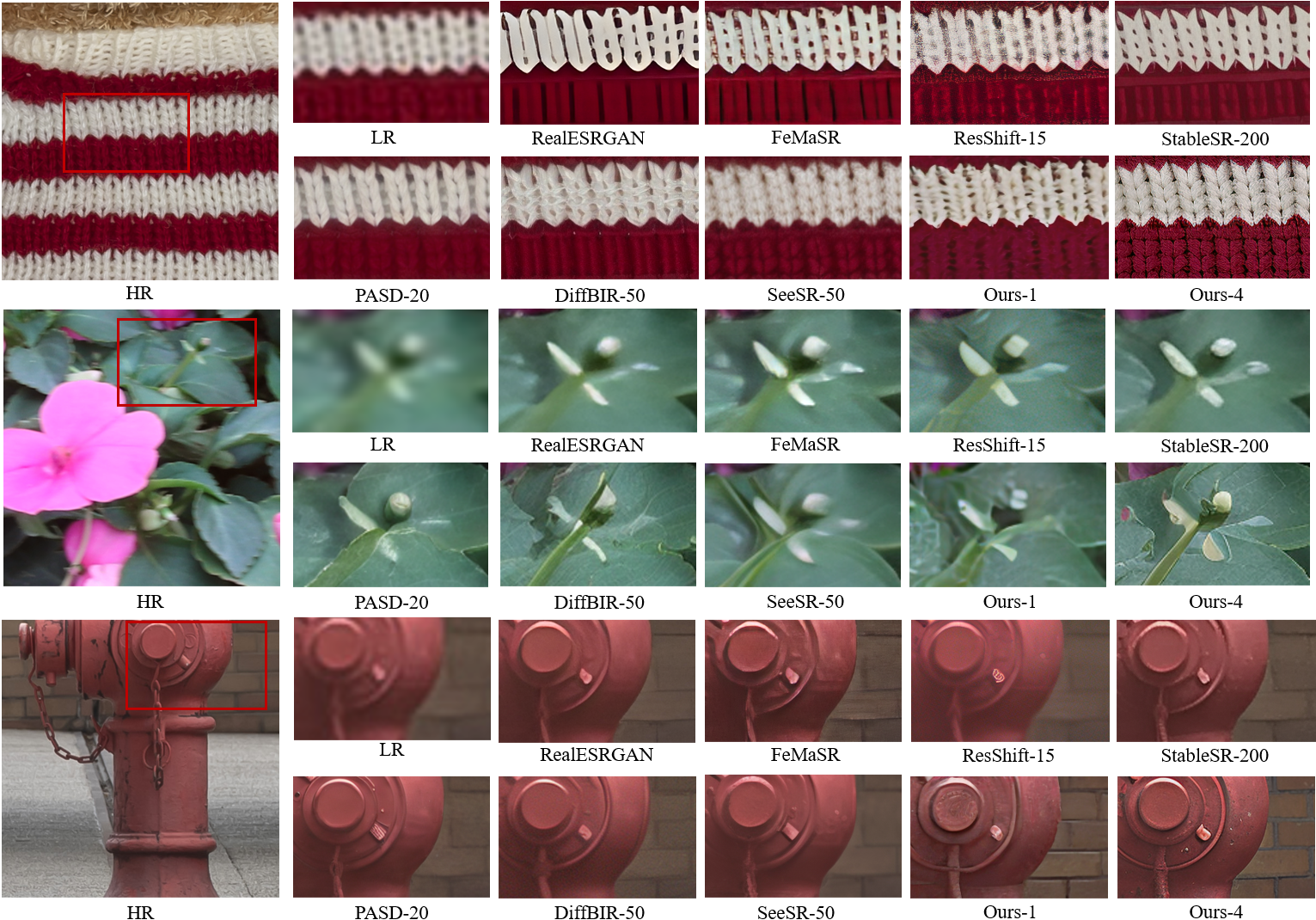

title={AddSR: Accelerating Diffusion-based Blind Super-Resolution with Adversarial Diffusion Distillation},

author={Rui Xie and Ying Tai and Kai Zhang and Zhenyu Zhang and Jun Zhou and Jian Yang},

year={2024},

eprint={2404.01717},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

This project is distributed under the terms of the Apache 2.0 license. Since AddSR is based on SeeSR, StyleGAN-T, and ADD, users must also follow their licenses to use this project.