News | Introduction | Pre-trained Models | Usage | Acknowledgement | Statement

- [Aug 7 2023]: An updated version is available on arXiv, fixing several mistakes. Please refer to issues #7 and #14 for details.
- [May 5 2023]: The IEEE TGRS published version can be found at IEEE Xplore.
- [May 4 2023]: Updated the acknowledgment. Many thanks to A2MIM and OpenMixup for their awesome implementations of RGB mean input and the Focal Frequency loss!
- [Apr 20 2023]: The IEEE TGRS early access version can be found at this website.
- [Apr 19 2023]: This paper has been released on arXiv.
- [Apr 15 2023]: All of the code has been released.
- [Apr 14 2023]: This paper has been accepted by IEEE TGRS!
- [Jan 11 2023]: All the pre-trained models and checkpoints of various downstream tasks are released. The code will be uploaded after the paper has been accepted.

This is the official repository for the paper “CMID: A Unified Self-Supervised Learning Framework for Remote Sensing Image Understanding”.

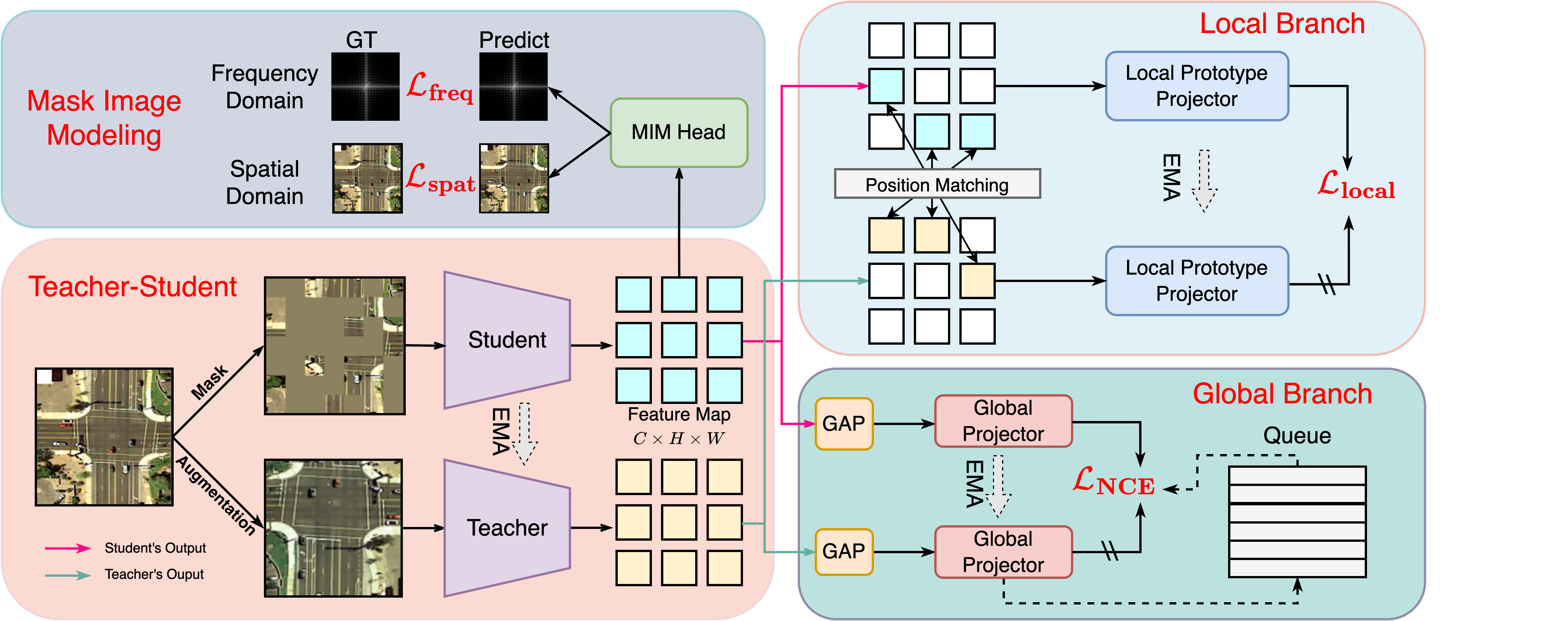

Abstract: Self-supervised learning (SSL) has gained widespread attention in the remote sensing (RS) and earth observation (EO) communities owing to its ability to learn task-agnostic representations without human-annotated labels. Nevertheless, most existing RS SSL methods are limited to learning either globally semantically separable or locally spatially perceptible representations. We argue that this learning strategy is suboptimal in the realm of RS, since the representations required by different RS downstream tasks are often varied and complex. In this study, we propose a unified SSL framework that is better suited for RS image representation learning. The proposed SSL framework, Contrastive Mask Image Distillation (CMID), is capable of learning representations with both global semantic separability and local spatial perceptibility by combining contrastive learning (CL) with masked image modeling (MIM) in a self-distillation way. Furthermore, our CMID learning framework is architecture-agnostic: it is compatible with both convolutional neural networks (CNN) and vision transformers (ViT), allowing CMID to be easily adapted to a variety of deep learning (DL) applications for RS understanding. Comprehensive experiments have been carried out on four downstream tasks (i.e., scene classification, semantic segmentation, object detection, and change detection), and the results show that models pre-trained using CMID achieve better performance than other state-of-the-art SSL methods on multiple downstream tasks.

| Method | Backbone | Pre-trained Dataset | Pre-trained Epochs | Pre-trained Model | Backbone Only |
|---|---|---|---|---|---|
| CMID | ResNet-50 | MillionAID | 200 | NJU Box | NJU Box |
| CMID | Swin-B | MillionAID | 200 | NJU Box | NJU Box |
| CMID | ResNet-50 | Potsdam | 400 | NJU Box | NJU Box |
| CMID | Swin-B | Potsdam | 400 | NJU Box | NJU Box |
| BYOL | ResNet-50 | Potsdam | 400 | NJU Box | \ |
| Barlow-Twins | ResNet-50 | Potsdam | 400 | NJU Box | \ |
| MoCo-v2 | ResNet-50 | Potsdam | 400 | NJU Box | \ |
| MAE | ViT-B | Potsdam | 400 | NJU Box | \ |
| SimMIM | Swin-B | Potsdam | 400 | NJU Box | \ |

| Method | Backbone | Pre-trained Dataset | Pre-trained Epochs | OA | Weights |
|---|---|---|---|---|---|
| CMID | ResNet-50 | MillionAID | 200 | 99.22 | NJU Box |
| CMID | Swin-B | MillionAID | 200 | 99.48 | NJU Box |
| BYOL | ResNet-50 | ImageNet | 200 | 99.22 | NJU Box |
| Barlow-Twins | ResNet-50 | ImageNet | 300 | 99.16 | NJU Box |
| MoCo-v2 | ResNet-50 | ImageNet | 200 | 97.92 | NJU Box |
| SwAV | ResNet-50 | ImageNet | 200 | 98.96 | NJU Box |
| SeCo | ResNet-50 | SeCo-1m | 200 | 97.66 | NJU Box |
| ResNet-50-SEN12MS | ResNet-50 | SEN12MS | 200 | 96.88 | NJU Box |
| MAE | ViT-B-RVSA | MillionAID | 1600 | 98.56 | NJU Box |
| MAE | ViTAE-B-RVSA | MillionAID | 1600 | 97.12 | NJU Box |

| Method | Backbone | Pre-trained Dataset | Pre-trained Epochs | mIoU (Potsdam) | Weights (Potsdam) | mIoU (VH) | Weights (VH) |
|---|---|---|---|---|---|---|---|
| CMID | ResNet-50 | MillionAID | 200 | 87.35 | NJU Box | 79.44 | NJU Box |
| CMID | Swin-B | MillionAID | 200 | 88.36 | NJU Box | 80.01 | NJU Box |
| BYOL | ResNet-50 | ImageNet | 200 | 85.54 | NJU Box | 72.52 | NJU Box |
| Barlow-Twins | ResNet-50 | ImageNet | 300 | 83.16 | NJU Box | 71.86 | NJU Box |
| MoCo-v2 | ResNet-50 | ImageNet | 200 | 87.02 | NJU Box | 79.16 | NJU Box |
| SwAV | ResNet-50 | ImageNet | 200 | 85.74 | NJU Box | 73.76 | NJU Box |
| SeCo | ResNet-50 | SeCo-1m | 200 | 85.82 | NJU Box | 78.59 | NJU Box |
| ResNet-50-SEN12MS | ResNet-50 | SEN12MS | 200 | 83.17 | NJU Box | 73.99 | NJU Box |
| MAE | ViT-B-RVSA | MillionAID | 1600 | 86.37 | NJU Box | 77.29 | NJU Box |
| MAE | ViTAE-B-RVSA | MillionAID | 1600 | 86.61 | NJU Box | 78.17 | NJU Box |

| Method | Backbone | Pre-trained Dataset | Pre-trained Epochs | mAP | Weights |
|---|---|---|---|---|---|
| CMID | ResNet-50 | MillionAID | 200 | 76.63 | NJU Box |
| CMID | Swin-B | MillionAID | 200 | 77.36 | NJU Box |
| BYOL | ResNet-50 | ImageNet | 200 | 73.62 | NJU Box |
| Barlow-Twins | ResNet-50 | ImageNet | 300 | 67.54 | NJU Box |
| MoCo-v2 | ResNet-50 | ImageNet | 200 | 73.25 | NJU Box |
| SwAV | ResNet-50 | ImageNet | 200 | 73.30 | NJU Box |
| MAE | ViT-B-RVSA | MillionAID | 1600 | 78.08 | NJU Box |
| MAE | ViTAE-B-RVSA | MillionAID | 1600 | 76.96 | NJU Box |

| Method | Backbone | Pre-trained Dataset | Pre-trained Epochs | mF1 | Weights |
|---|---|---|---|---|---|
| CMID | ResNet-50 | MillionAID | 200 | 96.95 | NJU Box |
| CMID | Swin-B | MillionAID | 200 | 97.11 | NJU Box |
| BYOL | ResNet-50 | ImageNet | 200 | 96.30 | NJU Box |
| Barlow-Twins | ResNet-50 | ImageNet | 300 | 95.63 | NJU Box |
| MoCo-v2 | ResNet-50 | ImageNet | 200 | 96.05 | NJU Box |
| SwAV | ResNet-50 | ImageNet | 200 | 95.89 | NJU Box |
| SeCo | ResNet-50 | SeCo-1m | 200 | 96.26 | NJU Box |
| ResNet-50-SEN12MS | ResNet-50 | SEN12MS | 200 | 95.88 | NJU Box |

- For details about pre-training from scratch, please refer to the pre-training instructions.
- After pre-training (or downloading the pre-trained models), make sure to extract the backbone using extract.py.
- For details about fine-tuning on the UCM scene classification task, please refer to the classification instructions.
- For details about fine-tuning on the semantic segmentation task, please refer to the semantic segmentation instructions.
- For details about fine-tuning on the DOTA OBB detection task, please refer to the OBB detection instructions.
- For details about fine-tuning on the CDD change detection task, please refer to the change detection instructions.
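The backbone extraction step mentioned above can be pictured with the following minimal sketch. It is not the repository's extract.py: the `extract_backbone` helper, the `backbone.` key prefix, and the toy checkpoint are all assumptions made for illustration. The idea is simply to keep the parameters that belong to the backbone and drop everything else (for example, projection heads used only during pre-training).

```python
from collections import OrderedDict
from typing import Any, Mapping


def extract_backbone(state_dict: Mapping[str, Any],
                     prefix: str = "backbone.") -> "OrderedDict[str, Any]":
    """Keep only entries whose key starts with `prefix`, stripping the prefix.

    NOTE: the "backbone." prefix is an assumption for illustration; the key
    layout of real CMID checkpoints may differ.
    """
    backbone = OrderedDict()
    for key, value in state_dict.items():
        if key.startswith(prefix):
            backbone[key[len(prefix):]] = value
    if not backbone:
        raise KeyError(f"no parameters found under prefix {prefix!r}")
    return backbone


# Toy checkpoint standing in for a real one (hypothetical key names; lists
# stand in for tensors so the sketch runs without extra dependencies).
checkpoint = {
    "backbone.conv1.weight": [0.1, 0.2],
    "backbone.layer1.0.bn1.bias": [0.0],
    "projector.fc.weight": [0.3],
}

backbone_weights = extract_backbone(checkpoint)
print(list(backbone_weights))
```

With a real checkpoint, the dictionary would typically come from `torch.load(path, map_location="cpu")` and the result would be written back with `torch.save`. For the actual procedure and arguments, follow extract.py in the repository.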

- Many thanks to the following repos: Remote-Sensing-RVSA, iBOT, A2MIM, core-pytorch-utils, solo-learn, OpenMixup, timm, Adan.

- If you find our work useful, please give us a 🌟 on GitHub and cite our paper in the following BibTeX format:

      @article{muhtar2023cmid,
        title={CMID: A Unified Self-Supervised Learning Framework for Remote Sensing Image Understanding},
        author={Muhtar, Dilxat and Zhang, Xueliang and Xiao, Pengfeng and Li, Zhenshi and Gu, Feng},
        journal={IEEE Transactions on Geoscience and Remote Sensing},
        year={2023},
        publisher={IEEE}
      }

      @article{muhtar2022index,
        title={Index your position: A novel self-supervised learning method for remote sensing images semantic segmentation},
        author={Muhtar, Dilxat and Zhang, Xueliang and Xiao, Pengfeng},
        journal={IEEE Transactions on Geoscience and Remote Sensing},
        volume={60},
        pages={1--11},
        year={2022},
        publisher={IEEE}
      }

- This project is strictly forbidden for any commercial purpose. If you have any questions, please contact pumpKin-Co.