Yuyang Li, Bo Liu, Yiran Geng, Puhao Li, Yaodong Yang, Yixin Zhu, Tengyu Liu, Siyuan Huang

Published in IEEE Robotics and Automation Letters (Volume: 9, Issue: 5, May 2024).

We recommend using Conda to create a virtual environment. To install dependencies:

pip install -r requirements.txt

The code in this repo is tested with:

- CUDA 12.2

- PyTorch 2.2.1

- Python 3.9
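To compare a local environment against the versions listed above, a small check like the following can help. This is a minimal sketch, not part of the repo: the function name `report_environment` is hypothetical, and it only reads version strings that Python and PyTorch expose.

```python
import importlib.util
import platform


def report_environment():
    """Collect the versions relevant to this repo into a dict."""
    info = {
        "python": platform.python_version(),
        "torch": None,
        "cuda": None,
        "cuda_available": False,
    }
    # Import torch only if it is installed, so the check itself never crashes.
    if importlib.util.find_spec("torch") is not None:
        import torch

        info["torch"] = torch.__version__
        # CUDA version PyTorch was built against (None for CPU-only builds).
        info["cuda"] = torch.version.cuda
        info["cuda_available"] = torch.cuda.is_available()
    return info


if __name__ == "__main__":
    for key, value in report_environment().items():
        print(f"{key}: {value}")
```

Note that `torch.version.cuda` reports the CUDA version of the PyTorch build, which may differ from the system-wide CUDA toolkit.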

We use a customized pytorch_kinematics for batched forward kinematics. To install it:

cd thirdparty/pytorch_kinematics

pip install -e .

Please refer to Installing PyTorch3D to install PyTorch3D with CUDA support.

Use the following command to synthesize grasps:

python run.py [--object_models OBJECT_MODELS [OBJECT_MODELS ...]]
              [--hand_model HAND_MODEL]
              [--batch_size BATCH_SIZE]
              [--max_physics MAX_PHYSICS]
              [--max_refine MAX_REFINE]
              [--n_contact N_CONTACT]
              [--hc_pen]
              [--viz]
              [--log]
              [--levitate]
              [--seed SEED]
              [--tag TAG]
              ... # See arguments

Explanations:

- --hand_model: Hand model for synthesizing grasps, "shadowhand" by default.
- --object_models: Names of the objects, specified one by one.
- --batch_size: Parallel batch size for synthesis.
- --max_physics, --max_refine: Steps for the two optimization stages.
- --n_contact: Number of contact points for each object, 3 by default.
- --hc_pen: Enable hand-penetration energy.
- --viz, --log: Enable periodic visualization and logging.
- --levitate: Synthesize grasps for levitating objects (rather than ones on the tabletop).
- --seed: Random seed, 42 by default.
- --tag: Tag for the synthesis.

For more arguments, please check run.py.
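To make the flag semantics above concrete, here is a hypothetical argparse sketch that mirrors the documented options. It is not the parser in run.py: the function name `build_parser` is made up, and only the defaults stated above ("shadowhand", 3, 42) are filled in. Any default this README does not state is left as `None`.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the documented flags (not the real run.py)."""
    p = argparse.ArgumentParser(prog="run.py")
    # One or more object names, e.g. --object_models duck cylinder
    p.add_argument("--object_models", nargs="+", default=[])
    p.add_argument("--hand_model", default="shadowhand")
    # Defaults below are unknown from the README, so they stay None here.
    p.add_argument("--batch_size", type=int, default=None)
    p.add_argument("--max_physics", type=int, default=None)
    p.add_argument("--max_refine", type=int, default=None)
    p.add_argument("--n_contact", type=int, default=3)
    # Boolean switches: present means enabled.
    p.add_argument("--hc_pen", action="store_true")
    p.add_argument("--viz", action="store_true")
    p.add_argument("--log", action="store_true")
    p.add_argument("--levitate", action="store_true")
    p.add_argument("--seed", type=int, default=42)
    p.add_argument("--tag", default=None)
    return p


if __name__ == "__main__":
    args = build_parser().parse_args(
        ["--object_models", "duck", "cylinder", "--batch_size", "1024", "--tag", "demo"]
    )
    print(args.object_models, args.hand_model, args.n_contact, args.seed)
```

With `nargs="+"`, every name after `--object_models` is collected into one list until the next flag, which matches the "one by one" usage described above.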

As an example:

python run.py --object_models duck cylinder --seed 42 --batch_size 1024 --tag demo

The result will be in synthesis/[HAND_MODEL]/[YYYY-MM]/[DD]/..., where you can find an existing example.

Use filter.py to filter the result to remove bad samples and visualize the final grasps.

- Put all the synthesis folders (under synthesis/[HAND_MODEL]/[YYYY-MM]/[DD]/...) into the paths list in filter.py.
- Run python filter.py. You can adjust the filter thresholds in the code (XXX_thres).
- Go to the folder of each synthesis to check the visualization.
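Instead of typing the folders by hand, the paths list could be gathered with a small helper such as the one below. This is only a sketch under a stated assumption: it treats every directory directly under a [DD] folder as one synthesis run, which this README does not confirm (the layout below [DD] is elided above). The function name `collect_synthesis_dirs` is hypothetical and is not part of filter.py.

```python
from pathlib import Path


def collect_synthesis_dirs(root="synthesis", hand_model="shadowhand"):
    """Return candidate run folders under root/hand_model/<YYYY-MM>/<DD>/."""
    base = Path(root) / hand_model
    if not base.is_dir():
        return []
    # Assumed layout: month folder / day folder / one folder per run.
    runs = [p for p in base.glob("*/*/*") if p.is_dir()]
    return sorted(p.as_posix() for p in runs)


if __name__ == "__main__":
    # Print the folders so they can be pasted into the paths list.
    for path in collect_synthesis_dirs():
        print(path)
```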

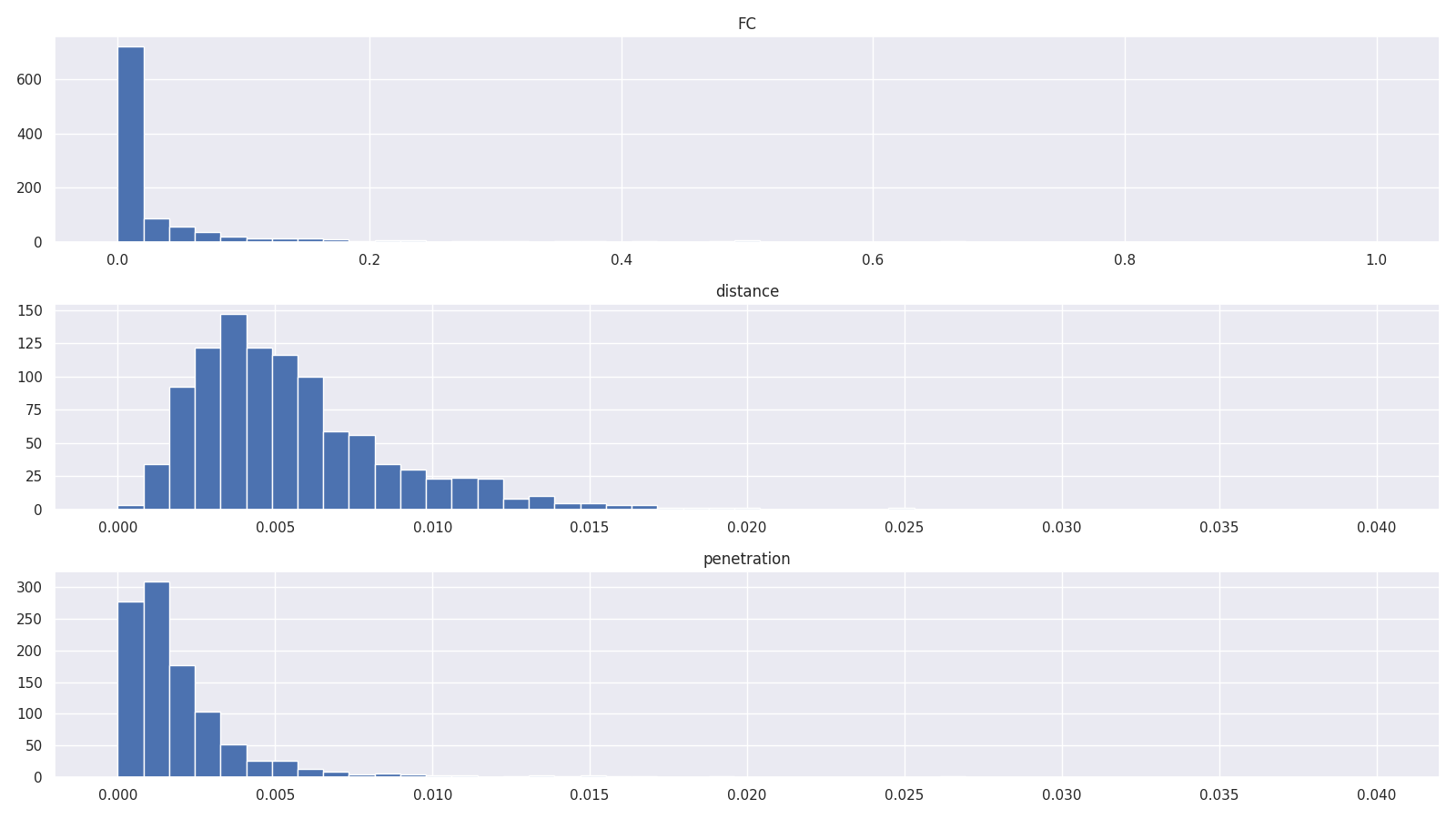

You can see the grasps as HTML files (example 1, example 2), and also a histogram for the synthesis results:

We use kaolin to perform distance computation on meshes. Please follow Installing kaolin to install NVIDIA kaolin.

To use your own object, you need to train an overfitted signed distance field (SDF) for it, which is used for distance computation.

- Put your object in data/objects/[YOUR_OBJECT_NAME].
- Put the object label and path-to-mesh in data/objects/names.json. As an example, for a mesh of a torus:

  ... "torus": "torus/torus.stl", ...

- Use train_odf.py to train the SDF, whose weights will be saved as "sdfield.pt" under the object folder:

  python train_odf.py --obj_name [YOUR_OBJECT_LABEL]

- (TBD) Prepare table-top stable orientations. We use PyBullet to simulate random drops of the objects, then collect and merge their stable rotations on the table. Results are in drop_rot_filtered_new.json. We will update guides for this soon :D
- Synthesize by specifying the object with its label, e.g. torus.
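The names.json step above can also be scripted. The sketch below assumes that names.json is a flat JSON object mapping each label to a mesh path relative to data/objects, as the torus entry suggests. The helper name `register_object` is hypothetical and does not exist in the repo.

```python
import json
from pathlib import Path


def register_object(names_path, label, mesh_path):
    """Add or update one label -> mesh-path entry in a names.json-style file."""
    names_path = Path(names_path)
    # Start from the existing mapping if the file is already there.
    names = json.loads(names_path.read_text()) if names_path.exists() else {}
    names[label] = mesh_path
    names_path.write_text(json.dumps(names, indent=4) + "\n")
    return names


if __name__ == "__main__":
    # Mirrors the torus example: label "torus", mesh at torus/torus.stl.
    register_object("data/objects/names.json", "torus", "torus/torus.stl")
```

The label registered here is the one later passed to train_odf.py via --obj_name and to run.py via --object_models.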

If you find this work useful, please consider citing it:

@article{li2024grasp,
  author={Li, Yuyang and Liu, Bo and Geng, Yiran and Li, Puhao and Yang, Yaodong and Zhu, Yixin and Liu, Tengyu and Huang, Siyuan},
  title={Grasp Multiple Objects with One Hand},
  journal={IEEE Robotics and Automation Letters},
  volume={9},
  number={5},
  pages={4027-4034},
  year={2024},
  doi={10.1109/LRA.2024.3374190}
}