Big-Data-Notes

Listing my big data notes from this Udemy Course

Section 1: Introduction to Big Data

- For data to be labeled as big data, it should have the following properties (known as the 5 Vs; some sources list 3 Vs or 11 Vs):

- Volume

- Velocity

- Variety

- Veracity (verification of data quality)

- Value

- An airplane generally has more than 5000 sensors, and each engine generates about 10 GB of data per second.

For a flight between Adana and Istanbul, which takes about 1 hour, the data created during the flight is

Data generation speed * Number of engines * Total seconds of flight

10 GB/s * 2 engines * 3600 s = 72,000 GB = 72 TB
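The same calculation as a small Python sketch. The function name `flight_data_tb` is made up for illustration, and 1 TB is taken as 1000 GB:

```python
def flight_data_tb(gb_per_second_per_engine, engines, flight_seconds):
    """Return the total data generated during a flight, in terabytes (1 TB = 1000 GB)."""
    total_gb = gb_per_second_per_engine * engines * flight_seconds
    return total_gb / 1000


# Adana-Istanbul example from the note: 10 GB/s per engine, 2 engines, 1 hour
print(flight_data_tb(10, 2, 3600))  # 72.0
```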

- An infographic on social media is below

- Variety means having different data sources such as images, text, audio, sensors, CSV files, database tables etc.

- Veracity (verification) means checking that the data is valid. For instance, a speed value can't be negative and a probability can't exceed 1.
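A minimal sketch of such sanity checks in Python. The field names `speed` and `probability` and the function `find_invalid_records` are assumptions made for this example, not part of any big data tool:

```python
def find_invalid_records(records):
    """Return (record, reason) pairs for records that break basic sanity rules."""
    problems = []
    for record in records:
        # A speed value can't be negative
        if record.get("speed", 0) < 0:
            problems.append((record, "speed is negative"))
        # A probability must stay between 0 and 1
        if not 0 <= record.get("probability", 0) <= 1:
            problems.append((record, "probability outside [0, 1]"))
    return problems


sample = [
    {"speed": 80.0, "probability": 0.4},
    {"speed": -5.0, "probability": 0.9},
    {"speed": 60.0, "probability": 1.3},
]
for record, reason in find_invalid_records(sample):
    print(record, "->", reason)
```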

- Hadoop and Spark are the 2 most popular big data technologies. Both are based on distributed computing.

- Hadoop has 2 parts: HDFS and MapReduce. HDFS stores data in a distributed way and MapReduce processes it. MapReduce jobs to process the data can be developed with:

- Apache Pig

- Apache Hive

- Spark has no storage layer of its own. It processes data in RAM, which can make it up to 100 times faster than Hadoop MapReduce.

- If there are 5 machines in a cluster, 1 machine is the master and the remaining 4 machines are slaves. Data is replicated in Hadoop according to a replication factor.
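To make the replication factor concrete, here is a toy Python sketch that copies each data block to several slave machines. The round-robin placement and the names `place_blocks`, `worker-1` etc. are simplifying assumptions; real HDFS chooses machines with its own placement policy:

```python
def place_blocks(blocks, workers, replication_factor):
    """Assign each block to `replication_factor` distinct workers, round-robin."""
    if replication_factor > len(workers):
        raise ValueError("replication factor cannot exceed the number of workers")
    placement = {}
    for index, block in enumerate(blocks):
        # Start at a different worker for each block and take the next ones in order
        placement[block] = [
            workers[(index + offset) % len(workers)]
            for offset in range(replication_factor)
        ]
    return placement


workers = ["worker-1", "worker-2", "worker-3", "worker-4"]
placement = place_blocks(["block-A", "block-B", "block-C"], workers, replication_factor=3)
for block, nodes in placement.items():
    print(block, "->", nodes)
```

With a replication factor of 3, every block survives the loss of any 2 machines in this sketch.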

- Data shouldn't be transferred directly to Hadoop because it may be lost. Kafka should receive the data first, and the data then moves on to Hadoop.

- NoSQL databases are essential in big data technologies because they are scalable. The 4 types of NoSQL databases are as follows:

- Document: MongoDB and Elasticsearch. We can upload a JSON file to MongoDB.

- Wide Column: HBase (which runs on Hadoop), Cassandra

- Key Value: Redis, DynamoDB

- Graph DB: Neo4j, used in social media analysis.

- When we set up a NoSQL database, write operations are generally carried out via the master node and read operations via the slave nodes.

Hadoop

- Hadoop is a Java framework that enables parallel processing of big data (PB, EB) across multiple machines.

- Hadoop consists of 4 components:

- Hadoop Common: Required by Apache Hive and Apache Pig; it gives them access to HDFS.

- HDFS: Hadoop Distributed File System

- Hadoop YARN: It manages the CPU, RAM and disk usage of MapReduce applications.

- Hadoop MapReduce: Tools to process data stored on HDFS.

- HDFS can be illustrated as follows:

- MapReduce can be visualized as follows:
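As a rough illustration of the map, shuffle and reduce steps, here is a toy word count in plain Python. It runs in a single process and is not Hadoop code; the function names are made up for this sketch:

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in a line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all values that share the same key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine the values of one key into a single result."""
    return key, sum(values)


def word_count(lines):
    mapped = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = word_count(["big data notes", "big data tools"])
print(counts)
```

In Hadoop the map and reduce calls run on different machines and the shuffle moves data between them; here everything happens in memory just to show the flow.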

- Hadoop has 3 installation modes.

- Standalone mode: Used for debugging and testing

- Single Node Cluster: Replication factor = 1. The master and the slave are the same machine.

- Multiple Node Cluster: Replication factor > 1; 1 machine is the master and the others are slaves. Hadoop should be installed on each machine and the network should be configured.

- Cloudera is a platform on which Hadoop, Apache Pig, Apache Hive and Apache Spark come installed. Install VirtualBox and run Cloudera in it as a virtual operating system.

- Some HDFS commands for the Hadoop terminal are below:

# To create a directory on HDFS

hdfs dfs -mkdir /example

# To transfer a file to HDFS

hdfs dfs -copyFromLocal /path/of/file/in/local /target/directory/on/hdfs

# To see the number of files in a hdfs directory

hdfs dfs -count /example

# To see the content of a file in HDFS

hdfs dfs -cat /example/ratings.csv

# To copy a file in HDFS

hdfs dfs -cp /example/ratings.csv /var

# To delete a file

hdfs dfs -rm /example/ratings.csv

# To move a file to another directory

hdfs dfs -mv /example/ratings.csv /var

# To list files in usr folder

hdfs dfs -ls /usr

# To change permissions of a file

hdfs dfs -chmod +x runall.sh

# To set replication factor of HDFS(should be used in a cluster where a master and slaves exist)

hdfs dfs -setrep -R 4 /example/ratings.csv

# Copy file to local

hdfs dfs -copyToLocal /path/in/hdfs /path/in/local

- The HDFS NameNode listens on port 8020. On a local setup, the HDFS web UI works on port 50070 (Hadoop 2.x).

Apache Pig

- Apache Pig is one of the ways to develop MapReduce jobs in Hadoop. It processes data stored in HDFS.

- We can develop MapReduce jobs via Java, Python, Scala, Apache Pig and Apache Hive. However, Apache Pig and Apache Hive are generally preferred.

- Apache Pig scripts are written in the Pig Latin language.

- The Pig file extension is .pig.

- An example Apache Pig script is below:

-- Loading data

First = LOAD '/data/*' USING PigStorage(',') AS

(

userId: int,

movieId: int,

rating: double,

duration: double,

date: int,

country: chararray

);

-- To print data

DUMP First;

New_Data = FILTER First BY rating>3.0;

DUMP New_Data;

-- Drop duplicate rows

New_Data2 = DISTINCT New_Data;

DUMP New_Data2;

New_Data3 = GROUP First BY country;

DUMP New_Data3;

-- Average duration per country ("ortalama" means "average")

AVG_DATA = FOREACH New_Data3 GENERATE group, AVG(First.duration) AS ortalama;

DUMP AVG_DATA;

-- Inner join

JOINED_DATA = JOIN Personal BY DEPT_ID, DEPARTMENT BY DEPT_ID;

DUMP JOINED_DATA;

--Left Join

JOINED_DATA = JOIN Personal BY DEPT_ID LEFT OUTER, DEPARTMENT BY DEPT_ID;

-- Saving

STORE JOINED_DATA INTO '/path/to/hdfs/directory' USING PigStorage(',');

- To run pig script,

pig path/to/directory/filename.pig

- The bincond operator (?:) is the 'if else' of Apache Pig.

(a == 3 ? 'true' : 'false')

- We can perform regex operations in Apache Pig.

- Standard aggregation functions such as MAX, MIN and AVG are available in Apache Pig.

Apache Hive

- Apache Hive is a tool to develop MapReduce jobs in the Hadoop environment.

- We write SQL queries for Apache Hive.

- Avoid running COUNT and WHERE operations directly on Apache Hive, since each query has to fetch the data from Hadoop.

MongoDB

- It is a popular and scalable NoSQL technology.

- MongoDB stores data in BSON (Binary JSON), a binary format similar to JSON.

- RDBMS vs NoSQL is below:

- The different terminologies for RDBMS and NoSQL are below:

- While inserting a new record into MongoDB, a unique id is created as follows:
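MongoDB's default id is an ObjectId: 12 bytes made of a 4-byte timestamp, a 5-byte random value and a 3-byte counter, shown as 24 hex characters. Below is a hedged Python sketch that builds an id with the same layout. The function `new_object_id_like` is hypothetical and is not the MongoDB driver's implementation:

```python
import itertools
import os
import time

# Random part chosen once per process, and a counter starting at a random value
_RANDOM_PART = os.urandom(5)
_COUNTER = itertools.count(int.from_bytes(os.urandom(3), "big"))


def new_object_id_like():
    """Build a 12-byte id (timestamp + random + counter) as a 24-character hex string."""
    timestamp = int(time.time()).to_bytes(4, "big")
    counter = (next(_COUNTER) % 0x1000000).to_bytes(3, "big")
    return (timestamp + _RANDOM_PART + counter).hex()


print(new_object_id_like())
print(new_object_id_like())
```

Because the timestamp comes first, ids created later sort after earlier ones in most cases.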

- After installing MongoDB, start a MongoDB server in Terminal 1 and run commands in Terminal 2.

- To create a DB in MongoDB

use DB_NAME_TO_CREATE

- To list DBs in MongoDB (admin is a default DB that comes with the installation)

show dbs

- To show current DB

db

- To create collection(Table)

db.createCollection("Persons")

- To show collections on DB,

show collections

- To insert a document(row) to a collection(table)

db.Persons.insert( {ad: "albert einstein"} )

db.Persons.insert( {ad: "madam curie"} )

db.Persons.insert( {ad: "isaac newton"} )

db.Persons.insert( {ad: "celal sengor"} )

- To have a look at the collection

db.Persons.find()

- To filter on mongo db

db.Persons.find( {ad: "isaac newton"} )

- Robomongo (Robo 3T) is used in industry instead of the CLI we used above. Robo 3T is a GUI, like pgAdmin for PostgreSQL.

- MongoDB's default port is 27017.

- We can use MongoDB from Java, Python and C#.

- Maven is a Java build tool used in enterprise Java projects. Instead of adding libraries one by one, we add a dependency entry to Maven and it downloads what is required. We can use Maven to access MongoDB.

- Java code is stored under src/main/java in a Maven project.

- pom.xml is our Maven config file. We list the libraries to use under the `<dependencies>` tag.

- All documents (rows) are independent of each other. One document may have 50 fields while another has 3. This makes MongoDB flexible. We don't have to define 1 to N, N to N or 1 to 1 relationships.

- We can perform CRUD operations on MongoDB from Java.

- For this kind of flexible data, using MongoDB can be easier and more efficient than using MySQL.

Elastic Search

- Elastic Search is a NoSQL technology used for text search on big data.

- If search is your main focus, prefer Elastic Search.

- ES indexes words when a document is saved. Therefore, searching is faster.
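The idea of indexing words at save time can be sketched with a tiny inverted index in Python. This is only an illustration of the concept with made-up function names; ES does much more (analysis, scoring, sharding):

```python
from collections import defaultdict


def build_inverted_index(documents):
    """Map every word to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in text.lower().split():
            index[word].add(doc_id)
    return index


def search(index, word):
    """Look a word up directly instead of scanning every document."""
    return sorted(index.get(word.lower(), set()))


docs = {1: "red apple phone", 2: "green apple juice", 3: "red car"}
index = build_inverted_index(docs)
print(search(index, "apple"))  # [1, 2]
```

The work is done once when documents are saved, so a search becomes a dictionary lookup.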

- A visualization for Elastic Search is below:

- Terminology for Elastic Search

- Elastic Search also has a cluster architecture, like other big data technologies.

- Elastic Search keeps its data in JSON format because it is document-based. You can import a JSON file into Elastic Search directly.

- The POST HTTP verb is preferred for sending sensitive information like passwords or credit card details, because the data goes in the request body instead of the URL.

- Elastic Search uses port 9200.

- To upload a JSON document to an ES cluster, use the PUT HTTP verb in Postman and send a JSON object (under the Body tab) to

localhost:9200/db_name/table_name/id_of_document

or use the POST HTTP verb and

localhost:9200/db_name/table_name

We don't have to specify id_of_document with POST. POST assigns a random id to the document.

- To query a document in Elastic Search, use the GET HTTP verb and

localhost:9200/db_name/table_name/id_of_document

- To get a selected product, use the GET HTTP verb

localhost:9200/exam/product/126

- To check whether a document exists in ES, use the GET HTTP verb

localhost:9200/exam/product/126?_source=false

- To get only selected fields (the name and color fields of the product with id 126), use the GET HTTP verb

localhost:9200/exam/product/126?_source_include=name,color

- To delete a document from ES, use the DELETE HTTP verb

localhost:9200/exam/product/126

- To filter only brand=apple, use the GET HTTP verb

localhost:9200/exam/product/_search?q=brand:apple

- To get all the information on the DB,

localhost:9200/_all

- What we did above in Postman is for testing. In practice, ES should be accessed from a programming language such as Java or Python.

- ES can be used in a Maven project in Java. ES should be added as a dependency in pom.xml.

- Check out the ES Java and Python APIs.

- Data sent to ES must be JSON. We can send data to ES via Java or Python. Sending data to ES is called indexing.

- An ES index is created with 5 primary shards by default (in versions before 7.0).
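A document is placed on a shard by hashing its routing value (the document id by default) and taking the remainder by the number of primary shards. A small Python sketch of that rule follows; `pick_shard` is a hypothetical helper, and `zlib.crc32` is only a stand-in because ES uses its own Murmur3-based hash:

```python
import zlib


def pick_shard(document_id, number_of_primary_shards=5):
    """Return the shard number for a document: hash(id) % number of primary shards."""
    # crc32 is a stand-in hash for illustration, not the hash ES really uses
    return zlib.crc32(document_id.encode("utf-8")) % number_of_primary_shards


for document_id in ["126", "127", "128"]:
    print(document_id, "-> shard", pick_shard(document_id))
```

The same id always lands on the same shard, which is why the number of primary shards cannot be changed freely after an index is created.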

Apache Kafka

- The critical point in big data projects is to collect data quickly and without loss.

- We send data from the source to Apache Kafka, not directly to the analysis tools.

- Apache Kafka has a message-queue architecture (FIFO: first in, first out). It is distributed and used in real-time projects. It is written in Scala and Java.

- Kafka has a producer and consumer architecture.

- A Kafka cluster consists of many computers. Each computer is called a broker. Each broker may hold several topics.

- Kafka is distributed, which means that topics can be replicated according to a replication factor (= 3 in the example below).

- In order to run a Kafka cluster, run Zookeeper first.

- Zookeeper handles coordination in distributed server architectures. It manages configuration and keeps the configuration files.

- Zookeeper and Kafka versions must be compatible.

- Zookeeper uses port 2181. Kafka uses port 9092.

- The Producer is a Kafka API that sends data to a Kafka topic. The Consumer is a Kafka API that reads data from a Kafka topic, for example to send it to Hadoop or Spark.
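The producer, topic and consumer roles can be sketched in plain Python as an append-only log that consumers read in order. This is a toy in-memory model with made-up class names, not the Kafka client API:

```python
class Topic:
    """A topic modeled as an append-only list of messages."""

    def __init__(self, name):
        self.name = name
        self.log = []

    def produce(self, message):
        """Append a message and return its offset (position in the log)."""
        self.log.append(message)
        return len(self.log) - 1


class Consumer:
    """A consumer that remembers how far it has read in a topic."""

    def __init__(self, topic):
        self.topic = topic
        self.offset = 0

    def poll(self):
        """Return all messages not read yet, in the order they were produced (FIFO)."""
        messages = self.topic.log[self.offset:]
        self.offset = len(self.topic.log)
        return messages


topic = Topic("sensor-readings")
for reading in ["t=21.5", "t=21.7", "t=22.0"]:
    topic.produce(reading)

consumer = Consumer(topic)
print(consumer.poll())  # ['t=21.5', 't=21.7', 't=22.0']
print(consumer.poll())  # []
```

Reading does not delete messages from the topic; each consumer only moves its own offset forward.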

- To create a topic in Apache Kafka

kafka-topics.bat --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic TOPIC_NAME_TO_USE

- To list topics in Apache Kafka

kafka-topics.bat --list --zookeeper localhost:2181

- To start a console producer in Apache Kafka

kafka-console-producer.bat --broker-list localhost:9092 --topic TOPIC_NAME

- We can create a producer in Java or Python and send data to Kafka with it. In most cases, the consumer and the producer are written in a programming language and then linked.

- To start a console consumer in Apache Kafka

kafka-console-consumer.bat --zookeeper localhost:2181 --topic TOPIC_NAME

- In Java, add the Kafka dependencies to pom.xml.

- Consumers listen to topics via the subscribe method in Java. A consumer can listen to many topics at the same time.

Apache Spark

- Spark is developed in Scala. It works in RAM and is therefore faster than Hadoop. Spark has no storage unit of its own.

- Spark vs Hadoop

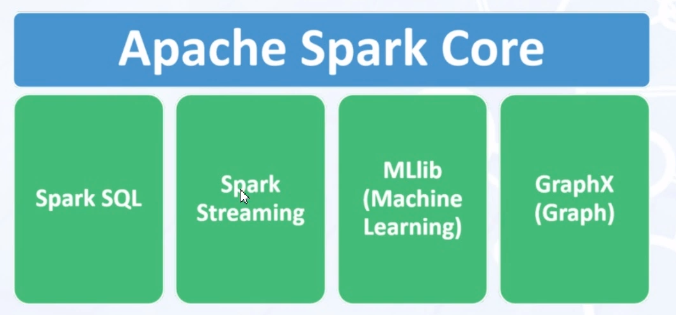

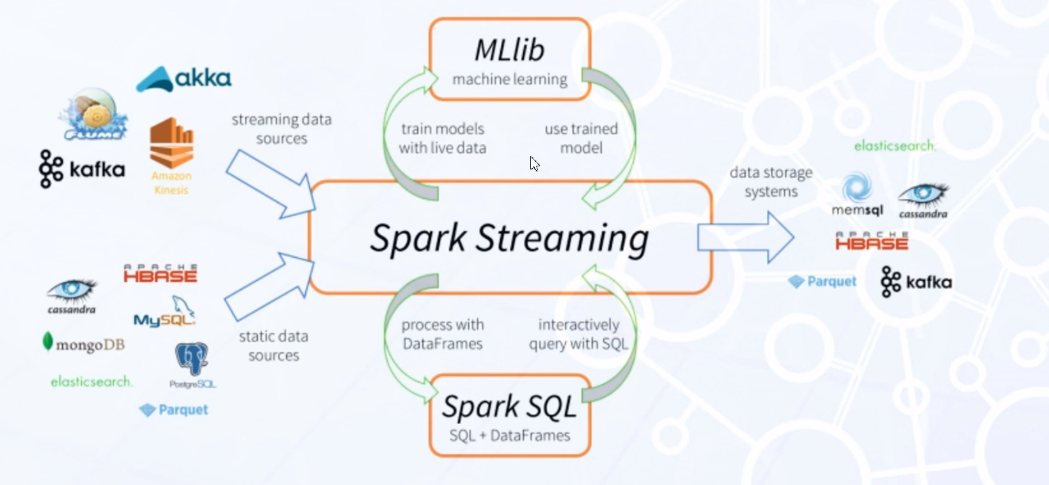

- Spark has a machine learning library called MLlib. Real-time data analysis can be done via Spark Streaming. We can do big data analysis via Spark SQL.

- The opposite of real-time analysis is batch analysis. Batch analysis is done in Hadoop and real-time analysis is done in Spark.

- Apache Spark also has a cluster architecture.

- Generally, data is stored in Hadoop's HDFS and processed in Spark. Hadoop is advantageous for storage (HDFS) and Spark is advantageous for processing.

- RDD stands for Resilient Distributed Dataset. The RDD is the fundamental data structure of Apache Spark.

- Apache Spark made a big change in version 2.0. It is better practice to use Spark 2.0 or later. Before 2.0 Spark centered on the DataFrame architecture; from 2.0 on it uses the Dataset architecture (a DataFrame is a Dataset of rows).

- Add Spark as a Maven dependency in pom.xml.

- RDD methods are important. Some RDD methods are count, first, take etc.

- We can load data into an RDD from the local computer, HDFS or zipped files.

- RDD operations are of 2 types: transformations and actions. map and filter are examples of transformations. count and first are examples of actions.

- The map method of an RDD creates a new RDD.

- map, foreach and filter are the 3 most used RDD methods.

- The filter method filters the input RDD and creates a new RDD.

- flatMap, mapPartitions, distinct, sample, union and subtract are transformation methods for RDDs. They create a new RDD after the transformation.

- A PairRDD is an RDD of key-value pairs. It is created by a transformation such as mapToPair in Java.

- Spark SQL is used more than RDDs.

- Lazy evaluation means that transformations on an RDD are only recorded, not executed, until an action method is called. It is a concept asked about in interviews. There must be an action method at the end of the code.
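Lazy evaluation can be imitated in plain Python. The `LazyDataset` class below is a made-up toy, not the Spark API: `map` and `filter` only record the steps, and nothing runs until the `collect` or `count` action is called:

```python
class LazyDataset:
    """Toy dataset that records transformations and runs them only on an action."""

    def __init__(self, source, steps=None):
        self._source = source
        self._steps = steps or []

    def map(self, fn):
        # Transformation: returns a new dataset, does no work yet
        return LazyDataset(self._source, self._steps + [("map", fn)])

    def filter(self, predicate):
        # Transformation: returns a new dataset, does no work yet
        return LazyDataset(self._source, self._steps + [("filter", predicate)])

    def _run(self):
        for item in self._source:
            keep = True
            for kind, fn in self._steps:
                if kind == "map":
                    item = fn(item)
                elif not fn(item):
                    keep = False
                    break
            if keep:
                yield item

    def collect(self):
        # Action: triggers the recorded steps and returns the results
        return list(self._run())

    def count(self):
        # Action: triggers the recorded steps and counts the results
        return sum(1 for _ in self._run())


calls = []


def double(x):
    calls.append(x)  # record that real work happened
    return x * 2


pipeline = LazyDataset([1, 2, 3, 4]).map(double).filter(lambda x: x > 4)
calls_before_action = len(calls)  # still 0: nothing has run yet
result = pipeline.collect()       # the action triggers the work
print(calls_before_action, result)
```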

- collect is an action method of RDD. It gathers the data from the slaves to the master.

- take is an action method of RDD. It is like head of a pandas DataFrame in Python.

- We can save RDDs to text/CSV files or HDFS via the saveAsTextFile action method.

- Converting the input into RDDs via a model class is the critical point of the Java code.

- MongoDB has an Apache Spark integration called the MongoDB Spark Connector. Add this library as a dependency in pom.xml to use it in Java.

- We can save the result of our analysis into MongoDB. MongoDB accepts data in JSON format.

Spark SQL

- Spark SQL lets us write SQL-like functions in order to do analysis.

- In order to use Spark SQL in Java, add it to pom.xml as a dependency.

- Spark Core (RDDs and the section above) uses SparkContext; Spark SQL uses SparkSession.

- Spark Core and Spark SQL are compared in academic articles. From version 2.0 onward, Spark SQL outperforms; before version 2.0, Spark Core outperforms.

- Spark SQL is easier than RDDs (Spark Core).

- We can select a subset of the columns of the raw data via the select method:

data.select("col1","col2")

We can also use group by in Spark SQL.

- We can read nested JSON objects in a JSON file.

- We can filter data via the filter method of Dataset in Spark SQL.

- Spark was inspired by Hadoop and depends on Hadoop Common, so we should specify it explicitly.

- We can write SQL queries in Java for a Spark Dataset instead of the object-oriented methods above.

rawdata.createOrReplaceTempView("person");

sparkSession.sql("SELECT * FROM person");

- A temp view disappears when the Spark session that created it is closed, even if the Spark cluster is still running. To share a view across sessions, use createOrReplaceGlobalTempView. It is useful for teams collaborating with each other.

- An Encoder in Spark converts the JSON data that is read into a model defined in a Java class.

- We store data in Hadoop HDFS, process it in Spark and then write the processed data back to Hadoop HDFS. Other possible scenarios: read from HDFS and write to NoSQL, or read from Kafka and write to HDFS.

Real Time Streaming

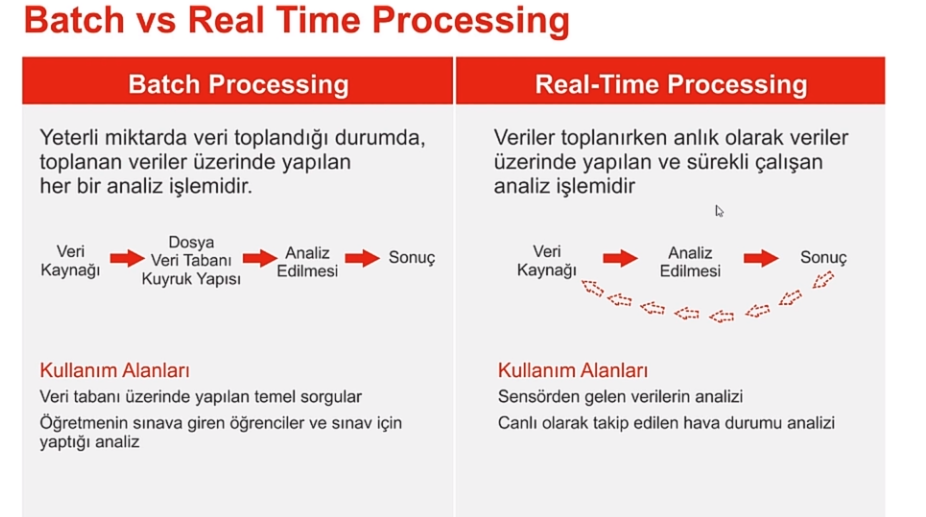

- Batch Processing vs Real Time Processing

- Batch processing is analysis of stationary (static) data.

- Monitoring newborn babies via sensors, self-driving cars, Amazon Go and Google Ads are examples of real-time processing.

- Some real-time streaming technologies are below

- Spark Streaming: A sub-project of Spark

- Flink: Similar to Spark Streaming. Used a lot before Spark 2.2.

- Apache: Not used much now, but it is the basis of Spark Streaming and Flink.

- Amazon Kinesis: A product of Amazon. It is an enterprise service. No coding; drag and drop via GUIs.

- All of the above technologies (Spark Streaming, Amazon Kinesis etc.) can be developed via Scala, Python or Java.

-

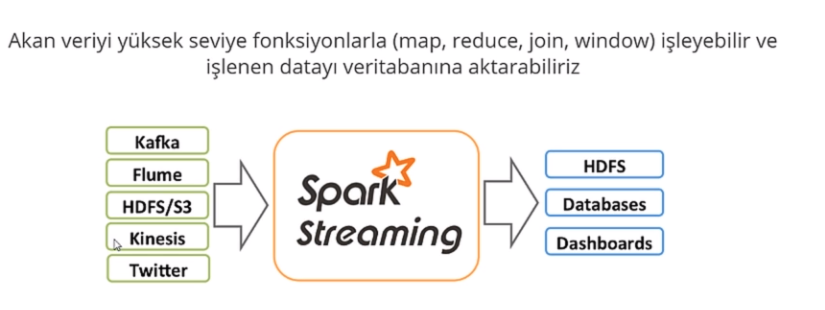

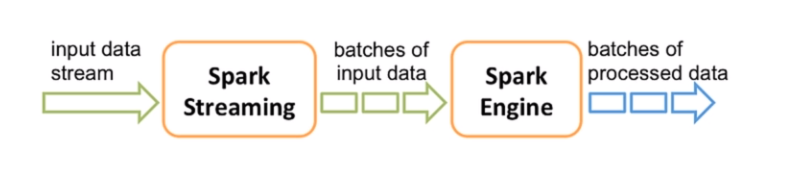

Spark Streaming is a library running on Spark Engine

- Spark Streaming splits data into batches and these batches were fed into Spark Engine. Batches of processed data on Spark Engine are final outputs.

- Netcat is a command-line networking tool that can send text over a port; its data can be processed in real-time analysis.

- For Spark Streaming, we can get data by listening on a port, reading from HDFS, reading from Apache Kafka etc.

- IoT sensor data can be processed in Spark Streaming in real time.

- Complete mode runs the analysis over all the data received so far. Update mode processes only the recent data and updates the results it changes.

- Real-time analysis has 2 types:

- Original Stream: Processing data immediately.

- Windowed (Time) Stream: Partitioning data into time frames and then analyzing each frame.

- The original data doesn't have a timestamp value; the stream adds it.

- An example of a windowed stream is below:
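A windowed stream can be sketched in plain Python by grouping timestamped events into fixed-size time frames (tumbling windows). The function name and the sample events are assumptions for illustration; this is not Spark Streaming code:

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per fixed time window, keyed by the window start time."""
    counts = defaultdict(int)
    for timestamp, _value in events:
        # Round the timestamp down to the start of its window
        window_start = (timestamp // window_seconds) * window_seconds
        counts[window_start] += 1
    return dict(sorted(counts.items()))


# (timestamp in seconds, value) pairs
events = [(0, "a"), (3, "b"), (9, "c"), (10, "d"), (25, "e")]
print(tumbling_window_counts(events, 10))  # {0: 3, 10: 1, 20: 1}
```

With 10-second windows, the events at 0, 3 and 9 seconds fall into the first frame, and each later frame is analyzed separately.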

Apache Kafka - Spark Streaming Integration

- An example of Kafka and Spark Streaming is below:

- We can produce and send data to Kafka via Python and consume data from Kafka via Java using client libraries.

- To convert data into JSON in the Kafka producer, add Google's Gson package to pom.xml.

MLlib

- Google Cloud uses the data uploaded to its systems. Therefore, its ML services are very good.

-

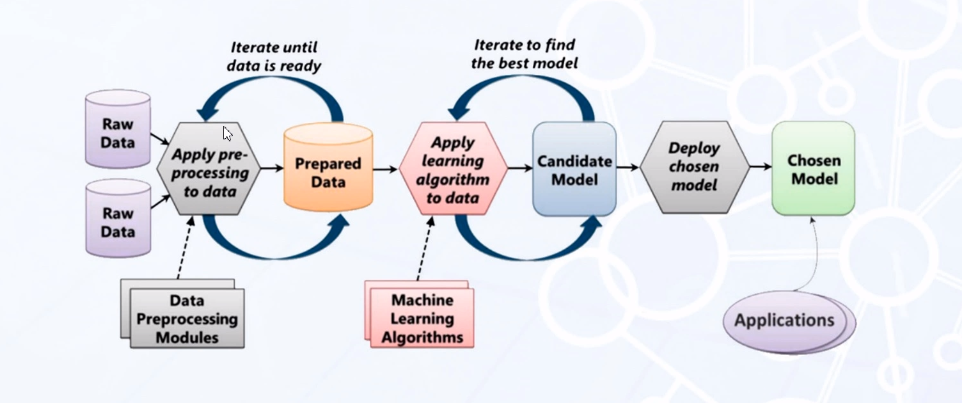

ML lifecycle is below:

- MLlib is scalable. We can add machines to the cluster or remove machines from it.

- MLlib can be used from Python, Java, R and Scala.

- MLlib has been available since Spark 0.8.

-

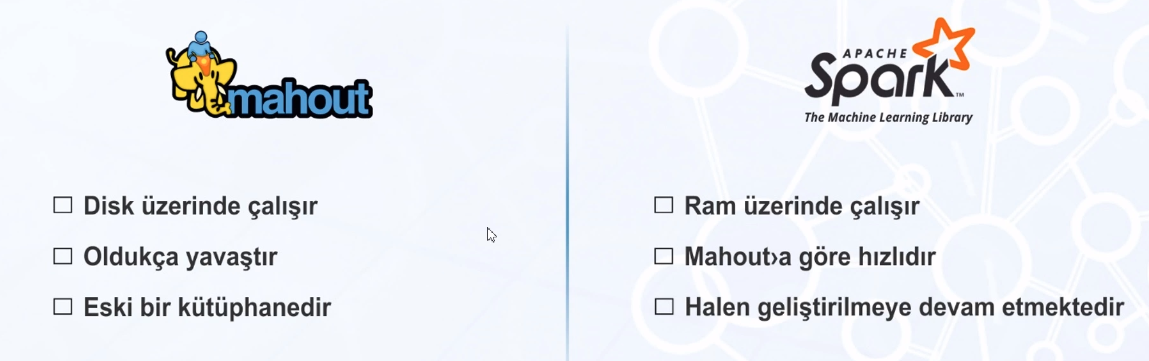

Mahout is an alternative to MLLIB. Mahout is outdated and not developed any more.

- Apache Spark usage example is below

- Linear Regression, Naive Bayes, Decision Trees and Random Forest are available in Spark MLlib.

Other notes

- Apache Flume is a technology for moving log or event data (web server logs, application logs, social media feeds) from Kafka to HDFS. It can be installed with Helm. Data can be transferred after accumulating in Kafka, based on criteria such as every 10 minutes or on reaching a limit of 1000.