- TensorRT engine inference with ONNX model conversion

- Dockerized environment with CUDA 10.2, TensorRT 7, and OpenCV 3.4 built with CUDA support

- ResNet50 preprocessing and postprocessing implementation

- Ultraface preprocessing and postprocessing implementation

- Pull the container image from the repo packages:

docker pull ghcr.io/mrlaki5/tensorrt-onnx-dockerized-inference:latest

- Download the TensorRT 7 installation from link

- Place the downloaded TensorRT 7 .deb file in the root directory of this repo

- Build the image:

cd ./docker

./build.sh

From the root of the repo, start the Docker container with the command below:

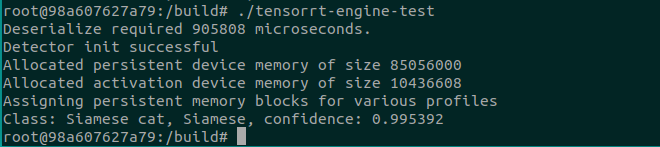

./docker/run.sh

Then run the ResNet50 test:

./ResNet50_test

- Input image

- Output: Siamese cat, Siamese (confidence: 0.995392)

- Note: the Ultraface test requires a camera device. It starts a GUI that shows the camera stream overlaid with face detections.

./Ultraface_test