This repository provides a PyTorch implementation of Lip2Wav.

I have tried to match the official implementation as closely as possible, but it may still contain mistakes, so please use it with care. Suggestions for this repository are welcome. Thank you!

- python >= 3.5.2

- torch >= 1.0.0

- numpy

- scipy

- pillow

- inflect

- librosa

- Unidecode

- matplotlib

- tensorboardX

- ffmpeg

sudo apt-get install ffmpeg
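Before training, it can help to confirm that the requirements above are actually present. The following is a minimal sketch, not part of this repository: the helper names `find_missing` and `python_is_supported` are hypothetical, and the import names are assumed to be the usual ones for each package (`PIL` for pillow, `unidecode` for Unidecode). It only checks that the modules can be found and that `ffmpeg` is on the `PATH`; apart from the Python version, it does not verify version numbers such as torch >= 1.0.0.

```python
import importlib.util
import shutil
import sys

# Import names for the packages listed above. The pip name and the import
# name differ for pillow (PIL) and Unidecode (unidecode).
REQUIRED_MODULES = [
    "torch", "numpy", "scipy", "PIL", "inflect",
    "librosa", "unidecode", "matplotlib", "tensorboardX",
]


def find_missing(modules):
    """Return the top-level module names that cannot be found."""
    return [name for name in modules
            if importlib.util.find_spec(name) is None]


def python_is_supported(version_info=sys.version_info, minimum=(3, 5, 2)):
    """Check the interpreter version against the minimum listed above."""
    return tuple(version_info[:3]) >= minimum


if __name__ == "__main__":
    if not python_is_supported():
        print("Python >= 3.5.2 is required")
    missing = find_missing(REQUIRED_MODULES)
    if missing:
        print("Missing Python packages: {}".format(", ".join(missing)))
    # ffmpeg is a system binary, so look it up on PATH instead of importing it.
    if shutil.which("ffmpeg") is None:
        print("ffmpeg was not found on PATH")
```

The script prints one line per problem it finds and prints nothing when everything is available.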

You can download the datasets from the original Lip2Wav repository; this repository uses the same dataset directory format. Please preprocess the data first by following the steps listed in the original repository.

- To train Lip2Wav-pytorch, run the following command.

python train.py --data_dir=<dir/to/dataset> --log_dir=<dir/to/models>

- To train starting from a pretrained model, run the following command.

python train.py --data_dir=<dir/to/dataset> --log_dir=<dir/to/models> --ckpt_dir=<pth/to/pretrained/model>

- To test a trained model, run the following command.

python test.py --data_dir=<dir/to/dataset> --results_dir=<dir/to/save/results> --checkpoint=<pth/to/model>
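If you launch these commands from another script (for example, to run several experiments in a row), the three invocations above can be assembled programmatically. This is only a sketch: `build_train_command` and `build_test_command` are hypothetical helpers, not part of this repository, and they use only the flags shown in the commands above. The example paths in the usage comment are placeholders.

```python
import sys


def build_train_command(data_dir, log_dir, ckpt_dir=None):
    """Assemble the train.py call; --ckpt_dir is added only when given."""
    cmd = [
        sys.executable, "train.py",
        "--data_dir={}".format(data_dir),
        "--log_dir={}".format(log_dir),
    ]
    if ckpt_dir is not None:
        # Start from a pretrained model, as in the second command above.
        cmd.append("--ckpt_dir={}".format(ckpt_dir))
    return cmd


def build_test_command(data_dir, results_dir, checkpoint):
    """Assemble the test.py call with the three flags shown above."""
    return [
        sys.executable, "test.py",
        "--data_dir={}".format(data_dir),
        "--results_dir={}".format(results_dir),
        "--checkpoint={}".format(checkpoint),
    ]


# Usage (run from the repository root; paths are placeholders):
#   import subprocess
#   subprocess.run(build_train_command("data/chem", "logs/chem"), check=True)
```

Passing the command as a list avoids shell quoting problems when a dataset path contains spaces.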

Currently, only the pretrained model for the Chemistry Lectures dataset is available. You can download the model here.

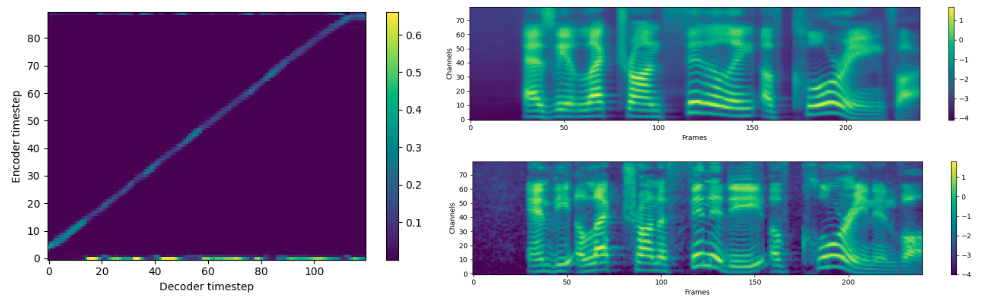

You can find an example test result in the results folder. The following figure shows the attention alignment (left), the test result from the postnet (top right), and the ground-truth Mel spectrogram (bottom right).

This repository is modified from the original Lip2Wav and is heavily based on Tacotron2-Pytorch. I am very thankful to the authors of both codebases.