🌐 Homepage • 📃 Paper • 🤗 Data (PVD-160k) • 🤗 Model (PVD-160k-Mistral-7b) • 💻 Code

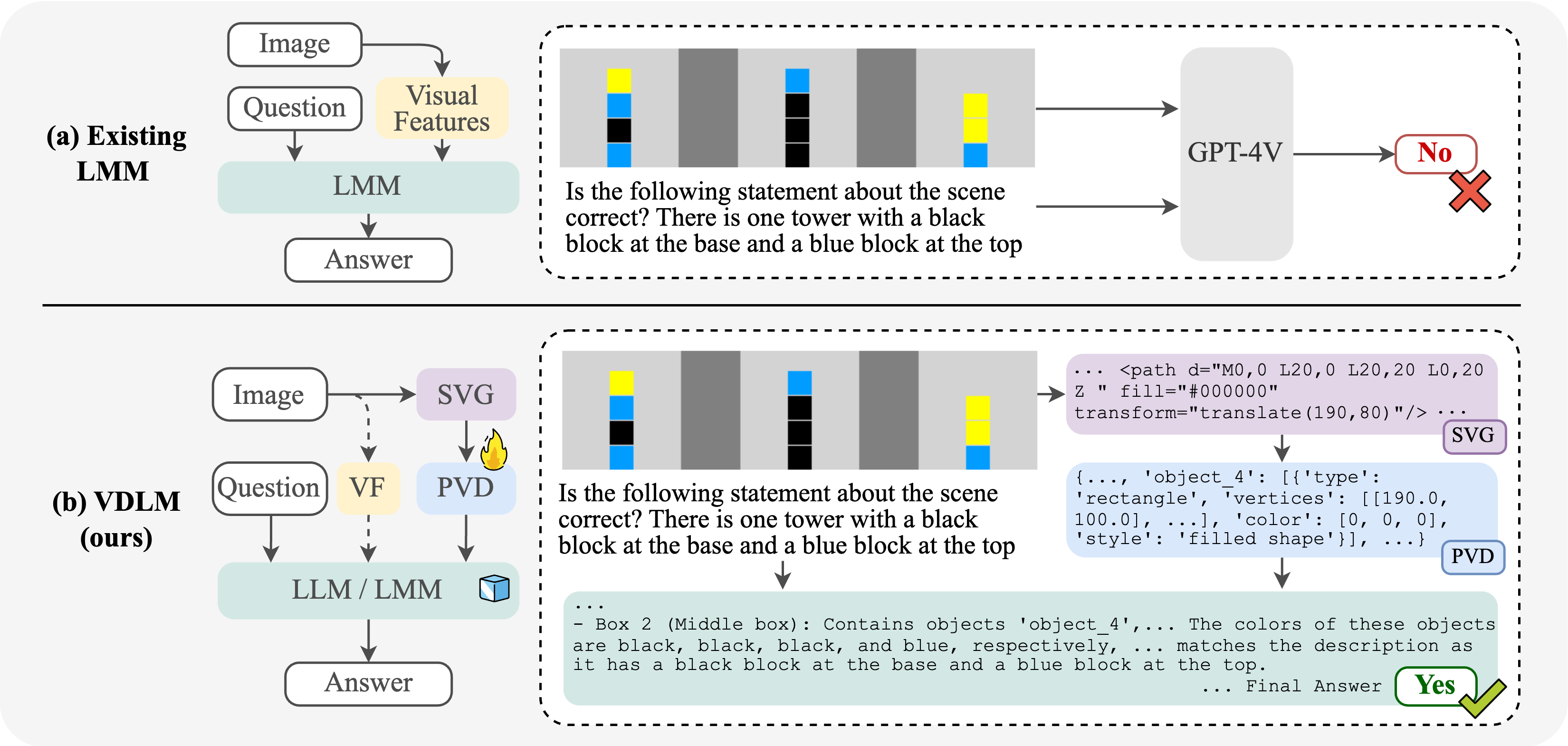

We observe that current large multimodal models (LMMs) still struggle with seemingly straightforward reasoning tasks that require precise perception of low-level visual details, such as identifying spatial relations or solving simple mazes. In particular, this failure mode persists in question-answering tasks about vector graphics—images composed purely of 2D objects and shapes.

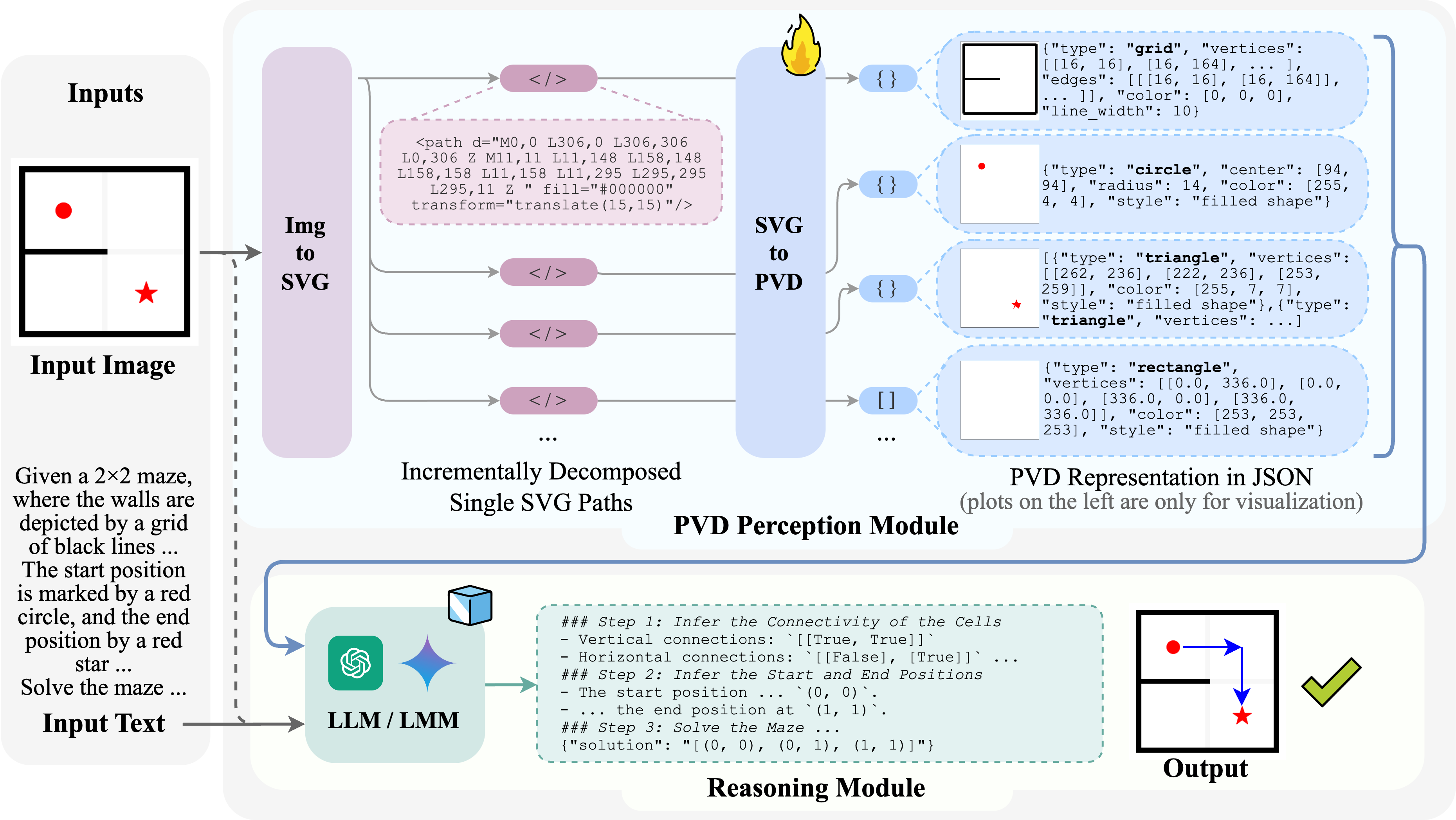

To solve this challenge, we propose Visually Descriptive Language Model (VDLM), a text-based visual reasoning framework for vector graphics. VDLM operates on text-based visual descriptions—specifically, SVG representations and learned Primal Visual Descriptions (PVD), enabling zero-shot reasoning with an off-the-shelf LLM. We demonstrate that VDLM outperforms state-of-the-art large multimodal models, such as GPT-4V, across various multimodal reasoning tasks involving vector graphics. See our paper for more details.

- Minimum requirements:

      conda env create -f environment.yml
      conda activate vdlm

- (Optional) For llava inference:

      cd third_party
      git clone https://github.com/haotian-liu/LLaVA.git
      cd LLaVA
      pip install -e .

- (Optional) For ViperGPT inference:

  Set up the environment for ViperGPT following the instructions.

      cd third_party
      git clone https://github.com/MikeWangWZHL/viper.git

- Download the pretrained SVG-to-PVD model from here. It is an LLM finetuned from Mistral-7B-v0.1. Make sure it is stored at data/ckpts/PVD-160k-Mistral-7b:

      mkdir -p data/ckpts
      cd data/ckpts
      git lfs install
      git clone https://huggingface.co/mikewang/PVD-160k-Mistral-7b

- Serve the model with vllm:

      CUDA_VISIBLE_DEVICES=0 ./vllm_serve_model.sh

- A detailed inference demo 🚀 can be found here.
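Before running the demo, it can help to confirm that the served model is reachable. The sketch below is only an illustration, and it rests on assumptions this README does not state: that `vllm_serve_model.sh` starts vLLM's OpenAI-compatible HTTP server, and that it listens on `http://localhost:8000`. The helper names `parse_model_ids` and `list_served_models` are hypothetical and not part of this repository.

```python
import json
import urllib.request


def parse_model_ids(response_body: str) -> list[str]:
    """Extract model ids from an OpenAI-style /v1/models JSON response."""
    payload = json.loads(response_body)
    return [entry["id"] for entry in payload.get("data", [])]


def list_served_models(base_url: str = "http://localhost:8000",
                       timeout: float = 5.0) -> list[str]:
    """Query the server for the models it serves.

    ASSUMPTION: base_url points at an OpenAI-compatible endpoint;
    adjust host and port to match your serving script.
    Raises OSError (e.g. urllib.error.URLError) if the server is unreachable.
    """
    with urllib.request.urlopen(f"{base_url}/v1/models", timeout=timeout) as resp:
        return parse_model_ids(resp.read().decode("utf-8"))
```

Calling `list_served_models()` while the server is running should return a list that includes the name under which the checkpoint is served. If it raises an `OSError`, check the host and port used by the serving script.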

You can download the data for downstream tasks from here. Unzip the file and place the downstream_tasks folder under data/datasets/.

bash scripts/perception/eval_perception.sh

- VDLM:

  - GPT-4 Chat API without Code Interpreter:

        bash scripts/reasoning/gpt4_pvd.sh

  - GPT-4 Assistant API with Code Interpreter:

        bash scripts/reasoning/gpt4_assistant_pvd.sh

- Image-based Baselines:

  - GPT-4v + Image input:

        bash scripts/reasoning/gpt4v_image.sh

  - Llava-v1.5 + Image input:

        # 7b
        bash scripts/reasoning/llava_1.5_7b_image.sh
        # 13b
        bash scripts/reasoning/llava_1.5_13b_image.sh

  - ViperGPT w/ GPT-4 + Image input:

        bash scripts/reasoning/vipergpt_inference.sh

The dataset used for training our SVG-to-PVD model can be downloaded from here. It contains the preprocessed instruction-tuning data instances. The format of each line is as follows:

    {
      "id": "XXX",
      "conversations": [
        {"role": "system", "content": "XXX"},
        {"role": "user", "content": "XXX"},
        {"role": "assistant", "content": "XXX"}
        // ...
      ]
    }
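As a rough illustration of how this line format can be consumed, the sketch below parses one line and checks it against the structure shown above. It assumes that each line is a standalone JSON object and that turns only use the `system`, `user`, and `assistant` roles that appear in the example. The function names `parse_record` and `load_records` are hypothetical and not part of this repository.

```python
import json

# ASSUMPTION: only the three roles shown in the format example occur.
ALLOWED_ROLES = {"system", "user", "assistant"}


def parse_record(line: str) -> dict:
    """Parse one line of the training file and validate its structure."""
    record = json.loads(line)
    if not isinstance(record, dict) or not isinstance(record.get("id"), str):
        raise ValueError("record must be an object with a string 'id'")
    conversations = record.get("conversations")
    if not isinstance(conversations, list) or not conversations:
        raise ValueError("'conversations' must be a non-empty list")
    for turn in conversations:
        if not isinstance(turn, dict) or turn.get("role") not in ALLOWED_ROLES:
            raise ValueError(f"unexpected turn: {turn!r}")
        if not isinstance(turn.get("content"), str):
            raise ValueError("each turn needs a string 'content'")
    return record


def load_records(path: str) -> list[dict]:
    """Load every non-empty line of a file in this format."""
    with open(path, encoding="utf-8") as handle:
        return [parse_record(line) for line in handle if line.strip()]
```

Validating records up front makes malformed lines fail with a clear message rather than surfacing later during training.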

Additionally, the raw PNGs, SVGs and PVD annotations generated by our data generator can be downloaded from here.

pvd_data_generator/generate_pvd_img_svg.py provides the procedural data generator we used for generating the 160K Image/SVG/PVD pairs.

Example usage: bash pvd_data_generator/gen_dataset_pvd_160K.sh

To specify custom configurations, one can modify the main() function in pvd_data_generator/generate_pvd_img_svg.py.

Once the SVGs and PVD annotations are generated, use pvd_data_generator/get_instruction_pair.py to construct instruction-tuning data instances in vicuna or openai/mistral format. Modify the #TODO parts in the script with the generated custom dataset information. Then run: python pvd_data_generator/get_instruction_pair.py
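To give a sense of what an instruction-tuning instance in the role/content format looks like, here is a minimal sketch that pairs an SVG string with a PVD annotation. It is not the logic of get_instruction_pair.py. The system prompt is a placeholder, the PVD annotation is assumed to be a JSON-serializable dict, and the names `build_record` and `to_jsonl` are hypothetical.

```python
import json

# ASSUMPTION: placeholder prompt, not the one used by the repository's script.
PLACEHOLDER_SYSTEM_PROMPT = "You are a helpful assistant."


def build_record(example_id: str, svg: str, pvd: dict,
                 system_prompt: str = PLACEHOLDER_SYSTEM_PROMPT) -> dict:
    """Wrap one (SVG, PVD) pair as a role/content conversation.

    ASSUMPTION: the PVD annotation is serialized as a JSON string
    in the assistant turn.
    """
    return {
        "id": example_id,
        "conversations": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": svg},
            {"role": "assistant", "content": json.dumps(pvd)},
        ],
    }


def to_jsonl(records: list[dict]) -> str:
    """Serialize records with one JSON object per line."""
    return "\n".join(json.dumps(record) for record in records)
```

For the exact prompts and the vicuna variant, refer to the script itself; this sketch only mirrors the line format documented above.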

We finetune a Mistral-7B model using Megatron-LLM on the PVD-160K dataset. We follow https://github.com/xingyaoww/code-act/blob/main/docs/MODEL_TRAINING.md for preprocessing and postprocessing the model and data. We train the model on a SLURM cluster with 4 NVIDIA-A100-40GB GPUs.

Example usage:

- Clone the code-act repo:

      cd third_party
      git clone https://github.com/xingyaoww/code-act.git

- Follow the instructions in https://github.com/xingyaoww/code-act/blob/main/docs/MODEL_TRAINING.md#environment-setup for environment setup, model preprocessing, and data conversion.

- Modify the TODO: items in scripts/training/finetune_4xA100_4tp_mistral__pvd_3ep.slurm and scripts/training/finetune_4xA100_4tp_mistral__pvd_3ep.sh.

- Copy scripts/training/finetune_4xA100_4tp_mistral__pvd_3ep.slurm into code-act/scripts/slurm/configs, and copy scripts/training/finetune_4xA100_4tp_mistral__pvd_3ep.sh into code-act/scripts/models/megatron.

- Run training by:

      cd third_party/code-act
      sbatch scripts/slurm/configs/finetune_4xA100_4tp_mistral__pvd_3ep.slurm scripts/models/megatron/finetune_4xA100_4tp_mistral__pvd_3ep.sh

- Follow https://github.com/xingyaoww/code-act/blob/main/docs/MODEL_TRAINING.md#convert-back-to-huggingface-format to convert the trained model back to Huggingface format. The converted model can be served with vllm for inference.

    @misc{wang2024textbased,
      title={Text-Based Reasoning About Vector Graphics},
      author={Zhenhailong Wang and Joy Hsu and Xingyao Wang and Kuan-Hao Huang and Manling Li and Jiajun Wu and Heng Ji},
      year={2024},
      eprint={2404.06479},
      archivePrefix={arXiv},
      primaryClass={cs.CL}
    }

This website's template is based on the Nerfies website.

The website is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.