[Paper] [ACL Anthology page] [Poster]

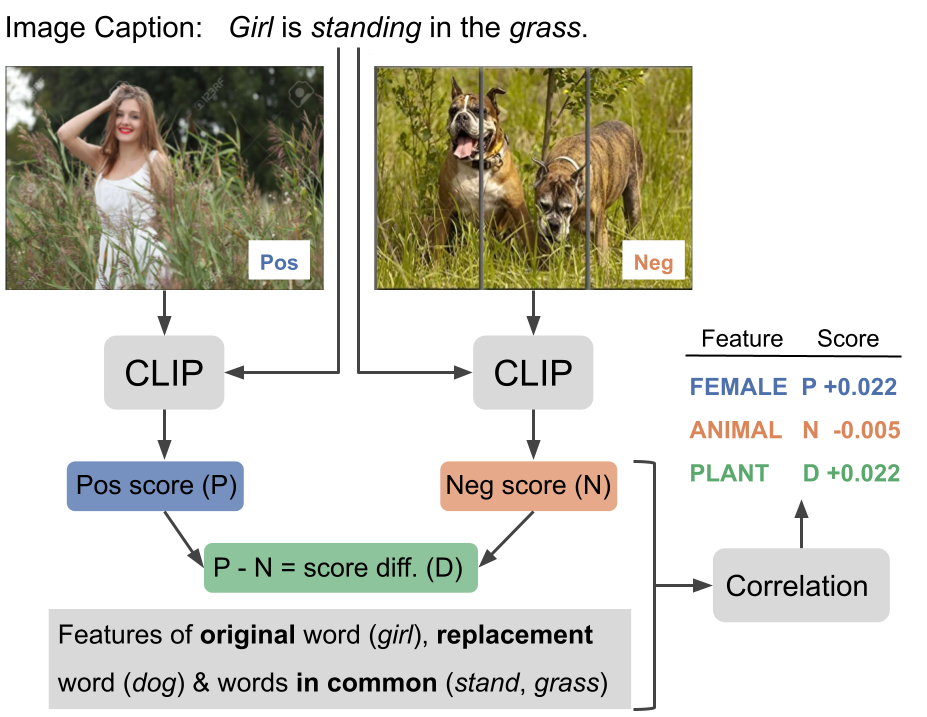

This work proposes a simple and effective method to probe vision-language models (VLMs).

Our method is scalable: it leverages existing annotated datasets, so it does not require new data annotations. With our method, we analyzed the performance of CLIP, a popular state-of-the-art multi-modal model, on the SVO-Probes benchmark.

We hope our work contributes to ongoing efforts to discover the limitations of multi-modal models and help build more robust and reliable systems. Our framework can be easily used to analyze other benchmarks, features, and multi-modal models.

For more information, read our *SEM 2023 paper:

Scalable Performance Analysis for Vision-Language Models

By Santiago Castro+, Oana Ignat+, and Rada Mihalcea.

(+ equal contribution.)

This repository includes the obtained results along with the code to reproduce them.

Under results/ you can find the detailed results obtained with our method for the 3 different scores tested. They were generated by running the code in this repository. See below to reproduce them, and read the paper (linked above) for an explanation of the results.

- With Python >= 3.8, run the following commands:

      pip install -r requirements.txt
      python -c "import nltk; nltk.download(['omw-1.4', 'wordnet'])"
      spacy download en_core_web_trf
      mkdir data

- Compute the CLIP scores for each image-sentence pair and save them to a CSV file. For this step, we used a Google Colab. You can see the results in this Google Sheet. This file is available to download. Place it at `data/svo_probes_with_scores.csv`.

- Compute a CSV file that contains the negative sentences for each of the negative triplets. We lost the script for this step, but it amounts to taking the previous CSV file as input and, for each negative triplet, taking the sentence of a row that has the same triplet in the `pos_triplet` column (you can use the original SVO-Probes file if there are missing sentences). This file should have the columns `sentence` and `neg_sentence`, in the same order as the column `sentence` from the previous CSV file. We provide this file already processed. Place it at `data/neg_d.csv`.

- Merge the information from these 2 files:

      ./merge_csvs_and_filter.py > data/merged.csv

  We provide the output of this command ready to download.

- Compute word frequencies in a 10M-size subset from LAION-400M:

      ./compute_word_frequencies.py > data/words_counter_LAION.json

  We provide the output of this command ready to download.

- Obtain LIWC 2015. See the LIWC website for more information. Set the path or URL of the file `LIWC.2015.all.txt` in the environment variable `LIWC_URL_OR_PATH`:

      export LIWC_URL_OR_PATH=...

  You can also disable the LIWC features in the next command by passing `LIWC` to the flag `--remove-features`, along with any other features to remove, such as the ones removed by default: `--remove-features LIWC wup-similarity lch-similarity path-similarity`.

- Run the following to obtain the resulting correlation scores and save them as files:

      ./main.py --dependent-variable-name pos_clip_score --no-neg-features > results/pos_scores.txt
      ./main.py --dependent-variable-name neg_clip_score > results/neg_scores.txt
      ./main.py --dependent-variable-name clip_score_diff > results/score_diff.txt

  We already provide these files under results/. By default, this script takes our own `merged.csv` file as input, but you can provide your own by using `--input-path data/merged.csv`. The same applies to the other input files. Run `./main.py --help` to see all the available options. We also recommend you look at the code to see what it does. Note that this repository also includes code for preliminary experiments that we left out of the paper for clarity; we include it here in case it's useful.
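
The step that builds the negative-sentence CSV has no script in this repository. As a rough sketch of what it could look like, the Python code below looks up, for each row, a sentence whose `pos_triplet` equals that row's negative triplet. It assumes the scores CSV has a `neg_triplet` column next to `sentence` and `pos_triplet`, and that triplets can be compared as exact strings. The function names and the example rows are hypothetical, and this is not the authors' original script.

```python
import csv
import io


def build_neg_sentences(rows):
    """Return (sentence, neg_sentence) pairs in the same order as `rows`."""
    # Map each triplet to the first sentence that has it as its positive triplet.
    sentence_by_pos_triplet = {}
    for row in rows:
        sentence_by_pos_triplet.setdefault(row["pos_triplet"], row["sentence"])

    # Look up each row's negative triplet (assumed column name: `neg_triplet`).
    # Rows without a match get an empty string and need another source,
    # e.g. the original SVO-Probes file.
    return [
        (row["sentence"], sentence_by_pos_triplet.get(row["neg_triplet"], ""))
        for row in rows
    ]


def write_neg_csv(rows, out_file):
    """Write the `sentence` and `neg_sentence` columns to an open text file."""
    writer = csv.writer(out_file)
    writer.writerow(["sentence", "neg_sentence"])
    writer.writerows(build_neg_sentences(rows))


def convert(input_path="data/svo_probes_with_scores.csv",
            output_path="data/neg_d.csv"):
    """Read the scores CSV and write the negative-sentence CSV."""
    with open(input_path, newline="", encoding="utf-8") as in_file:
        rows = list(csv.DictReader(in_file))
    with open(output_path, "w", newline="", encoding="utf-8") as out_file:
        write_neg_csv(rows, out_file)


# Made-up rows, only to show the lookup; they are not taken from SVO-Probes.
example_rows = [
    {"sentence": "A dog sits on grass.", "pos_triplet": "dog,sit,grass",
     "neg_triplet": "cat,sit,grass"},
    {"sentence": "A cat sits on grass.", "pos_triplet": "cat,sit,grass",
     "neg_triplet": "dog,sit,grass"},
    {"sentence": "A man rides a bike.", "pos_triplet": "man,ride,bike",
     "neg_triplet": "man,ride,horse"},
]
neg_pairs = build_neg_sentences(example_rows)

buffer = io.StringIO()
write_neg_csv(example_rows, buffer)
print(buffer.getvalue())
```

Calling `convert()` with the default paths would read `data/svo_probes_with_scores.csv` and write `data/neg_d.csv`. Rows left with an empty `neg_sentence` are the "missing sentences" case, which this sketch does not fill in.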
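
For the word-frequency step, the sketch below illustrates the general idea of counting words over a collection of captions and serializing the counts as JSON. The tokenization (lowercased alphabetic tokens), the function name `count_words`, the example captions, and the word-to-count JSON layout are all assumptions made for illustration; `compute_word_frequencies.py` may tokenize and store its output differently.

```python
import json
import re
from collections import Counter


def count_words(captions):
    """Count lowercased alphabetic tokens across an iterable of captions."""
    counter = Counter()
    for caption in captions:
        # Assumed tokenization: runs of ASCII letters, case-insensitive.
        counter.update(re.findall(r"[a-z]+", caption.lower()))
    return counter


# Made-up captions standing in for a subset of an image-caption dataset.
example_captions = ["A dog on the grass", "The dog runs"]
word_counts = count_words(example_captions)

# Assumed output layout: one JSON object mapping each word to its count.
word_counts_json = json.dumps(word_counts)
print(word_counts_json)
```

Because `count_words` accepts any iterable, captions can be streamed one at a time instead of being loaded into memory, which matters at the scale of millions of captions.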

    @inproceedings{castro-etal-2023-scalable,
        title = "Scalable Performance Analysis for Vision-Language Models",
        author = "Castro, Santiago and
          Ignat, Oana and
          Mihalcea, Rada",
        booktitle = "Proceedings of the 12th Joint Conference on Lexical and Computational Semantics",
        month = jul,
        year = "2023",
        address = "Toronto, Canada",
        publisher = "Association for Computational Linguistics",
        url = "https://aclanthology.org/2023.starsem-1.26",
        pages = "284--294",
        abstract = "Joint vision-language models have shown great performance over a diverse set of tasks. However, little is known about their limitations, as the high dimensional space learned by these models makes it difficult to identify semantic errors. Recent work has addressed this problem by designing highly controlled probing task benchmarks. Our paper introduces a more scalable solution that relies on already annotated benchmarks. Our method consists of extracting a large set of diverse features from a vision-language benchmark and measuring their correlation with the output of the target model. We confirm previous findings that CLIP behaves like a bag of words model and performs better with nouns and verbs; we also uncover novel insights such as CLIP getting confused by concrete words. Our framework is available at https://github.com/MichiganNLP/Scalable-VLM-Probing and can be used with other multimodal models and benchmarks.",
    }