This repository contains the implementation of the ICML 2024 paper "Multi-Patch Prediction: Adapting LLMs for Time Series Representation Learning".

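Yuxuan Bian<sup>1,2</sup>, Xuan Ju<sup>1</sup>, Jiangtong Li<sup>1</sup>, Zhijian Xu<sup>1</sup>, Dawei Cheng<sup>2*</sup>, Qiang Xu<sup>1*</sup>

<sup>1</sup>The Chinese University of Hong Kong, <sup>2</sup>Tongji University, <sup>*</sup>Corresponding Author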

In this study, we present

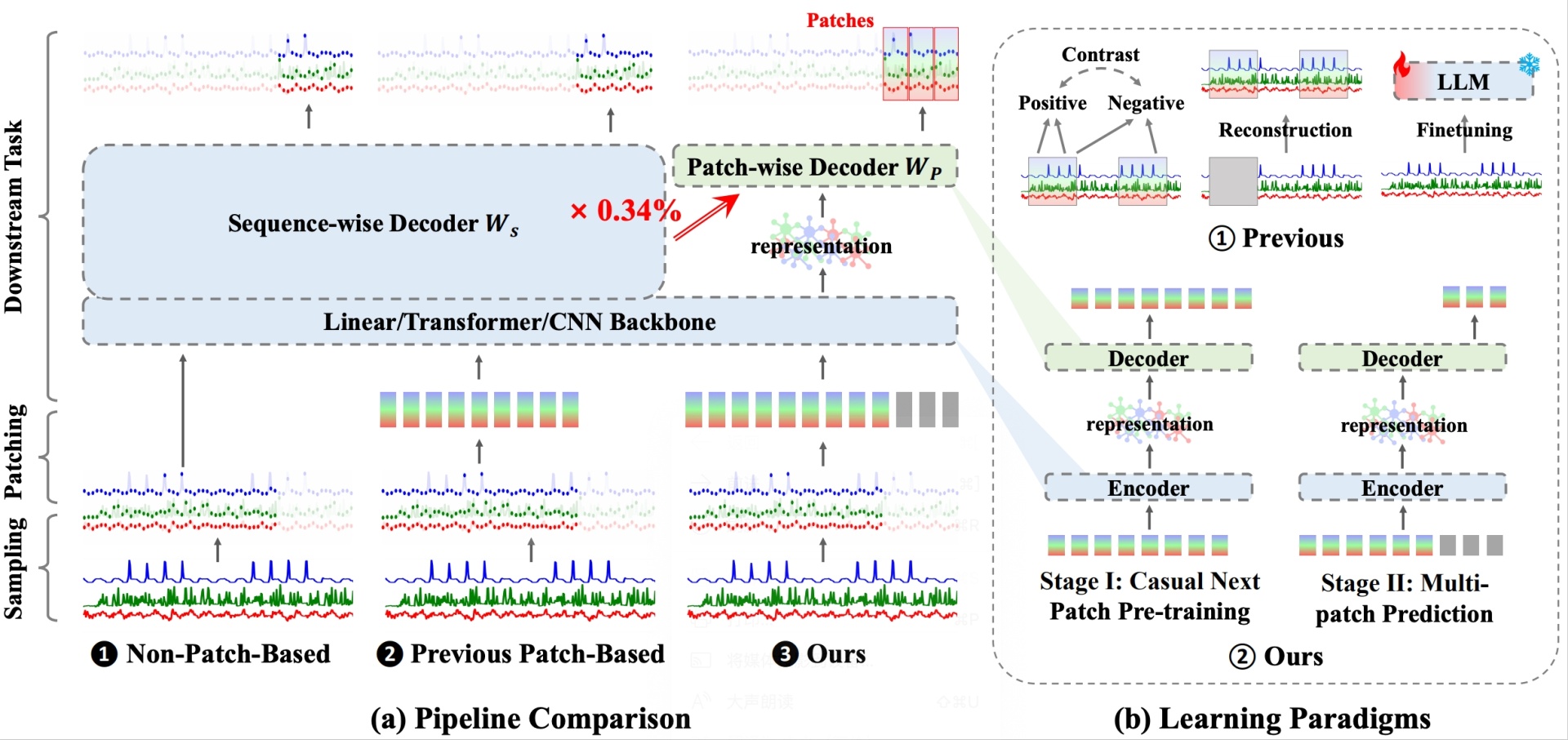

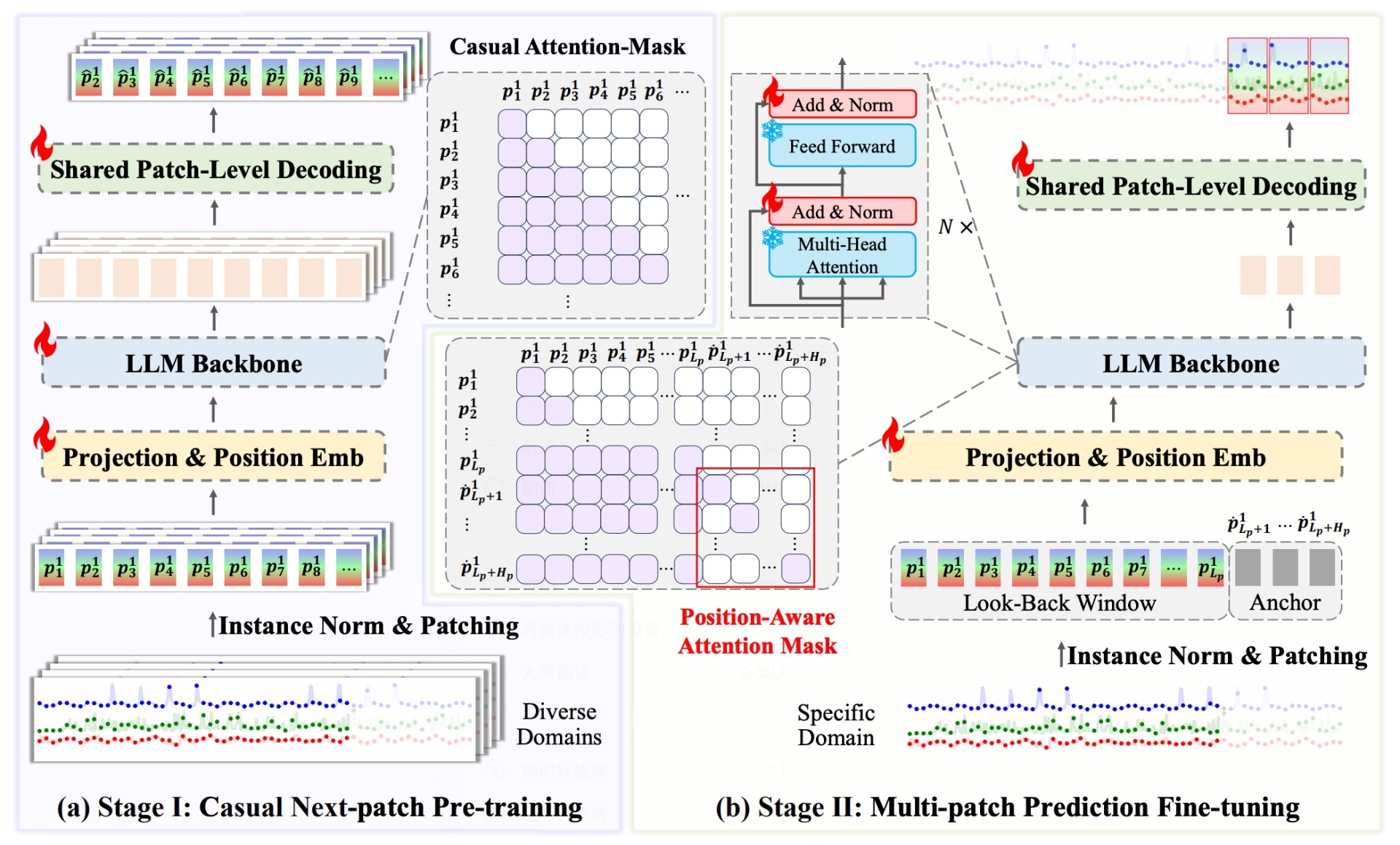

$\text{aL\small{LM}4T\small{S}}$ , an innovative framework that adapts Large Language Models (LLMs) for time-series representation learning. Central to our approach is that we reconceive time-series forecasting as a self-supervised, multi-patch prediction task, which, compared to traditional contrastive learning or mask-and-reconstruction methods, captures temporal dynamics in patch representations more effectively. Our strategy encompasses two-stage training: (i). a causal continual pre-training phase on various time-series datasets, anchored on next patch prediction, effectively syncing LLM capabilities with the intricacies of time-series data; (ii). fine-tuning for multi-patch prediction in the targeted time-series context. A distinctive element of our framework is the patch-wise decoding layer, which departs from previous methods reliant on sequence-level decoding. Such a design directly transposes individual patches into temporal sequences, thereby significantly bolstering the model's proficiency in mastering temporal patch-based representations.$\text{aL\small{LM}4T\small{S}}$ demonstrates superior performance in several downstream tasks, proving its effectiveness in deriving temporal representations with enhanced transferability and marking a pivotal advancement in the adaptation of LLMs for time-series analysis.
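The framework operates on patches rather than on individual time steps. As a minimal sketch of that first step, assuming a univariate series and a sliding window, the hypothetical helper `make_patches` below splits a lookback window into fixed-length patches. The window length (512), `patch_len=16` and `stride=8` are illustrative assumptions, not the settings used by the scripts in this repository; in the full model each patch would then be embedded and passed to the LLM backbone.

```python
import numpy as np


def make_patches(series: np.ndarray, patch_len: int, stride: int) -> np.ndarray:
    """Split a 1-D series into (possibly overlapping) fixed-length patches.

    Hypothetical helper for illustration. Returns an array of shape
    (num_patches, patch_len), where num_patches = (L - patch_len) // stride + 1.
    """
    if series.ndim != 1:
        raise ValueError("expected a 1-D series")
    if len(series) < patch_len:
        raise ValueError("series is shorter than one patch")
    num_patches = (len(series) - patch_len) // stride + 1
    starts = np.arange(num_patches) * stride
    # Index matrix: row i holds the time positions covered by patch i.
    idx = starts[:, None] + np.arange(patch_len)[None, :]
    return series[idx]


# Example: an assumed 512-step lookback window, patch_len=16, stride=8.
x = np.sin(np.linspace(0, 20, 512))
patches = make_patches(x, patch_len=16, stride=8)
print(patches.shape)  # (63, 16)
```

With `stride < patch_len` neighbouring patches overlap; setting `stride == patch_len` gives non-overlapping patches instead.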

🌟 Two-stage Self-supervised Forecasting-based Training: Central to our approach is that we reconceive time-series forecasting as a self-supervised, multi-patch prediction task, which, compared to traditional mask-and-reconstruction methods, captures temporal dynamics in patch representations more effectively.
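What makes the objective forecasting-based rather than mask-and-reconstruction is how the training targets are built: each patch is predicted from the patches before it, instead of hidden patches being reconstructed from context on both sides. The sketch below illustrates this for the causal next-patch objective of stage (i), assuming patches have already been formed. `next_patch_pairs`, `causal_mask`, `next_patch_mse` and the copy-forward baseline are hypothetical names for illustration, not the repository's training code.

```python
import numpy as np


def next_patch_pairs(patches: np.ndarray):
    """Shift by one: inputs are patches 0..N-2, targets are patches 1..N-1."""
    return patches[:-1], patches[1:]


def causal_mask(n: int) -> np.ndarray:
    """Boolean mask where mask[t, s] is True iff position t may attend to s (s <= t)."""
    return np.tril(np.ones((n, n), dtype=bool))


def next_patch_mse(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean squared error over all predicted patches and time steps."""
    return float(np.mean((pred - target) ** 2))


# Toy example: 5 patches of length 4 cut from a linear ramp 0..19.
patches = np.arange(20, dtype=float).reshape(5, 4)
inputs, targets = next_patch_pairs(patches)
mask = causal_mask(len(inputs))

# A naive baseline that copies the current patch forward as its "prediction".
pred = inputs.copy()
print(next_patch_mse(pred, targets))  # 16.0, since every value is off by exactly 4
```

The mask here uses the convention that `True` means "may attend"; attention implementations differ on whether a boolean `True` means keep or mask out, so check the convention of whichever backbone is used.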

🌟 Patch-wise Decoding: A distinctive element of our framework is the patch-wise decoding layer, which departs from previous methods that rely on sequence-level decoding. This design maps each patch representation directly to its corresponding segment of the time series, thereby significantly strengthening the model's ability to learn temporal patch-based representations.
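To make the contrast with sequence-level decoding concrete, the sketch below compares two linear heads on top of per-patch hidden states of size `d_model`: a sequence-level head that flattens all patch embeddings and maps them to the whole horizon at once, and a patch-wise head that applies one shared `d_model -> patch_len` projection to each patch independently and concatenates the results. The sizes and the plain linear projections are assumptions for illustration; the repository's actual decoding layer may differ.

```python
import numpy as np

rng = np.random.default_rng(0)

num_patches, d_model, patch_len = 8, 768, 16  # assumed sizes for illustration
horizon = num_patches * patch_len             # each patch decodes to patch_len steps

hidden = rng.standard_normal((num_patches, d_model))  # per-patch representations

# Sequence-level head: flatten every patch embedding, one large projection to the horizon.
W_seq = rng.standard_normal((num_patches * d_model, horizon)) * 0.01
y_seq = hidden.reshape(-1) @ W_seq                    # shape (horizon,)

# Patch-wise head: one shared projection applied to each patch independently.
W_patch = rng.standard_normal((d_model, patch_len)) * 0.01
y_patch = (hidden @ W_patch).reshape(-1)              # (num_patches, patch_len) -> (horizon,)

print(W_seq.size, W_patch.size)  # 786432 12288
```

Two properties follow from the patch-wise design in this sketch: the head's parameter count (12,288 here versus 786,432 for the flattened head) does not grow with the number of patches, and each output segment depends only on its own patch representation.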

Dependencies:

- accelerate==0.21.0
- bitsandbytes==0.41.1
- cmake==3.24.1.1
- Cython==0.29.34
- datasets==2.14.3
- deepspeed==0.9.3
- einops==0.6.1
- numpy==1.22.2
- safetensors==0.3.3
- scikit-learn==1.3.0
- sentencepiece==0.1.99
- sktime==0.25.0
- thop==0.1.1.post2209072238
- torch==2.0.0
- torchinfo==1.8.0
- torchsummary==1.5.1
- transformers==4.34.0
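Since the versions above are pinned exactly, it can help to confirm what is actually installed once the environment is set up. The following is a minimal, hypothetical helper (`check_pins` is not part of this repository) built on the standard-library `importlib.metadata`; it checks only a subset of the pins and ignores local version labels such as `+cu117`, which CUDA builds of PyTorch often carry.

```python
from importlib.metadata import PackageNotFoundError, version

# A subset of the pins listed above; extend as needed.
PINS = {
    "torch": "2.0.0",
    "transformers": "4.34.0",
    "accelerate": "0.21.0",
    "deepspeed": "0.9.3",
    "einops": "0.6.1",
    "numpy": "1.22.2",
}


def check_pins(pins, get_version=version):
    """Return {package: (pinned, installed)} for every package that does not match.

    `installed` is None when the package is missing. A local version label
    (anything after "+") is stripped before comparing.
    """
    mismatches = {}
    for pkg, pinned in pins.items():
        try:
            installed = get_version(pkg)
        except PackageNotFoundError:
            installed = None
        if installed is None or installed.split("+")[0] != pinned:
            mismatches[pkg] = (pinned, installed)
    return mismatches


if __name__ == "__main__":
    for pkg, (pinned, installed) in check_pins(PINS).items():
        print(f"{pkg}: pinned {pinned}, installed {installed}")
```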

To create the environment and install all dependencies:

```bash
conda create -n allm4ts python=3.10 -y
conda activate allm4ts
pip install -r requirements.txt
```

You can access the pre-processed datasets from [Google Drive], then place the downloaded contents under `./dataset`.

- Download the datasets and place them under `./dataset`.
- Conduct stage 1: Causal Next-patch Continual Pre-training. We provide an experiment script for demonstration purposes under the folder `./scripts`. For example, you can conduct stage 1 continual pre-training by:

  ```bash
  bash ./scripts/pretrain/all_s16.sh
  ```

- Tune the model on different time-series analysis tasks. We provide many experiment scripts for demonstration purposes under the folder `./scripts`. For example, you can evaluate long-term forecasting or anomaly detection by:

  ```bash
  bash ./scripts/long-term-forecasting/all.sh
  bash ./scripts/anomaly-detection/all.sh
  ```

If you find the code useful in your research, please cite us:

```bibtex
@article{bian2024multi,
  title={Multi-Patch Prediction: Adapting LLMs for Time Series Representation Learning},
  author={Bian, Yuxuan and Ju, Xuan and Li, Jiangtong and Xu, Zhijian and Cheng, Dawei and Xu, Qiang},
  journal={International Conference on Machine Learning ({ICML})},
  year={2024}
}
```

We greatly appreciate the following GitHub repositories for their valuable code bases and datasets: DLinear, PatchTST, Time-Series-Library, and OneFitsAll. Thanks to all contributors!