This software runs experiments that overlap communication and computation in order to collect performance data. It runs a toy model in which GPU kernels are overlapped with MPI GPU communication. It provides a synchronous mode, where the host timeline is blocked until the communication has finished, and an asynchronous mode, where communication is split into Start and Wait methods that wrap the kernel launch calls.

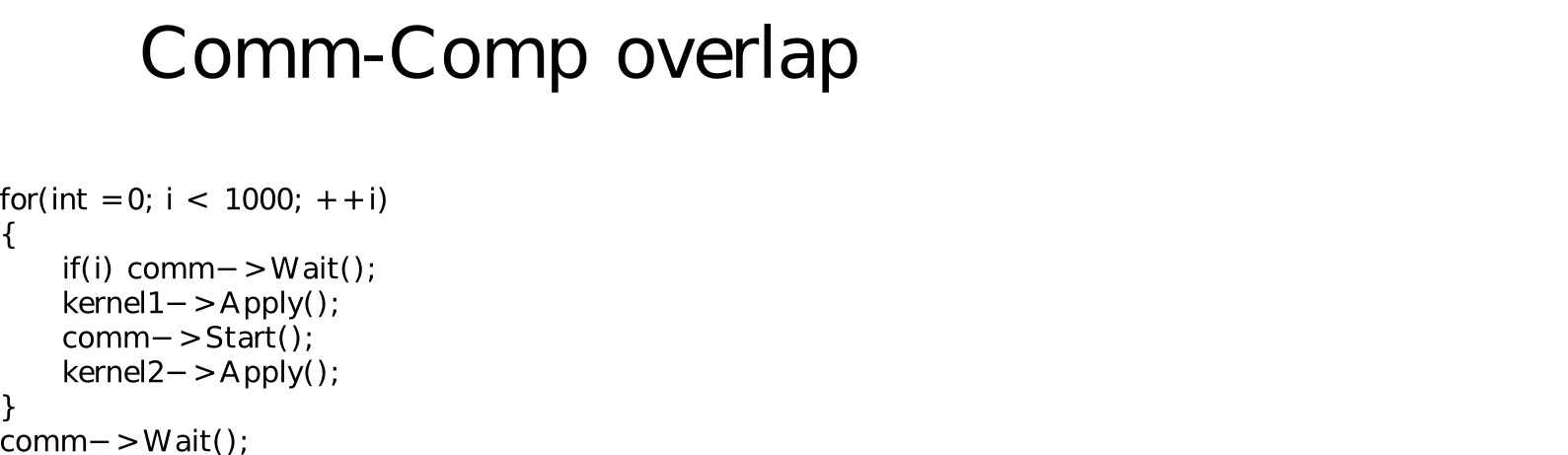

The following picture shows an example of flow of the code for a simple communication pattern:

The following dependencies are needed to build the code:

- CMake

- CUDA

- MPI

- Boost (optional)

Building is straightforward. Create a build directory and run CMake:

mkdir build

cd build

cmake ..

The build accepts several CMake options:

- `-DENABLE_BOOST_TIMER=ON` enables the Boost timers to measure performance. This requires Boost.

- `-DENABLE_MPI_TIMER=ON` enables the MPI timers.

- `-DVERBOSE=1` prints extra information when running the code.

- `-DMPI_VENDOR` sets the MPI vendor. Typical values are `unknown`, `mvapich2`, or `openmpi`. Setting this variable only increases the debug output.

A typical build can be achieved with:

mkdir build

cd build

cmake .. -DVERBOSE=1 -DENABLE_MPI_TIMER=1
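The configure and compile steps can be wrapped in a small shell function. The sketch below is illustrative and not part of the repository: `build_benchmark` is a hypothetical helper name, the `CMAKE` variable is an assumption added so the command can be overridden, and the compile step uses CMake's generic `cmake --build .` because the text above only shows the configure step.

```shell
# Hypothetical helper (not shipped with the code): configure and compile
# in a build directory that is a direct child of the source root.
# Usage: build_benchmark <build-dir> [cmake options...]
build_benchmark() {
  build_dir="$1"
  shift
  mkdir -p "$build_dir" || return 1
  (
    # Run in a subshell so the caller's working directory is unchanged.
    cd "$build_dir" || exit 1
    # CMAKE is an assumed override hook; it defaults to plain "cmake".
    "${CMAKE:-cmake}" .. "$@" && "${CMAKE:-cmake}" --build .
  )
}
```

Called from the source root, `build_benchmark build -DVERBOSE=1 -DENABLE_MPI_TIMER=1` would reproduce the typical build shown above and then compile it.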

Build scripts for CSCS machines are provided with the code. Run `./build_MACHINE.sh` (with MACHINE replaced by the machine name) to get a build for your machine.

Simply call

src/comm_overlap_benchmark

to run the code. The code can be run in several configurations:

comm_overlap_benchmark [--ie isize] [--je jsize] [--ke ksize] \

[--sync] [--nocomm] [--nocomp] \

[--nh nhaloupdates] [-n nrepetitions] [--inorder]

- `--ie NN`: domain size in x. Default: 128

- `--je NN`: domain size in y. Default: 128

- `--ke NN`: domain size in z. Default: 60

- `--sync`: enable synchronous communication.

- `--nocomm`: disable communication.

- `--nocomp`: disable computation.

- `--nh NN`: the number of halo updates. Default: 2

- `-n NN`: the number of benchmark repetitions. Default: 5000

- `--inorder`: enable in-order halo exchanges.
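The options above can be combined to compare configurations. The sketch below only prints the command lines for a small sweep over domain sizes in both communication modes. It assumes that the asynchronous mode is the default when `--sync` is not given, which the option list suggests but does not state outright. The helper name `bench_args`, the `BENCH` variable, and the chosen sizes and repetition count are illustrative and not part of the code.

```shell
# Path to the benchmark binary; BENCH is an assumed override hook.
BENCH="${BENCH:-src/comm_overlap_benchmark}"

# Hypothetical helper: build the argument list for one configuration.
#   $1 mode: "sync" adds --sync, anything else leaves the default mode
#   $2 size: used for both --ie and --je
#   $3 reps: passed to -n
bench_args() {
  mode="$1"
  size="$2"
  reps="$3"
  args="--ie $size --je $size -n $reps"
  if [ "$mode" = "sync" ]; then
    args="$args --sync"
  fi
  echo "$args"
}

# Print one command line per configuration instead of executing it.
for mode in async sync; do
  for size in 64 128; do
    echo "$BENCH $(bench_args "$mode" "$size" 100)"
  done
done
```

To actually run the sweep, replace the `echo` in the loop with the launcher appropriate for your system.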

Running without any MPI configuration (e.g. to test the performance of the CUDA kernel):

src/comm_overlap_benchmark --nocomm

Profiling the kernel with CUDA:

nvprof --analysis-metrics -o benchmark.prof ./src/comm_overlap_benchmark --nocomm -n 1

Run scripts for CSCS machines are provided with the code. Run `./run_MACHINE.sh` to use them. The scripts accept arguments such as the number of MPI ranks (`jobs=$MPI_RANKS`) and the GDR setup (`G2G=1`):

jobs=8 G2G=1 ./run_kesch.sh

jobs=16 G2G=1 ./run_kesch.sh

jobs=32 G2G=1 ./run_kesch.sh
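The three invocations above can be written as a loop over rank counts. This is a minimal sketch: `scale_ranks` is a hypothetical helper name and `DRY_RUN` is an assumed convenience switch that prints the commands instead of executing them. Only `jobs`, `G2G=1`, and `run_kesch.sh` come from the text above.

```shell
# Hypothetical helper: one run of the kesch run script per rank count.
# With DRY_RUN=1 the commands are printed instead of executed.
scale_ranks() {
  for n in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "jobs=$n G2G=1 ./run_kesch.sh"
    else
      jobs="$n" G2G=1 ./run_kesch.sh
    fi
  done
}

# Show what would be run for the rank counts used above.
DRY_RUN=1 scale_ranks 8 16 32
```

Without `DRY_RUN=1`, `scale_ranks 8 16 32` would launch the three runs one after another.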

Code needs cleanup...