MetaData: Exploring unprecedented avenues for data harvesting in emerging AR/VR environments


This repository contains a virtual reality "escape room" game written in C# using the Unity game engine. It is the chief data collection tool for a research study called "MetaData," which aims to shed light on the unique privacy risks of virtual telepresence ("metaverse") applications. While the game appears innocuous, it attempts to covertly harvest 25+ private data attributes about its player from a variety of sources within just a few minutes of gameplay.

We appreciate the support of the National Science Foundation, the National Physical Science Consortium, the Fannie and John Hertz Foundation, and the Berkeley Center for Responsible, Decentralized Intelligence.

https://doi.org/10.48550/arXiv.2207.13176

Contents

- Getting Started

- Game Overview

- Data Collection Tools

- Data Analysis Overview

- Data Analysis Scripts

- Sample Data & Results

The entire repository (outermost folder) is a Unity project folder built for editor version 2021.3.1f1. It can be opened in Unity and built for a target platform of your choice. We have also provided a pre-built executable for SteamVR on Windows (64-bit), which has been verified to work on the HTC Vive, HTC Vive Pro 2, and Oculus Quest 2 using Oculus Link. If you decide to build the project yourself, make sure you add a "Data" folder to the output directory where the game can store collected telemetry.

Run Metadata.exe to launch the game. Approximately once per second, the game writes a .txt file to the Data folder containing the relevant observations from the previous second of gameplay. At the end of the experiment, these files can be concatenated into a single data file for later analysis: `cat *.txt > data.txt`.
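If the per-second files have numeric names (an assumption; check what your build actually writes), note that the shell expands `*.txt` in lexicographic order, so `10.txt` comes before `2.txt`. A `data.txt` left over from an earlier run in the same folder would also match the glob. The following is a minimal Python sketch of the same concatenation that sorts numerically and skips the output file. `concatenate_telemetry` and `natural_key` are hypothetical helpers, not part of the repository's scripts.

```python
import re
from pathlib import Path
from typing import List, Union


def natural_key(path: Path) -> List[Union[str, int]]:
    """Split a filename into alternating text and number chunks so '10.txt' sorts after '2.txt'.

    re.split with a capturing group always yields text at even indices and
    digit runs at odd indices, so only the odd chunks are converted to int.
    """
    parts = re.split(r"(\d+)", path.name)
    return [int(part) if i % 2 else part for i, part in enumerate(parts)]


def concatenate_telemetry(folder: str, output_name: str = "data.txt") -> Path:
    """Concatenate every .txt file in `folder`, except the output file itself, in natural order."""
    src = Path(folder)
    out = src / output_name
    # Exclude the output so re-running the function never reads its own previous result.
    parts = sorted((p for p in src.glob("*.txt") if p.name != output_name), key=natural_key)
    with out.open("w", encoding="utf-8") as dst:
        for part in parts:
            dst.write(part.read_text(encoding="utf-8"))
    return out
```

Like `cat`, it adds no separators, so a file that lacks a trailing newline will run straight into the next one.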

The following keyboard controls are available (intended for use by the researcher conducting the experiment):

- `space`: teleport to next room

- `b`: teleport to previous room

- `r`: reset all rooms

- `p`: pop balloon (room #6)

- `u`: reveal letter (room #8)

- `m`: reveal letter (room #9)

- `c`: play sentence 1 (room #20)

- `v`: play sentence 2 (room #20)

Most of these controls are easier to understand in the context of the Game Overview. The data collection log template, described under Data Collection Tools, also marks where each control is used.

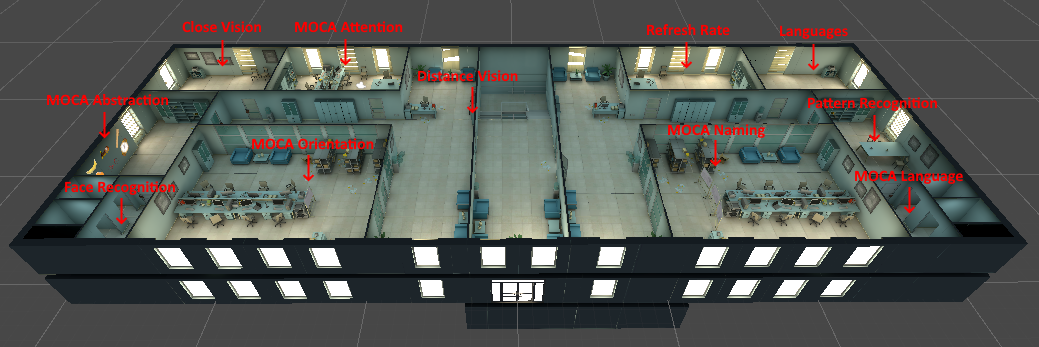

The above figure shows an overview of the escape room building and several of the rooms contained within it. Players move from room to room within the building, completing one or more puzzles to find a "password" before moving to the next room. Further details about each of the rooms are included below.

For most rooms, the researcher simply presses the “space” key to move to the next room once the subject says the correct password. The exceptions are as follows:

- Room 6: The researcher presses “P” when the subject presses the red button in the game, then presses “space” to move to the next room when the subject says “red.”

- Room 8: The researcher presses “U” when the subject adopts the correct physical posture shown by the figures on the wall, then presses “space” when the subject says “cave.”

- Room 9: The researcher presses “M” when the subject adopts the correct position shown by the figures on the wall, then presses “space” when the subject says “motivation.”

- Room 20: The researcher presses “C” when the subject enters the room, “V” after the subject repeats the first sentence, and “space” after the subject repeats the second sentence.

This repository includes the data collection log template the experimenters used to record gameplay observations. The completed logs can be digitized into a single log.csv file covering all participants (see example). We have also included a post-game survey for collecting ground truth, which can be digitized into a truth.csv file covering all participants (see example). To obtain participant consent before the experiment, you are welcome to use our IRB-cleared consent language. We also suggest making audio/video recordings of each session so that observations can be reviewed if necessary.
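Once both files are digitized, the analysis needs each participant's observations next to their ground truth. Below is a minimal sketch of that pairing, assuming both CSVs have a header row and share a participant-ID column. The column name `participant` and the helper names are hypothetical, so adjust them to match the actual log.csv and truth.csv headers.

```python
import csv
from typing import Dict, Tuple

Row = Dict[str, str]


def load_by_participant(path: str, key: str = "participant") -> Dict[str, Row]:
    """Read a CSV with a header row and index its rows by the (hypothetical) `key` column."""
    # utf-8-sig also accepts the byte-order mark that spreadsheet exports often add.
    with open(path, newline="", encoding="utf-8-sig") as f:
        return {row[key]: row for row in csv.DictReader(f)}


def pair_log_with_truth(log_path: str, truth_path: str,
                        key: str = "participant") -> Dict[str, Tuple[Row, Row]]:
    """Return {participant_id: (log_row, truth_row)} for participants present in both files."""
    log = load_by_participant(log_path, key)
    truth = load_by_participant(truth_path, key)
    return {pid: (log[pid], truth[pid]) for pid in log.keys() & truth.keys()}
```

Participants missing from either file are silently dropped, which keeps the comparison honest but is worth checking for when a survey goes unreturned.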

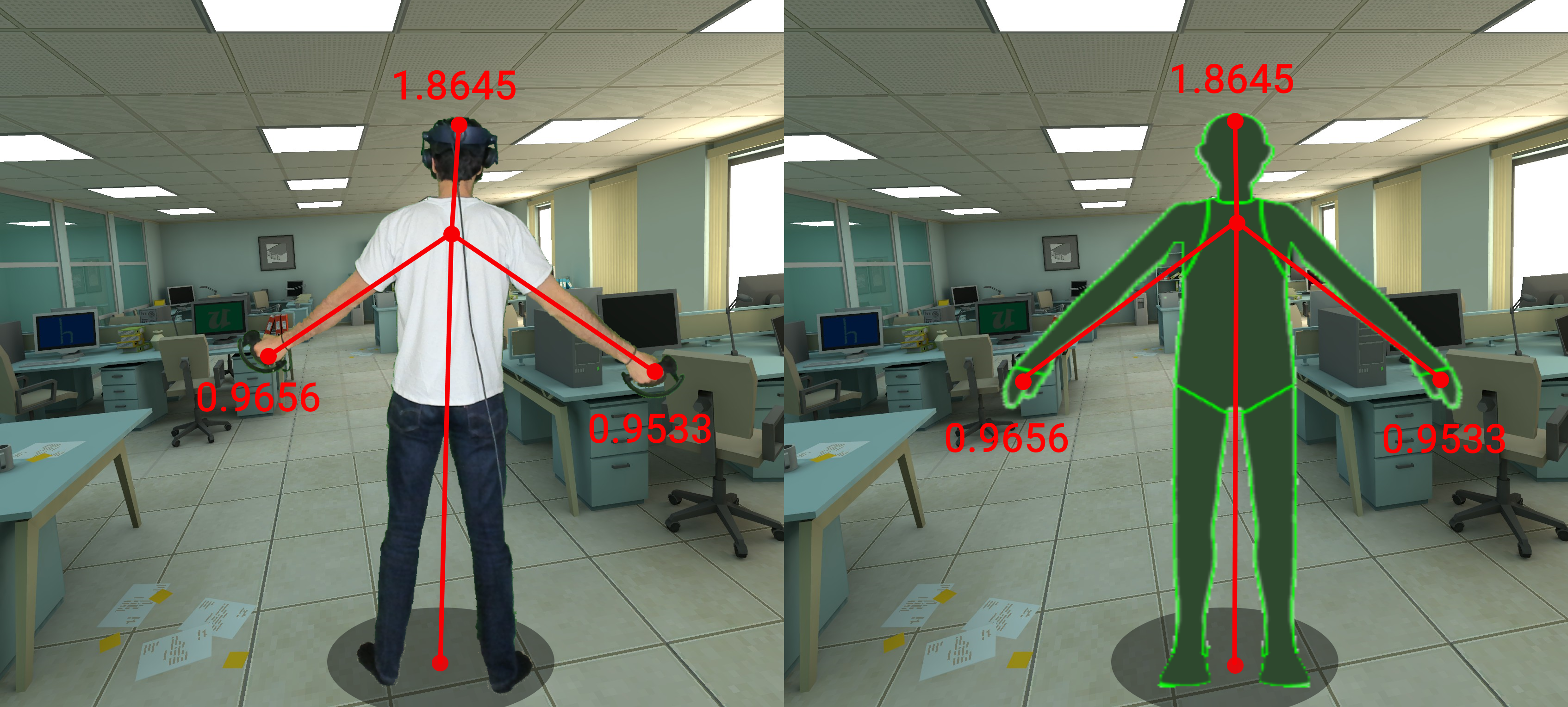

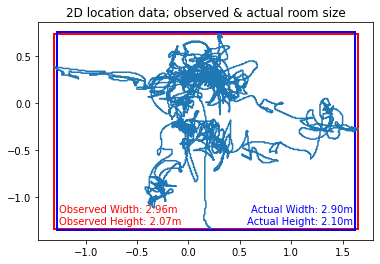

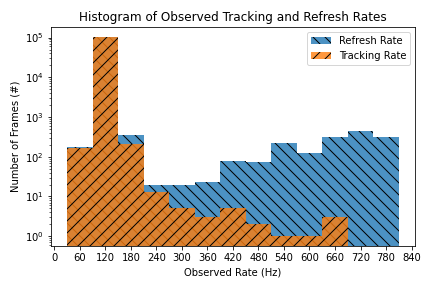

The above diagram summarizes the data sources we draw on and the attributes we infer from the collected data. Examples of data sources and the corresponding attributes are included below:

(E.g. Identifying height and wingspan from tracking telemetry)
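To illustrate how such an attribute can be derived, here is a minimal sketch. It assumes the telemetry has already been parsed into (x, y, z) positions in metres in Unity's y-up coordinates, with y = 0 at the floor (as in a standing-scale tracking setup). The input format and function names are hypothetical, and the repository's own scripts may compute these attributes differently.

```python
import math
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in metres, y-up as in Unity


def estimate_height(head_positions: Iterable[Vec3], crown_offset: float = 0.0) -> float:
    """Highest observed headset height plus an assumed headset-to-crown offset.

    The headset sits roughly at eye level, so crown_offset is a calibration
    parameter (left at 0.0 here) rather than a measured constant.
    """
    return max(y for _, y, _ in head_positions) + crown_offset


def estimate_wingspan(hand_pairs: Iterable[Tuple[Vec3, Vec3]]) -> float:
    """Largest observed distance between the left and right controller positions."""
    return max(math.dist(left, right) for left, right in hand_pairs)
```

Taking the maximum assumes the player stands upright and stretches their arms wide at some point. Because controllers are gripped in the hand, the controller distance is a lower bound on fingertip-to-fingertip wingspan.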

(E.g. Identifying room dimensions from tracking telemetry)
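A similarly hedged sketch for room size: the horizontal (x, z) extent of every tracked position gives a lower bound on the play area. It assumes the tracking axes are aligned with the walls and that positions have been parsed into hypothetical (x, y, z) tuples.

```python
from typing import Iterable, Tuple


def play_area_extent(positions: Iterable[Tuple[float, float, float]]) -> Tuple[float, float]:
    """Width (x) and depth (z) of the axis-aligned box enclosing all tracked positions.

    Height (y) is ignored because only the floor footprint matters here.
    The result is a lower bound: players rarely touch every wall.
    """
    points = list(positions)
    xs = [x for x, _, _ in points]
    zs = [z for _, _, z in points]
    return max(xs) - min(xs), max(zs) - min(zs)
```

Including controller positions as well as the headset usually tightens the bound, since players reach further than they walk.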

(E.g. Identifying device tracking and refresh rate)
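For the refresh-rate half of this attribute, one simple approach (not necessarily the one used in the paper) is to take the median interval between consecutive frame timestamps. The sketch assumes hypothetical per-frame timestamps in seconds. The median keeps a few dropped or doubled frames from skewing the estimate.

```python
import statistics
from typing import Sequence


def estimate_refresh_rate(frame_times: Sequence[float]) -> float:
    """Estimate the display refresh rate in Hz from per-frame timestamps (seconds)."""
    # Positive gaps between consecutive frames; duplicate timestamps are skipped.
    intervals = [b - a for a, b in zip(frame_times, frame_times[1:]) if b > a]
    if not intervals:
        raise ValueError("need at least two increasing timestamps")
    return 1.0 / statistics.median(intervals)
```

This measures the rate at which the game actually rendered, which matches the headset's refresh rate only when the game keeps up.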

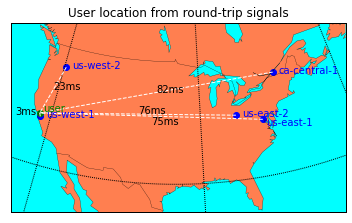

(E.g. Identifying user location from server latency multilateration)
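Below is a minimal sketch of latency multilateration. It swaps in a brute-force least-squares grid search for whatever solver the paper uses. It assumes hypothetical round-trip times (ms) to servers at known coordinates and converts each to a distance using the rule of thumb that light travels about 200 km per millisecond in optical fibre. It then returns the grid point whose great-circle distances best match. Real routes are longer than great-circle paths and add processing delay, so these distances are overestimates. geopy (already a dependency) offers more accurate geodesic distances, but the stdlib haversine formula keeps the sketch self-contained.

```python
import math
from typing import Iterable, List, Tuple

EARTH_RADIUS_KM = 6371.0
FIBER_KM_PER_MS = 200.0  # assumption: ~2/3 the speed of light in vacuum


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two (lat, lon) points in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def rtt_to_km(rtt_ms: float) -> float:
    """One-way distance implied by a round-trip time, ignoring routing and processing delay."""
    return rtt_ms / 2 * FIBER_KM_PER_MS


def locate(servers: Iterable[Tuple[float, float, float]], step: float = 1.0) -> Tuple[float, float]:
    """Grid-search the (lat, lon) minimizing squared error between haversine and RTT distances.

    `servers` holds (server_lat, server_lon, rtt_ms) triples.
    """
    servers: List[Tuple[float, float, float]] = list(servers)
    targets = [(s_lat, s_lon, rtt_to_km(rtt)) for s_lat, s_lon, rtt in servers]
    best, best_err = (0.0, 0.0), math.inf
    for i in range(int(round(180 / step)) + 1):
        lat = -90.0 + i * step
        for j in range(int(round(360 / step))):
            lon = -180.0 + j * step
            err = sum((haversine_km(lat, lon, s_lat, s_lon) - d) ** 2 for s_lat, s_lon, d in targets)
            if err < best_err:
                best, best_err = (lat, lon), err
    return best
```

A coarse `step` keeps the search fast. A finer second pass around the coarse result would sharpen the estimate without scanning the whole globe.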

(E.g. Identifying handedness from observed interactions)
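Below is a minimal sketch of handedness inference. It assumes the observed interactions (e.g., button presses or grabs) have been reduced to a hypothetical list of "left"/"right" labels, one per interaction, naming the controller that performed it. Ties are reported as unknown rather than guessed.

```python
from collections import Counter
from typing import Iterable


def infer_handedness(interaction_hands: Iterable[str]) -> str:
    """Return 'right', 'left', or 'unknown' depending on which controller was used more often."""
    counts = Counter(hand.strip().lower() for hand in interaction_hands)
    if counts["right"] > counts["left"]:
        return "right"
    if counts["left"] > counts["right"]:
        return "left"
    return "unknown"
```

In practice a margin (e.g., requiring a clear majority) would reduce misclassification on short sessions; the plain majority here is the simplest version.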

The /Scripts folder includes data collection and analysis scripts for a range of primary and secondary attributes. The scripts require the /Data folder to contain a sequentially numbered .txt file for each participant (1.txt, 2.txt, ...), a log.csv file with the observations for all participants, and a truth.csv file with the ground truth for all participants. Statistical results are printed to the command line, and generated figures are saved to /Figures.
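Before running the scripts, a quick layout check can catch a missing file. Below is a minimal sketch, assuming participant files are numbered consecutively from 1 (as with the sample data, 1.txt and 2.txt). `check_data_folder` is a hypothetical helper, not one of the repository's scripts.

```python
from pathlib import Path
from typing import List


def check_data_folder(data_dir: str = "Data") -> List[str]:
    """Return a list of problems with the Data folder layout; an empty list means it looks OK."""
    root = Path(data_dir)
    problems = []
    for required in ("log.csv", "truth.csv"):
        if not (root / required).is_file():
            problems.append(f"missing {required}")
    # Only purely numeric stems count as participant files, so data.txt etc. are ignored.
    numbers = sorted(int(p.stem) for p in root.glob("*.txt") if p.stem.isdecimal())
    if not numbers:
        problems.append("no numbered participant files (1.txt, 2.txt, ...)")
    elif numbers != list(range(1, len(numbers) + 1)):
        problems.append(f"participant files are not numbered 1..{len(numbers)}: {numbers}")
    return problems
```

Printing the returned list (or exiting on a non-empty one) before the analysis scripts run gives an early, readable error instead of a traceback partway through.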

Dependencies

The Python 3 data analysis scripts depend on the following libraries:

- matplotlib

- numpy

- scipy

- geopy

Running

With the data in the Data folder and the dependencies installed, you can simply run the scripts one by one (`py Arms.py`, `py Device.py`, etc.). The results will be printed to the command line.

If you do not wish to run the experiment yourself to generate raw data, we have included sample data files for the PI (1.txt) and co-PI (2.txt) in the /Data directory, along with corresponding log.csv and truth.csv files. The /Figures directory contains the outputs corresponding to this sample data.