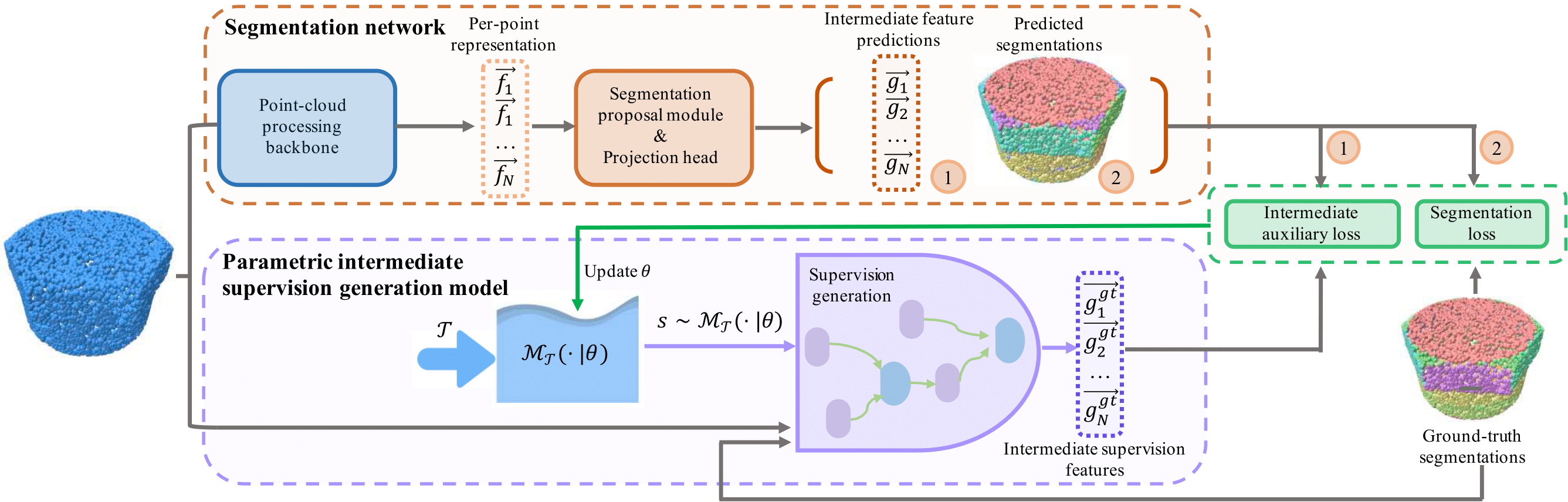

AutoGPart is a method that builds an intermediate supervision space and searches it for supervisions that improve the generalization ability of 3D part segmentation networks.

This repository contains the PyTorch implementation of our paper:

AutoGPart: Intermediate Supervision Search for Generalizable 3D Part Segmentation, Xueyi Liu, Xiaomeng Xu, Anyi Rao, Chuang Gan, Li Yi, CVPR 2022.

- Project Page (including videos, visualizations for searched intermediate supervisions and segmentations)

- arXiv Page

The main experiments are implemented with PyTorch 1.9.1 and Python 3.8.8. The main dependencies are listed below:

torch_cluster==1.5.9

torch_scatter==2.0.7

horovod==0.23.0

pykdtree==1.3.4

numpy==1.20.1

h5py==2.8.0
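You may want to check an existing environment against these pins. The minimal sketch below compares installed distribution versions with the list above, using the standard-library `importlib.metadata`. It is not part of the repository.

- The distribution names (for example `torch_cluster`) are an assumption. They may differ from how pip registered the packages on your machine.
- A local build suffix such as `+cu111` is ignored when comparing versions.

```python
from importlib.metadata import PackageNotFoundError, version

# Pins copied from the list above. Distribution names are an assumption;
# pip may register some packages under a slightly different name.
PINNED = {
    "torch": "1.9.1",
    "torch_cluster": "1.5.9",
    "torch_scatter": "2.0.7",
    "horovod": "0.23.0",
    "pykdtree": "1.3.4",
    "numpy": "1.20.1",
    "h5py": "2.8.0",
}


def _public(v):
    # Drop a local version label such as "+cu111" carried by CUDA builds.
    return v.split("+", 1)[0] if v else v


def installed_versions(names):
    """Return {name: installed version, or None if it is not installed}."""
    found = {}
    for name in names:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


def mismatches(pinned, installed):
    """Return {name: (expected, actual)} for every package that differs."""
    return {
        name: (expected, installed.get(name))
        for name, expected in pinned.items()
        if _public(installed.get(name)) != expected
    }


if __name__ == "__main__":
    for name, (want, got) in mismatches(PINNED, installed_versions(PINNED)).items():
        print(f"{name}: expected {want}, found {got or 'not installed'}")
```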

cd data

mkdir part-segmentation

Download the data from here and put the file under the data/part-segmentation folder.

Unzip the downloaded data, as well as the zipped files in its subfolders.
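The unzip step above can be scripted. The sketch below is a hypothetical helper, not part of the repository. It works as follows:

- It assumes the download is a `.zip` archive. The actual file name is not given here, so the path in the usage line below is a placeholder.
- It extracts every nested `.zip` next to itself.
- It keeps the archives rather than deleting them.

```python
import zipfile
from pathlib import Path


def unzip_recursively(archive, dest):
    """Extract `archive` into `dest`, then extract every .zip found under `dest`.

    Hypothetical helper. Assumes a .zip download; tar archives would need the
    tarfile module instead. Each nested archive is extracted into its own
    parent folder, and processed archives are remembered so none is extracted twice.
    """
    dest = Path(dest)
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(dest)
    # Skip the top-level archive if it happens to live inside `dest`.
    done = {Path(archive).resolve()}
    while True:
        pending = [p for p in dest.rglob("*.zip") if p.resolve() not in done]
        if not pending:
            break
        for nested in pending:
            with zipfile.ZipFile(nested) as zf:
                zf.extractall(nested.parent)
            done.add(nested.resolve())
```

Run it from inside `data/`, for example `unzip_recursively("part-segmentation/<downloaded_archive>.zip", "part-segmentation")`. Here `<downloaded_archive>` stands for whatever file you downloaded.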

To create and optimize the intermediate supervision space for the mobility-based part segmentation task, please use the following command:

CUDA_VISIBLE_DEVICES=${devices} horovodrun -np ${n_device} -H ${your_machine_ip}:${n_device} python -W ignore main_prm.py -c ./cfgs/motion_seg_h_mb_cross.yaml

The default backbone is DGCNN.
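In the launch command, `${n_device}` should match the number of GPU ids listed in `${devices}`. This is because horovodrun starts `-np` processes across the slots declared for the host given with `-H`.

The hypothetical helper below (not part of the repository) assembles the same command line from a list of GPU ids. `host` defaults to `localhost` as an assumption for single-machine runs, whereas the README uses `${your_machine_ip}`.

```python
import shlex


def launch_command(config, devices, host="localhost"):
    """Assemble the horovodrun launch line used in this README.

    Hypothetical helper; host="localhost" is an assumption for single-machine
    runs. One process is started per GPU, so -np and the slot count after the
    host both equal len(devices).
    """
    n_device = len(devices)
    env = "CUDA_VISIBLE_DEVICES=" + ",".join(str(d) for d in devices)
    args = [
        "horovodrun", "-np", str(n_device), "-H", f"{host}:{n_device}",
        "python", "-W", "ignore", "main_prm.py", "-c", config,
    ]
    # shlex.quote leaves plain paths untouched but protects unusual characters.
    return env + " " + " ".join(shlex.quote(a) for a in args)


if __name__ == "__main__":
    print(launch_command("./cfgs/motion_seg_h_mb_cross.yaml", [0, 1, 2, 3]))
```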

The following command samples a set of operations with relatively high sampling probabilities from the optimized supervision space (the distribution parameters are stored in dist_params.npy under the logging directory):

python load_and_sample.py -c cfgs/${your_config_file} --ty=loss --params-path=${your_parameter_file}

Insert the sampled supervisions into the corresponding trainer file, then run the following command:

CUDA_VISIBLE_DEVICES=${devices} horovodrun -np ${n_device} -H ${your_machine_ip}:${n_device} python -W ignore main_prm.py -c ./cfgs/motion_seg_h_mb_cross_tst_perf.yaml

To test a trained model, set the resume argument in ./cfgs/motion_seg_h_mb_cross_pure_test_perf.yaml to the saved model's checkpoint and run the following command:

CUDA_VISIBLE_DEVICES=${devices} horovodrun -np 1 -H ${your_machine_ip}:1 python -W ignore main_prm.py -c ./cfgs/motion_seg_h_mb_cross_pure_test_perf.yaml

Please download optimized distribution parameters and trained models from here.

- For the release, we reduce the maximum number of segmentations sampled for each training shape from 5 to 2.

- We test on 4 GPUs.
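The `load_and_sample.py` step above picks "operations with relatively high sampling probabilities". To build intuition for what that selection does, here is a minimal sketch. It is not the repository's implementation, and it rests on two assumptions:

- **Layout of `dist_params.npy` (assumed).** Each row holds unnormalized logits over a list of candidate operations for one slot of the supervision. The real layout is defined by `load_and_sample.py`.
- **Operation names (hypothetical).** The names used in the example are placeholders.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_operations(dist_params, op_names, k=1):
    """For each slot, return the k (name, probability) pairs with the highest probability.

    ASSUMPTION: dist_params[i] holds unnormalized logits over `op_names` for
    slot i. The real layout of dist_params.npy is defined by load_and_sample.py,
    and the operation names passed in are hypothetical.
    """
    picks = []
    for logits in dist_params:
        probs = softmax(logits)
        order = sorted(range(len(probs)), key=lambda j: probs[j], reverse=True)
        picks.append([(op_names[j], round(probs[j], 3)) for j in order[:k]])
    return picks
```

To try it on a real run, load the parameters with `numpy.load("dist_params.npy").tolist()`. If the stored array does not match the assumed layout, adapt the loop accordingly.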

- Step 1: Download the Traceparts data from the SPFN repo and put it under the data/ folder.

- Step 2: Download the data splits from Data Split and put them under the data/traceparts_data folder. Unzip the zip file to get the data splitting files.
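Before launching a long search, it can help to confirm that the two steps above produced the expected folder. The helper below is hypothetical and not part of the repository.

- It only checks that `data/traceparts_data` (the folder named in Step 2) exists and is not empty.
- It does not check individual split file names, since those are not listed here.

```python
from pathlib import Path

# Required layout taken from Steps 1-2 above; the check itself is a hypothetical helper.
TRACEPARTS_DIRS = ["data/traceparts_data"]


def check_layout(root, required_dirs):
    """Return a list of problems for directories that should exist under `root`."""
    problems = []
    for rel in required_dirs:
        path = Path(root) / rel
        if not path.is_dir():
            problems.append(f"missing directory: {rel}")
        elif not any(path.iterdir()):
            problems.append(f"empty directory: {rel}")
    return problems


if __name__ == "__main__":
    for problem in check_layout(".", TRACEPARTS_DIRS):
        print(problem)
```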

To create and optimize the intermediate supervision space for the primitive fitting task, please use the following command:

CUDA_VISIBLE_DEVICES=${devices} horovodrun -np ${n_device} -H ${your_machine_ip}:${n_device} python -W ignore main_prm.py -c ./cfgs/prim_seg_h_mb_cross_v2_tree.yaml

The default backbone is DGCNN.

To optimize the supervision distribution space for the first stage of the primitive fitting task using an HPNet-style network architecture, please use the following command:

CUDA_VISIBLE_DEVICES=${devices} horovodrun -np ${n_device} -H ${your_machine_ip}:${n_device} python -W ignore main_prm.py -c ./cfgs/prim_seg_h_mb_v2_tree_optim_loss.yaml

The following command samples a set of operations with relatively high sampling probabilities from the optimized supervision space (the distribution parameters are stored in dist_params.npy under the logging directory):

python load_and_sample.py -c cfgs/${your_config_file} --ty=loss --params-path=${your_parameter_file}

Insert the sampled supervisions into the corresponding trainer file, then run the following command:

CUDA_VISIBLE_DEVICES=${devices} horovodrun -np ${n_device} -H ${your_machine_ip}:${n_device} python -W ignore main_prm.py -c ./cfgs/prim_seg_h_ob_v2_tree.yaml

Set resume in prim_inference.yaml to the path of the saved model weights, then use the following command to evaluate the trained model:

python -W ignore main_prm.py -c ./cfgs/prim_inference.yaml

Remember to select a free GPU in the config file.
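Editing `resume` by hand is easy to get wrong when switching between checkpoints. The sketch below rewrites the `resume` line of a config such as `./cfgs/prim_inference.yaml` in place. Its scope is limited:

- **Assumption about the config format.** The file is assumed to contain exactly one line of the form `resume: <path>`.
- **Hypothetical name.** `set_resume` is not part of the repository.
- **What it leaves alone.** It does not touch the GPU selection, whose key name is not documented here.

```python
import re
from pathlib import Path


def set_resume(config_path, checkpoint):
    """Point the `resume` entry of a YAML config at `checkpoint`, in place.

    ASSUMPTION: the config has exactly one `resume:` line (at any indentation).
    A line-level regex edit keeps comments and key order intact, unlike a YAML
    load-and-dump round trip; a trailing comment on the resume line itself is dropped.
    """
    path = Path(config_path)
    text = path.read_text()
    pattern = re.compile(r"^([ \t]*resume[ \t]*:).*$", re.MULTILINE)
    # A function replacement avoids backslash escapes in Windows-style paths.
    new_text, count = pattern.subn(lambda m: f"{m.group(1)} {checkpoint}", text)
    if count != 1:
        raise ValueError(f"expected one 'resume:' entry in {path}, found {count}")
    path.write_text(new_text)
```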

You should modify prim_inference.py to choose between the clustering-based and the classification-based segmentation module.

For clustering-based segmentation, use the _clustering_test function; for classification-based segmentation, use the _test function.

Please download optimized distribution parameters from here.

- Unfriendly operations: Sometimes the model samples operations that produce supervision features with very large absolute values. This scarcely hinders the optimization process, because such supervisions lead to low metric values and models using them are not passed to the next step. It does, however, make the optimization process ugly.

  The problem could probably be solved by forbidding certain operation combinations/sequences. Please feel free to submit a pull request if you can solve it.

- Unnormalized rewards: Reward values used for the three tasks may have different scales, which may affect the optimization process to some extent. They could probably be normalized using prior knowledge of the generalization gap of each task and its corresponding training data.
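The two issues above are described, not solved, in this repository. As a hedged illustration of the mitigations they suggest, the sketch below shows three checks:

1. Rejecting supervision features that are non-finite or exceed an illustrative magnitude threshold.
2. Forbidding certain consecutive operation pairs.
3. Dividing each task's reward by a prior per-task generalization-gap estimate.

All function names, operation labels, and thresholds are hypothetical.

```python
import math


def is_unfriendly(feature, max_abs=1e4):
    """Flag a supervision feature whose values are non-finite or huge.

    Hypothetical check: `max_abs` is an illustrative threshold, not a value
    taken from this repository.
    """
    return any(not math.isfinite(v) or abs(v) > max_abs for v in feature)


def violates_rules(op_sequence, forbidden_pairs):
    """True if two consecutive operations form a forbidden (a, b) pair.

    The operation labels and the forbidden set are hypothetical examples.
    """
    return any((a, b) in forbidden_pairs for a, b in zip(op_sequence, op_sequence[1:]))


def normalize_reward(reward, task, reference_gap):
    """Rescale a raw reward by a per-task reference generalization gap.

    ASSUMPTION: reference_gap[task] is a positive prior estimate of the typical
    train/test gap for that task, so rewards from different tasks end up on
    comparable scales.
    """
    scale = reference_gap[task]
    if scale <= 0:
        raise ValueError(f"reference gap for {task!r} must be positive")
    return reward / scale
```

Dividing by a reference gap is only one option. When no prior is available, standardizing each task's rewards against a running mean and standard deviation is a common alternative.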

Our code and data are released under the MIT License (see the LICENSE file for details).

Part of the code is taken from HPNet, SPFN, Deep Part Induction, PointNet2, MixStyle.

[1] Yi, L., Kim, V. G., Ceylan, D., Shen, I. C., Yan, M., Su, H., ... & Guibas, L. (2016). A scalable active framework for region annotation in 3d shape collections. ACM Transactions on Graphics (ToG), 35(6), 1-12.

[2] Mo, K., Zhu, S., Chang, A. X., Yi, L., Tripathi, S., Guibas, L. J., & Su, H. (2019). Partnet: A large-scale benchmark for fine-grained and hierarchical part-level 3d object understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 909-918).

[3] Yi, L., Huang, H., Liu, D., Kalogerakis, E., Su, H., & Guibas, L. (2018). Deep part induction from articulated object pairs. arXiv preprint arXiv:1809.07417.

[4] Li, L., Sung, M., Dubrovina, A., Yi, L., & Guibas, L. J. (2019). Supervised fitting of geometric primitives to 3d point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 2652-2660).