My TensorFlow implementation of *TabNet: Attentive Interpretable Tabular Learning* by Sercan O. Arik and Tomas Pfister (https://arxiv.org/pdf/1908.07442.pdf).

Video from Sercan: https://www.youtube.com/watch?v=tQuIcLDO5iE

Sparsemax, from paper to code: https://medium.com/deeplearningmadeeasy/sparsemax-from-paper-to-code-351e9b26647b


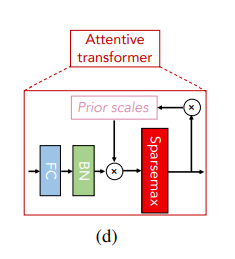

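Learnable mask:

- $\mathbf{M[i]} \in \Re ^ {B \times D}$ is the mask at decision step $i$.
- $\mathbf{P[i]} = \prod\nolimits_{j=1}^{i} (\gamma - \mathbf{M[j]})$ is the prior scale term, where $\gamma$ is a relaxation parameter and $\mathbf{P[0]}$ is initialized to all ones.
- $\mathbf{M[i]} = \text{sparsemax}(\mathbf{P[i-1]} \cdot \text{h}_i(\mathbf{a[i-1]}))$, where $\text{h}_i$ is a trainable function (an FC layer followed by BN in the paper) applied to the output $\mathbf{a[i-1]}$ of the previous step.
- Sparsemax makes each row of the mask sum to one: $\sum\nolimits_{j=1}^{D} \mathbf{M[i]_{b,j}} = 1$ for every sample $b$.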
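To make the mask mechanics concrete, here is a minimal plain-Python sketch (not the TensorFlow code of this repository) of sparsemax and of the prior update written in its recursive form, $\mathbf{P[i]} = \mathbf{P[i-1]} \cdot (\gamma - \mathbf{M[i]})$ with $\mathbf{P[0]} = 1$. The names `sparsemax` and `attentive_masks` are hypothetical, and the outputs of $\text{h}_i(\mathbf{a[i-1]})$ are assumed to be given directly as `step_logits` instead of being produced by a trainable layer.

```python
def sparsemax(z):
    """Sparsemax of a 1-D list: Euclidean projection of z onto the probability simplex."""
    z_sorted = sorted(z, reverse=True)
    cumsum = 0.0
    k_z, support_sum = 0, 0.0
    for k, z_k in enumerate(z_sorted, start=1):
        cumsum += z_k
        # z_(k) is in the support while 1 + k * z_(k) > sum of the k largest values
        if 1 + k * z_k > cumsum:
            k_z, support_sum = k, cumsum
    tau = (support_sum - 1) / k_z  # threshold subtracted from every entry
    return [max(v - tau, 0.0) for v in z]


def attentive_masks(step_logits, gamma=1.5):
    """Compute M[1..N_steps] for a batch.

    step_logits[i][b][j] stands in for h_i(a[i-1]) (hypothetical input).
    """
    batch_size, n_features = len(step_logits[0]), len(step_logits[0][0])
    prior = [[1.0] * n_features for _ in range(batch_size)]  # P[0] = all ones
    masks = []
    for logits in step_logits:
        # M[i] = sparsemax(P[i-1] * h_i(a[i-1])), row by row
        mask = [
            sparsemax([p * h for p, h in zip(p_row, h_row)])
            for p_row, h_row in zip(prior, logits)
        ]
        # P[i] = P[i-1] * (gamma - M[i]), element-wise
        prior = [
            [p * (gamma - m) for p, m in zip(p_row, m_row)]
            for p_row, m_row in zip(prior, mask)
        ]
        masks.append(mask)
    return masks


step_logits = [
    [[2.0, 1.0, -1.0], [0.3, 0.2, 0.1]],  # step 1: 2 samples, 3 features
    [[1.0, 1.0, 1.0], [0.0, 2.0, 0.0]],   # step 2
]
masks = attentive_masks(step_logits, gamma=1.5)
print(masks[0][0])  # [1.0, 0.0, 0.0]
print(masks[1][0])  # [0.0, 0.5, 0.5]
```

Feature 0, fully selected for the first sample at step 1, sees its prior drop to $\gamma - 1 = 0.5$ and is left out of that sample's mask at step 2.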

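To further control the sparsity of the selected features, the paper adds an entropy-based regularization term to the overall loss, weighted by a coefficient $\lambda_{sparse}$:

$L_{sparse} = \sum\nolimits_{i=1}^{N_{steps}} \sum\nolimits_{b=1}^{B} \sum\nolimits_{j=1}^{D} \frac{-\mathbf{M_{b,j}[i]} \log(\mathbf{M_{b,j}[i]} + \epsilon)}{N_{steps} \cdot B},$

where $\epsilon$ is a small number for numerical stability.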
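A minimal plain-Python sketch of $L_{sparse}$ as written above, with masks stored as nested lists indexed `[step][sample][feature]`. The name `sparsity_loss` and the default `eps=1e-10` are assumptions; per the paper, the result would be scaled by $\lambda_{sparse}$ and added to the task loss.

```python
import math


def sparsity_loss(masks, eps=1e-10):
    """Entropy-based L_sparse, averaged over decision steps and batch samples.

    masks[i][b][j] holds M_{b,j}[i]; eps avoids log(0).
    """
    n_steps, batch_size = len(masks), len(masks[0])
    total = 0.0
    for mask in masks:        # decision steps i
        for row in mask:      # samples b
            for m in row:     # features j
                total += -m * math.log(m + eps)
    return total / (n_steps * batch_size)


one_hot = [[[1.0, 0.0, 0.0, 0.0]]]      # 1 step, 1 sample, 4 features
uniform = [[[0.25, 0.25, 0.25, 0.25]]]
print(sparsity_loss(one_hot))  # close to 0: a one-hot mask is maximally sparse
print(sparsity_loss(uniform))  # close to log(4): a uniform mask is maximally dense
```

Minimizing this term therefore pushes each mask row toward a few selected features.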

From the original paper, the feature transformation is given by:

$[\mathbf{d[i]}, \mathbf{a[i]}] = f_i(\mathbf{M[i]} \odot \mathbf{f})$

where:

- $B$ denotes the batch size.
- $D$ represents the number of features.
- $\mathbf{f} \in \Re ^ {B \times D}$ is the matrix of input features.
- $\mathbf{M[i]} \in \Re ^ {B \times D}$ is the learnable mask applied to the features.
- $\mathbf{d[i]} \in \Re ^ {B \times N_d}$ and $\mathbf{a[i]} \in \Re ^ {B \times N_a}$ are the outputs of the transformation.

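Following this:

- $\mathbf{M[i]} \odot \mathbf{f}$ is an element-wise multiplication, so the result has shape $(B, D)$.
- $[\mathbf{d[i]}, \mathbf{a[i]}]$, the concatenation of the two outputs, has shape $(B, N_d + N_a)$: the first $N_d$ columns form $\mathbf{d[i]}$, which contributes to the decision output, and the remaining $N_a$ columns form $\mathbf{a[i]}$, which feeds the attentive transformer of the next step.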
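The shape bookkeeping above can be checked with a plain-Python sketch on nested lists. The helper names are hypothetical, the feature-transformer output is made up for illustration, and taking the first $N_d$ columns as $\mathbf{d[i]}$ is an assumed convention that follows the $[\mathbf{d[i]}, \mathbf{a[i]}]$ ordering.

```python
def mask_features(mask, features):
    """Element-wise product M[i] * f: both inputs and the output have shape (B, D)."""
    return [[m * x for m, x in zip(m_row, f_row)] for m_row, f_row in zip(mask, features)]


def split_decision_attention(transformed, n_d):
    """Split a (B, N_d + N_a) output into d[i] (first N_d columns) and a[i] (the rest)."""
    d = [row[:n_d] for row in transformed]
    a = [row[n_d:] for row in transformed]
    return d, a


mask = [[0.5, 0.0, 0.5], [0.0, 1.0, 0.0]]  # B = 2, D = 3, each row sums to 1
features = [[2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
print(mask_features(mask, features))  # [[1.0, 0.0, 2.0], [0.0, 6.0, 0.0]]

# Hypothetical feature-transformer output with N_d = 2 and N_a = 3
transformed = [[1.0, 2.0, 3.0, 4.0, 5.0], [6.0, 7.0, 8.0, 9.0, 10.0]]
d, a = split_decision_attention(transformed, n_d=2)
print(d)  # [[1.0, 2.0], [6.0, 7.0]]
print(a)  # [[3.0, 4.0, 5.0], [8.0, 9.0, 10.0]]
```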

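The function $f_i$ is the feature transformer. In the paper, it stacks FC → BN → GLU blocks, some shared across all decision steps and the others specific to step $i$, joined by residual connections scaled by $\sqrt{0.5}$.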

Note on the $\sqrt{0.5}$ scaling

Residual connections in neural networks involve adding the output of one layer to the output of one or more previous layers. This can be beneficial in deep architectures to mitigate the vanishing gradient problem and improve convergence.

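When two tensors are added element-wise, the variance of the output can increase. To understand why, consider:

Let $X$ and $Y$ be two uncorrelated random variables, both with variance $\sigma^2$. Then $\text{Var}(X + Y) = \text{Var}(X) + \text{Var}(Y) = 2\sigma^2$.

To keep the variance of the sum consistent with that of each input, the sum is scaled by $\sqrt{0.5}$: $\text{Var}(\sqrt{0.5}\,(X + Y)) = 0.5 \cdot 2\sigma^2 = \sigma^2$.

This reasoning underpins the division by $\sqrt{2}$ (equivalently, the multiplication by $\sqrt{0.5}$) after each residual addition in TabNet's feature transformer.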
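A quick Monte Carlo check of this argument in plain Python, assuming standard normal inputs (any two uncorrelated inputs with equal variance would behave the same way). `scaled_residual` and `variance` are hypothetical helpers, not functions from this repository.

```python
import math
import random


def scaled_residual(x, y):
    """Add two tensors (flat lists here) and rescale by sqrt(0.5) to preserve variance."""
    scale = math.sqrt(0.5)
    return [scale * (a + b) for a, b in zip(x, y)]


def variance(values):
    """Population variance of a list of floats."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


rng = random.Random(0)  # fixed seed for reproducibility
n = 100_000
x = [rng.gauss(0.0, 1.0) for _ in range(n)]  # Var(X) = 1
y = [rng.gauss(0.0, 1.0) for _ in range(n)]  # Var(Y) = 1, independent of X

plain_var = variance([a + b for a, b in zip(x, y)])
scaled_var = variance(scaled_residual(x, y))
print(f"{plain_var:.2f}")   # close to 2
print(f"{scaled_var:.2f}")  # close to 1
```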

Reference: Gehring, J., Auli, M., Grangier, D., Yarats, D., & Dauphin, Y. N. (2017). Convolutional Sequence to Sequence Learning. (arXiv:1705.03122).

Reference: Dauphin, Y. N., Fan, A., Auli, M., & Grangier, D. (2017). Language Modeling with Gated Convolutional Networks. Proceedings of the 34th International Conference on Machine Learning - Volume 70, ICML'17, 933–941. (arXiv).

The Gated Linear Unit (GLU) activation function is employed in TabNet. The authors observed an empirical advantage of using GLU over conventional nonlinearities like ReLU.

What I believe: GLUs offer finer-grained modulation of the information flowing through the network. Each unit is scaled by a learned gate between 0 and 1, allowing a nuanced blend of features, whereas ReLU acts as a binary gate (a unit either passes its input unchanged or outputs zero). This finer control might give TabNet more flexibility and better performance when handling diverse feature interactions.

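Following Dauphin et al., a GLU gates one half of a linear projection with the sigmoid of the other half:

$\text{GLU}(x) = (x W_1 + b_1) \odot \sigma(x W_2 + b_2)$

where $W = [W_1 \; W_2]$ and $b = [b_1 \; b_2]$ are split into two halves along the output dimension, and: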

- $x$ is the input
- $\sigma$ is the sigmoid function
- $W$ is the weight matrix
- $b$ is the bias vector

In TabNet, the linear transformation is handled by the FC layer. Thus, my implementation of GLU will focus on the sigmoid activation and the element-wise multiplication.
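A minimal plain-Python sketch of that GLU step, assuming the FC layer has already produced $2n$ values per sample and that the first half is the linear part while the second half is the gate (an assumed ordering). The names `glu` and `sigmoid` are illustrative.

```python
import math


def sigmoid(v):
    """Numerically stable logistic sigmoid."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def glu(z):
    """GLU on one FC output row of length 2n: first half * sigmoid(second half)."""
    if len(z) % 2 != 0:
        raise ValueError("GLU expects an even number of units")
    n = len(z) // 2
    linear, gate = z[:n], z[n:]
    return [lin * sigmoid(g) for lin, g in zip(linear, gate)]


print(glu([2.0, -3.0, 0.0, 0.0]))  # [1.0, -1.5]: a zero gate passes half of each value
```

In this sketch the output has half as many units as its input, so the FC layer in front of it has to produce twice the desired width.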

References:

- Hoffer, E.; Hubara, I.; and Soudry, D. 2017. Train longer, generalize better: closing the generalization gap in large batch training of neural networks. (arXiv:1705.08741).

- Neofytos Dimitriou, Ognjen Arandjelovic (2020). A New Look at Ghost Normalization. (arXiv:2007.08554).

Ghost Batch Normalization (GBN) is a variation of the conventional Batch Normalization (BN) technique. While BN computes normalization statistics (mean and variance) over the entire batch, GBN calculates these statistics over "ghost batches". These ghost batches are subpartitions of the original batch.

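Let's consider a full batch of $B$ samples, split into $K = B / B_v$ ghost batches $\mathcal{G}_1, \dots, \mathcal{G}_K$ of $B_v$ samples each, where $B_v$ is the virtual batch size.

For a specific ghost batch $\mathcal{G}_k$, each feature is processed as follows:

- Mean

$\mu_k = \frac{1}{B_v} \sum\nolimits_{x \in \mathcal{G}_k} x$

where the sum runs over the $B_v$ values of that feature in $\mathcal{G}_k$.

- Variance

$\sigma_k^2 = \frac{1}{B_v} \sum\nolimits_{x \in \mathcal{G}_k} (x - \mu_k)^2$

- Normalization

$\hat{x} = \frac{x - \mu_k}{\sqrt{\sigma_k^2 + \epsilon}}$

where $\epsilon$ is a small constant for numerical stability.

- Scaling and shift

$y = \gamma \hat{x} + \beta$

where $\gamma$ and $\beta$ are learnable parameters shared by all ghost batches (this $\gamma$ is unrelated to the relaxation parameter of the mask).

This methodology is applied to each ghost batch independently, and the normalized ghost batches are concatenated back into the full batch.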
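The steps above can be sketched in plain Python as follows. This is a training-mode illustration only: it ignores the running statistics a real batch-normalization layer keeps for inference, it assumes the batch size is a multiple of the virtual batch size, and `ghost_batch_norm` and its arguments are hypothetical names.

```python
import math


def ghost_batch_norm(batch, virtual_batch_size, gamma, beta, eps=1e-5):
    """Normalize each ghost batch with its own mean and variance.

    batch: list of B rows with D features each; gamma, beta: D learnable values
    shared by all ghost batches.
    """
    if len(batch) % virtual_batch_size != 0:
        raise ValueError("batch size must be a multiple of virtual_batch_size")
    n_features = len(batch[0])
    out = []
    for start in range(0, len(batch), virtual_batch_size):
        ghost = batch[start:start + virtual_batch_size]
        size = len(ghost)
        # Per-feature statistics computed on this ghost batch only
        mean = [sum(row[j] for row in ghost) / size for j in range(n_features)]
        var = [sum((row[j] - mean[j]) ** 2 for row in ghost) / size for j in range(n_features)]
        for row in ghost:
            out.append([
                gamma[j] * (row[j] - mean[j]) / math.sqrt(var[j] + eps) + beta[j]
                for j in range(n_features)
            ])
    return out


batch = [[1.0], [3.0], [10.0], [30.0]]  # B = 4, D = 1
print(ghost_batch_norm(batch, virtual_batch_size=2, gamma=[1.0], beta=[0.0], eps=0.0))
# [[-1.0], [1.0], [-1.0], [1.0]]
```

With full-batch normalization, the single mean of 11.0 would be dominated by the second pair; with ghost batches of 2, each pair is normalized on its own scale.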

TODO:

- Write a unit test checking that each row of a mask sums to 1

- Add sparsemax documentation to README.md

- Implement L_sparse

- Finish the mathematical documentation in the README

- PRIORITY: completely rethink the design regarding batch_size and virtual_batch_size > GBN > FT > TabNet

- Create TabNetClassifier and TabNetRegressor classes instead of using target_is_discrete

Official implementation by the authors (Google Research): https://github.com/google-research/google-research/tree/master/tabnet