This repo provides code illustrating the following blog article: https://blog.octo.com/amener-son-projet-de-machine-learning-jusquen-production-avec-wheel-et-docker/.

It demonstrates one way to package, with Wheel and Docker, a Machine Learning application that classifies muffins and chihuahuas in images.

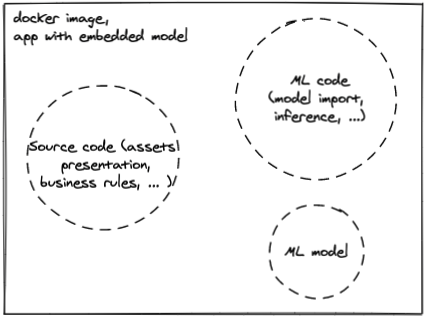

This approach corresponds to packaging an ML application with "an embedded model", as described in the Continuous Delivery for Machine Learning (CD4ML) article on Martin Fowler's blog.

This other repo demonstrates a different packaging approach, with a "model isolated as a separate service", also described in the CD4ML article.

This app needs:

- a pre-trained Deep Learning model 🧠,

- some images of muffins 🍪 and chihuahuas 🐶, for demonstration purposes,

- some Python code 🐍.

This Python app is packaged with:

- the Wheel format ☸️, with setuptools,

- and Docker 🐳.

You can test the end result immediately with the following Docker container:

$> docker pull mho7/muffin-v-chihuahua-embedded:v1;

$> docker run -p 8080:8080 mho7/muffin-v-chihuahua-embedded:v1;

The remaining parts of this README explain how to build this muffin-v-chihuahua-embedded Docker container.

You can also test the end result immediately with the Wheel distribution available as a release artifact of this repo, by executing the following commands in a Python >= 3.8 environment:

$> pip install https://github.com/Mehdi-H/muffin-v-chihuahua-with-embedded-model/releases/download/v1.0/muffin_v_chihuahua_with_embedded_model-1.0-py3-none-any.whl;

$> muffin-v-chihuahua-with-embedded-model run-demo;

This muffin-v-chihuahua classifier consists of a single application that contains:

-

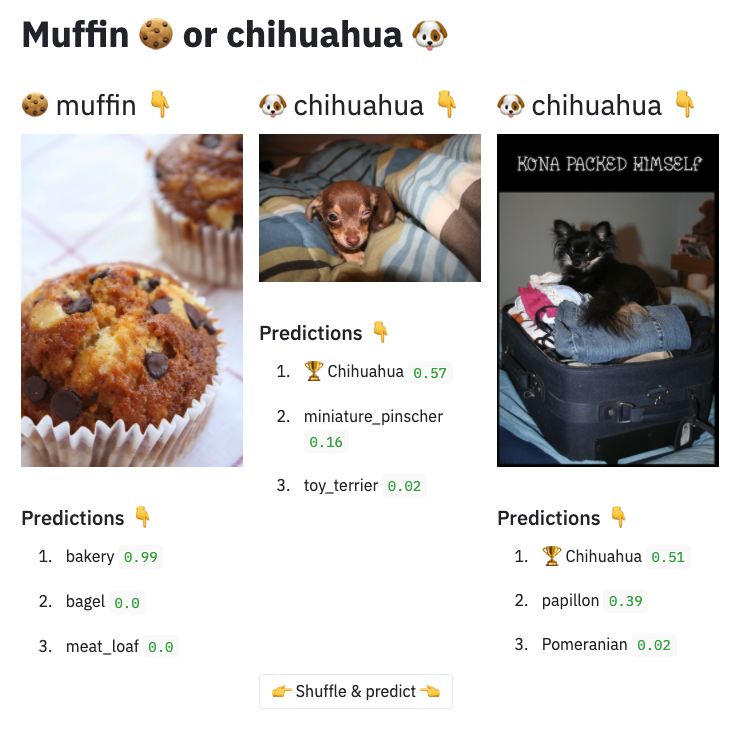

A Streamlit frontend application, with the responsibility to expose images of muffins or chihuahua with their associated classification prediction

-

A Deep Learning model able to predict the presence of a muffin or a chihuahua in an image.

-

Some images of muffins and chihuahuas for demonstration purposes.

The model is thus embedded in the application, producing a single artifact.
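Because the model ships inside the package, the code can locate it relative to the installed package instead of relying on an absolute path. A minimal sketch using the standard library's importlib.resources (Python 3.9+), assuming the weights live in a hypothetical models/ subfolder of the muffin_v_chihuahua package under a hypothetical file name; the actual layout in this repo may differ.

```python
from importlib.resources import files
from pathlib import Path


def embedded_model_path(package: str = "muffin_v_chihuahua",
                        filename: str = "model.h5") -> Path:
    """Return the on-disk path of a model file shipped inside `package`.

    Hypothetical layout: <package>/models/<filename>. This assumes the package
    is installed as regular files (as pip does when installing a wheel).
    """
    resource = files(package).joinpath("models").joinpath(filename)
    if not resource.is_file():
        raise FileNotFoundError(f"{filename} not found in {package}/models/")
    return Path(str(resource))
```

The returned path can then be handed to whatever loader the model format requires.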

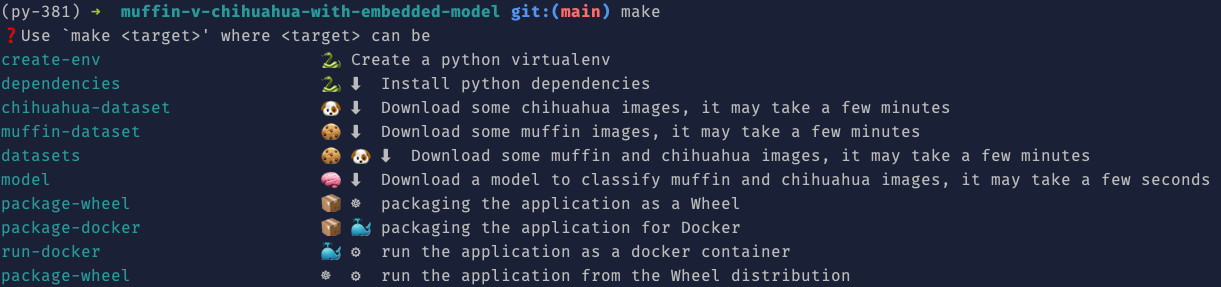

Everything you can do in this repo is listed when you run make or make help in your terminal, following the self-documented Makefile convention.
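In the self-documented Makefile convention, each public target carries a "## description" comment on its own line, and the help target extracts those lines (usually with grep and awk). As an illustration, here is the same extraction as a Python sketch; the target names in the test data are hypothetical, not copied from this repo's Makefile.

```python
import re

# Matches lines such as "datasets: ## Download the demo images"
TARGET_DOC = re.compile(r"^([a-zA-Z0-9_-]+):.*?## (.*)$")


def documented_targets(makefile_text: str) -> dict[str, str]:
    """Map each documented target to its '## ' description, in file order."""
    targets = {}
    for line in makefile_text.splitlines():
        match = TARGET_DOC.match(line)
        if match:
            targets[match.group(1)] = match.group(2).strip()
    return targets
```

Targets without a "##" comment are skipped, which is how the convention keeps internal helper targets out of make help.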

You can run make datasets at the root of this repo.

This command will populate the muffin_v_chihuahua/data/chihuahua/ folder with a dataset of chihuahua images and muffin_v_chihuahua/data/muffin/ with muffin images.

Those images are downloaded from http://image-net.org/.
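Since each class has its own folder, an image's label can be read from its parent directory name. A minimal sketch that counts the downloaded images per class, assuming they are JPEG or PNG files placed directly under data/&lt;class&gt;/ (the accepted extensions are an assumption).

```python
from collections import Counter
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}  # assumed image formats


def count_images_per_class(data_dir: Path) -> Counter:
    """Count image files in each class sub-folder (e.g. data/muffin/, data/chihuahua/)."""
    counts = Counter()
    for class_dir in sorted(p for p in data_dir.iterdir() if p.is_dir()):
        for image in class_dir.iterdir():
            # The folder name is used as the class label.
            if image.is_file() and image.suffix.lower() in IMAGE_SUFFIXES:
                counts[class_dir.name] += 1
    return counts
```

For example, count_images_per_class(Path("muffin_v_chihuahua/data")) after make datasets.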

To avoid training an ad-hoc model for this computer vision task, we can download a pre-trained model with make model.

This command downloads the InceptionV3 model listed on the Keras website.

The model is downloaded from François Chollet's deep-learning-models repository, where the official InceptionV3 model is published as an artifact of release v0.5.
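A release artifact like this only needs to be fetched once. A minimal sketch of an idempotent download with the standard library; the URL and destination are parameters because the exact artifact fetched by make model is not reproduced here, so any values you pass are your own assumption.

```python
import shutil
import urllib.request
from pathlib import Path


def download_if_missing(url: str, destination: Path) -> bool:
    """Download `url` to `destination` unless it already exists.

    Returns True if a download happened, False if the existing file was kept.
    Data is written to a ".part" file first, so an interrupted download
    never leaves a truncated model at `destination`.
    """
    if destination.exists():
        return False
    destination.parent.mkdir(parents=True, exist_ok=True)
    partial = destination.with_name(destination.name + ".part")
    with urllib.request.urlopen(url) as response, open(partial, "wb") as out:
        shutil.copyfileobj(response, out)
    partial.replace(destination)  # atomic rename on the same filesystem
    return True
```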

You can run make package-wheel to build a Wheel distribution from:

- the setup.py, setup.cfg and MANIFEST.in files,

- the Python sources in the muffin_v_chihuahua package,

- the images of muffins and chihuahuas in the data/ folder,

- the ML pre-trained model in the muffin_v_chihuahua package.

This command creates a dist/ folder containing the *.whl distribution.
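A wheel is a ZIP archive, so one way to check that the model and the images really ended up inside it is to list its contents. A sketch using the standard library's zipfile; the file suffixes searched for are assumptions about how the model and images are stored.

```python
import zipfile
from pathlib import Path


def find_embedded_files(wheel: Path,
                        suffixes=(".h5", ".jpg", ".jpeg", ".png")) -> list[str]:
    """List the members of `wheel` whose names end with one of `suffixes`."""
    with zipfile.ZipFile(wheel) as archive:
        return sorted(
            name for name in archive.namelist()
            if name.lower().endswith(tuple(suffixes))
        )
```

For example, find_embedded_files(next(Path("dist").glob("*.whl"))) should list the model file and the demo images if MANIFEST.in and the package data settings include them.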

You can run make package-docker to build a Docker image from:

- the Dockerfile that describes the image,

- the Wheel distribution generated in the dist/ folder.

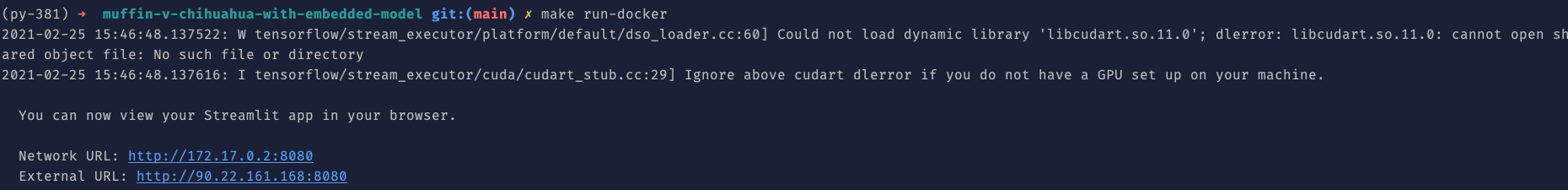

You can run the application with Docker using the following command: make run-demo.

While the application is running in your terminal, you can open your browser at http://localhost:8080 to access the Streamlit frontend.
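The frontend may need a moment before it answers on port 8080. A small sketch that polls the URL until it responds, for example in a smoke test after make run-demo; the default timeout and polling interval are arbitrary assumptions.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str = "http://localhost:8080", timeout: float = 30.0,
                  interval: float = 0.5) -> bool:
    """Poll `url` until it returns any HTTP response or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            return True  # the server answered, even if with an error status
        except OSError:  # connection refused, timeout, DNS failure...
            time.sleep(interval)
    return False
```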

It should look like the following:

- in your terminal 👇

- in your browser 👇

You can quit the application in your terminal with Ctrl+C when you are done.

Once the Wheel distribution is produced with make package-wheel, you can install the muffin_v_chihuahua Python package locally, in a Python virtual environment, with the following command: pip install dist/muffin_v_chihuahua_with_embedded_model-1.0-py3-none-any.whl.
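The wheel file name in the command above follows the wheel specification (PEP 427): {distribution}-{version}(-{build tag})?-{python tag}-{abi tag}-{platform tag}.whl. Its py3-none-any suffix means a pure-Python wheel tied to no ABI and no platform, which is why the same file installs on any OS with a compatible Python 3. A minimal parser sketch (it does not enforce every rule of the spec):

```python
from typing import NamedTuple, Optional


class WheelName(NamedTuple):
    distribution: str
    version: str
    build: Optional[str]
    python_tag: str
    abi_tag: str
    platform_tag: str


def parse_wheel_filename(filename: str) -> WheelName:
    """Split a wheel file name into its PEP 427 fields (no full validation)."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel file: {filename}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 5:  # no optional build tag
        name, version, python_tag, abi_tag, platform_tag = parts
        build = None
    elif len(parts) == 6:
        name, version, build, python_tag, abi_tag, platform_tag = parts
    else:
        raise ValueError(f"unexpected number of fields in {filename}")
    return WheelName(name, version, build, python_tag, abi_tag, platform_tag)
```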

You can check that the package is installed with pip freeze | grep muffin.
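The same check can be done from Python with importlib.metadata (in the standard library since Python 3.8), which can be handy inside a test or a notebook. A sketch:

```python
from importlib.metadata import distributions


def installed_matching(fragment: str) -> list[tuple[str, str]]:
    """Return sorted (name, version) pairs of installed distributions whose name contains `fragment`."""
    fragment = fragment.lower()
    found = set()
    for dist in distributions():
        name = dist.metadata.get("Name")
        if name and fragment in name.lower():
            found.add((name, dist.version))
    return sorted(found)
```

After installing the wheel, installed_matching("muffin") should include the muffin package and its version.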

The muffin_v_chihuahua application is then available locally from the command line, as defined in the muffin_v_chihuahua/main.py script.

You can run the muffin-v-chihuahua classification demo with the following command: muffin-v-chihuahua-with-embedded-model run-demo.
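How the run-demo subcommand is wired is defined in muffin_v_chihuahua/main.py, which is not reproduced here. As a hypothetical sketch only: a console-script entry point can expose a run-demo subcommand that launches the Streamlit app. The argparse layout, the app.py script name and the --dry-run flag are assumptions; streamlit run and its --server.port option are part of the standard Streamlit CLI.

```python
import argparse
import subprocess
import sys


def build_streamlit_command(app_script: str, port: int) -> list[str]:
    """Command line that serves `app_script` with Streamlit on `port`."""
    return [sys.executable, "-m", "streamlit", "run", app_script,
            "--server.port", str(port)]


def main(argv=None) -> int:
    """Hypothetical entry point: a console script would point at this function."""
    parser = argparse.ArgumentParser(prog="muffin-v-chihuahua-with-embedded-model")
    subcommands = parser.add_subparsers(dest="command", required=True)
    demo = subcommands.add_parser("run-demo", help="start the Streamlit demo")
    demo.add_argument("--port", type=int, default=8080)
    demo.add_argument("--app", default="app.py",  # hypothetical script path
                      help="Streamlit script to serve")
    demo.add_argument("--dry-run", action="store_true",  # hypothetical flag
                      help="print the command instead of running it")
    args = parser.parse_args(argv)

    command = build_streamlit_command(args.app, args.port)
    if args.dry_run:
        print(" ".join(command))
        return 0
    return subprocess.call(command)
```

Delegating to streamlit run keeps the CLI thin: the installed command only resolves where the packaged app lives and which port to serve on.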