by Jingyu Yang, Ji Xu, Kun Li, Yu-Kun Lai, Huanjing Yue, Jianzhi Lu, Hao Wu and Yebin Liu.

This code accompanies our paper "Learning to Reconstruct and Understand Indoor Scenes from Sparse Views", published in IEEE Transactions on Image Processing (TIP), 2020.

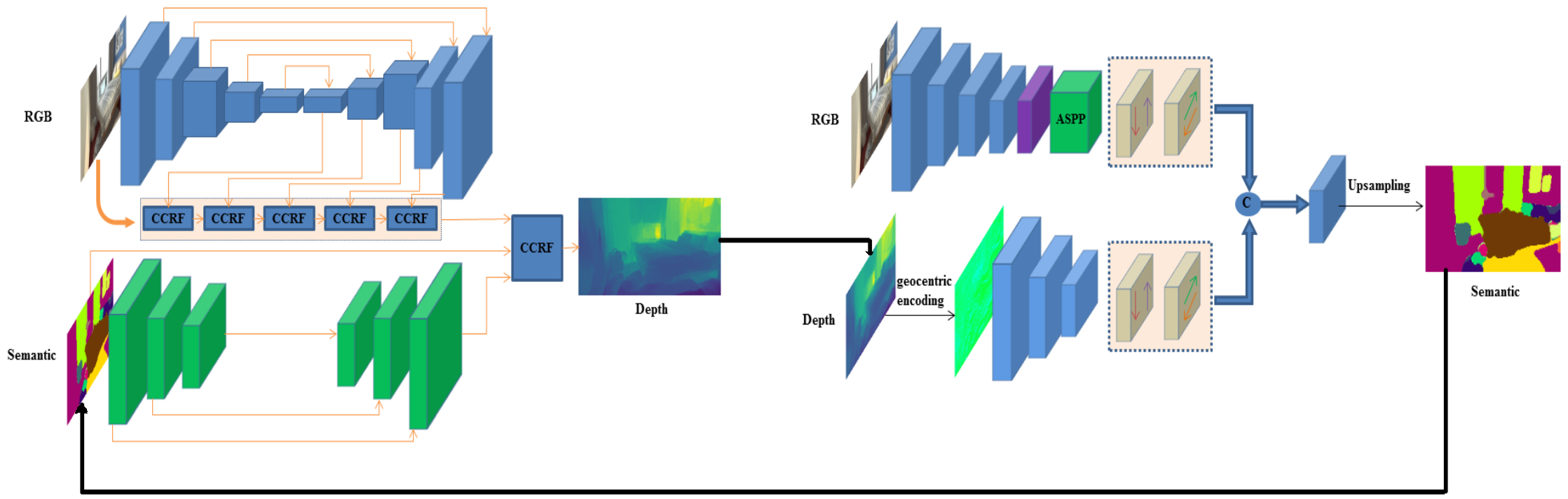

The source code is built for IterNet, an iterative joint optimization method for depth estimation and semantic segmentation proposed in our paper. Before installation, please make sure a working Caffe environment is set up.

In addition, we propose the IterNet RGB-D dataset, which provides photorealistic high-resolution RGB images, accurate depth maps, and pixel-level semantic labels for thousands of complex layouts.

Our dataset is synthetic and was generated with a third-party platform that includes a variety of real-life house styles, real prototype rooms designed by professional designers, and detailed model materials. We also perform high-quality photorealistic rendering: unlike traditional rendering, we split each image into parts, render the parts in a distributed manner, and recombine them into the final image.
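The rendering platform itself is third-party and its internals are not described here. Purely to illustrate the split-and-recombine idea, the sketch below cuts a frame into rectangular tiles, "renders" each tile independently, and pastes the results back together. The tile size and the `render_tile` stub (which just crops a reference image) are hypothetical stand-ins for a real render node.

```python
import numpy as np

def tile_boxes(width, height, tile_w, tile_h):
    """Split a width x height frame into (x0, y0, x1, y1) tiles; edge tiles may be smaller."""
    boxes = []
    for y0 in range(0, height, tile_h):
        for x0 in range(0, width, tile_w):
            boxes.append((x0, y0, min(x0 + tile_w, width), min(y0 + tile_h, height)))
    return boxes

def recombine(tiles, width, height, channels=3):
    """Paste independently rendered tiles, given as (box, array) pairs, into one frame."""
    frame = np.zeros((height, width, channels), dtype=np.uint8)
    for (x0, y0, x1, y1), tile in tiles:
        frame[y0:y1, x0:x1] = tile
    return frame

if __name__ == "__main__":
    # Hypothetical stand-in for a render node: it just crops a reference image.
    reference = np.random.randint(0, 256, size=(960, 1280, 3), dtype=np.uint8)

    def render_tile(box):
        x0, y0, x1, y1 = box
        return reference[y0:y1, x0:x1]

    boxes = tile_boxes(1280, 960, tile_w=320, tile_h=240)  # tile size is an assumption
    # In a real render farm each box would be dispatched to a different machine.
    tiles = [(box, render_tile(box)) for box in boxes]
    assert np.array_equal(recombine(tiles, 1280, 960), reference)
    print(len(boxes), "tiles recombined")
```

Because each tile depends only on its own box, tiles can be rendered in any order and on any machine; only the final paste step needs all of them.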

Table 1 compares various publicly available 2.5D/3D indoor datasets with our IterNet RGB-D dataset. Our dataset provides a total of 12,856 photorealistic images for thousands of layouts at higher image resolutions (1280 × 960 and 1280 × 720), covering more indoor scenes. Moreover, it provides absolute depth maps and precise pixel-level semantic segmentation. Compared with other datasets, the indoor scenes in our dataset are more general and more complex.

| Dataset | NYUv2 | SUN RGB-D | Building Parser | Matterport3D | ScanNet | SUNCG | SceneNet RGB-D | IterNet RGB-D |
|---|---|---|---|---|---|---|---|---|
| Year | 2012 | 2015 | 2017 | 2017 | 2017 | 2017 | 2016 | 2019 |
| Type | Real | Real | Real | Real | Real | Synthetic | Synthetic | Synthetic |
| Images/Scans | 1449 | 10k | 70k | 194k | 1513 | 130k | 5M | 12856 |
| Layouts | 464 | - | 270 | 90 | 1513 | 45622 | 57 | 3214 |
| Object Classes | 894 | 800 | 13 | 40 | ≥50 | 84 | 255 | 333 |
| RGB | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ | ✅ | ✅ |
| Depth | ✅ | ✅ | ✅ | ✅ | ❌ | ✅ | ✅ | ✅ |
| Semantic Label | ✅ | ✅ | ✅ | ✅ | ❌ | ✅ | ✅ | ✅ |
| RGB Texturing | Real | Real | Real | Real | Real | Not photorealistic | Photorealistic | Photorealistic |
| Image Resolution | 640×480 | 640×480 | 1080×1080 | 1280×1024 | 640×480 | 640×480 | 320×240 | 1280×960; 1280×720 |

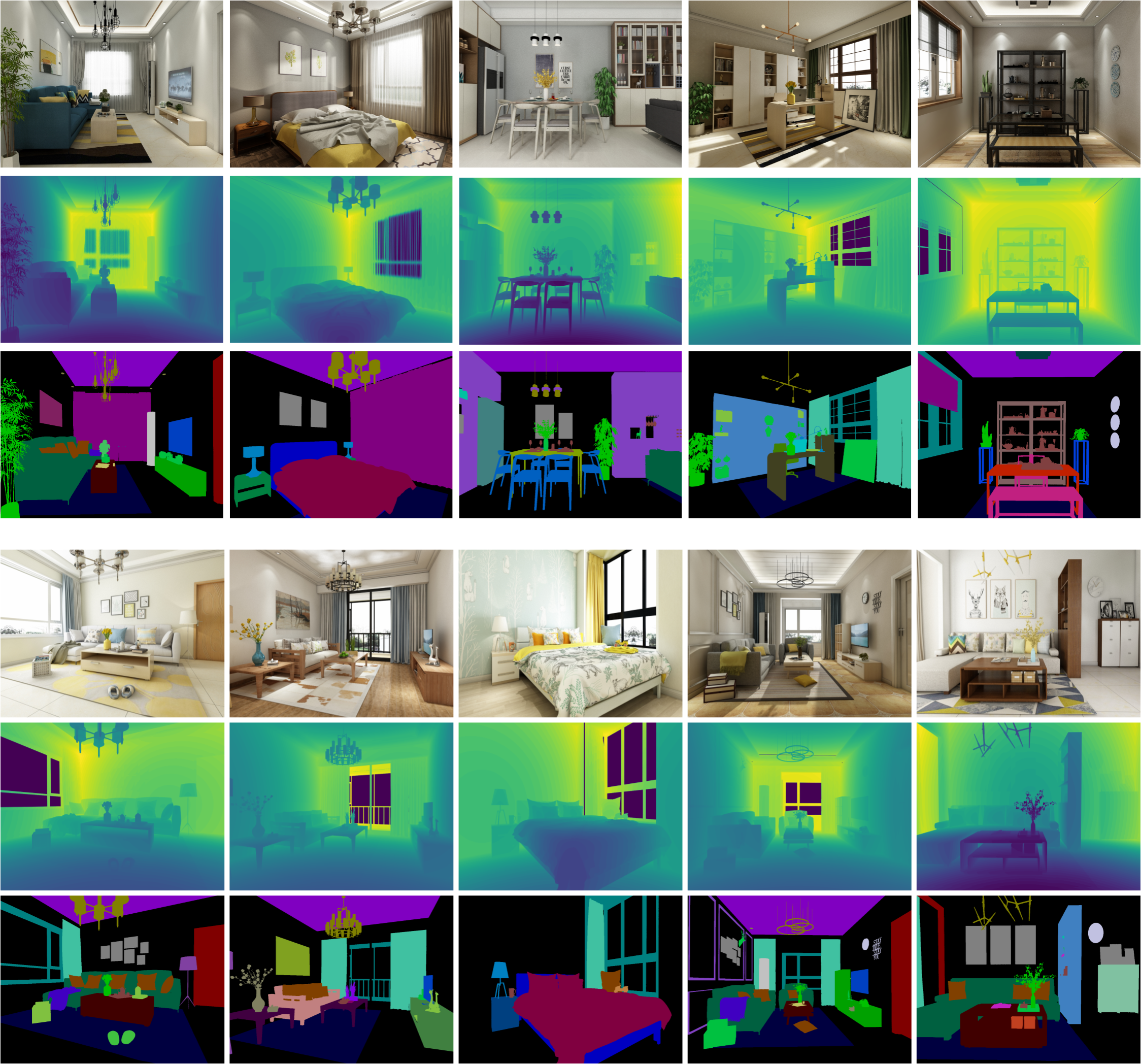

Figure 1 shows examples of different scenes in our dataset. Our dataset contains more complex indoor layouts, richer textures, colorful and realistic lighting, and higher-resolution images, making it more photorealistic and closer to real-world images.

Each sample of the dataset consists of four files. The JPEG image is the RGB image, and the image with the "zDepth" suffix is the depth map. The remaining image, with the "VRayObjectID" suffix, together with a TXT file encodes the semantic information of the scene: each RGB color in the "VRayObjectID" image corresponds to a material ID, which in turn corresponds to an object category.
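The exact layout of the TXT file is not documented here, so the sketch below assumes, hypothetically, that each line holds five integers `R G B material_id category_id`; adapt `parse_color_table` to the real format. It shows how a "VRayObjectID" image (as an H×W×3 array) could be turned into a per-pixel category map, with unknown colors set to an ignore label (255 is an arbitrary choice).

```python
import numpy as np

def parse_color_table(lines):
    """Parse lines of the HYPOTHETICAL form 'R G B material_id category_id'."""
    table = {}
    for line in lines:
        parts = line.split()
        if len(parts) < 5:
            continue  # skip blank or malformed lines
        r, g, b, _material_id, category_id = (int(p) for p in parts[:5])
        table[(r, g, b)] = category_id
    return table

def object_id_to_labels(id_image, color_table, ignore_label=255):
    """Map each RGB color in an HxWx3 object-ID image to a category label."""
    h, w, _ = id_image.shape
    labels = np.full((h, w), ignore_label, dtype=np.int32)
    for (r, g, b), category in color_table.items():
        mask = (id_image[..., 0] == r) & (id_image[..., 1] == g) & (id_image[..., 2] == b)
        labels[mask] = category
    return labels

# Loading a real sample might look like (requires Pillow):
#   id_image = np.array(PIL.Image.open(path).convert("RGB"))
```

Colors that appear in the image but not in the table keep the ignore label, which makes missing or mismatched entries easy to spot.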

We divide the dataset into two parts. The first part has been manually filtered to remove a small number of scenes with window-rendering problems (the window region is rendered as an outdoor view). The second part has not been manually processed and contains a wider variety of scenes. You can download the dataset from Google Drive.

Figure 2 shows the architecture of our proposed IterNet, which performs iterative joint optimization for depth estimation and semantic segmentation. More implementation details can be found in the paper.
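The paper is the reference for the actual IterNet design. Purely as a schematic of what iterative joint optimization of two tasks can look like, the loop below alternates two placeholder update functions, `depth_step` and `seg_step` (both hypothetical), each conditioned on the other task's latest estimate. It is not the IterNet network.

```python
def alternate_refine(rgb, depth_step, seg_step, init_depth, init_seg, num_iters=3):
    """Alternate depth and segmentation updates, each using the other's latest estimate."""
    depth, seg = init_depth, init_seg
    history = []
    for _ in range(num_iters):
        depth = depth_step(rgb, seg, depth)  # refine depth using current segmentation
        seg = seg_step(rgb, depth, seg)      # refine segmentation using the refined depth
        history.append((depth, seg))
    return depth, seg, history

# Toy usage with scalar stand-ins for the two networks:
#   alternate_refine(None, lambda rgb, s, d: d + 1, lambda rgb, d, s: s + d, 0, 0)
```

Keeping the per-iteration estimates in `history` makes it easy to inspect how each task's output changes as the other is refined.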

If our work is useful for your research, please consider citing the paper:

@article{Yang2020Learning,
title={Learning to Reconstruct and Understand Indoor Scenes from Sparse Views},
author={Yang, Jingyu and Xu, Ji and Li, Kun and Lai, Yu-Kun and Yue, Huanjing and Lu, Jianzhi and Wu, Hao and Liu, Yebin},
journal={IEEE Transactions on Image Processing},
year={2020},
volume={29},
number={1},
pages={5753--5766}
}

If you have any questions, please contact Prof. Kun Li at lik@tju.edu.cn.