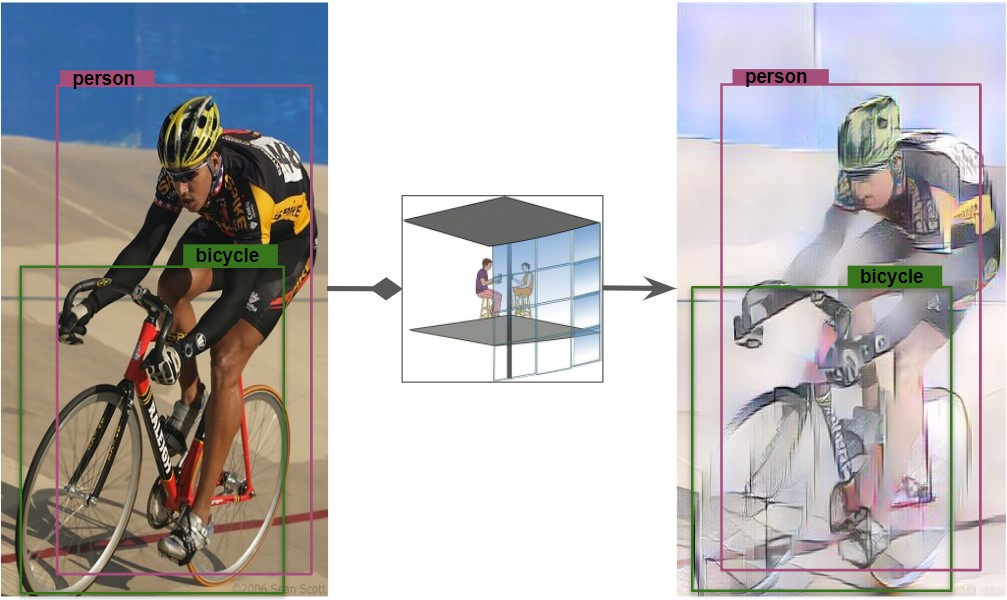

We present a framework for real-time Unsupervised Domain Adaptation (UDA) for object detection. We start from a fully supervised SSD (Single Shot MultiBox Detector) trained on a source domain (e.g., natural images) composed of instance-level annotated images, and progressively adapt the detector using unlabeled images from a target domain (e.g., artwork). Our framework performs fine-tuning without previously translated samples, achieving fast and versatile domain adaptation. We also improve the mean average precision (mAP) compared to other domain translation methods.

framework

Implementation

| Task | Choice | Implementation |
|---|---|---|
| Object detection (OD) | SSD | lufficc |
| Style transfer | AdaIN | irasin |
| Source domain | natural | PASCAL VOC |
| Target domain | artistic | Clipart1k |
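The table lists AdaIN (adaptive instance normalization) as the style-transfer component. As a reminder of what that operation computes, here is a minimal pure-Python sketch: each content channel is re-normalized to the mean and standard deviation of the matching style channel. This is not the irasin implementation. The function names are hypothetical, feature maps are represented as plain lists of flattened channels, and `eps` is added to the standard deviation for simplicity.

```python
from statistics import fmean, pstdev


def adain_channel(content, style, eps=1e-5):
    """Re-normalize one content channel to the mean/std of one style channel."""
    c_mean, c_std = fmean(content), pstdev(content)
    s_mean, s_std = fmean(style), pstdev(style)
    # AdaIN(x, y) = sigma(y) * (x - mu(x)) / sigma(x) + mu(y)
    return [s_std * (x - c_mean) / (c_std + eps) + s_mean for x in content]


def adain(content_feats, style_feats, eps=1e-5):
    """Apply AdaIN channel by channel.

    Each argument is a list of channels; each channel is a flat list of floats.
    (Hypothetical layout chosen for illustration only.)
    """
    if len(content_feats) != len(style_feats):
        raise ValueError("content and style must have the same number of channels")
    return [adain_channel(c, s, eps) for c, s in zip(content_feats, style_feats)]
```

After the call, every output channel keeps the spatial pattern of the content channel but carries the first- and second-order statistics of the style channel.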

- Python3

- PyTorch 1.0 or higher

- yacs

- Vizer

- GCC >= 4.9

- OpenCV

git clone https://github.com/lufficc/SSD.git

cd SSD

pip install -r requirements.txt

For the Pascal VOC source dataset and the Clipart1k target dataset, arrange the folders like this:

datasets
|__ VOC2007
    |_ JPEGImages
    |_ Annotations
    |_ ImageSets
    |_ SegmentationClass
|__ VOC2012
    |_ JPEGImages
    |_ Annotations
    |_ ImageSets
    |_ SegmentationClass
|__ ...
|
|__ clipart
    |_ JPEGImages
    |_ Annotations
    |_ ImageSets
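Before training, it can help to confirm that the folders above are in place. The following is a small sketch, not part of the repository: the helper name `missing_dirs` and the `EXPECTED_LAYOUT` mapping are hypothetical, and the root folder name `datasets` is taken from the tree above.

```python
from pathlib import Path

# Expected sub-folders per dataset, copied from the tree above.
EXPECTED_LAYOUT = {
    "VOC2007": ["JPEGImages", "Annotations", "ImageSets", "SegmentationClass"],
    "VOC2012": ["JPEGImages", "Annotations", "ImageSets", "SegmentationClass"],
    "clipart": ["JPEGImages", "Annotations", "ImageSets"],
}


def missing_dirs(root, layout=EXPECTED_LAYOUT):
    """Return the relative paths of expected folders that do not exist under root."""
    root = Path(root)
    missing = []
    for dataset, subdirs in layout.items():
        for sub in subdirs:
            if not (root / dataset / sub).is_dir():
                missing.append(f"{dataset}/{sub}")
    return missing


if __name__ == "__main__":
    # "datasets" is the root folder shown in the tree above.
    for path in missing_dirs("datasets"):
        print("missing:", path)
```

An empty result means every folder listed in the tree exists; it does not check the contents of those folders.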

See the vgg_ssd300_voc0712_variationVx examples.

Example code is available in this Colab project.

# for example, train SSD300:

python train.py --config-file configs/vgg_ssd300_voc0712.yaml

# for example, train SSD300 with 4 GPUs:

export NGPUS=4

python -m torch.distributed.launch --nproc_per_node=$NGPUS train.py --config-file configs/vgg_ssd300_voc0712.yaml SOLVER.WARMUP_FACTOR 0.03333 SOLVER.WARMUP_ITERS 1000

The provided configuration files assume training on a single GPU. When the number of GPUs changes, the hyper-parameters (lr, max_iter, ...) are changed as well, following this paper: Accurate, Large Minibatch SGD: Training ImageNet in 1 Hour.
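The paper cited above proposes the linear scaling rule: when the effective batch size grows by a factor k, multiply the learning rate by k. The sketch below shows one way single-GPU settings could be rescaled under that rule, assuming the per-GPU batch size stays fixed so that k equals the number of GPUs. The function `scale_for_gpus` is hypothetical, and dividing `max_iter` and the learning-rate steps by the GPU count is an assumption about how the total number of processed images is kept constant, not a statement of what the training script does.

```python
def scale_for_gpus(base_lr, max_iter, lr_steps, num_gpus):
    """Rescale single-GPU solver settings for num_gpus GPUs (linear scaling rule).

    Assumes a fixed per-GPU batch size, so the effective batch grows by num_gpus.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be >= 1")
    return {
        # Larger effective batch -> proportionally larger learning rate.
        "lr": base_lr * num_gpus,
        # Each iteration sees num_gpus times more images -> fewer iterations.
        "max_iter": max_iter // num_gpus,
        # Learning-rate decay milestones shrink by the same factor.
        "lr_steps": [step // num_gpus for step in lr_steps],
    }


# Illustrative single-GPU values (assumed here, not read from the config files).
print(scale_for_gpus(base_lr=1e-3, max_iter=120000, lr_steps=[80000, 100000], num_gpus=4))
```

The paper also recommends a gradual warmup for large batches, which is consistent with the `SOLVER.WARMUP_FACTOR` and `SOLVER.WARMUP_ITERS` overrides passed in the multi-GPU command.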

# for example, evaluate SSD300:

python test.py --config-file configs/vgg_ssd300_voc0712.yaml

# for example, evaluate SSD300 with 4 GPUs:

export NGPUS=4

python -m torch.distributed.launch --nproc_per_node=$NGPUS test.py --config-file configs/vgg_ssd300_voc0712.yaml

- The proposed framework improves the accuracy of the baseline FSD by approximately 10 to 12 percentage points in terms of mAP.

- Compared with the best-performing unsupervised domain mapping algorithms in cross-domain adaptive detection, our framework outperforms them by 4 to 5 percentage points.

- In addition to achieving the best mAP, we report a notable reduction in DT time.

The code is available at

![variation architecture](/Images/variation_architecture.jpg)