by Max (Letian) Fu, Gaurav Datta*, Huang Huang*, William Chung-Ho Panitch*, Jaimyn Drake*, Joseph Ortiz, Mustafa Mukadam, Mike Lambeta, Roberto Calandra, Ken Goldberg at UC Berkeley, Meta AI, TU Dresden, and CeTI (*equal contribution).

[Paper] | [Project Page] | [Checkpoints] | [Dataset] | [Citation]

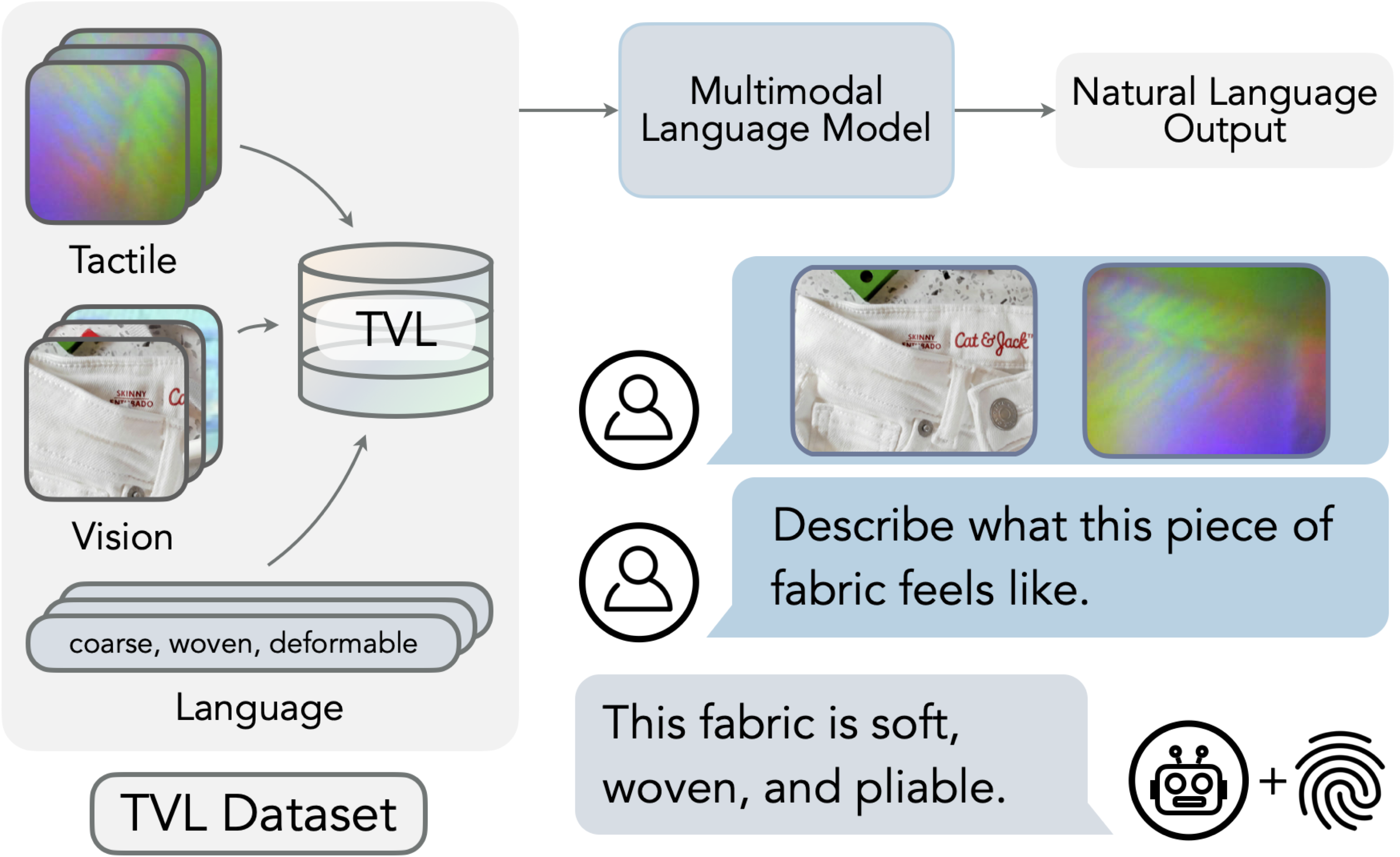

This repo contains the official implementation for A Touch, Vision, and Language Dataset for Multimodal Alignment. This code is based on the MAE, CrossMAE, and ImageBind-LLM repos.

Please install the dependencies in requirements.txt:

# Optionally create a conda environment

conda create -n tvl python=3.10 -y

conda activate tvl

conda install pytorch==2.1.2 cudatoolkit==11.8.0 -c pytorch -y

# Install dependencies

pip install packaging

pip install -r requirements.txt

pip install -e .

The dataset is hosted on HuggingFace. To use the dataset, first download it through the web interface or with git:

# install git-lfs

sudo apt install git-lfs

git lfs install

# clone the dataset

git clone git@hf.co:datasets/mlfu7/Touch-Vision-Language-Dataset

# or you can download the zip files manually from here: https://huggingface.co/datasets/mlfu7/Touch-Vision-Language-Dataset/tree/main

cd Touch-Vision-Language-Dataset

zip -s0 tvl_dataset_sharded.zip --out tvl_dataset.zip

unzip tvl_dataset.zip

Touch-Vision-Language (TVL) models fall into two groups: 1) tactile encoders that are aligned to the CLIP latent space and 2) TVL-LLaMA, a variant of ImageBind-LLM that is finetuned on the TVL dataset. The tactile encoders come in three sizes: ViT-Tiny, ViT-Small, and ViT-Base. As a result, we provide three TVL-LLaMA variants. The statistics presented here differ from those in the paper because the checkpoints were re-trained using this repository.

For zero-shot classification, we use OpenCLIP with the following configuration:

CLIP_VISION_MODEL = "ViT-L-14"

CLIP_PRETRAIN_DATA = "datacomp_xl_s13b_b90k"

The checkpoints for the tactile encoders are provided below:

| | ViT-Tiny | ViT-Small | ViT-Base |
|---|---|---|---|
| Tactile Encoder | download | download | download |
| Touch-Language Acc (@0.64) | 36.19% | 36.82% | 30.85% |
| Touch-Vision Acc | 78.11% | 77.49% | 81.22% |
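The OpenCLIP configuration given above can be turned into a loading call, and the idea of comparing tactile embeddings against CLIP embeddings can be illustrated with a small retrieval metric. This is a minimal sketch, not the repository's evaluation code: `load_openclip`, `l2_normalize`, and `top1_retrieval_accuracy` are hypothetical helper names, and the metric shown (top-1 cosine retrieval between paired embeddings) is an assumption about how such an accuracy could be computed. It does not reproduce the `@0.64` protocol or the numbers in the table.

```python
import math

CLIP_VISION_MODEL = "ViT-L-14"
CLIP_PRETRAIN_DATA = "datacomp_xl_s13b_b90k"


def load_openclip():
    """Load the OpenCLIP model named in the configuration above.

    Downloads the pretrained weights on first use, so it is not called here.
    """
    import open_clip  # imported lazily so the helpers below run without it

    model, _, preprocess = open_clip.create_model_and_transforms(
        CLIP_VISION_MODEL, pretrained=CLIP_PRETRAIN_DATA
    )
    tokenizer = open_clip.get_tokenizer(CLIP_VISION_MODEL)
    return model.eval(), preprocess, tokenizer


def l2_normalize(vec):
    """Scale a vector to unit length (assumes it is not all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def top1_retrieval_accuracy(queries, keys):
    """Fraction of queries whose most cosine-similar key has the same index.

    Hypothetical metric for illustration: queries[i] and keys[i] are assumed
    to be embeddings of the same sample in two modalities.
    """
    queries = [l2_normalize(q) for q in queries]
    keys = [l2_normalize(k) for k in keys]
    hits = 0
    for i, q in enumerate(queries):
        sims = [sum(a * b for a, b in zip(q, k)) for k in keys]
        best = max(range(len(sims)), key=sims.__getitem__)
        if best == i:
            hits += 1
    return hits / len(queries)
```

In practice the query embeddings would come from a tactile encoder checkpoint and the keys from the OpenCLIP image or text tower; the toy lists accepted here only stand in for those tensors.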

Please request access to the pre-trained LLaMA-2 from this form. In particular, we use llama-2-7b as the base model. The weights here contain the trained adapter, the tactile encoder, and the vision encoder for ease of loading.

The checkpoints for TVL-LLaMA are provided below:

| | ViT-Tiny | ViT-Small | ViT-Base |
|---|---|---|---|
| TVL-LLaMA | download | download | download |
| Reference TVL Benchmark Score (1-10) | 5.03 | 5.01 | 4.87 |
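Since each TVL-LLaMA checkpoint bundles the adapter, the tactile encoder, and the vision encoder, it can help to inspect what a downloaded file contains before loading it into a model. The sketch below groups parameter names by prefix. It rests on assumptions: the helper names are hypothetical, the checkpoint is assumed to be either a plain state dict or a dict with a `"model"` entry (the convention used by MAE-style repos), and the actual key names in the released files may differ.

```python
from collections import Counter


def summarize_state_dict_keys(keys, depth=1):
    """Count parameter names by their first `depth` dot-separated components."""
    return Counter(".".join(k.split(".")[:depth]) for k in keys)


def load_checkpoint_keys(path):
    """Return the parameter names stored in a checkpoint file.

    Assumption: the file is a state dict, or a dict holding one under "model".
    """
    import torch  # imported lazily; only needed when reading a real file

    ckpt = torch.load(path, map_location="cpu")
    state = ckpt.get("model", ckpt) if isinstance(ckpt, dict) else ckpt
    return list(state.keys())
```

For example, `summarize_state_dict_keys(load_checkpoint_keys("path/to/checkpoint.pth"))` would show how many tensors sit under each top-level module, where the path is a placeholder for a downloaded file.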

We provide the tactile encoder training script in tvl_enc and the TVL-LLaMA training script in tvl_llama. The TVL-Benchmark is described here.

This project is under the Apache 2.0 license. See LICENSE for details.

Please give us a star 🌟 on GitHub to support us!

Please cite our work if you find it inspiring or use our code in your own work:

@article{fu2024tvl,

title={A Touch, Vision, and Language Dataset for Multimodal Alignment},

author={Letian Fu and Gaurav Datta and Huang Huang and William Chung-Ho Panitch and Jaimyn Drake and Joseph Ortiz and Mustafa Mukadam and Mike Lambeta and Roberto Calandra and Ken Goldberg},

journal={arXiv preprint arXiv:2402.13232},

year={2024}

}