by Max (Letian) Fu*, Huang Huang*, Gaurav Datta*, Lawrence Yunliang Chen, William Chung-Ho Panitch, Fangchen Liu, Hui Li, and Ken Goldberg at UC Berkeley and Autodesk (*equal contribution).

[Paper] | [Project Page] | [Checkpoints] | [Dataset]

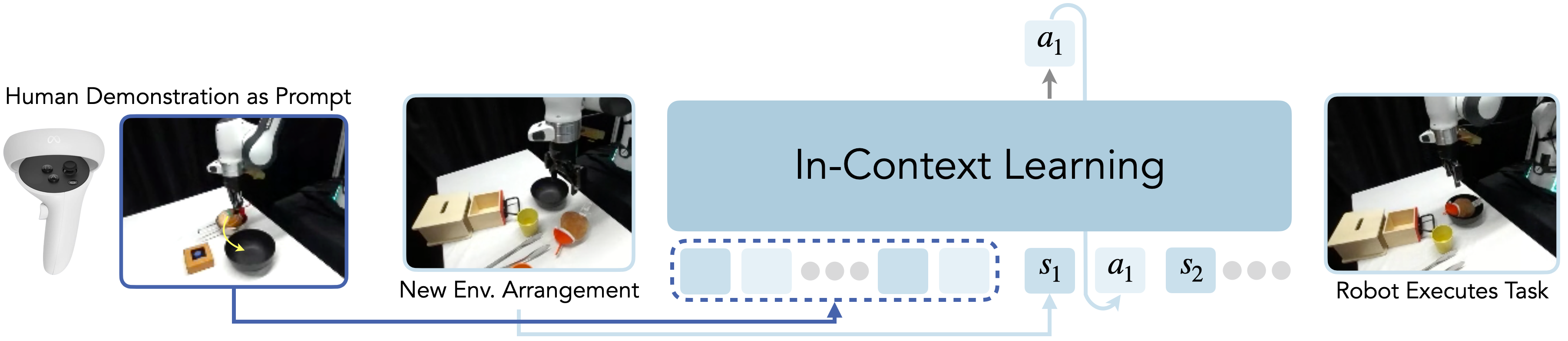

This repo contains the official implementation of In-Context Imitation Learning via Next-Token Prediction. We investigate how to extend the few-shot, in-context learning capability of next-token prediction models to real-robot imitation learning. Specifically, given a few teleoperated demonstrations of a task, we want the model to predict what to do in a new setting, without any additional finetuning on those demonstrations.
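To make the idea of prompting with demonstrations concrete, here is a toy sketch. It is not the repo's data pipeline or model: the `Step` and `Trajectory` types, the `build_context` helper, and the string placeholders for observations and actions are all hypothetical and chosen only for illustration. It shows demonstrations being flattened into one interleaved sequence that ends with the new observation, which is the position where a next-token model would be asked to predict an action.

```python
from typing import List, Tuple

# Hypothetical toy types: a step is (observation, action),
# and a trajectory is a list of steps.
Step = Tuple[str, str]
Trajectory = List[Step]


def build_context(demos: List[Trajectory], query_obs: str) -> List[Tuple[str, str]]:
    """Flatten demonstrations into one sequence and append the new observation."""
    tokens: List[Tuple[str, str]] = []
    for demo in demos:
        for obs, act in demo:
            tokens.append(("obs", obs))
            tokens.append(("act", act))
    # The sequence ends on an observation; the next token to predict is an action.
    tokens.append(("obs", query_obs))
    return tokens


demos = [[("o1", "a1"), ("o2", "a2")], [("o3", "a3")]]
context = build_context(demos, "o_new")
print(context[-1])  # the query observation sits at the end of the prompt
```

Because the demonstrations only appear in the input sequence, swapping in demonstrations of a different task changes the prompt, not the model weights.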

For further information, please contact Max Fu and Huang Huang, or open an issue on GitHub!

- Release DROID subset that is used for pre-training ICRT.

- [2024-08-23] Initial Release

# create conda env

conda create -n icrt python=3.10 -y

conda activate icrt

# install torch

conda install pytorch torchvision torchaudio pytorch-cuda=12.4 -c pytorch -c nvidia

conda install -c conda-forge ffmpeg

# download repo

git clone https://github.com/Max-Fu/icrt.git

cd icrt

pip install -e .

Please refer to DATASET.md for instructions on downloading the datasets and constructing your own dataset.
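After the installation steps above, a quick sanity check of the environment can save debugging time later. The following is a minimal sketch, not part of the repo: the `check_environment` helper is a hypothetical name, and the Python 3.10 threshold simply mirrors the `conda create` command. It uses only the standard library plus `torch.cuda.is_available()` when PyTorch is present.

```python
import importlib.util
import sys


def check_environment(min_python=(3, 10)):
    """Report whether Python, PyTorch, and CUDA look usable (hypothetical helper)."""
    report = {
        "python_ok": sys.version_info >= min_python,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": False,
    }
    if report["torch_installed"]:
        # Import lazily so the check still runs when PyTorch is missing.
        import torch

        report["cuda_available"] = bool(torch.cuda.is_available())
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

If `cuda_available` is reported as `False` on a GPU machine, the installed PyTorch build and the local NVIDIA driver are the first things to check.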

We host the checkpoints on 🤗 Hugging Face. Follow the instructions below to download them.

# install git-lfs

sudo apt install git-lfs

git lfs install

# cloning checkpoints

git clone git@hf.co:mlfu7/ICRT checkpoints

Please refer to TRAIN.md for instructions on training the model.
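If the clone above runs before `git lfs install` has taken effect, large files are checked out as small text pointer files instead of the real weights. The sketch below is an optional check under that assumption; the helper names `is_lfs_pointer` and `find_lfs_pointers` are hypothetical, and the only external fact relied on is that Git LFS pointer files begin with the line `version https://git-lfs.github.com/spec/v1`.

```python
from pathlib import Path

# Git LFS pointer files start with this line.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def is_lfs_pointer(path: Path) -> bool:
    """Return True if the file looks like an un-downloaded Git LFS pointer."""
    with open(path, "rb") as f:
        return f.read(len(LFS_POINTER_PREFIX)) == LFS_POINTER_PREFIX


def find_lfs_pointers(root: str) -> list:
    """List files under root (relative paths) that are still LFS pointers."""
    root_path = Path(root)
    pointers = []
    for p in root_path.rglob("*"):
        rel = p.relative_to(root_path)
        # Skip git's own metadata directory.
        if p.is_file() and ".git" not in rel.parts and is_lfs_pointer(p):
            pointers.append(str(rel))
    return sorted(pointers)


if __name__ == "__main__":
    # "checkpoints" is the target directory used in the clone command.
    if Path("checkpoints").is_dir():
        print(find_lfs_pointers("checkpoints"))
```

An empty list suggests the files were downloaded in full; any listed file can usually be fetched by running `git lfs pull` inside the `checkpoints` directory.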

Please see inference.ipynb for examples of running inference with ICRT.

This project is under the Apache 2.0 license. See LICENSE for details.

Please give us a star 🌟 on GitHub to support us!

If you find our work inspiring or use our code in your own work, please cite:

@article{fu2024icrt,
    title={In-Context Imitation Learning via Next-Token Prediction},
    author={Letian Fu and Huang Huang and Gaurav Datta and Lawrence Yunliang Chen and William Chung-Ho Panitch and Fangchen Liu and Hui Li and Ken Goldberg},
    journal={arXiv preprint arXiv:2408.15980},
    year={2024}
}