AlphaSQL provides Automatic Parallelization for sets of SQL files and integrated Type/Scheme Checker to eliminate syntax, type and schema errors from your datawarehouse.

You can quickly introduce AlphaSQL by CI Example.

- Docker Image

- Use our AlphaSQL on Docker ;)

- Fast Binaries

- For local use, binary installation is fast!

- Dependency Analysis

- Extract DAG from your SQL file set.

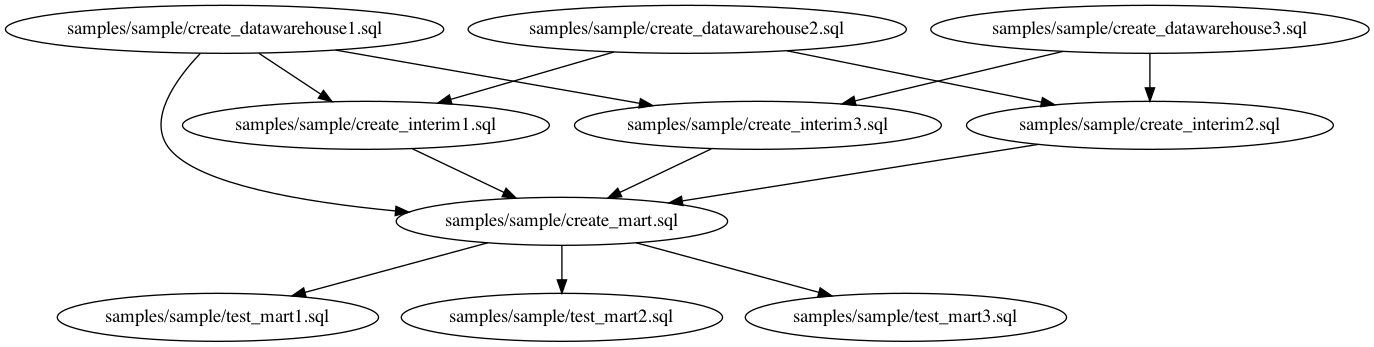

- Sample DAG output

- Side effect first

- With tables and functions

- Parallel Execution

- Automatically parallelize your SQL file set.

- Integrate with Airflow.

- Schema Checker

- Eliminate syntax, type and schema errors from your datawarehouse.

- Schema specification by JSON

- Input your lake schema in JSON.

- CI Example

- Use our AlphaSQL to continuously check your datawarehouse on BigQuery using CloudBuild.

- Supports

_TABLE_SUFFIX.

You can run commands below with docker

docker run --rm -v `pwd`:/home matts966/alphasql:latest [command]like

docker run --rm -v `pwd`:/home matts966/alphasql:latest alphacheck ./samples/sample/dag.dotCommands are installed in the PATH of the image.

# To install for MacOSX

temp=$(mktemp -d)

wget -P $temp https://github.com/Matts966/alphasql/releases/latest/download/alphasql_darwin_x86_64.tar.gz \

&& sudo tar -zxvf $temp/alphasql_darwin_x86_64.tar.gz -C /usr/local/bin# To install for Linux

temp=$(mktemp -d)

wget -P $temp https://github.com/Matts966/alphasql/releases/latest/download/alphasql_linux_x86_64.tar.gz \

&& sudo tar -zxvf $temp/alphasql_linux_x86_64.tar.gz -C /usr/local/bin --strip=1alphadag finds dependencies between table references and create table statements, function calls and create function statements.

# To extract DAG from your SQL set

$ alphadag --output_path ./samples/sample/dag.dot ./samples/sample/

# Or you can check the output in stdout by

$ alphadag [paths]

# with graphviz

$ dot -Tpng samples/sample/dag.dot -o samples/sample/dag.pngNote that sometimes the output has cycle, and refactoring SQL files or manual editing of the dot file is needed (see this issue).

If there are cycles, warning is emitted, type checker reports error, and bq_jobrunner raise error before execution. You can see the example in ./samples/sample-cycle .

If you want to serially execute some statements, you can write SQL script that contains multiple statements. See samples/sample/create_interim1.sql as an example.

The image below is extracted from SQL set in ./samples/sample . You can write tests for created tables and run them parallely only by separating SQL file.

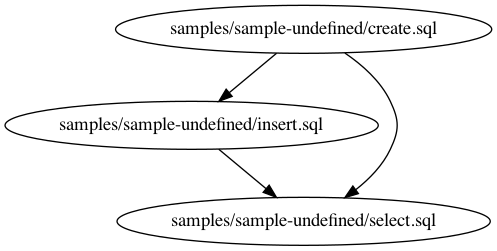

You can resolve side effects such as INSERT and UPDATE statements before simple references by the --side_effect_first option.

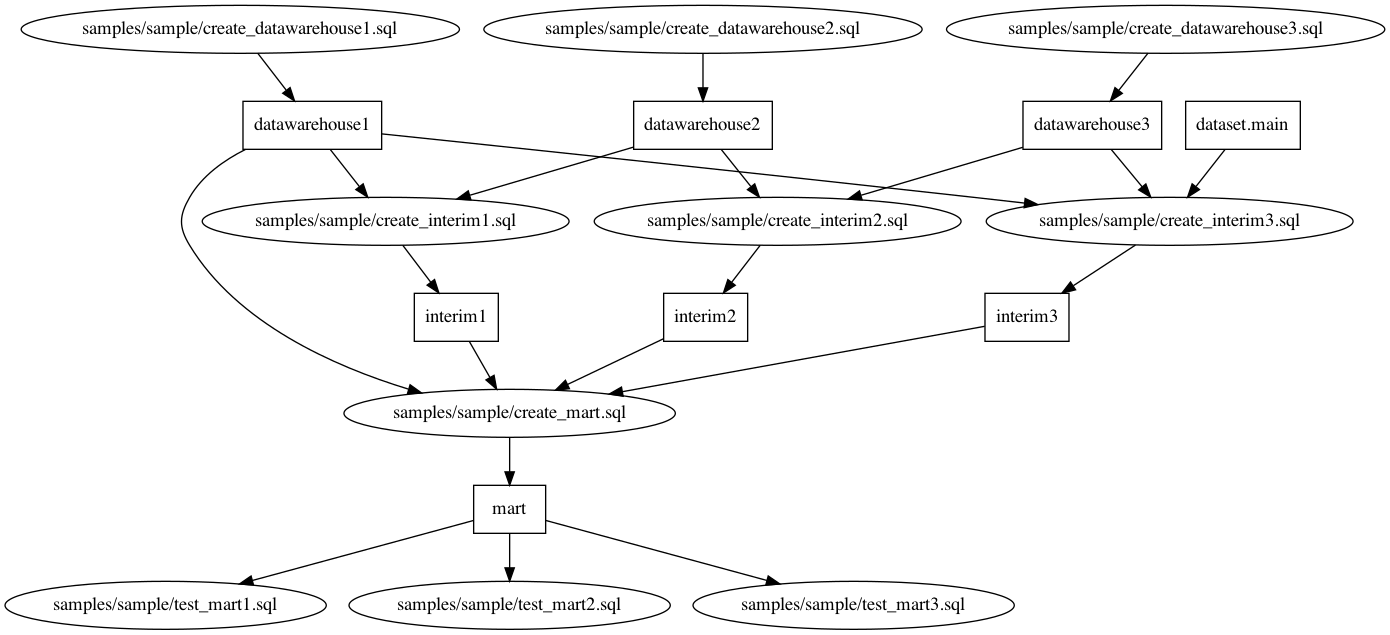

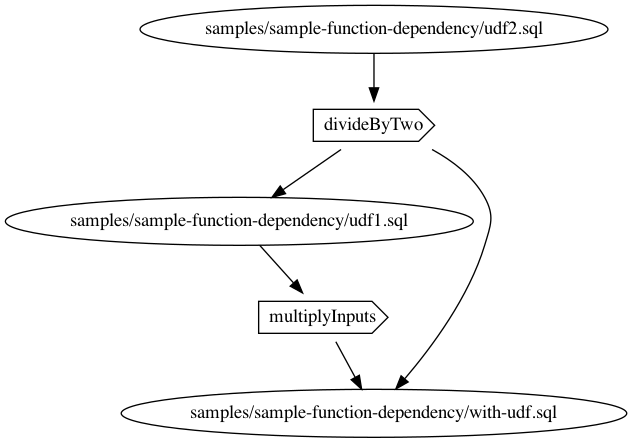

You can extract dependencies containing tables and functions by --with_tables and --with_functions options.

For BigQuery, the output DAG can be run parallely using

bq-airflow-dag-generator as Python package can generate Airflow DAG by simple Python script.

dagpath = "/path/to/dag.dot"

dag = generate_airflow_dag_by_dot_path(dagpath)See usage on README for more details.

from bq_jobrunner.bq_jobrunner import BQJobrunner

FILE_PATH = "./path/to/dag.dot"

PROJECT_ID = "your-project-id"

REGION = "asia-northeast1" # your region

runner = BQJobrunner(

PROJECT_ID,

location=REGION,

)

runner.compose_query_by_dot_path(FILE_PATH)

runner.execute()Note that you should run job_runner in the same path as in extracting DAG.

Note that you should run type_checker in the same path as in extracting DAG.

# to check type and schema of SQL set

$ alphacheck ./samples/sample.dot

Analyzing "./samples/sample/create_datawarehouse3.sql"

DDL analyzed, adding table to catalog...

SUCCESS: analysis finished!

Analyzing "./samples/sample/create_datawarehouse2.sql"

DDL analyzed, adding table to catalog...

SUCCESS: analysis finished!

Analyzing "./samples/sample/create_interim2.sql"

DDL analyzed, adding table to catalog...

SUCCESS: analysis finished!

Analyzing "./samples/sample/update_interim2.sql"

SUCCESS: analysis finished!

Analyzing "./samples/sample/create_datawarehouse1.sql"

DDL analyzed, adding table to catalog...

SUCCESS: analysis finished!

Analyzing "./samples/sample/create_interim3.sql"

DDL analyzed, adding table to catalog...

SUCCESS: analysis finished!

Analyzing "./samples/sample/create_interim1.sql"

DDL analyzed, adding table to catalog...

SUCCESS: analysis finished!

Analyzing "./samples/sample/update_interium1.sql"

SUCCESS: analysis finished!

Analyzing "./samples/sample/insert_into_interim1.sql"

SUCCESS: analysis finished!

Analyzing "./samples/sample/create_mart.sql"

DDL analyzed, adding table to catalog...

SUCCESS: analysis finished!

Analyzing "./samples/sample/test_mart1.sql"

SUCCESS: analysis finished!

Analyzing "./samples/sample/test_mart2.sql"

SUCCESS: analysis finished!

Analyzing "./samples/sample/test_mart3.sql"

SUCCESS: analysis finished!

Successfully finished type check!If you change column x's type in ./samples/sample/create_datawarehouse3.sql to STRING, type checker reports error.

$ alphacheck ./samples/sample/dag.dot

Analyzing "./samples/sample/create_datawarehouse3.sql"

DDL analyzed, adding table to catalog...

SUCCESS: analysis finished!

Analyzing "./samples/sample/create_datawarehouse2.sql"

DDL analyzed, adding table to catalog...

SUCCESS: analysis finished!

Analyzing "./samples/sample/create_interim2.sql"

ERROR: INVALID_ARGUMENT: Column 1 in UNION ALL has incompatible types: INT64, STRING [at ./samples/sample/create_interim2.sql:7:1]

catalog:

datawarehouse3

datawarehouse2You can specify external schemata (not created by queries in SQL set) by passing JSON schema path.

# with external schema

$ alphacheck --json_schema_path ./samples/sample-schema.json ./samples/sample/dag.dotYou can extract required external tables by

$ alphadag --external_required_tables_output_path ./required_tables.txt {./path/to/sqls}

# and get schemata using bq command

$ cat ./required_tables.txt | while read line

do

bq show \

--schema \

--format=prettyjson \

$line

doneJSON schema file should have only a top level map element keyed by string element, which specifies table name, and each value specifies schema for its key. name and type elements in the schema elements are recognized like the official API.

{

"tablename1": [

{"mode": "NULLABLE", "name": "column1", "type": "STRING", "description": null}

],

"tablename2": [

{"mode": "NULLABLE", "name": "column1", "type": "STRING", "description": null},

{"mode": "NULLABLE", "name": "column2", "type": "INT64", "description": null}

]

}The pipeline level type check above is also useful in CI context. The sample in ./samples/sample-ci contains an example for extracting DAG, retrieving schema and checking schema and type of SQL set quering bigquery public dataset. You can introduce the CI to your environment only by copying cloudbuild_ci_sample.yaml and python_entrypoint.py to your project.

You can try the example CI with gcloud command by

(cd ./samples/sample-ci && gcloud builds submit --config=cloudbuild_ci_sample.yaml .)

This example

- Supports

_TABLE_SUFFIXfeature! - Does not execute actual BigQuery and very cheap!

The development of this project is sponsored by Japan Data Science Consortium and Cybozu Lab.