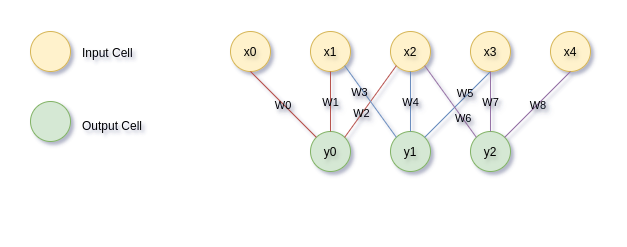

A parallel implementation of a particular kind of multi-layer neural network in which each output neuron is connected to a (small) subset of the input neurons. The network is composed of K layers and is shown in the following image:

The general formulation of the network is the following:

where

The network computes only the forward pass without backpropagation.
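As a rough illustration of such a forward pass, here is a minimal sketch in C with OpenMP. Several things in it are assumptions, not taken from the original source: each output neuron reads a window of `R` consecutive inputs (`R = 3` here), each layer has a single bias, weights are stored row by row, and the names `layer_forward` and `network_forward` are hypothetical. The activation is passed as a function pointer because the one actually used is not stated here; `relu` is only a stand-in.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed window size: each output neuron reads R consecutive inputs. */
#define R 3

/* Stand-in activation (ReLU); the activation actually used is an unknown. */
static float relu(float x) { return x > 0.0f ? x : 0.0f; }

/* One sparsely connected layer:
   y[i] = f(b + sum over r < R of W[i*R + r] * x[i + r]).
   x has n elements, y has n - (R - 1), W has (n - (R - 1)) * R. */
static void layer_forward(const float *x, const float *W, float b,
                          float *y, int n, float (*f)(float))
{
    assert(n >= R);
    const int m = n - (R - 1);
    /* Output neurons are independent, so the loop can be split across threads. */
#pragma omp parallel for
    for (int i = 0; i < m; i++) {
        float sum = b;
        for (int r = 0; r < R; r++) {
            sum += W[i * R + r] * x[i + r];
        }
        y[i] = f(sum);
    }
}

/* Applies `steps` layers one after another; layers stay sequential because
   each one consumes the previous layer's output.
   buf_a holds the input on entry; buf_a and buf_b must each hold n floats.
   W stores every layer's weights back to back, b holds one bias per layer.
   Returns the buffer that contains the final n - steps*(R - 1) outputs. */
static float *network_forward(float *buf_a, float *buf_b, const float *W,
                              const float *b, int n, int steps,
                              float (*f)(float))
{
    float *in = buf_a, *out = buf_b;
    for (int t = 0; t < steps; t++) {
        layer_forward(in, W, b[t], out, n, f);
        W += (size_t)(n - (R - 1)) * R; /* skip the weights just used */
        n -= R - 1;                     /* the next layer is smaller */
        float *tmp = in; in = out; out = tmp;
    }
    return in;
}
```

Only the loop over output neurons is parallelized. The two buffers are swapped after every layer so that no per-layer allocation is needed.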

The OpenMP version is compiled with the flags -std=c99 -Wall -Wpedantic -fopenmp and linked against the math library with -lm.

    gcc -std=c99 -Wall -Wpedantic -fopenmp multi-layer-nn.c -o multi-layer-nn -lm

    nvcc cuda-multi-layer-nn.cu -o cuda-multi-layer-nn

Default parameters are

    OMP_NUM_THREADS=<NUMBER OF THREADS> ./multi-layer-nn [N] [K]

    ./cuda-multi-layer-nn [N] [K]

Strong scaling is the ability of a parallel system to solve a fixed-size problem in less time as more processors are added to the system.

The strong-scaling.sh script runs the program with a fixed-size problem and a varying number of threads in OpenMP.
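The usual quantities derived from a strong-scaling run can be written as follows. The symbols are introduced here for illustration: $T(p)$ is the wall clock time measured with $p$ threads on the same fixed-size problem, $S(p)$ is the speedup and $E(p)$ the efficiency.

```latex
S(p) = \frac{T(1)}{T(p)},
\qquad
E(p) = \frac{S(p)}{p} = \frac{T(1)}{p \, T(p)}
```

Ideal strong scaling corresponds to $S(p) = p$, that is $E(p) = 1$.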

For further analysis, the output was redirected to a CSV file and plotted with the Python libraries pandas and matplotlib.

    bash strong-scaling.sh > csv/strong-scaling.csv

Weak scaling is the ability of a parallel system to solve larger problems in the same amount of time as the number of processors increases.

The weak-scaling.sh script runs the program with a problem size that grows with the number of OpenMP threads.
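One way to choose the problem size for such a run is sketched below. It assumes that, for a fixed number of layers $K$, the total work $W$ grows roughly linearly with the input size $N$ (reasonable when $N$ is much larger than $K$). The exact rule used by the script may differ. Here $N_1$ is the input size used with one thread, $N_p$ the size used with $p$ threads, and $T(p)$ the corresponding wall clock time.

```latex
W(N, K) \propto N \cdot K
\quad\Longrightarrow\quad
N_p = p \cdot N_1,
\qquad
E_{\mathrm{weak}}(p) = \frac{T(1)}{T(p)}
```

With this choice the work per thread stays constant, so ideal weak scaling gives $E_{\mathrm{weak}}(p) = 1$.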

The same output redirection and plotting process was used for the weak scaling analysis.

    bash weak-scaling.sh > csv/weak-scaling.csv

The cuda-perf.sh script runs the CUDA program on problems of varying size and reports its execution time and throughput, both with and without shared memory.
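A throughput figure of this kind could be computed as in the following sketch. It rests on assumptions that are not confirmed by the source: throughput is counted as neuron outputs per second, each layer is `r - 1` elements shorter than its input, and the helper names `total_outputs` and `throughput` are hypothetical.

```c
#include <assert.h>

/* Number of neuron outputs produced when an input of n values goes through
   `steps` layers, assuming each layer shrinks by (r - 1) elements. */
static long long total_outputs(long long n, int steps, int r)
{
    long long total = 0;
    for (int t = 1; t <= steps; t++) {
        long long m = n - (long long)t * (r - 1);
        assert(m > 0); /* the input must be large enough for every layer */
        total += m;
    }
    return total;
}

/* Throughput in outputs per second for a measured elapsed time. */
static double throughput(long long outputs, double seconds)
{
    assert(seconds > 0.0);
    return (double)outputs / seconds;
}
```

Counting outputs and not raw bytes or floating-point operations keeps the figure comparable between the OpenMP and CUDA versions, since both compute the same set of neurons.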

    bash cuda-perf.sh > csv/cuda-perf.csv

The following image shows the wall clock time of the CUDA program vs the wall clock time of the OpenMP program.

The following image shows the throughput of the CUDA program vs the throughput of the OpenMP program.

The following image shows the speedup of the CUDA program vs the speedup of the OpenMP program.

The following image shows the efficiency of the CUDA program vs the efficiency of the OpenMP program.