Please fill in your name and student ID:

| First name: | Last name: | Student ID: |
|---|---|---|

For a more readable version of this document, see Readme.pdf or Readme.html.

Deadline: March 15th 2019, 1pm. Only commits pushed to GitHub before this date and time will be considered for grading.

Issues and questions: Please use the issue tracker of the main repository if you have any general issues or questions about this assignment. You can also use the issue tracker of your personal repository or email me.

Note: The bug in GitHub Classroom has been fixed. This repository should be automatically imported into your private one.

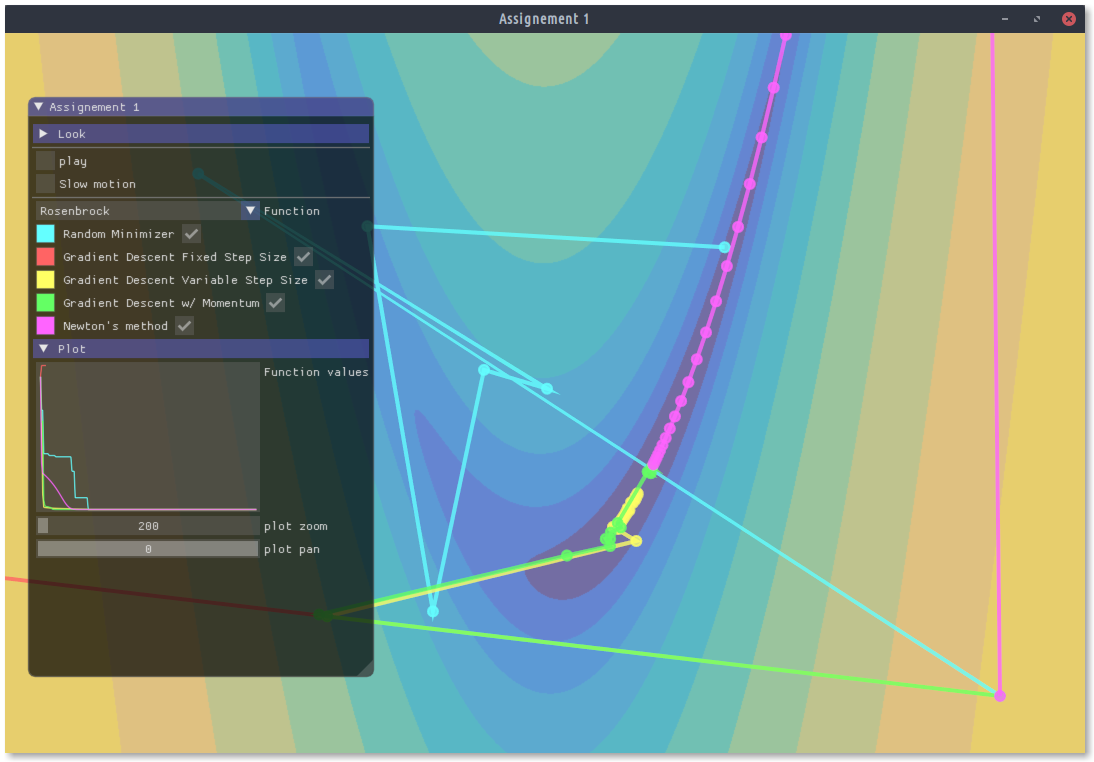

In this first assignment, you will implement and compare various strategies to solve the unconstrained minimization problem

$$ x = \text{argmin}_{\tilde{x}} f(\tilde{x}). $$

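A naive way to find a minimum of $f$ is to evaluate it at random points and keep the point with the lowest value.

Task: Implement an optimization routine that randomly samples a search domain and returns the best sample found.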

Relevant code:

In the file src/optLib/RandomMinimzier.h, implement the method minimize(...) of class RandomMinimizer.

The members `searchDomainMin`/`Max` define the minimum and maximum values of the search domain: `searchDomainMin[i]` is the lower bound of the search domain for dimension $i$. `xBest` and `fBest` should store the best candidate and its function value.

The method runs in a `for` loop. To make it comparable to the other optimization techniques, `iterations` has been set to 1.

Hint: `std::uniform_real_distribution` can be used to generate uniformly distributed random numbers.
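A minimal standalone sketch of random search, written in plain C++ without Eigen so that it compiles on its own. The function name `randomSearch`, the `std::function` objective, the `std::vector` bounds and the fixed default seed are assumptions made for illustration; the assignment's `RandomMinimizer::minimize(...)` has its own signature and works with the members `searchDomainMin/Max`, `xBest` and `fBest` instead.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <limits>
#include <random>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical standalone random search: draw `samples` points uniformly from the
// box [lo, hi] and keep the one with the lowest objective value.
// Returns the best point found; its objective value is written to fBest.
Vec randomSearch(const std::function<double(const Vec&)>& f,
                 const Vec& lo, const Vec& hi, int samples,
                 double& fBest, unsigned seed = 42) {
    assert(lo.size() == hi.size());
    std::mt19937 rng(seed);  // one engine reused for all draws
    Vec x(lo.size()), xBest(lo.size());
    fBest = std::numeric_limits<double>::infinity();
    for (int s = 0; s < samples; ++s) {
        // Sample each coordinate independently within its own bounds.
        for (std::size_t i = 0; i < lo.size(); ++i) {
            std::uniform_real_distribution<double> dist(lo[i], hi[i]);
            x[i] = dist(rng);
        }
        const double fx = f(x);
        if (fx < fBest) {  // keep the best candidate seen so far
            fBest = fx;
            xBest = x;
        }
    }
    return xBest;
}
```

Creating one `std::uniform_real_distribution` per dimension keeps every coordinate inside its own bounds, while reusing a single `std::mt19937` engine avoids re-seeding on every sample.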

Task: Implement Gradient Descent with fixed step size, variable step size and with Momentum.

Relevant code:

The file src/optLib/GradientDescentMinimizer.h contains three classes:

- `GradientDescentFixedStep`: implement the method `step(...)`. It shall update `x` to take a step of size `stepSize` along the search direction `dx`. The search direction was previously computed in the method `computeSearchDirection(...)`, which calls `obj->addGradient(...)` to compute $\nabla f(x)$.
- `GradientDescentVariableStep`: implement the method `step(...)`. Instead of a fixed step size, use a line search to iteratively find a suitable step size. `maxLineSearchIterations` defines the maximum number of line-search iterations to perform.
- `GradientDescentMomentum`: implement the method `computeSearchDirection(...)` to compute the search direction according to Gradient Descent with Momentum.
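The three update rules can be illustrated with a standalone sketch in plain C++ (no Eigen). It is not the code of `GradientDescentMinimizer.h`: the names `stepAlong`, `squaredNorm`, `gdFixed`, `gdLineSearch` and `gdMomentum` are hypothetical, the line search is a backtracking variant that halves the step until the Armijo sufficient-decrease condition holds (the constant $c = 0.25$ is an arbitrary choice), and momentum is written in the heavy-ball form $v \leftarrow \beta v + \nabla f(x)$, $x \leftarrow x - \alpha v$. The course material may define the line search and momentum slightly differently.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

using Vec = std::vector<double>;
using Fn = std::function<double(const Vec&)>;
using Grad = std::function<Vec(const Vec&)>;

// Returns x - alpha * d, i.e. a step of size alpha against the direction d.
Vec stepAlong(const Vec& x, double alpha, const Vec& d) {
    assert(x.size() == d.size());
    Vec r(x);
    for (std::size_t i = 0; i < r.size(); ++i) r[i] -= alpha * d[i];
    return r;
}

double squaredNorm(const Vec& v) {
    double s = 0.0;
    for (double vi : v) s += vi * vi;
    return s;
}

// Fixed step: x <- x - stepSize * grad f(x).
Vec gdFixed(const Grad& grad, Vec x, double stepSize, int iters) {
    for (int k = 0; k < iters; ++k) x = stepAlong(x, stepSize, grad(x));
    return x;
}

// Variable step (backtracking): start at stepSize and halve the step, at most
// maxLineSearchIterations times, until f(x - a*g) <= f(x) - c*a*|g|^2 holds.
Vec gdLineSearch(const Fn& f, const Grad& grad, Vec x, double stepSize,
                 int maxLineSearchIterations, int iters) {
    const double c = 0.25;  // Armijo constant, chosen arbitrarily
    for (int k = 0; k < iters; ++k) {
        const Vec g = grad(x);
        const double fx = f(x), gg = squaredNorm(g);
        double a = stepSize;
        Vec xNew = stepAlong(x, a, g);
        for (int j = 0; j < maxLineSearchIterations && f(xNew) > fx - c * a * gg; ++j) {
            a *= 0.5;
            xNew = stepAlong(x, a, g);
        }
        if (f(xNew) <= fx - c * a * gg) x = xNew;  // accept only a sufficient decrease
    }
    return x;
}

// Momentum (heavy-ball form): v <- beta * v + grad f(x), then x <- x - stepSize * v.
Vec gdMomentum(const Grad& grad, Vec x, double stepSize, double beta, int iters) {
    Vec v(x.size(), 0.0);
    for (int k = 0; k < iters; ++k) {
        const Vec g = grad(x);
        for (std::size_t i = 0; i < v.size(); ++i) v[i] = beta * v[i] + g[i];
        x = stepAlong(x, stepSize, v);
    }
    return x;
}
```

For example, on $f(x) = (x_1 - 1)^2 + 10(x_2 + 2)^2$ one would pass `f` and its gradient $\nabla f(x) = (2(x_1 - 1),\ 20(x_2 + 2))$ as lambdas and compare how many iterations each variant needs.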

Hint: Before calling `obj->addGradientTo(grad, x)`, make sure `grad` is set to zero (`grad.setZero()`). Alternatively, you can simply use `obj->getGradient(x)`, which returns the gradient.
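To make the hint concrete, here is a tiny standalone sketch of an "add-to" gradient convention for $f(x) = \sum_i x_i^2$. The free functions `addGradientTo` and `getGradient` below are hypothetical stand-ins that only mimic the names of the `ObjectiveFunction` methods; they are not the assignment's code and use `std::vector` instead of Eigen types.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical "add-to" gradient of f(x) = sum_i x_i^2: the gradient 2x is
// ADDED to whatever grad already contains, it does not overwrite it.
void addGradientTo(Vec& grad, const Vec& x) {
    assert(grad.size() == x.size() && "grad must have the same size as x");
    for (std::size_t i = 0; i < x.size(); ++i) grad[i] += 2.0 * x[i];
}

// Safe wrapper in the spirit of getGradient(x): start from zero, then accumulate.
Vec getGradient(const Vec& x) {
    Vec grad(x.size(), 0.0);  // plays the role of grad.setZero()
    addGradientTo(grad, x);
    return grad;
}
```

Calling `addGradientTo` on a vector that still holds an old gradient would therefore return the sum of both, which is exactly what `grad.setZero()` prevents.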

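Task: Implement Newton's method with global Hessian regularization, where we take a step $\Delta x = -\left(\nabla^2 f(x) + r I\right)^{-1} \nabla f(x)$ with a regularizer $r \ge 0$ and the identity matrix $I$.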

Relevant code:

In the file `src/optLib/NewtonFunctionMinimizer.h`, implement the method `computeSearchDirection(...)` to compute the search direction according to Newton's method. The `ObjectiveFunction` has a method `getHessian(...)` that computes the Hessian. Use the member `reg` for the value of the Hessian regularizer.
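A minimal 2D sketch of a globally regularized Newton step, assuming the step is obtained from $\left(\nabla^2 f(x) + r I\right)\Delta x = -\nabla f(x)$ with $r$ given by `reg`. To stay self-contained it solves the $2 \times 2$ system with Cramer's rule instead of an Eigen solver; `newtonStep`, `newtonMinimize`, `Vec2` and `Mat2` are hypothetical names, not part of `NewtonFunctionMinimizer.h`, and the sign convention of the search direction in the assignment's code may differ.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <functional>

using Vec2 = std::array<double, 2>;
using Mat2 = std::array<std::array<double, 2>, 2>;

// One regularized Newton step: solve (H + reg * I) dx = -g for the 2x2 case
// with Cramer's rule (a stand-in for a proper linear solver), then return x + dx.
Vec2 newtonStep(const Vec2& x, const Vec2& g, const Mat2& H, double reg) {
    const double a = H[0][0] + reg, b = H[0][1];
    const double c = H[1][0], d = H[1][1] + reg;
    const double det = a * d - b * c;
    assert(std::abs(det) > 1e-12 && "regularized Hessian is (nearly) singular");
    const Vec2 dx = {(-g[0] * d + b * g[1]) / det,
                     (-a * g[1] + c * g[0]) / det};
    return {x[0] + dx[0], x[1] + dx[1]};
}

// Repeats the regularized Newton step a fixed number of times.
Vec2 newtonMinimize(const std::function<Vec2(const Vec2&)>& grad,
                    const std::function<Mat2(const Vec2&)>& hess,
                    Vec2 x, double reg, int iters) {
    for (int k = 0; k < iters; ++k) x = newtonStep(x, grad(x), hess(x), reg);
    return x;
}
```

Setting `reg` to 0 gives the plain Newton step; the `assert` guards against a singular regularized Hessian.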

Hint: The Eigen library provides various solvers for a linear system $Ax = b$, for example:

```cpp
// Sparse LDL^T factorization of the symmetric matrix A (only its lower triangle is used).
Eigen::SimplicialLDLT<Eigen::SparseMatrix<double>, Eigen::Lower> solver;
solver.compute(A);
x = solver.solve(b);
```

Now that you have implemented different optimization methods, let's compare and try them out on different functions. Give an answer to the following questions. Please write your answers below the questions, and don't forget to commit and push!

- Let's look at Gradient Descent with fixed step size and the quadratic function $f(x) = x^T a x + b^T x + c$. What is the maximum step size such that Gradient Descent with fixed step size still converges and finds the minimum? Can you give an explanation for this particular value of the step size? What happens when we change $a$, $b$ or $c$?

  your answer

- Let's look at Gradient Descent with variable step size, Gradient Descent with Momentum and the Rosenbrock function. Which method performs better? Why?

  your answer

- Let's look at Newton's method and the quadratic function. What is the best (in terms of convergence) choice for the Hessian regularizer? How many iterations does it take to converge and why does it take exactly that many iterations?

  your answer

- Let's look at Newton's method and Gradient Descent with fixed step size. Set the step size of Gradient Descent to $0.001$. What value for the (approximate) Hessian regularizer of Newton's method do you choose to make both methods converge almost identically? (They will not converge exactly the same way.) Why is this the case?

  your answer

- Finally, let's look at Newton's method and the sine function. The sine function has many local minima. Explain the effect the choice of the Hessian regularizer has on the local minimum Newton's method will converge to.

  your answer

Make sure you install the following:

- Git (https://git-scm.com)
  - Windows: download installer from website
  - Linux: e.g. `sudo apt install git`
  - MacOS: e.g. `brew install git`
- CMake (https://cmake.org/)
  - Windows: download installer from website
  - Linux: e.g. `sudo apt install cmake`
  - MacOS: e.g. `brew install cmake`

On Windows, you can use Git Bash to perform the steps mentioned below.

- Clone this repository and load submodules: `git clone --recurse-submodules YOUR_GIT_URL`
- Make a build folder and run CMake:
  - `cd comp-fab-a0-XXX`
  - `mkdir build && cd build`
  - `cmake ..`
- Compile the code and run the executable:
  - for MacOS and Linux: `make`, then `./src/app/app`
  - for Windows:
    - open `assignement0.sln` in Visual Studio
    - in the Solution Explorer, right-click the target "app" and select "Set as Startup Project"
    - hit F5!


- If you are new to git, there are many tutorials online, e.g. http://rogerdudler.github.io/git-guide/.
- You will not have to edit any CMake file, so no real understanding of CMake is required. You might, however, want to generate build files for your favorite editor/IDE: https://cmake.org/cmake/help/latest/manual/cmake-generators.7.html