# Semantic-based Retriever for Security Patch Tracing

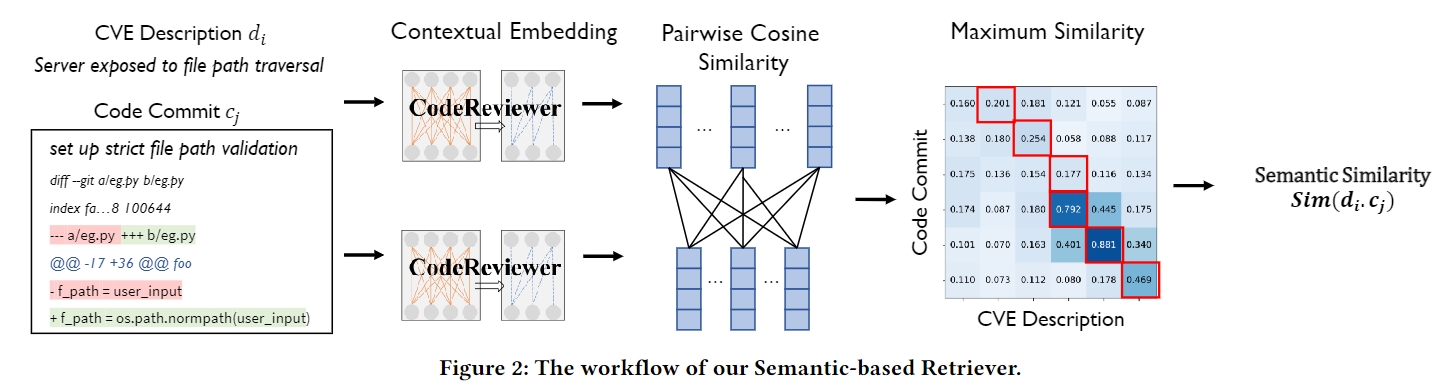

PatchFinder's Semantic-based Retriever links CVE descriptions to their corresponding patches in code repositories. It is a key component of the PatchFinder tool and improves the accuracy and efficiency of security patch tracing in open-source software.

- Semantic Analysis: Utilizes context embedding to understand and match the semantics between CVE descriptions and code changes.

- Integration with CodeReviewer: Employs a pretrained CodeReviewer model to deeply analyze code semantics, ensuring precise patch identification.

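For illustration, BERTScore recall matches each reference token to its most similar candidate token, using the cosine similarity of their contextual embeddings, and averages those maximum similarities.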
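Written out, with $x$ the reference tokens and $\hat{x}$ the candidate tokens, the recall defined by BERTScore is shown below. The notation is taken from the BERTScore definition rather than from this repository's code, and it assumes each token embedding $\mathbf{x}_i$, $\hat{\mathbf{x}}_j$ has been normalized to unit length so that the inner product equals the cosine similarity.

$$
R_{\mathrm{BERT}} = \frac{1}{|x|} \sum_{x_i \in x} \max_{\hat{x}_j \in \hat{x}} \mathbf{x}_i^{\top} \hat{\mathbf{x}}_j
$$

Precision is obtained by swapping the roles of $x$ and $\hat{x}$, and the F1 score is the harmonic mean of the two.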

Requirements:

- Python version >= 3.6

- PyTorch version >= 1.0.0

There are two ways to use the Semantic-based Retriever: as a Python module or through the command line interface (CLI).

Run `pip install .` in the source directory and you can use it as a Python module.

At a high level, we provide a Python function `cr_score.score` and a Python object `cr_score.CRScorer`.

The function provides all the supported features, while the scorer object caches the underlying model to facilitate multiple evaluations.
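The difference between the two interfaces can be sketched with a toy example. Everything below is hypothetical and only illustrates the caching pattern: `load_toy_model`, `toy_score`, and `ToyScorer` are stand-ins, not the actual `cr_score` API, and the "model" is a simple word-overlap function rather than CodeReviewer.

```python
from typing import Callable, List

# Counts how often the (pretend) expensive model load happens.
LOAD_CALLS = {"n": 0}


def load_toy_model(model_type: str) -> Callable[[str, str], float]:
    """Hypothetical stand-in for loading a large pretrained model."""
    LOAD_CALLS["n"] += 1

    def similarity(cand: str, ref: str) -> float:
        # Jaccard overlap of whitespace tokens, used only as a placeholder.
        c, r = set(cand.split()), set(ref.split())
        return len(c & r) / max(len(c | r), 1)

    return similarity


def toy_score(cands: List[str], refs: List[str], model_type: str = "toy") -> List[float]:
    """Function-style interface: the model is loaded on every call."""
    model = load_toy_model(model_type)
    return [model(c, r) for c, r in zip(cands, refs)]


class ToyScorer:
    """Object-style interface: the model is loaded once and reused."""

    def __init__(self, model_type: str = "toy") -> None:
        self._model = load_toy_model(model_type)

    def score(self, cands: List[str], refs: List[str]) -> List[float]:
        return [self._model(c, r) for c, r in zip(cands, refs)]
```

With this pattern, calling `toy_score` twice loads the model twice, whereas one `ToyScorer` instance loads it once no matter how many times `score` is called. This is the reason to prefer a scorer object when evaluating many batches.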

Check our demo to see how to use these two interfaces.

Please refer to `cr_score/score.py` for implementation details.
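As a rough orientation before reading the implementation, the greedy token matching used by BERTScore-style metrics can be sketched in plain Python. This is a simplified illustration, not the code in `cr_score/score.py`: the function names are hypothetical, and the token embeddings are toy vectors supplied by the caller rather than outputs of CodeReviewer.

```python
import math
from typing import Sequence, Tuple

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity of two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def greedy_match_scores(
    ref_vecs: Sequence[Vector], cand_vecs: Sequence[Vector]
) -> Tuple[float, float, float]:
    """Return (precision, recall, f1) from greedy token matching."""
    # sim[i][j] is the similarity of reference token i and candidate token j.
    sim = [[cosine(r, c) for c in cand_vecs] for r in ref_vecs]

    # Recall: each reference token takes its best-matching candidate token.
    recall = sum(max(row) for row in sim) / len(ref_vecs)

    # Precision: each candidate token takes its best-matching reference token.
    precision = sum(
        max(sim[i][j] for i in range(len(ref_vecs)))
        for j in range(len(cand_vecs))
    ) / len(cand_vecs)

    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1
```

For example, with reference embeddings `[[1, 0], [0, 1]]` and a single candidate embedding `[[1, 0]]`, the candidate token is fully matched (precision 1.0) but only one of the two reference tokens is covered (recall 0.5).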

We provide a command line interface (CLI) as well as a Python module. You can use the CLI as follows:

- To evaluate the semantic similarity between two text files:

  `cr-score -r example/refs.txt -c example/hyps.txt -m microsoft/codereviewer`

  See more options with `cr-score -h`.

- To visualize matching scores:

  `cr-score-show -m microsoft/codereviewer -r "Server exposed to file path traversal" -c "set up strict file path validation" -f out.png`

  The figure will be saved to `out.png`.

- Using inverse document frequency (idf) on the reference sentences to weigh word importance may correlate better with human judgment. However, when the set of reference sentences becomes too small, the idf score becomes inaccurate or invalid. It is therefore optional. To use idf, set `--idf` when using the CLI tool or `idf=True` when calling the `cr_score.score` function.

- When you are low on GPU memory, consider setting `batch_size` when calling the `cr_score.score` function.

- To use a particular model, set `-m MODEL_TYPE` when using the CLI tool or `model_type=MODEL_TYPE` when calling the `cr_score.score` function.

- We tune the layer to use based on the WMT16 metric evaluation dataset. You may use a different layer by setting `-l LAYER` or `num_layers=LAYER`. To tune the best layer for your custom model, please follow the instructions in the tune_layers folder.

- Limitation: Because CodeReviewer uses learned positional embeddings and is pre-trained on sentences with a maximum length of 512, the Semantic-based Retriever score is undefined for sentences longer than 510 tokens (512 after adding the [CLS] and [SEP] tokens). Longer sentences are truncated.
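To see why idf weighting degrades with few reference sentences, here is a minimal sketch of idf computed over tokenized references. It assumes plus-one smoothing of the kind used in BERTScore; `compute_idf` is a hypothetical helper for illustration, not the function used inside `cr_score`.

```python
import math
from collections import Counter
from typing import Dict, List


def compute_idf(references: List[List[str]]) -> Dict[str, float]:
    """Smoothed idf per token: log((M + 1) / (df + 1)) over M references."""
    num_docs = len(references)
    doc_freq: Counter = Counter()
    for tokens in references:
        # Count each token at most once per reference sentence.
        doc_freq.update(set(tokens))
    return {
        tok: math.log((num_docs + 1) / (df + 1))
        for tok, df in doc_freq.items()
    }


# Three references: the shared token "in" is down-weighted to 0,
# while tokens unique to one sentence keep a positive weight.
several = compute_idf([
    ["path", "traversal", "in", "server"],
    ["buffer", "overflow", "in", "parser"],
    ["sql", "injection", "in", "login"],
])

# One reference: every token appears in every document, so all
# weights collapse to 0 and carry no information.
single = compute_idf([["path", "traversal", "in", "server"]])
```

With a single reference sentence every token receives the same weight, so the weighting cannot distinguish rare from common words. This is the degenerate case the idf tip warns about.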

This repo wouldn't be possible without the awesome BertScore, CodeReviewer, fairseq, and transformers.