First I looked at datasets from Apollo, BDD100K and SUN, but none of them provided enough data for YOLO training.

Finally I downloaded this dataset, which contains about 260 photos of traffic cones, and combined it with photos taken with a Xiaomi phone. Since the annotations in this dataset are incomplete, I labeled the images manually with labelImg.

The dataset is now available here (updated), in both PASCAL VOC format and YOLOv3 format.
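Going from PASCAL VOC to YOLOv3 labels mostly means turning pixel corner coordinates into a normalized center/size box. The following is a minimal sketch of that conversion, not the code in `convert_yolov3.py`; the function name `voc_xml_to_yolo_lines` and the class name `"cone"` are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET


def voc_xml_to_yolo_lines(xml_text, class_names=("cone",)):
    """Convert one PASCAL VOC annotation (XML text) into YOLO label lines.

    Each line is "class_id x_center y_center width height", with all four
    box values normalized to [0, 1] by the image size.
    The class list is a hypothetical single-class setup.
    """
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.find("width").text)
    img_h = float(size.find("height").text)

    lines = []
    for obj in root.iter("object"):
        name = obj.find("name").text
        if name not in class_names:
            continue  # skip classes we do not train on
        bb = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (
            float(bb.find(tag).text) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        # Corner coordinates in pixels -> normalized center and size.
        x_center = (xmin + xmax) / 2.0 / img_w
        y_center = (ymin + ymax) / 2.0 / img_h
        box_w = (xmax - xmin) / img_w
        box_h = (ymax - ymin) / img_h
        class_id = class_names.index(name)
        lines.append(
            f"{class_id} {x_center:.6f} {y_center:.6f} {box_w:.6f} {box_h:.6f}"
        )
    return lines
```

In this layout, each image gets one text file holding the returned lines, one line per labeled cone.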

example of label

At first I wanted to detect cones with a simple graphics-based method: using the Hough Transform to find convex cone shapes in the image, then identifying them by color, shape and so on.

simple graphic method

However, given the complex background of the lane during real-time driving, this method turned out to be inaccurate. I then considered SVM+HOG, which is also frequently used in feature detection.

But SVM was too slow. The YOLO (You Only Look Once) network performs well in both speed and accuracy (reaching 70 fps), so I finally chose YOLOv3. (Before this I considered YOLOv2, but failed to compile it in my CUDA environment.)

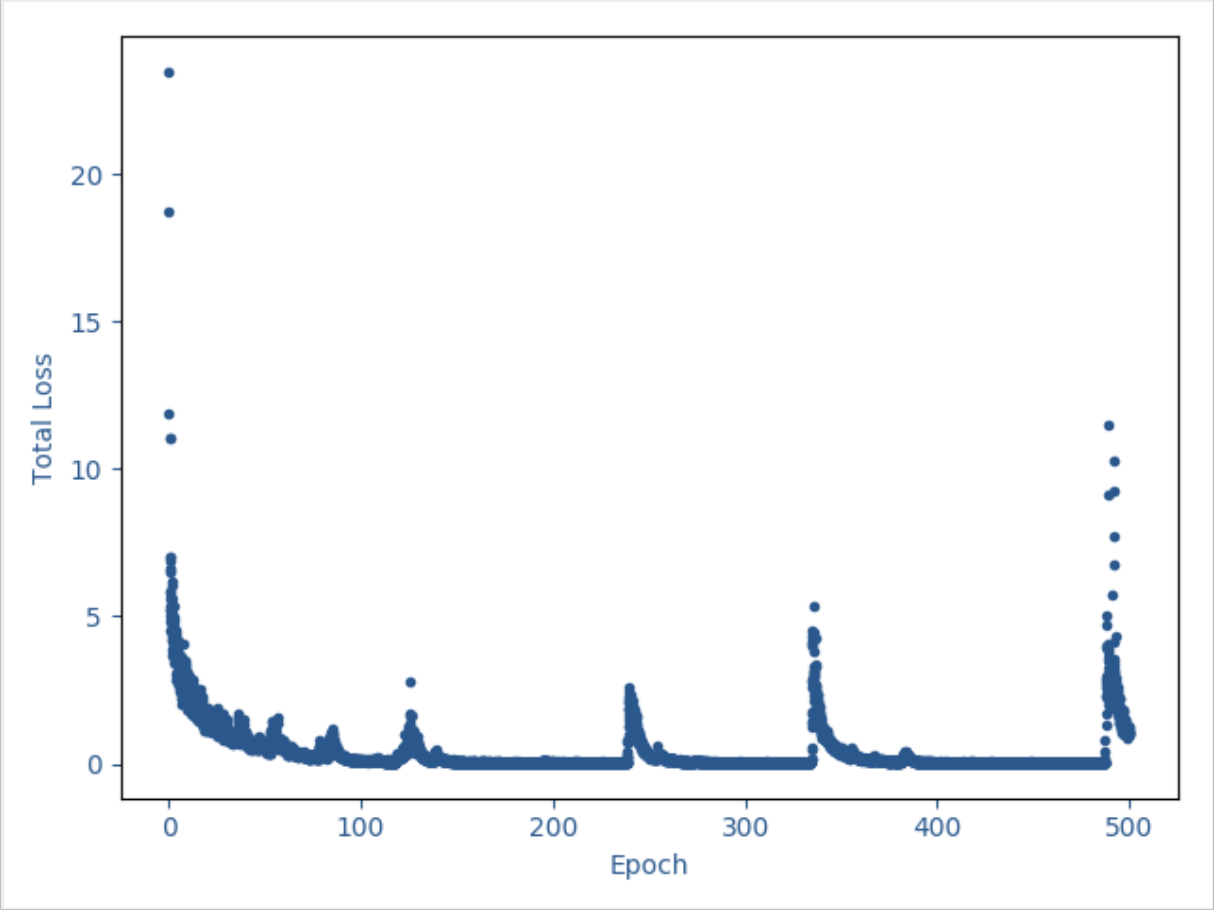

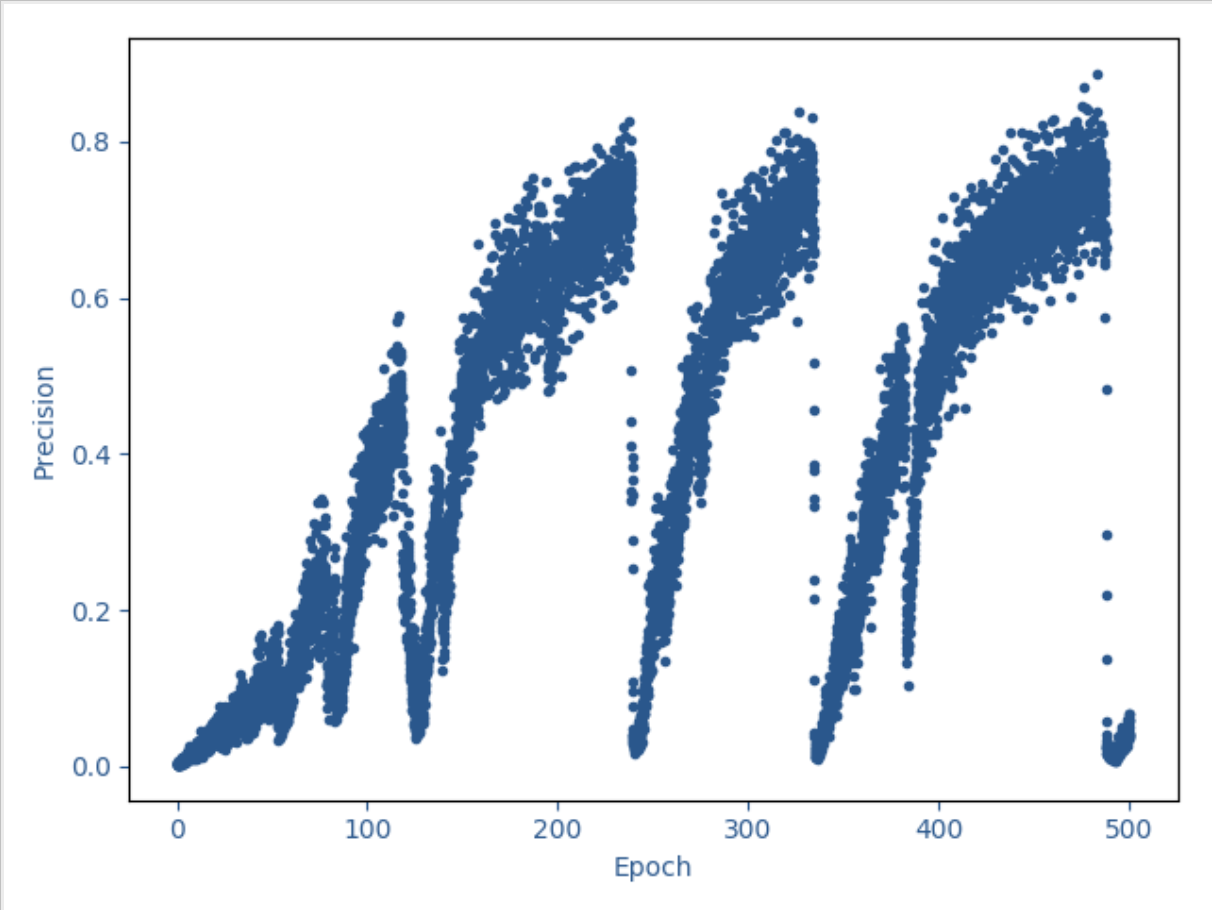

After 500 epochs of training, the total loss dropped to a fairly low level (min = 0.011), with precision up to 0.887. However, in the detection and validation tasks, nothing could be detected. I've opened an issue.

What's more, an exploding gradient problem occurred, as shown below:

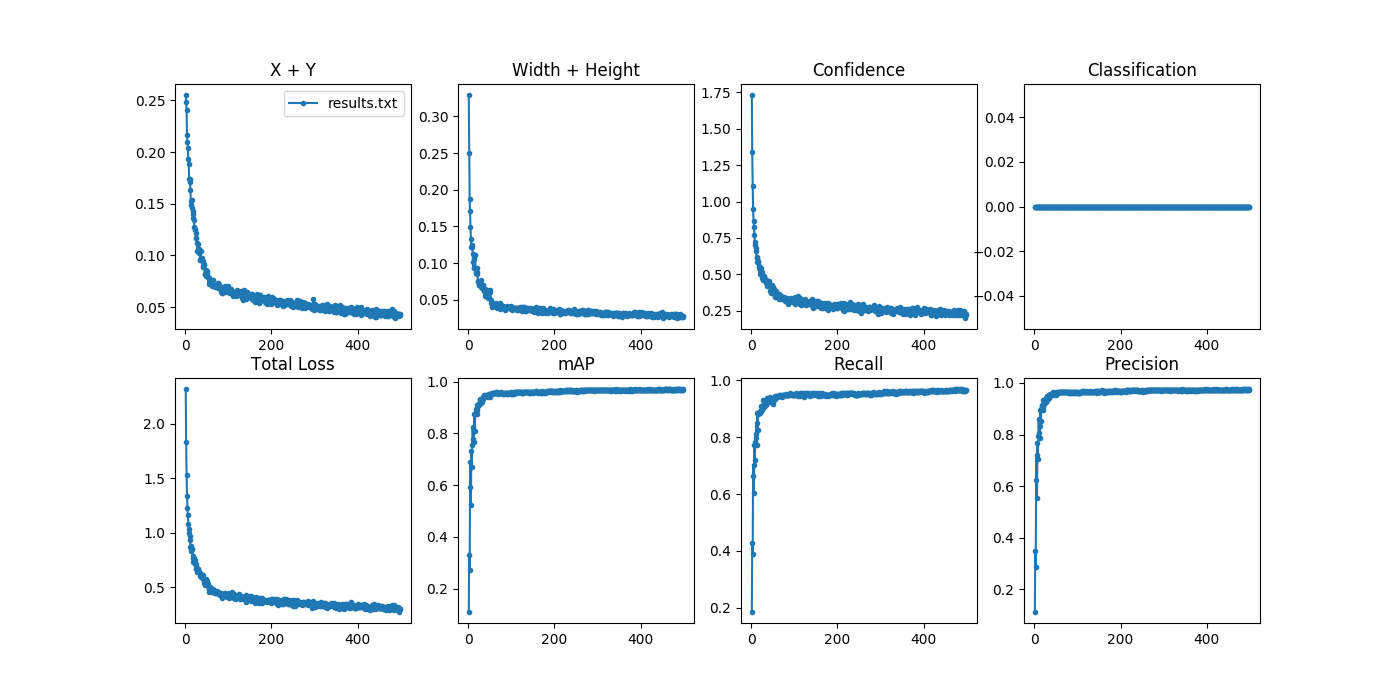

After fixing the 2 bugs mentioned in issue127, the results are now pretty good.

As shown above, after switching to another implementation and modifying the parameters, the exploding gradient problem has been solved. In this single-class network, the total loss can be as low as 0.03, with mAP reaching 98%, recall 98% and precision 99%.

As for speed, detection on single images (not a video stream) runs at about 60 fps on a 1080Ti. It hasn't been tested on the TX2 yet. To speed it up, consider tiny-yolov3 or reduce the input size.

In addition, the model only detects traffic cones, regardless of their color. So for each detected cone, we sample the lower half of the bounding box's vertical center line, calculate its average RGB value, and decide which color the cone is (red, green, blue, yellow, dontknow). This only works well for standing cones; a tilted cone or white reflective tape may affect accuracy. The mapping from the sampled RGB value to a color name also depends on the color and shape of the cones used in the actual competition. A counter-example:

- Test:
  - Download the weights file best.pt and put it in `weights/`.
  - Put the images you want to detect in `data/samples/`.
  - Run `detect.py`.
- Train:
  - Edit your own dirs in `convert_yolov3.py` and run it.
  - Run `train.py`.
- Improve color decision:
  - Edit `utils/utils.py/plot_one_box()`.
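As a starting point for improving the color decision, the sampling step described earlier can be sketched in plain Python. This is an illustration only, not the code in `plot_one_box()`: the function names `sample_cone_color` and `name_color`, the use of HSV hue ranges, and every threshold value are assumptions. The image is assumed to be indexable as `image[y][x]` and to return channels in RGB order (OpenCV images are BGR and would need their channels reversed first).

```python
import colorsys


def sample_cone_color(image, box):
    """Average the RGB values on the lower half of the box's vertical center line.

    image[y][x] must yield (r, g, b) in 0..255; box is (x1, y1, x2, y2) in pixels.
    Returns a tuple of three floats, or None if the box is too small to sample.
    """
    x1, y1, x2, y2 = box
    cx = (x1 + x2) // 2       # column of the vertical center line
    y_mid = (y1 + y2) // 2    # sampling starts at mid-height
    pixels = [image[y][cx] for y in range(y_mid, y2)]
    if not pixels:
        return None
    n = len(pixels)
    return tuple(sum(int(p[c]) for p in pixels) / n for c in range(3))


def name_color(rgb, min_saturation=0.35, min_value=0.25):
    """Map an average RGB value to a color name using hue ranges.

    All thresholds are hypothetical and need tuning on real cones.
    """
    if rgb is None:
        return "dontknow"
    r, g, b = (v / 255.0 for v in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    if s < min_saturation or v < min_value:
        return "dontknow"     # too gray or too dark to decide
    hue = h * 360.0
    if hue < 30 or hue >= 340:
        return "red"
    if 40 <= hue < 70:
        return "yellow"
    if 90 <= hue < 160:
        return "green"
    if 200 <= hue < 260:
        return "blue"
    return "dontknow"
```

Deciding on hue rather than raw RGB is one possible way to be less sensitive to brightness; white reflective tape lowers the saturation of the average, which is why the low-saturation case falls back to `dontknow`.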